RHCSA Series: Reviewing Essential Commands & System Documentation – Part 1

RHCSA (Red Hat Certified System Administrator) is a certification exam from Red Hat, a company that provides an open source operating system and software to the enterprise community. It also provides support, training, and consulting services for organizations.

The RHCSA certification is obtained from Red Hat Inc. after passing the exam (code EX200). The RHCSA exam replaces the RHCT (Red Hat Certified Technician) exam, a change that became necessary when Red Hat Enterprise Linux was upgraded. The main difference between RHCT and RHCSA is that the RHCT exam was based on RHEL 5, whereas the RHCSA certification is based on RHEL 6 and 7. The courseware of the two certifications also differs to a certain extent.

A Red Hat Certified System Administrator (RHCSA) is able to perform the following core system administration tasks needed in Red Hat Enterprise Linux environments:

- Understand and use necessary tools for handling files, directories, command-line environments, and system-wide / package documentation.

- Operate running systems, even in different run levels, identify and control processes, start and stop virtual machines.

- Set up local storage using partitions and logical volumes.

- Create and configure local and network file systems and their attributes (permissions, encryption, and ACLs).

- Setup, configure, and control systems, including installing, updating and removing software.

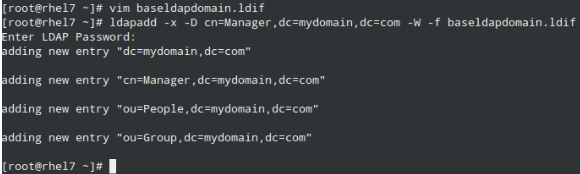

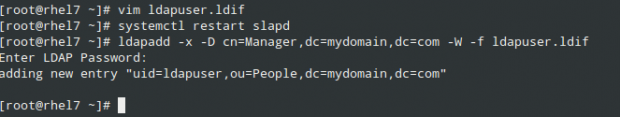

- Manage system users and groups, along with use of a centralized LDAP directory for authentication.

- Ensure system security, including basic firewall and SELinux configuration.

To view fees and register for an exam in your country, check the RHCSA Certification page.

In this 15-article RHCSA series, titled Preparation for the RHCSA (Red Hat Certified System Administrator) exam, we will cover the following topics on the latest release of Red Hat Enterprise Linux 7.

In this Part 1 of the RHCSA series, we will explain how to enter and execute commands with the correct syntax in a shell prompt or terminal, and how to find, inspect, and use system documentation.

Prerequisites:

At least a slight degree of familiarity with basic Linux commands such as:

- cd command (change directory)

- ls command (list directory)

- cp command (copy files)

- mv command (move or rename files)

- touch command (create empty files or update the timestamp of existing ones)

- rm command (delete files)

- mkdir command (make directory)

The correct usage of some of them is exemplified in this article anyway, and you can find further information about each of them using the methods suggested here.

Though not strictly required to start, as we will be discussing general commands and methods for information search in a Linux system, you should try to install RHEL 7 as explained in the following article. It will make things easier down the road.

Interacting with the Linux Shell

If we log into a Linux box using a text-mode login screen, chances are we will be dropped directly into our default shell. On the other hand, if we login using a graphical user interface (GUI), we will have to open a shell manually by starting a terminal. Either way, we will be presented with the user prompt and we can start typing and executing commands (a command is executed by pressing the Enter key after we have typed it).

Commands are composed of two parts:

- the name of the command itself, and

- arguments

Certain arguments, called options (usually preceded by a hyphen), alter the behavior of the command in a particular way while other arguments specify the objects upon which the command operates.

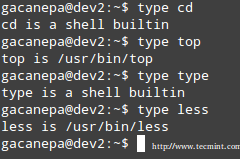

The type command can help us identify whether a certain command is built into the shell or provided by a separate package. This distinction matters because it determines where we will find more information about the command: for shell built-ins we need to look in the shell’s man page, whereas for other binaries we can refer to their own man pages.

In the examples above, cd and type are shell built-ins, while top and less are binaries external to the shell itself (in this case, the location of the command executable is returned by type).

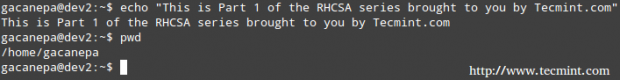
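As a minimal sketch of the distinction described above, the following lines capture what type reports for one built-in and one external command. The variable names are only illustrative, and the exact wording and path in the output depend on the shell and the system.

```shell
# Ask the shell how it would interpret each command name
cd_kind=$(type cd)   # cd is expected to be reported as a shell built-in
ls_kind=$(type ls)   # ls is expected to resolve to an executable on disk

echo "$cd_kind"
echo "$ls_kind"
```

For a built-in, the details are in the shell's man page (man bash); for an external binary, the command's own man page applies (man ls).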

Other well-known shell built-ins include:

- echo command: Displays strings of text.

- pwd command: Prints the current working directory.

exec command

Runs an external program that we specify. Note that in most cases, this is better accomplished by just typing the name of the program we want to run, but the exec command has one special feature: rather than create a new process that runs alongside the shell, the new process replaces the shell, as can be verified by subsequently running:

# ps -ef | grep [original PID of the shell process]

When the new process terminates, the shell terminates with it. Run exec top and then hit the q key to quit top. You will notice that the shell session ends when you do, as shown in the following screencast:

export command

Exports variables to the environment of subsequently executed commands.
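A small sketch of what exporting changes: a plain shell variable is not visible to a child process until it has been exported. The variable name RHCSA_DEMO is made up for this illustration.

```shell
unset RHCSA_DEMO                 # start from a clean state
RHCSA_DEMO="visible"             # plain shell variable, not yet exported

# A child shell cannot see the variable yet
before=$(sh -c 'echo "${RHCSA_DEMO:-unset}"')

export RHCSA_DEMO                # place it in the environment of child processes
after=$(sh -c 'echo "${RHCSA_DEMO:-unset}"')

echo "before export: $before"
echo "after export:  $after"
```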

history Command

Displays the command history list with line numbers. A command in the history list can be repeated by typing the command number preceded by an exclamation sign. If we need to edit a command in history list before executing it, we can press Ctrl + r and start typing the first letters associated with the command. When we see the command completed automatically, we can edit it as per our current need:

This list of commands is kept in our home directory in a file called .bash_history. The history facility is a useful resource for reducing the amount of typing, especially when combined with command line editing. By default, bash stores the last 500 commands you have entered, but this limit can be extended by using the HISTSIZE environment variable:
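One way this could look at the prompt is sketched below; the value 1000 is just an example and matches the one used later in this article.

```shell
# Raise the number of commands kept in the history list for this session
export HISTSIZE=1000

# Confirm the new value
echo "$HISTSIZE"
```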

However, a value set at the command line is lost when the shell session ends. In order to preserve the change in the HISTSIZE variable, we need to edit the .bashrc file by hand:

# for setting history length see HISTSIZE and HISTFILESIZE in bash(1)

HISTSIZE=1000

Important: Keep in mind that these changes will not take effect until we restart our shell session.

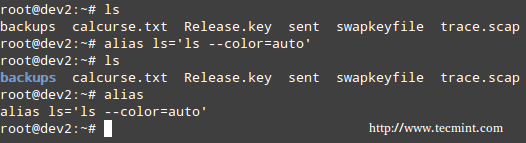

alias command

With no arguments or with the -p option prints the list of aliases in the form alias name=value on standard output. When arguments are provided, an alias is defined for each name whose value is given.

With alias, we can make up our own commands or modify existing ones by including desired options. For example, suppose we want to alias ls to ls --color=auto so that the output will display regular files, directories, symlinks, and so on, in different colors:

# alias ls='ls --color=auto'

Note that you can assign any name to your “new command” and enclose as many commands as desired between single quotes, but in that case you need to separate them by semicolons, as follows:

# alias myNewCommand='cd /usr/bin; ls; cd; clear'

exit command

The exit and logout commands both terminate the shell. The exit command terminates any shell, but the logout command terminates only login shells, that is, those that are launched automatically when you initiate a text-mode login.

If we are ever in doubt as to what a program does, we can refer to its man page, which can be invoked using the man command. In addition, there are also man pages for important files (inittab, fstab, hosts, to name a few), library functions, shells, devices, and other features.

Examples:

- man uname (print system information, such as kernel name, processor, operating system type, architecture, and so on).

- man inittab (init daemon configuration).

Another important source of information is provided by the info command, which is used to read info documents. These documents often provide more information than the man page. It is invoked by using the info keyword followed by a command name, such as:

# info ls

# info cut

In addition, the /usr/share/doc directory contains several subdirectories where further documentation can be found. They either contain plain-text files or other friendly formats.

Make sure you make it a habit to use these three methods to look up information for commands. Pay special and careful attention to the syntax of each of them, which is explained in detail in the documentation.

Converting Tabs into Spaces with expand Command

Sometimes text files contain tabs but programs that need to process the files don’t cope well with tabs. Or maybe we just want to convert tabs into spaces. That’s where the expand tool (provided by the GNU coreutils package) comes in handy.

For example, given the file NumbersList.txt, let’s run expand against it, changing tabs to one space, and display on standard output.

# expand --tabs=1 NumbersList.txt
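To try this without an existing file, the sketch below builds a small tab-separated NumbersList.txt in a temporary directory (its contents are invented for the example) and expands each tab to a single space. The short option -t 1 is used here as the equivalent of --tabs=1.

```shell
work=$(mktemp -d)

# Two lines, each with a tab between the number and its name
printf '1\tone\n2\ttwo\n' > "$work/NumbersList.txt"

# Convert every tab into one space and keep the result for inspection
expanded=$(expand -t 1 "$work/NumbersList.txt")
echo "$expanded"
```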

The unexpand command performs the reverse operation (converts spaces into tabs).

Display the first lines of a file with head and the last lines with tail

By default, the head command followed by a filename will display the first 10 lines of that file. This behavior can be changed using the -n option and specifying a certain number of lines.

# head -n3 /etc/passwd

# tail -n3 /etc/passwd

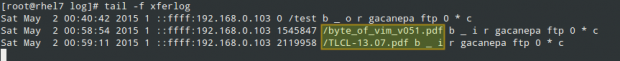

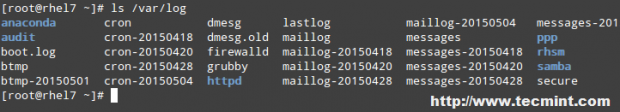

One of the most interesting features of tail is the possibility of displaying data (last lines) as the input file grows (tail -f my.log, where my.log is the file under observation). This is particularly useful when monitoring a log to which data is being continually added.
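The behavior of -n is easy to check on a file whose contents are known. The following sketch uses a throwaway file holding the numbers 1 to 20 (an assumption made only for this example) and joins the selected lines with commas so they fit on one line.

```shell
work=$(mktemp -d)
seq 1 20 > "$work/numbers.txt"     # one number per line

# First three and last three lines, joined with commas for display
first=$(head -n 3 "$work/numbers.txt" | paste -sd, -)
last=$(tail -n 3 "$work/numbers.txt" | paste -sd, -)

echo "head: $first"
echo "tail: $last"
```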

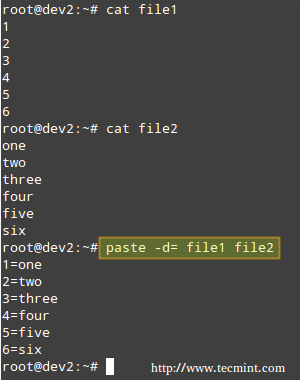

Merging Lines with paste

The paste command merges files line by line, separating the lines from each file with tabs (by default), or another delimiter that can be specified (in the following example the fields in the output are separated by an equal sign).

# paste -d= file1 file2
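A self-contained sketch of the same command follows. The contents of file1 and file2 are invented here, since the originals are only shown in a screenshot.

```shell
work=$(mktemp -d)
printf 'name\nshell\n' > "$work/file1"
printf 'tecmint\n/bin/bash\n' > "$work/file2"

# Line N of file1 is joined to line N of file2, with = as the delimiter
merged=$(paste -d= "$work/file1" "$work/file2")
echo "$merged"
```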

Breaking a file into pieces using split command

The split command is used to split a file into two (or more) separate files, which are named according to a prefix of our choosing. The splitting can be defined by size, chunks, or number of lines, and the resulting files can have numeric or alphabetic suffixes. In the following example, we will split bash.pdf into files of size 50 KB (-b 50KB), using numeric suffixes (-d):

# split -b 50KB -d bash.pdf bash_

You can merge the files to recreate the original file with the following command:

# cat bash_00 bash_01 bash_02 bash_03 bash_04 bash_05 > bash.pdf

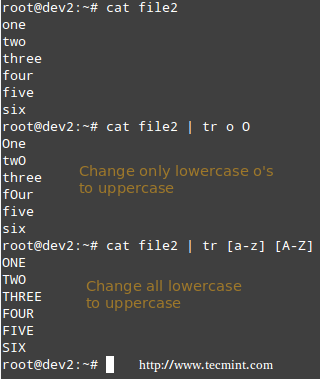
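The round trip can be verified on any file. The sketch below assumes GNU split (for -d and the KB suffix) and uses a 120000-byte file of random data in place of bash.pdf; a shell glob stands in for listing every piece by hand, which works because the numeric suffixes sort in the right order.

```shell
work=$(mktemp -d)
head -c 120000 /dev/urandom > "$work/sample.bin"

# Split into 50 KB (50000-byte) pieces named part_00, part_01, ...
(cd "$work" && split -b 50KB -d sample.bin part_)
pieces=$(ls "$work"/part_* | wc -l)

# Reassemble the pieces and compare with the original
cat "$work"/part_* > "$work/rebuilt.bin"
cmp -s "$work/sample.bin" "$work/rebuilt.bin" && echo "files are identical"
```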

Translating characters with tr command

The tr command can be used to translate (change) characters on a one-by-one basis or using character ranges. In the following example we will use the same file2 as previously, and we will change:

- lowercase o’s to uppercase,

- and all lowercase to uppercase

# cat file2 | tr o O

# cat file2 | tr 'a-z' 'A-Z'
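The two translations can be tried on a single string, as in this sketch (the sample text is arbitrary). Quoting the ranges keeps the shell from treating the brackets or letters as a filename pattern before tr sees them.

```shell
sample='root and games'

# Only lowercase o becomes uppercase
only_o=$(printf '%s\n' "$sample" | tr o O)

# Every lowercase letter becomes uppercase
all_upper=$(printf '%s\n' "$sample" | tr 'a-z' 'A-Z')

echo "$only_o"
echo "$all_upper"
```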

Reporting or deleting duplicate lines with uniq and sort command

The uniq command allows us to report or remove duplicate lines in a file, writing to stdout by default. We must note that uniq does not detect repeated lines unless they are adjacent. Thus, uniq is commonly used along with a preceding sort (which is used to sort lines of text files).

By default, sort compares entire lines. To sort on a particular field (fields are separated by blanks by default), we need to use the -k option. Please note how the output returned by sort and uniq changes as we change the key field in the following example:

# cat file3

# sort file3 | uniq

# sort -k2 file3 | uniq

# sort -k3 file3 | uniq

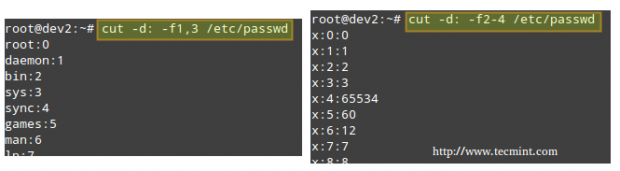
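The adjacency requirement is the part that most often surprises people, so here is a small sketch with made-up data: the duplicate line survives uniq on its own, but not once the input has been sorted.

```shell
work=$(mktemp -d)
printf 'pear 3\napple 1\npear 3\nbanana 2\n' > "$work/file3"

# The two "pear 3" lines are not adjacent, so uniq alone keeps both
uniq_only=$(uniq "$work/file3" | wc -l)

# Sorting first makes the duplicates adjacent
sorted_unique=$(sort "$work/file3" | uniq | wc -l)

# Sort on the second field instead of the whole line
by_second=$(sort -k2 "$work/file3" | uniq | head -n 1)

echo "uniq alone: $uniq_only lines, sort | uniq: $sorted_unique lines"
echo "first line when sorted by field 2: $by_second"
```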

Extracting text with cut command

The cut command extracts portions of input lines (from stdin or files) and displays the result on standard output, based on number of bytes (-b), characters (-c), or fields (-f).

When using cut based on fields, the default field separator is a tab, but a different separator can be specified by using the -d option.

# cut -d: -f1,3 /etc/passwd # Extract specific fields: 1 and 3 in this case

# cut -d: -f2-4 /etc/passwd # Extract range of fields: 2 through 4 in this example

Note that the output of the two examples above was truncated for brevity.

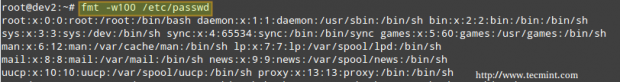
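To see exactly which fields are selected, the same options can be applied to one sample record. The record below is a typical root entry, used here only as illustrative input.

```shell
record='root:x:0:0:root:/root:/bin/bash'

# Fields 1 and 3: user name and UID
fields_1_3=$(printf '%s\n' "$record" | cut -d: -f1,3)

# Fields 2 through 4: password placeholder, UID, and GID
fields_2_4=$(printf '%s\n' "$record" | cut -d: -f2-4)

echo "$fields_1_3"
echo "$fields_2_4"
```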

Reformatting files with fmt command

fmt is used to “clean up” files with a great amount of content or lines, or with varying degrees of indentation. The new paragraph formatting defaults to no more than 75 characters wide. You can change this with the -w (width) option, which sets the line length to the specified number of characters.

For example, let’s see what happens when we use fmt to display the /etc/passwd file setting the width of each line to 100 characters. Once again, output has been truncated for brevity.

# fmt -w100 /etc/passwd

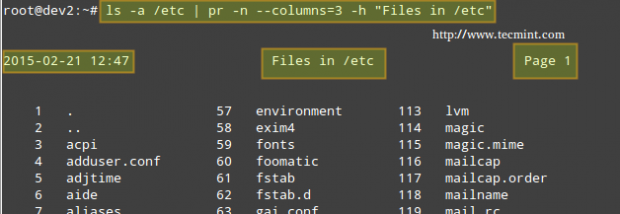

Formatting content for printing with pr command

pr paginates and displays in columns one or more files for printing. In other words, pr formats a file to make it look better when printed. For example, the following command:

# ls -a /etc | pr -n --columns=3 -h "Files in /etc"

shows a listing of all the files found in /etc in a printer-friendly format (3 columns) with a custom header (indicated by the -h option), and numbered lines (-n).

Summary

In this article we have discussed how to enter and execute commands with the correct syntax in a shell prompt or terminal, and explained how to find, inspect, and use system documentation. As simple as it seems, it’s a large first step on your way to becoming an RHCSA.

If you would like to add other commands that you use on a periodic basis and that have proven useful to fulfill your daily responsibilities, feel free to share them with the world by using the comment form below. Questions are also welcome. We look forward to hearing from you!

RHCSA Series: How to Perform File and Directory Management – Part 2

In this article, RHCSA Part 2: File and directory management, we will review some essential skills that are required in the day-to-day tasks of a system administrator.

Create, Delete, Copy, and Move Files and Directories

File and directory management is a critical competence that every system administrator should possess. This includes the ability to create / delete text files from scratch (the core of each program’s configuration) and directories (where you will organize files and other directories), and to find out the type of existing files.

The touch command can be used not only to create empty files, but also to update the access and modification times of existing files.

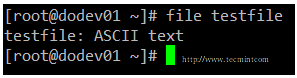

You can use file [filename] to determine a file’s type (this will come in handy before launching your preferred text editor to edit it), and rm [filename] to delete it.

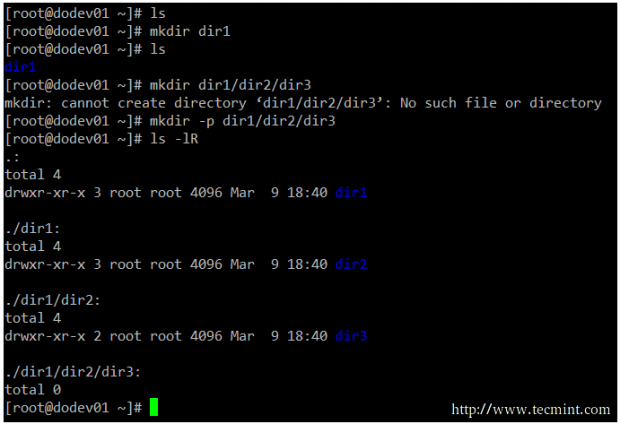

As for directories, you can create directories inside existing paths with mkdir [directory] or create a full path with mkdir -p [/full/path/to/directory].

When it comes to removing directories, you need to make sure that they’re empty before issuing the rmdir [directory] command, or use the more powerful (handle with care!) rm -rf [directory]. This last option forcibly and recursively removes [directory] and all its contents, so use it at your own risk.
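The difference between rmdir and rm -rf can be explored safely inside a temporary directory. In this sketch all paths are hypothetical and live under a directory created by mktemp, so nothing outside it is touched.

```shell
work=$(mktemp -d)

# Create a full path in one step, then put a file at the bottom of it
mkdir -p "$work/projects/2024/reports"
touch "$work/projects/2024/reports/summary.txt"

# rmdir refuses to remove a directory that still has contents
if rmdir "$work/projects/2024/reports" 2>/dev/null; then
    removed=yes
else
    removed=no
fi
echo "rmdir removed the non-empty directory: $removed"

# rm -rf removes the whole tree, contents included
rm -rf "$work/projects"
```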

Input and Output Redirection and Pipelining

The command line environment provides two very useful features that allow us to redirect the input and output of commands from and to files, and to send the output of one command to another. These are called redirection and pipelining, respectively.

To understand those two important concepts, we must first understand the three most important types of I/O (Input and Output) streams (or sequences) of characters, which are in fact special files, in the *nix sense of the word.

- Standard input (aka stdin) is by default attached to the keyboard. In other words, the keyboard is the standard input device to enter commands to the command line.

- Standard output (aka stdout) is by default attached to the screen, the device that “receives” the output of commands and displays it.

- Standard error (aka stderr) is where the error and status messages of a command are sent by default, which is also the screen.

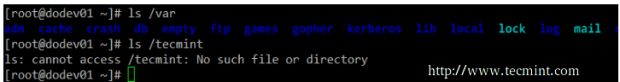

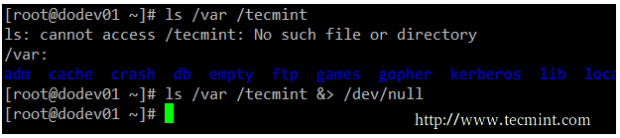

In the following example, the output of ls /var is sent to stdout (the screen), as well as the result of ls /tecmint. But in the latter case, it is stderr that is shown.

To more easily identify these special files, they are each assigned a file descriptor, an abstract representation that is used to access them. The essential thing to understand is that these files, just like others, can be redirected. What this means is that you can capture the output from a file or script and send it as input to another file, command, or script. This will allow you to store on disk, for example, the output of commands for later processing or analysis.

To redirect stdin (fd 0), stdout (fd 1), or stderr (fd 2), the following operators are available.

| Redirection Operator | Effect |
| --- | --- |
| > | Redirects standard output to a file. If the destination file exists, it will be overwritten. |
| >> | Appends standard output to a file. |
| 2> | Redirects standard error to a file. If the destination file exists, it will be overwritten. |
| 2>> | Appends standard error to the existing file. |
| &> | Redirects both standard output and standard error to a file; if the specified file exists, it will be overwritten. |
| < | Uses the specified file as standard input. |
| <> | The specified file is used for both standard input and standard output. |

As opposed to redirection, pipelining is performed by adding a vertical bar (|) after a command and before another one.

Remember:

- Redirection is used to send the output of a command to a file, or to send a file as input to a command.

- Pipelining is used to send the output of a command to another command as input.
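The operators summarized above can be exercised in a scratch directory, as in the following sketch. File names are invented for the example. The portable form > file 2>&1 is used instead of &>, which is a bash shorthand for the same thing.

```shell
work=$(mktemp -d)

echo "first"  >  "$work/out.txt"     # > creates or overwrites the file
echo "second" >> "$work/out.txt"     # >> appends to it
lines=$(wc -l < "$work/out.txt")     # < feeds the file to wc as stdin

# ls fails on a missing file; 2> captures only the error message
ls "$work/no-such-file" 2> "$work/err.txt" || true

# stdout and stderr together in one file
ls "$work" "$work/no-such-file" > "$work/both.txt" 2>&1 || true

# A pipeline: the output of cat becomes the input of grep
matches=$(cat "$work/out.txt" | grep -c second)

echo "out.txt has $lines lines, $matches of them match 'second'"
```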

Examples Of Redirection and Pipelining

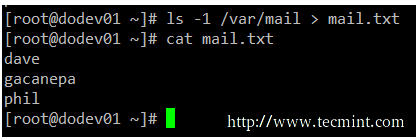

Example 1: Redirecting the output of a command to a file

There will be times when you will need to iterate over a list of files. To do that, you can first save that list to a file and then read that file line by line. While it is true that you can iterate over the output of ls directly, this example serves to illustrate redirection.

# ls -1 /var/mail > mail.txt

Example 2: Redirecting both stdout and stderr to /dev/null

In case we want to prevent both stdout and stderr from being displayed on the screen, we can redirect both file descriptors to /dev/null. Note how the output changes when the redirection is implemented for the same command.

# ls /var /tecmint

# ls /var/ /tecmint &> /dev/null

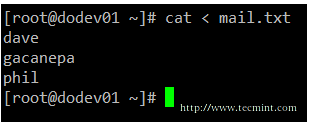

Example 3: Using a file as input to a command

The classic syntax of the cat command is as follows:

# cat [file(s)]

You can also send a file as input, using the correct redirection operator.

# cat < mail.txt

Example 4: Sending the output of a command as input to another

If you have a large directory or process listing and want to be able to locate a certain file or process at a glance, you will want to pipeline the listing to grep.

Note that we use two pipelines in the following example. The first one looks for the required keyword, while the second one will eliminate the actual grep command from the results. This example lists all the processes associated with the apache user.

# ps -ef | grep apache | grep -v grep

Archiving, Compressing, Unpacking, and Uncompressing Files

If you need to transport, backup, or send via email a group of files, you will use an archiving (or grouping) tool such as tar, typically used with a compression utility like gzip, bzip2, or xz.

Your choice of a compression tool will likely be determined by the compression speed and ratio of each one. Of these three compression tools, gzip is the oldest and provides the least compression, bzip2 provides improved compression, and xz is the newest and provides the best compression. Typically, files compressed with these utilities have .gz, .bz2, or .xz extensions, respectively.

| Command | Abbreviation | Description |
| --- | --- | --- |
| --create | c | Creates a tar archive |
| --concatenate | A | Appends tar files to an archive |
| --append | r | Appends non-tar files to an archive |
| --update | u | Appends files that are newer than those in an archive |
| --diff or --compare | d | Compares an archive to files on disk |
| --list | t | Lists the contents of a tarball |
| --extract or --get | x | Extracts files from an archive |

| Operation modifier | Abbreviation | Description |
| --- | --- | --- |
| --directory dir | C | Changes to directory dir before performing operations |
| --same-permissions and --same-owner | p (for --same-permissions only) | Preserves permissions and ownership information, respectively. |
| --verbose | v | Lists all files as they are read or extracted; if combined with --list, it also displays file sizes, ownership, and timestamps |
| --exclude file | (none) | Excludes file from the archive. In this case, file can be an actual file or a pattern. |
| --gzip or --gunzip | z | Compresses an archive through gzip |
| --bzip2 | j | Compresses an archive through bzip2 |
| --xz | J | Compresses an archive through xz |

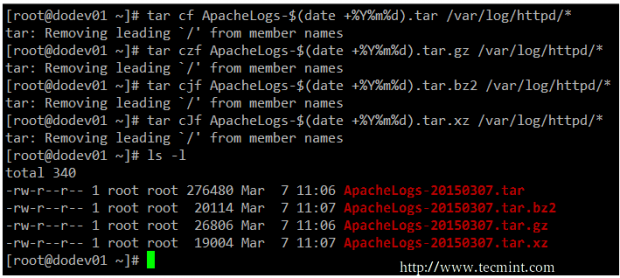

Example 5: Creating a tarball and then compressing it using the three compression utilities

You may want to compare the effectiveness of each tool before deciding to use one or another. Note that when compressing small files, or only a few files, the results may not show much difference, but they may give you a glimpse of what each tool has to offer.

# tar cf ApacheLogs-$(date +%Y%m%d).tar /var/log/httpd/* # Create an ordinary tarball

# tar czf ApacheLogs-$(date +%Y%m%d).tar.gz /var/log/httpd/* # Create a tarball and compress with gzip

# tar cjf ApacheLogs-$(date +%Y%m%d).tar.bz2 /var/log/httpd/* # Create a tarball and compress with bzip2

# tar cJf ApacheLogs-$(date +%Y%m%d).tar.xz /var/log/httpd/* # Create a tarball and compress with xz
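For readers without an Apache log directory at hand, the sketch below runs the same create, list, and extract cycle on a couple of made-up log files in a temporary directory. The file names and their contents are assumptions for illustration only, and gzip stands in for any of the three compressors.

```shell
work=$(mktemp -d)
mkdir "$work/logs"
echo "GET /index.html" > "$work/logs/access.log"
echo "File does not exist" > "$work/logs/error.log"

# Create a gzip-compressed tarball; -C makes the stored paths relative to $work
archive="$work/DemoLogs-$(date +%Y%m%d).tar.gz"
tar czf "$archive" -C "$work" logs

# List the archive contents without extracting
listing=$(tar tzf "$archive")
echo "$listing"

# Extract into a separate directory
mkdir "$work/restore"
tar xzf "$archive" -C "$work/restore"
```

Replacing z with j or J in the options switches the compressor to bzip2 or xz, as listed in the table above.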

Example 6: Preserving original permissions and ownership while archiving

If you are creating backups from users’ home directories, you will want to store the individual files with the original permissions and ownership instead of changing them to that of the user account or daemon performing the backup. The following example preserves these attributes while taking the backup of the contents in the /var/log/httpd directory:

# tar cJf ApacheLogs-$(date +%Y%m%d).tar.xz /var/log/httpd/* --same-permissions --same-owner

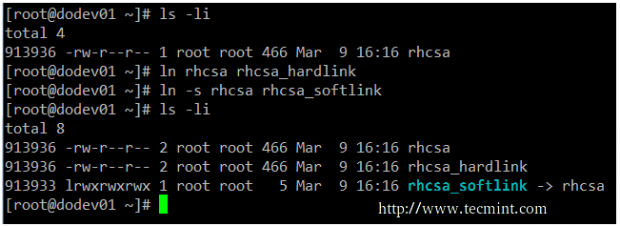

Create Hard and Soft Links

In Linux, there are two types of links to files: hard links and soft (aka symbolic) links. Since a hard link represents another name for an existing file and is identified by the same inode, it then points to the actual data, as opposed to symbolic links, which point to filenames instead.

In addition, hard links do not occupy space on disk, while symbolic links do take a small amount of space to store the text of the link itself. The downside of hard links is that they can only be used to reference files within the filesystem where they are located because inodes are unique inside a filesystem. Symbolic links save the day, in that they point to another file or directory by name rather than by inode, and therefore can cross filesystem boundaries.

The basic syntax to create links is similar in both cases:

# ln TARGET LINK_NAME # Hard link named LINK_NAME to file named TARGET

# ln -s TARGET LINK_NAME # Soft link named LINK_NAME to file named TARGET

Example 7: Creating hard and soft links

There is no better way to visualize the relation between a file and a hard or symbolic link that points to it than to create those links. In the following screenshot you will see that the file and the hard link that points to it share the same inode and both are identified by the same disk usage of 466 bytes.

On the other hand, creating a soft link results in an extra disk usage of 5 bytes. Not that you’re going to run out of storage capacity, but this example is enough to illustrate the difference between a hard link and a soft link.
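The same relationship can be reproduced with a throwaway file, as sketched here. File names are hypothetical; the inode numbers are read from the first column of ls -i.

```shell
work=$(mktemp -d)
echo "some data" > "$work/original.txt"

ln "$work/original.txt" "$work/hard.txt"   # hard link: a second name for the same inode
ln -s original.txt "$work/soft.txt"        # soft link: stores the target's name

inode_orig=$(ls -i "$work/original.txt" | awk '{print $1}')
inode_hard=$(ls -i "$work/hard.txt" | awk '{print $1}')
target=$(readlink "$work/soft.txt")        # the text stored inside the symlink

echo "original inode: $inode_orig, hard link inode: $inode_hard"
echo "soft link points to: $target"
```

The symbolic link is created with a relative target, which is resolved relative to the directory that contains the link.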

A typical usage of symbolic links is to reference a versioned file in a Linux system. Suppose there are several programs that need access to file fooX.Y, which is subject to frequent version updates (think of a library, for example). Instead of updating every single reference to fooX.Y every time there’s a version update, it is wiser, safer, and faster, to have programs look to a symbolic link named just foo, which in turn points to the actual fooX.Y.

Thus, when X and Y change, you only need to edit the symbolic link foo with a new destination name instead of tracking every usage of the destination file and updating it.
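A sketch of that versioning pattern, using hypothetical files foo1.0 and foo1.1 in a temporary directory: the link named foo is repointed in one step with ln -sfn, and anything that reads foo picks up the new version.

```shell
work=$(mktemp -d)
echo "version 1.0" > "$work/foo1.0"
echo "version 1.1" > "$work/foo1.1"

# Programs refer to "foo", which initially points at version 1.0
ln -s foo1.0 "$work/foo"
before=$(cat "$work/foo")

# After an update, replace the existing link so it points at version 1.1
ln -sfn foo1.1 "$work/foo"
after=$(cat "$work/foo")

echo "before: $before"
echo "after:  $after"
```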

Summary

In this article we have reviewed some essential file and directory management skills that must be a part of every system administrator’s tool-set. Make sure to review other parts of this series as well in order to integrate these topics with the content covered in this tutorial.

Feel free to let us know if you have any questions or comments. We are always more than glad to hear from our readers.

RHCSA Series: How to Manage Users and Groups in RHEL 7 – Part 3

Managing a RHEL 7 server, as is the case with any other Linux server, requires that you know how to add, edit, suspend, or delete user accounts, and grant users the necessary permissions to files, directories, and other system resources to perform their assigned tasks.

Managing User Accounts

To add a new user account to a RHEL 7 server, you can run either of the following two commands as root:

# adduser [new_account]

# useradd [new_account]

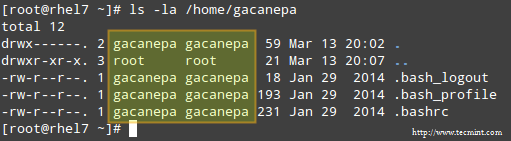

When a new user account is added, by default the following operations are performed.

- His/her home directory is created (/home/username unless specified otherwise).

- The hidden files .bash_logout, .bash_profile and .bashrc are copied inside the user’s home directory, and will be used to provide environment variables for his/her user session. You can explore each of them for further details.

- A mail spool directory is created for the added user account.

- A group is created with the same name as the new user account.

The full account summary is stored in the /etc/passwd file. This file holds a record per system user account and has the following format (fields are separated by a colon):

[username]:[x]:[UID]:[GID]:[Comment]:[Home directory]:[Default shell]

- The fields [username] and [Comment] are self explanatory.

- The second field ‘x’ indicates that the account is secured by a shadowed password (in /etc/shadow), which is used to log on as [username].

- The fields [UID] and [GID] are integers that show the User IDentification and the primary Group IDentification to which [username] belongs, respectively.

Finally,

- The [Home directory] shows the absolute location of [username]’s home directory, and

- [Default shell] is the shell that is made available to this user when he/she logs into the system.
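Because the record is colon-delimited, the shell itself can split it into the fields just described. The sketch below works on a sample record rather than the real /etc/passwd; the account shown is hypothetical.

```shell
# A sample record in /etc/passwd format (not read from the real file)
record='tecmint:x:1001:1001:Tecmint User:/home/tecmint:/bin/bash'

# Split on colons into the seven fields
IFS=: read -r username password uid gid comment home shell <<EOF
$record
EOF

echo "user=$username uid=$uid gid=$gid"
echo "home=$home shell=$shell"
```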

Another important file that you must become familiar with is /etc/group, where group information is stored. As is the case with /etc/passwd, there is one record per line and its fields are also delimited by a colon:

[Group name]:[Group password]:[GID]:[Group members]

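where,

- [Group name] is the name of the group.

- [Group password]: does this group use a group password? (An “x” means that the password, if one is set, is stored in /etc/gshadow rather than in this file.)

- [GID]: same as in /etc/passwd.

- [Group members]: a list of users, separated by commas, that are members of each group.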

After adding an account, you can edit the user’s account information at any time using usermod, whose basic syntax is:

# usermod [options] [username]

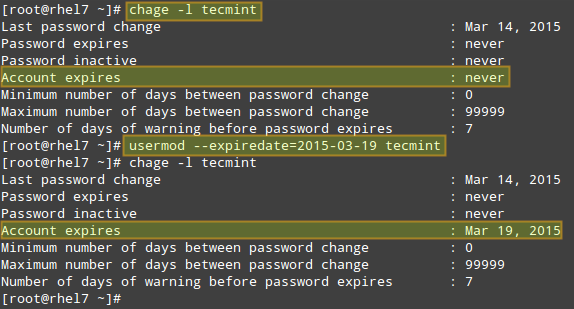

EXAMPLE 1: Setting the expiry date for an account

If you work for a company that has a policy of enabling accounts only for a certain interval of time, or if you want to grant access for a limited period, you can use the --expiredate flag followed by a date in YYYY-MM-DD format. To verify that the change has been applied, you can compare the output of

# chage -l [username]

before and after updating the account expiry date, as shown in the following image.

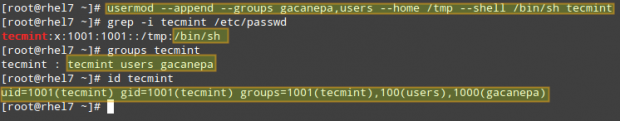

EXAMPLE 2: Adding the user to supplementary groups

Besides the primary group that is created when a new user account is added to the system, a user can be added to supplementary groups using the combined -aG, or --append --groups, options, followed by a comma-separated list of groups.

EXAMPLE 3: Changing the default location of the user’s home directory and / or changing its shell

If for some reason you need to change the default location of the user’s home directory (other than /home/username), you will need to use the -d, or --home, option, followed by the absolute path to the new home directory.

If a user wants to use a shell other than bash (for example, sh), which gets assigned by default, use usermod with the --shell flag, followed by the path to the new shell.

EXAMPLE 4: Displaying the groups a user is a member of

After adding the user to a supplementary group, you can verify that he/she now actually belongs to such group(s):

# groups [username]

# id [username]

The following image depicts Examples 2 through 4:

The command used in that example is:

# usermod --append --groups gacanepa,users --home /tmp --shell /bin/sh tecmint

To remove a user from a group, omit the --append switch in the command above and list the groups you want the user to belong to following the --groups flag.

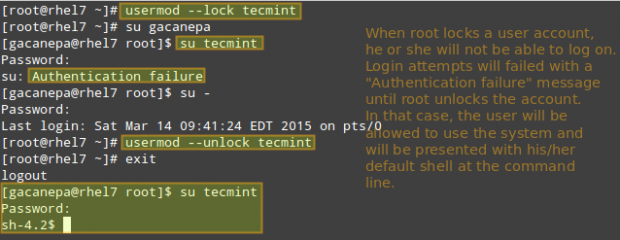

EXAMPLE 5: Disabling account by locking password

To disable an account, you will need to use either the -L (uppercase L) or the --lock option to lock a user’s password. This will prevent the user from being able to log on.

EXAMPLE 6: Unlocking password

When you need to re-enable the user so that he/she can log on to the server again, use the -U or the --unlock option to unlock a user’s password that was previously locked, as explained in Example 5 above.

# usermod --unlock tecmint

The following image illustrates Examples 5 and 6:

EXAMPLE 7: Deleting a group or a user account

To delete a group, you’ll want to use groupdel, whereas to delete a user account you will use userdel (add the -r switch if you also want to delete the contents of its home directory and mail spool):

# groupdel [group_name] # Delete a group

# userdel -r [user_name] # Remove user_name from the system, along with his/her home directory and mail spool

If there are files owned by group_name, they will not be deleted, but the group owner will be set to the GID of the group that was deleted.

Listing, Setting and Changing Standard ugo/rwx Permissions

The well-known ls command is one of the best friends of any system administrator. When used with the -l flag, this tool allows you to view a list of a directory’s contents in long (or detailed) format.

However, this command can also be applied to a single file. Either way, the first 10 characters in the output of ls -l represent each file’s attributes.

The first char of this 10-character sequence is used to indicate the file type:

- - (hyphen): a regular file

- d: a directory

- l: a symbolic link

- c: a character device (which treats data as a stream of bytes, e.g. a terminal)

- b: a block device (which handles data in blocks, e.g. storage devices)

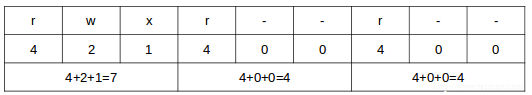

The next nine characters of the file attributes, divided in groups of three from left to right, are called the file mode and indicate the read (r), write(w), and execute (x) permissions granted to the file’s owner, the file’s group owner, and the rest of the users (commonly referred to as “the world”), respectively.

While the read permission on a file allows it to be opened and read, the same permission on a directory allows its contents to be listed if the execute permission is also set. In addition, the execute permission on a file allows it to be handled as a program and run.

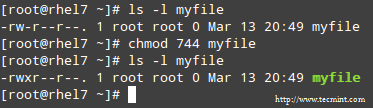

File permissions are changed with the chmod command, whose basic syntax is as follows:

# chmod [new_mode] file

where new_mode is either an octal number or an expression that specifies the new permissions. Feel free to use whichever form works best for you in each case.

The octal number can be calculated from the binary equivalent, which in turn is obtained from the desired permissions for the owner of the file, the owner group, and the world. The presence of a given permission contributes a power of 2 (r = 2^2 = 4, w = 2^1 = 2, x = 2^0 = 1), while its absence contributes 0. For example, rwxr--r-- corresponds to binary 111 100 100, that is, 4+2+1, 4+0+0, and 4+0+0, which gives octal 744.

To set the file’s permissions as indicated above in octal form, type:

# chmod 744 myfile
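The arithmetic can also be checked from the shell. The following is only a minimal sketch: it assumes GNU coreutils (for stat -c) and works on a throwaway file created with mktemp, and mode_to_octal is a hypothetical helper written for this illustration, not a standard tool.

```shell
# Convert a 9-character symbolic mode (e.g. rwxr--r--) to its octal form.
# mode_to_octal is a hypothetical helper written for this example.
mode_to_octal() {
    sym=$1
    result=""
    for start in 1 4 7; do
        triplet=$(printf '%s' "$sym" | cut -c"$start"-$((start + 2)))
        digit=0
        case $triplet in r??) digit=$((digit + 4)) ;; esac   # r = 2^2
        case $triplet in ?w?) digit=$((digit + 2)) ;; esac   # w = 2^1
        case $triplet in ??x) digit=$((digit + 1)) ;; esac   # x = 2^0
        result="$result$digit"
    done
    printf '%s\n' "$result"
}

octal=$(mode_to_octal rwxr--r--)

# Apply the mode to a temporary file and read it back (GNU stat assumed).
tmpfile=$(mktemp)
chmod "$octal" "$tmpfile"
applied=$(stat -c '%a' "$tmpfile")
listing=$(ls -l "$tmpfile" | cut -c1-10)
rm -f "$tmpfile"

echo "computed: $octal, applied: $applied, ls -l shows: $listing"
```

Comparing the three values printed at the end is the same check that the text suggests doing by eye with ls -l.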

Please take a minute to compare our previous calculation to the actual output of ls -l after changing the file’s permissions:

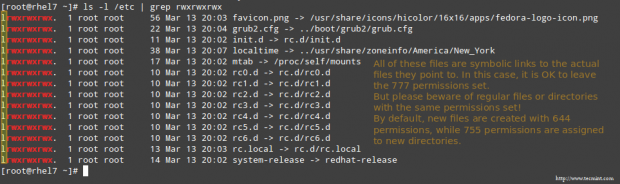

EXAMPLE 8: Searching for files with 777 permissions

As a security measure, you should make sure that files with 777 permissions (read, write, and execute for everyone) are avoided like the plague under normal circumstances. Although we will explain in a later tutorial how to more effectively locate all the files in your system with a certain permission set, for now you can combine ls with grep to obtain such information.

In the following example, we will look for files with 777 permissions in the /etc directory only. Note that we will use pipelining as explained in Part 2: File and Directory Management of this RHCSA series:

# ls -l /etc | grep rwxrwxrwx
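One caveat with the pattern above is that symbolic links are always listed as lrwxrwxrwx on Linux, so they match as well. The sketch below illustrates the difference in a scratch directory (the file names are made up for the demonstration) by anchoring the pattern to the file-type character:

```shell
# Build a scratch directory with one world-writable file, one ordinary
# file, and one symbolic link (all names here are made up for the demo).
demo_dir=$(mktemp -d)
touch "$demo_dir/open.sh" "$demo_dir/normal.txt"
chmod 777 "$demo_dir/open.sh"
chmod 644 "$demo_dir/normal.txt"
ln -s normal.txt "$demo_dir/link"

# Unanchored pattern: counts the symlink (lrwxrwxrwx) as well.
loose=$(ls -l "$demo_dir" | grep -c 'rwxrwxrwx')

# Anchored pattern: regular files only (leading hyphen = regular file).
strict=$(ls -l "$demo_dir" | grep '^-rwxrwxrwx' | awk '{print $NF}')

rm -rf "$demo_dir"
echo "unanchored matches: $loose; regular files with 777: $strict"
```

Anchoring with ^- restricts the search to regular files; use ^d instead to look for world-writable directories.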

EXAMPLE 9: Assigning a specific permission to all users

Shell scripts, along with some binaries that all users should have access to (not just their corresponding owner and group), should have the execute bit set accordingly (please note that we will discuss a special case later):

# chmod a+x script.sh

Note that we can also set a file’s mode using an expression that indicates the owner’s rights with the letter u, the group owner’s rights with the letter g, and the rest with o. All of these rights can be represented at the same time with the letter a. Permissions are granted (or revoked) with the + or - signs, respectively.
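As a brief illustration of the symbolic form, the following sketch applies a few expressions to a temporary file and reads the resulting octal mode back after each step. It assumes GNU stat; the starting mode of 600 is an arbitrary choice for the demonstration.

```shell
f=$(mktemp)
chmod 600 "$f"                 # start from rw-------

chmod a+x "$f"                 # grant execute to owner, group, and others
after_a=$(stat -c '%a' "$f")

chmod go-x "$f"                # revoke execute from group and others
after_go=$(stat -c '%a' "$f")

chmod u=rw,g=r,o= "$f"         # set each class explicitly
after_set=$(stat -c '%a' "$f")

rm -f "$f"
echo "a+x: $after_a, go-x: $after_go, u=rw,g=r,o=: $after_set"
```

The = operator sets a class to exactly the listed permissions, which is often the safest choice when the current mode is unknown.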

A long directory listing also shows the file’s owner and its group owner in the third and fourth columns, respectively (after the mode and the link count). This feature serves as a first-level access control method to files in a system:

To change file ownership, you will use the chown command. Note that you can change the file and group ownership at the same time or separately:

# chown user:group file

Note that you can change the user or the group, or both attributes at the same time. To change only the group, leave the user blank but keep the colon; to change only the owner, give the user name without a colon (with GNU chown, user: with a trailing colon also sets the group to that user’s login group), for example:

# chown :group file   # Change group ownership only

# chown user file     # Change user ownership only

EXAMPLE 10: Cloning ownership from one file to another

If you would like to “clone” ownership from one file to another, you can do so using the --reference flag, as follows:

# chown --reference=ref_file file

where the owner and group of ref_file will be assigned to file as well:

Setting Up SETGID Directories for Collaboration

Should you need to grant access to all the files owned by a certain group inside a specific directory, you will most likely set the setgid bit on that directory. When the setgid bit is set on an executable file, the effective GID of the user running it becomes that of the file’s group owner.

Thus, any user can access a file under the privileges granted to the group owner of that file. In addition, when the setgid bit is set on a directory, newly created files inherit the same group as the directory, and newly created subdirectories will also inherit the setgid bit of the parent directory.

# chmod g+s [directory]

To set the setgid in octal form, prepend the number 2 to the current (or desired) basic permissions.

# chmod 2755 [directory]
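The inheritance behaviour described above can be observed in a scratch directory. This is a minimal sketch assuming Linux and GNU stat; the names report.txt and archive are made up for the demonstration.

```shell
shared=$(mktemp -d)
chmod 2755 "$shared"                     # leading 2 = setgid bit
dir_mode=$(stat -c '%a' "$shared")

touch "$shared/report.txt"               # new file inside the directory
mkdir "$shared/archive"                  # new subdirectory

dir_gid=$(stat -c '%g' "$shared")
file_gid=$(stat -c '%g' "$shared/report.txt")
sub_mode=$(stat -c '%A' "$shared/archive")   # e.g. drwxr-sr-x

rm -rf "$shared"
echo "directory mode: $dir_mode"
echo "directory GID: $dir_gid, new file GID: $file_gid"
echo "new subdirectory mode: $sub_mode"
```

An s (or S) in the group execute position of the subdirectory’s mode string shows that the setgid bit was inherited.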

Conclusion

A solid knowledge of user and group management, along with standard and special Linux permissions, when coupled with practice, will allow you to quickly identify and troubleshoot issues with file permissions in your RHEL 7 server.

I assure you that as you follow the steps outlined in this article and use the system documentation (as explained in Part 1: Reviewing Essential Commands & System Documentation of this series) you will master this essential competence of system administration.

Feel free to let us know if you have any questions or comments using the form below.

RHCSA Series: Editing Text Files with Nano and Vim / Analyzing text with grep and regexps – Part 4

Every system administrator has to deal with text files as part of their daily responsibilities. That includes editing existing files (most likely configuration files) or creating new ones. It has been said that if you want to start a holy war in the Linux world, you can ask sysadmins what their favorite text editor is and why. We are not going to do that in this article, but we will present a few tips for using two of the most widely used text editors in RHEL 7: nano (due to its simplicity and ease of use, especially for new users), and vi/m (due to its many features, which make it more than a simple editor). I am sure that you can find many more reasons to use one or the other, or perhaps some other editor such as emacs or pico. It’s entirely up to you.

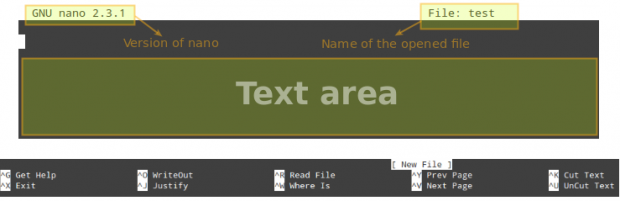

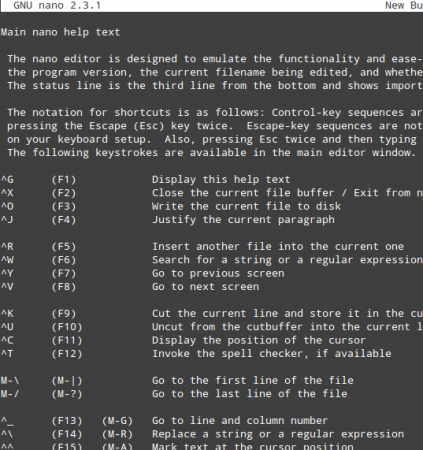

Editing Files with Nano Editor

To launch nano, type nano at the command prompt, optionally followed by a filename (in this case, if the file exists, it will be opened in editing mode). If the file does not exist, or if we omit the filename, nano will also open in editing mode but will present a blank screen for us to start typing:

As you can see in the previous image, nano displays at the bottom of the screen several functions that are available via the indicated shortcuts (^, aka caret, indicates the Ctrl key). To name a few of them:

- Ctrl + G: brings up the help menu with a complete list of functions and descriptions.

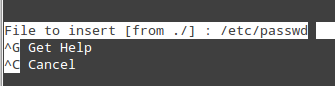

- Ctrl + O: saves changes made to a file. It will let you save the file with the same name or a different one. Then press Enter to confirm.

- Ctrl + X: exits the current file. If changes have not been saved, nano asks whether to save them before exiting.

- Ctrl + R: lets you choose a file to insert its contents into the present file by specifying a full path.

For example, entering /etc/passwd at the prompt will insert the contents of /etc/passwd into the current file.

- Ctrl + K: cuts the current line.

- Ctrl + U: pastes the text that was last cut.

- Ctrl + C: cancels the current operation and places you at the previous screen.

To easily navigate the opened file, nano provides the following features:

- Ctrl + F and Ctrl + B move the cursor forward or backward, whereas Ctrl + P and Ctrl + N move it up or down one line at a time, respectively, just like the arrow keys.

- Ctrl + space and Alt + space move the cursor forward and backward one word at a time.

Finally,

- Ctrl + _ (underscore) and then entering X,Y will take you precisely to Line X, column Y, if you want to place the cursor at a specific place in the document.

For example, entering 15,14 will take you to line 15, column 14 in the current document.

If you can recall your early Linux days, especially if you came from Windows, you will probably agree that starting off with nano is the best way to go for a new user.

Editing Files with Vim Editor

Vim is an improved version of vi, a famous text editor that is available on all POSIX-compliant *nix systems, such as RHEL 7. If you have the chance and can install vim, go ahead; if not, most (if not all) of the tips given in this article should also work with vi.

One of vim’s distinguishing features is the different modes in which it operates:

- Command mode will allow you to browse through the file and enter commands, which are brief and case-sensitive combinations of one or more letters. If you need to repeat one of them a certain number of times, you can prefix it with a number (there are only a few exceptions to this rule). For example, yy (or Y, short for yank) copies the entire current line, whereas 4yy (or 4Y) copies the entire current line along with the next three lines (4 lines in total).

- In ex mode, you can manipulate files (including saving a current file and running outside programs or commands). To enter ex mode, we must type a colon (:) starting from command mode (or in other words, Esc + :), directly followed by the name of the ex-mode command that you want to use.

- In insert mode, which is accessed by typing the letter i, we simply enter text. Most keystrokes result in text appearing on the screen.

- We can always enter command mode (regardless of the mode we’re working in) by pressing the Esc key.

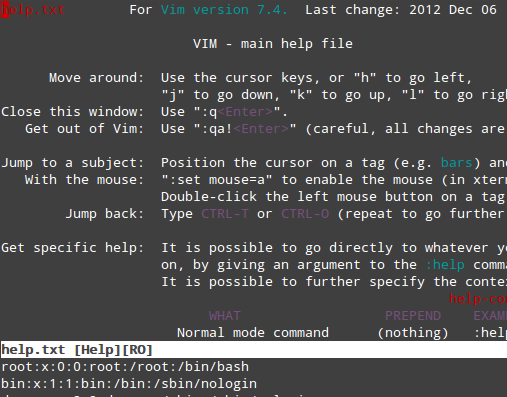

Let’s see how we can perform the same operations that we outlined for nano in the previous section, but now with vim. Don’t forget to hit the Enter key to confirm the vim command!

To access vim’s full manual from the command line, type :help while in command mode and then press Enter:

The upper section presents an index list of contents, with defined sections dedicated to specific topics about vim. To navigate to a section, place the cursor over it and press Ctrl + ] (closing square bracket). Note that the bottom section displays the current file.

1. To save changes made to a file, run any of the following commands from command mode:

:wq!

:x!

ZZ (yes, double Z without the colon at the beginning)

2. To exit discarding changes, use :q!. This command will also allow you to exit the help menu described above, and return to the current file in command mode.

3. Cut N number of lines: type Ndd while in command mode.

4. Copy M number of lines: type Myy while in command mode.

5. Paste lines that were previously cut or copied: press p to paste below the current line (or P to paste above it) while in command mode.

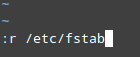

6. To insert the contents of another file into the current one:

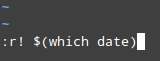

:r filename

For example, to insert the contents of /etc/fstab, type :r /etc/fstab.

7. To insert the output of a command into the current document:

:r! command

For example, to insert the date and time in the line below the current position of the cursor, type :r! date.

In another article that I wrote (Part 2 of the LFCS series), I explained in greater detail the keyboard shortcuts and functions available in vim. You may want to refer to that tutorial for further examples on how to use this powerful text editor.

Analyzing Text with Grep and Regular Expressions

By now you have learned how to create and edit files using nano or vim. Say you become a text editor ninja, so to speak. Now what? Among other things, you will also need to know how to search for regular expressions inside text.

A regular expression (also known as “regex” or “regexp“) is a way of identifying a text string or pattern so that a program can compare the pattern against arbitrary text strings. Although the use of regular expressions along with grep would deserve an entire article on its own, let us review the basics here:

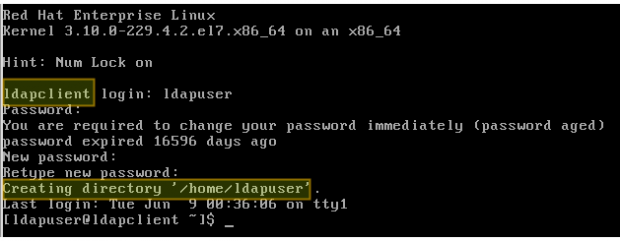

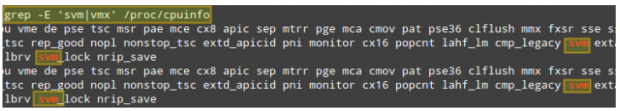

1. The simplest regular expression is an alphanumeric string (e.g., the word “svm”) or two (when two are present, you can use the | (OR) operator):

# grep -Ei 'svm|vmx' /proc/cpuinfo

The presence of either of those two strings indicates that your processor supports virtualization:

2. A second kind of a regular expression is a range list, enclosed between square brackets.

For example, c[aeiou]t matches the strings cat, cet, cit, cot, and cut, whereas [a-z] and [0-9] match any lowercase letter or decimal digit, respectively. If you want to repeat a regular expression a certain number of times (say, X), type {X} immediately following the regexp.
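To see range lists and the {X} quantifier at work without touching any system file, here is a small sketch that runs grep -E against a made-up word list (the words themselves are arbitrary test data):

```shell
# Arbitrary sample words, one per line.
words='cat
cot
cut
cart
c4t
coot
CUT'

# c, then exactly one lowercase vowel, then t.
vowel=$(printf '%s\n' "$words" | grep -E '^c[aeiou]t$' | paste -sd ' ' -)

# Any line containing a decimal digit.
digit=$(printf '%s\n' "$words" | grep -E '[0-9]' | paste -sd ' ' -)

# The letter o repeated exactly twice between c and t.
twice=$(printf '%s\n' "$words" | grep -E '^co{2}t$' | paste -sd ' ' -)

echo "vowel: $vowel"
echo "digit: $digit"
echo "twice: $twice"
```

Note that CUT is not matched by the first pattern because grep is case-sensitive unless -i is given.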

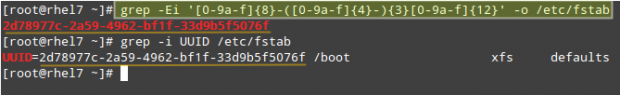

For example, let’s extract the UUIDs of storage devices from /etc/fstab:

# grep -Ei '[0-9a-f]{8}-([0-9a-f]{4}-){3}[0-9a-f]{12}' -o /etc/fstab

The first expression in brackets [0-9a-f] is used to denote lowercase hexadecimal characters, and {8} is a quantifier that indicates the number of times that the preceding match should be repeated (the first sequence of characters in a UUID is an 8-character long hexadecimal string).

The parentheses, the {4} quantifier, and the hyphen indicate that the next sequence is a 4-character long hexadecimal string, and the quantifier that follows ({3}) denotes that the expression should be repeated 3 times.

Finally, the last sequence of 12-character long hexadecimal string in the UUID is retrieved with [0-9a-f]{12}, and the -o option prints only the matched (non-empty) parts of the matching line in /etc/fstab.
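The same expression can be tried safely against sample text instead of the real /etc/fstab. In the sketch below, the sample lines are made up (the two UUIDs are reused from later in this series, one of them uppercased to show the effect of -i):

```shell
# Made-up fstab-like content for the demonstration.
sample='UUID=ba77d113-f849-4ddf-8048-13860399fca8 /boot xfs defaults 0 0
/dev/mapper/rhel-root / xfs defaults 0 0
UUID=58F89C5A-F694-4443-83D6-2E83878E30E4 /data ext4 defaults 0 2'

# -E: extended regexps, -i: ignore case, -o: print only the matched part.
uuids=$(printf '%s\n' "$sample" |
    grep -Eio '[0-9a-f]{8}-([0-9a-f]{4}-){3}[0-9a-f]{12}')

count=$(printf '%s\n' "$uuids" | wc -l | tr -d ' ')
first=$(printf '%s\n' "$uuids" | head -n 1)

echo "found $count UUIDs; first one: $first"
```

The line without a UUID contributes nothing to the output, since -o prints only the matching portions.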

3. POSIX character classes.

| Character Class | Matches… |
| --- | --- |
| [[:alnum:]] | Any alphanumeric [a-zA-Z0-9] character |
| [[:alpha:]] | Any alphabetic [a-zA-Z] character |
| [[:blank:]] | Spaces or tabs |
| [[:cntrl:]] | Any control characters (ASCII 0 to 31, and 127) |
| [[:digit:]] | Any numeric digits [0-9] |
| [[:graph:]] | Any visible characters |
| [[:lower:]] | Any lowercase [a-z] character |
| [[:print:]] | Any non-control characters |
| [[:space:]] | Any whitespace |
| [[:punct:]] | Any punctuation marks |
| [[:upper:]] | Any uppercase [A-Z] character |
| [[:xdigit:]] | Any hex digits [0-9a-fA-F] |
| \w | Any letters, numbers, and underscores [a-zA-Z0-9_] (a GNU grep shorthand rather than a POSIX class) |

For example, we may be interested in finding out what the used UIDs and GIDs (refer to Part 2 of this series to refresh your memory) are for real users that have been added to our system. Thus, we will search for sequences of 4 digits in /etc/passwd:

# grep -Ei '[[:digit:]]{4}' /etc/passwd

The above example may not be the best case of use of regular expressions in the real world, but it clearly illustrates how to use POSIX character classes to analyze text along with grep.
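A slightly stricter variant can be sketched against sample data. The lines below are made-up passwd-style entries (not read from a real system); the second pattern anchors the match to the UID field so that five-digit IDs are not reported.

```shell
# Made-up /etc/passwd-style sample lines.
sample_passwd='root:x:0:0:root:/root:/bin/bash
daemon:x:2:2:daemon:/sbin:/sbin/nologin
tecmint:x:1000:1000::/home/tecmint:/bin/bash
gacanepa:x:1001:1001::/home/gacanepa:/bin/bash
nfsnobody:x:65534:65534:Anonymous NFS User:/var/lib/nfs:/sbin/nologin'

# Any line containing a run of four digits (quoted so the shell does not
# try to expand the brackets or braces itself).
loose=$(printf '%s\n' "$sample_passwd" |
    grep -E '[[:digit:]]{4}' | cut -d: -f1 | paste -sd ' ' -)

# Only lines whose third field (the UID) is exactly four digits.
exact=$(printf '%s\n' "$sample_passwd" |
    grep -E '^[^:]*:[^:]*:[[:digit:]]{4}:' | cut -d: -f1 | paste -sd ' ' -)

echo "four digits anywhere: $loose"
echo "four-digit UID only:  $exact"
```

The first pattern also reports nfsnobody because 65534 contains a run of four digits; the anchored pattern does not.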

Conclusion

In this article we have provided some tips to make the most of nano and vim, two text editors for the command-line users. Both tools are supported by extensive documentation, which you can consult in their respective official web sites (links given below) and using the suggestions given in Part 1 of this series.

Reference Links

http://www.nano-editor.org/

http://www.vim.org/

RHCSA Series: Process Management in RHEL 7: Boot, Shutdown, and Everything in Between – Part 5

We will start this article with a brief overview of what happens from the moment you press the Power button to turn on your RHEL 7 server until you are presented with the login screen in a command line interface.

Please note that:

1. the same basic principles apply, with perhaps minor modifications, to other Linux distributions as well, and

2. the following description is not intended to represent an exhaustive explanation of the boot process, but only the fundamentals.

Linux Boot Process

1. The POST (Power On Self Test) initializes and performs hardware checks.

2. When the POST finishes, the system control is passed to the first stage boot loader, which is stored on either the boot sector of one of the hard disks (for older systems using BIOS and MBR), or a dedicated (U)EFI partition.

3. The first stage boot loader then loads the second stage boot loader, most commonly GRUB (GRand Unified Bootloader), which resides inside /boot. GRUB in turn loads the kernel and the initial RAM-based file system (also known as initramfs, which contains the programs and binary files needed to ultimately mount the actual root filesystem).

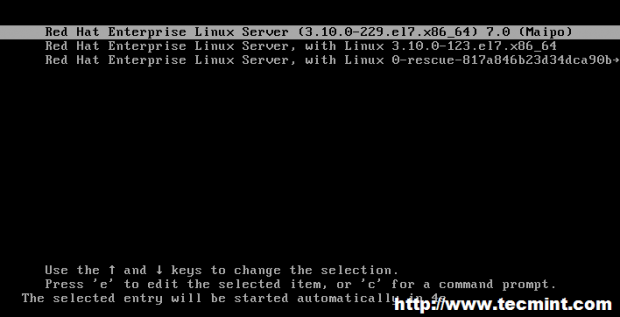

4. We are presented with a splash screen that allows us to choose an operating system and kernel to boot:

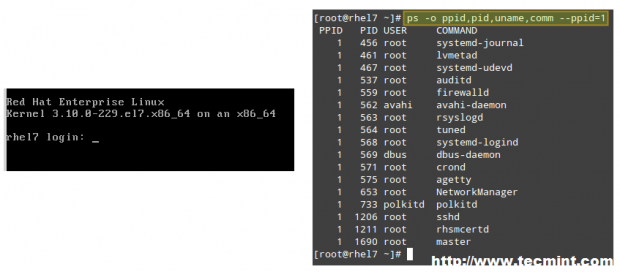

5. The kernel sets up the hardware attached to the system and, once the root filesystem has been mounted, launches the process with PID 1, which in turn will initialize other processes and present us with a login prompt.

Note that, if we wish to do so at a later time, we can examine the specifics of this process using the dmesg command and filtering its output using the tools that we have explained in previous articles of this series.

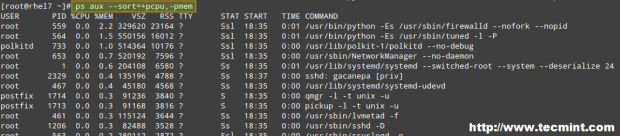

In the example above, we used the well-known ps command to display a list of current processes whose parent process (or in other words, the process that started them) is systemd (the system and service manager that most modern Linux distributions have switched to) during system startup:

# ps -o ppid,pid,uname,comm --ppid=1

Remember that the -o flag (short for --format) allows you to present the output of ps in a customized format to suit your needs using the keywords specified in the STANDARD FORMAT SPECIFIERS section in man ps.

Another case in which you will want to define the output of ps instead of going with the default is when you need to find processes that are causing a significant CPU and / or memory load, and sort them accordingly:

# ps aux --sort=+pcpu         # Sort by %CPU (ascending)

# ps aux --sort=-pcpu         # Sort by %CPU (descending)

# ps aux --sort=+pmem         # Sort by %MEM (ascending)

# ps aux --sort=-pmem         # Sort by %MEM (descending)

# ps aux --sort=+pcpu,-pmem   # Combine sort by %CPU (ascending) and %MEM (descending)

An Introduction to SystemD

Few decisions in the Linux world have caused more controversies than the adoption of systemd by major Linux distributions. Systemd’s advocates name as its main advantages the following facts:

1. Systemd allows more processing to be done in parallel during system startup (as opposed to the older SysVinit, which tends to be slower because it starts processes one by one, checks whether one depends on another, and then waits for daemons to launch so that more services can start).

2. It works as a dynamic resource manager in a running system. Thus, services are started when needed (to avoid consuming system resources if they are not being used) instead of being launched without a valid reason during boot.

3. It is backwards compatible with SysVinit scripts.

Systemd is controlled by the systemctl utility. If you come from a SysVinit background, chances are you will be familiar with:

- the service tool, which in those older systems was used to manage SysVinit scripts,

- the chkconfig utility, which served the purpose of updating and querying runlevel information for system services, and

- shutdown, which you must have used several times to either restart or halt a running system.

The following table shows the similarities between the use of these legacy tools and systemctl:

| Legacy tool | Systemctl equivalent | Description |

| service name start | systemctl start name | Start name (where name is a service) |

| service name stop | systemctl stop name | Stop name |

| service name condrestart | systemctl try-restart name | Restarts name (if it’s already running) |

| service name restart | systemctl restart name | Restarts name |

| service name reload | systemctl reload name | Reloads the configuration for name |

| service name status | systemctl status name | Displays the current status of name |

| service –status-all | systemctl | Displays the status of all current services |

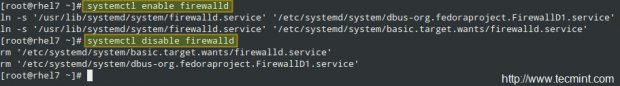

| chkconfig name on | systemctl enable name | Enable name to run on startup as specified in the unit file (the file to which the symlink points). The process of enabling or disabling a service to start automatically on boot consists in adding or removing symbolic links inside the /etc/systemd/system directory. |

| chkconfig name off | systemctl disable name | Disables name to run on startup as specified in the unit file (the file to which the symlink points) |

| chkconfig –list name | systemctl is-enabled name | Verify whether name (a specific service) is currently enabled |

| chkconfig –list | systemctl –type=service | Displays all services and tells whether they are enabled or disabled |

| shutdown -h now | systemctl poweroff | Power-off the machine (halt) |

| shutdown -r now | systemctl reboot | Reboot the system |

Systemd also introduced the concepts of units (which can be a service, a mount point, a device, or a network socket) and targets (which is how systemd manages to start several related processes at the same time, and which can be considered the rough, though not exact, equivalent of runlevels in SysVinit-based systems).

Summing Up

Other tasks related with process management include, but may not be limited to, the ability to:

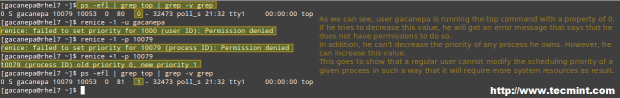

1. Adjust the execution priority of a process as far as the use of system resources is concerned:

This is accomplished through the renice utility, which alters the scheduling priority of one or more running processes. In simple terms, the scheduling priority is a feature that allows the kernel (present in versions >= 2.6) to allocate system resources as per the assigned execution priority (aka niceness, in a range from -20 through 19) of a given process.

The basic syntax of renice is as follows:

# renice [-n] priority [-gpu] identifier

In the generic command above, the first argument is the priority value to be used, whereas the other argument can be interpreted as process IDs (which is the default setting), process group IDs, user IDs, or user names. A normal user (other than root) can only modify the scheduling priority of a process he or she owns, and only increase the niceness level (which means taking up less system resources).
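As a small, self-contained illustration, the sketch below starts a harmless background process, raises its niceness, and reads the value back. It assumes Linux (the nice value is read from field 19 of /proc/PID/stat) and the util-linux renice; the niceness of 5 is an arbitrary choice.

```shell
# Start a harmless background process to experiment on.
sleep 30 &
pid=$!

# Field 19 of /proc/PID/stat holds the nice value (Linux-specific).
before=$(awk '{print $19}' "/proc/$pid/stat")

# Raise the niceness; an unprivileged user may only increase it.
renice -n 5 -p "$pid" >/dev/null
after=$(awk '{print $19}' "/proc/$pid/stat")

# Clean up the test process.
kill "$pid"
wait "$pid" 2>/dev/null || true

echo "niceness before: $before, after: $after"
```

Trying to lower the value again (for example, renice -n 0) as a regular user is expected to be refused, which matches the restriction described above.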

2. Kill (or interrupt the normal execution of) a process as needed:

In more precise terms, killing a process entails sending it a signal to either finish its execution gracefully (SIGTERM=15) or immediately (SIGKILL=9) through the kill or pkill commands.

The difference between these two tools is that the former is used to terminate a specific process or a process group altogether, while the latter allows you to do the same based on name and other attributes.

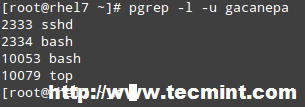

In addition, pkill comes bundled with pgrep, which shows you the PIDs that will be affected should pkill be used. For example, before running:

# pkill -u gacanepa

It may be useful to view at a glance which are the PIDs owned by gacanepa:

# pgrep -l -u gacanepa

By default, both kill and pkill send the SIGTERM signal to the process. This signal can be ignored (while the process finishes its execution or for good), so when you seriously need to stop a running process with a valid reason, you will need to specify the SIGKILL signal on the command line:

# kill -9 identifier                 # Kill a process or a process group

# kill -s SIGNAL identifier          # Idem

# pkill --signal SIGNAL identifier   # Kill a process by name or other attributes
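The effect of the two signals can be observed on throwaway processes. The following sketch uses only the shell’s kill and wait builtins on background sleep commands; it relies on the common shell convention that a process terminated by signal N is reported with exit status 128 + N.

```shell
# Default signal (SIGTERM, 15): a polite request to terminate.
sleep 60 &
term_pid=$!
kill "$term_pid"
term_status=0
wait "$term_pid" 2>/dev/null || term_status=$?

# SIGKILL (9): cannot be caught or ignored by the process.
sleep 60 &
kill_pid=$!
kill -s KILL "$kill_pid"
kill_status=0
wait "$kill_pid" 2>/dev/null || kill_status=$?

echo "after SIGTERM: exit status $term_status"
echo "after SIGKILL: exit status $kill_status"
```

Checking the exit status this way is a quick means of telling which signal actually ended a process.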

Conclusion

In this article we have explained the basics of the boot process in a RHEL 7 system, and analyzed some of the tools that are available to help you with managing processes using common utilities and systemd-specific commands.

Note that this list is not intended to cover all the bells and whistles of this topic, so feel free to add your own preferred tools and commands to this article using the comment form below. Questions and other comments are also welcome.

RHCSA Series: Using ‘Parted’ and ‘SSM’ to Configure and Encrypt System Storage – Part 6

In this article we will discuss how to set up and configure local system storage in Red Hat Enterprise Linux 7 using classic tools and introducing the System Storage Manager (also known as SSM), which greatly simplifies this task.

Please note that we will present this topic in this article but will continue its description and usage in the next one (Part 7) due to the vastness of the subject.

Creating and Modifying Partitions in RHEL 7

In RHEL 7, parted is the default utility to work with partitions, and will allow you to:

- Display the current partition table

- Manipulate (increase or decrease the size of) existing partitions

- Create partitions using free space or additional physical storage devices

It is recommended that before attempting the creation of a new partition or the modification of an existing one, you should ensure that none of the partitions on the device are in use (umount /dev/partition), and if you’re using part of the device as swap you need to disable it (swapoff -v /dev/partition) during the process.

The easiest way to do this is to boot RHEL in rescue mode using installation media such as a RHEL 7 installation DVD or USB (Troubleshooting → Rescue a Red Hat Enterprise Linux system) and select Skip when you are prompted to choose an option to mount the existing Linux installation. You will then be presented with a command prompt where you can type the same commands shown below, which create an ordinary partition on a physical device that is not in use.

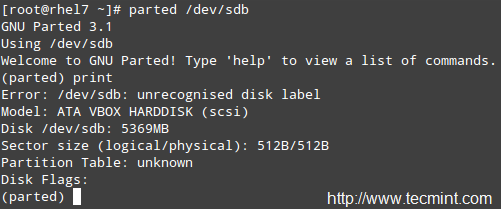

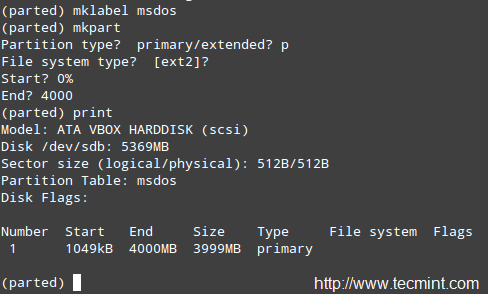

To start parted, simply type:

# parted /dev/sdb

Where /dev/sdb is the device where you will create the new partition; next, type print to display the current drive’s partition table:

As you can see, in this example we are using a virtual drive of 5 GB. We will now proceed to create a 4 GB primary partition and then format it with the xfs filesystem, which is the default in RHEL 7.

You can choose from a variety of file systems. You will need to manually create the partition with mkpart and then format it with mkfs.fstype as usual because mkpart does not support many modern filesystems out-of-the-box.

In the following example we will set a label for the device and then create a primary partition (p) on /dev/sdb, which starts at 0% of the device and ends at 4000 MB (4 GB):

Next, we will format the partition as xfs and print the partition table again to verify that changes were applied:

# mkfs.xfs /dev/sdb1

# parted /dev/sdb print

For older filesystems, you could use the resize command in parted to resize a partition. Unfortunately, this only applies to ext2, fat16, fat32, hfs, linux-swap, and reiserfs (if libreiserfs is installed).

Thus, the only way to resize a partition is by deleting it and creating it again (so make sure you have a good backup of your data!). No wonder the default partitioning scheme in RHEL 7 is based on LVM.

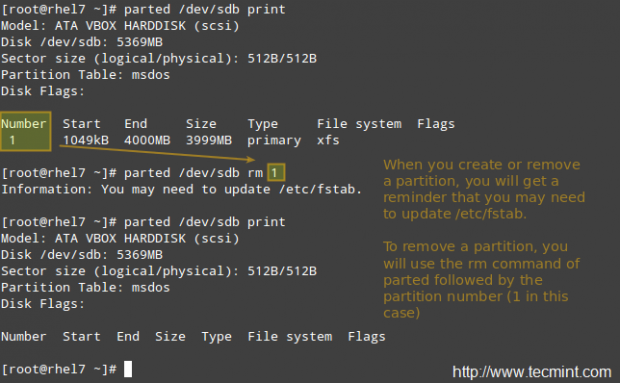

To remove a partition with parted:

# parted /dev/sdb print

# parted /dev/sdb rm 1

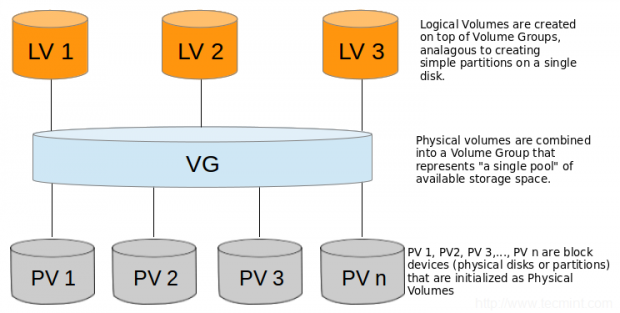

The Logical Volume Manager (LVM)

Once a disk has been partitioned, it can be difficult or risky to change the partition sizes. For that reason, if we plan on resizing the partitions on our system, we should consider the possibility of using LVM instead of the classic partitioning system, where several physical devices can form a volume group that will host a defined number of logical volumes, which can be expanded or reduced without any hassle.

In simple terms, you may find the following diagram useful to remember the basic architecture of LVM.

Creating Physical Volumes, Volume Group and Logical Volumes

Follow these steps in order to set up LVM using classic volume management tools. Since you can expand on this topic by reading the LVM series on this site, I will only outline the basic steps to set up LVM, and then compare them to implementing the same functionality with SSM.

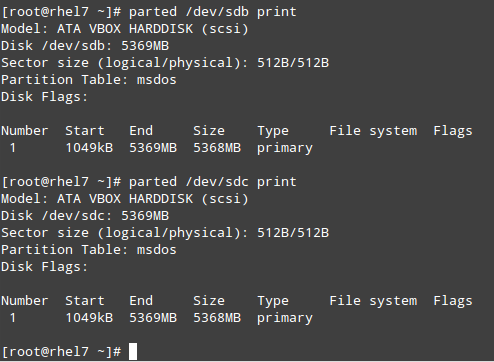

Note that we will use the whole disks /dev/sdb and /dev/sdc as PVs (Physical Volumes), but it’s entirely up to you if you want to do the same.

1. Create partitions /dev/sdb1 and /dev/sdc1 using 100% of the available disk space in /dev/sdb and /dev/sdc:

# parted /dev/sdb print

# parted /dev/sdc print

2. Create 2 physical volumes on top of /dev/sdb1 and /dev/sdc1, respectively.

# pvcreate /dev/sdb1

# pvcreate /dev/sdc1

Remember that you can use pvdisplay /dev/sd{b,c}1 to show information about the newly created PVs.

3. Create a VG on top of the PVs that you created in the previous step:

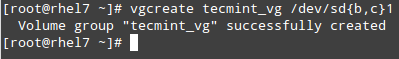

# vgcreate tecmint_vg /dev/sd{b,c}1

Remember that you can use vgdisplay tecmint_vg to show information about the newly created VG.

4. Create three logical volumes on top of VG tecmint_vg, as follows:

# lvcreate -L 3G -n vol01_docs tecmint_vg           # vol01_docs → 3 GB

# lvcreate -L 1G -n vol02_logs tecmint_vg           # vol02_logs → 1 GB

# lvcreate -l 100%FREE -n vol03_homes tecmint_vg    # vol03_homes → 6 GB

Remember that you can use lvdisplay tecmint_vg to show information about the newly created LVs on top of VG tecmint_vg.

5. Format each of the logical volumes with xfs (do NOT use xfs if you’re planning on shrinking volumes later!):

# mkfs.xfs /dev/tecmint_vg/vol01_docs

# mkfs.xfs /dev/tecmint_vg/vol02_logs

# mkfs.xfs /dev/tecmint_vg/vol03_homes

6. Finally, mount them:

# mount /dev/tecmint_vg/vol01_docs /mnt/docs

# mount /dev/tecmint_vg/vol02_logs /mnt/logs

# mount /dev/tecmint_vg/vol03_homes /mnt/homes

Removing Logical Volumes, Volume Group and Physical Volumes

7. Now we will reverse the LVM implementation and remove the LVs, the VG, and the PVs:

# lvremove /dev/tecmint_vg/vol01_docs

# lvremove /dev/tecmint_vg/vol02_logs

# lvremove /dev/tecmint_vg/vol03_homes

# vgremove /dev/tecmint_vg

# pvremove /dev/sd{b,c}1

8. Now let’s install SSM and we will see how to perform the above in ONLY 1 STEP!

# yum update && yum install system-storage-manager

We will use the same names and sizes as before:

# ssm create -s 3G -n vol01_docs -p tecmint_vg --fstype ext4 /mnt/docs /dev/sd{b,c}1

# ssm create -s 1G -n vol02_logs -p tecmint_vg --fstype ext4 /mnt/logs /dev/sd{b,c}1

# ssm create -n vol03_homes -p tecmint_vg --fstype ext4 /mnt/homes /dev/sd{b,c}1

Yes! SSM will let you:

- initialize block devices as physical volumes

- create a volume group

- create logical volumes

- format LVs, and

- mount them using only one command

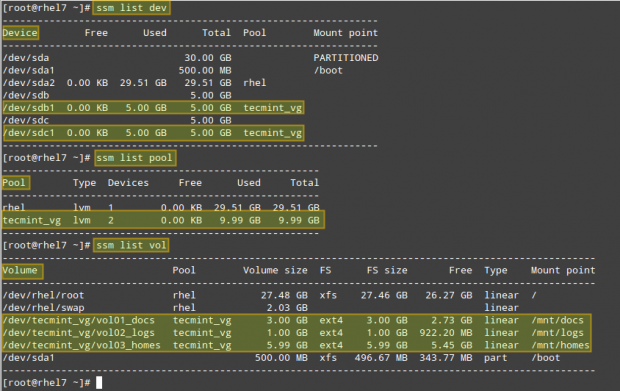

9. We can now display the information about PVs, VGs, or LVs, respectively, as follows:

# ssm list dev

# ssm list pool

# ssm list vol

10. As we already know, one of the distinguishing features of LVM is the possibility to resize (expand or decrease) logical volumes without downtime.

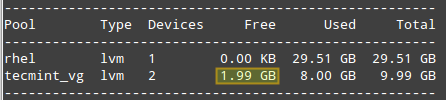

Say we are running out of space in vol02_logs but have plenty of space in vol03_homes. We will resize vol03_homes to 4 GB and expand vol02_logs to use the remaining space:

# ssm resize -s 4G /dev/tecmint_vg/vol03_homes

Run ssm list pool again and take note of the free space in tecmint_vg:

Then do:

# ssm resize -s+1.99G /dev/tecmint_vg/vol02_logs

Note that the plus sign after the -s flag indicates that the specified value should be added to the present value.

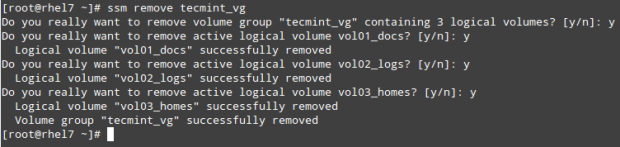

11. Removing logical volumes and volume groups is much easier with ssm as well. A simple,

# ssm remove tecmint_vg

will return a prompt asking you to confirm the deletion of the VG and the LVs it contains:

Managing Encrypted Volumes

SSM also provides system administrators with the capability of managing encryption for new or existing volumes. You will need the cryptsetup package installed first:

# yum update && yum install cryptsetup

Then issue the following command to create an encrypted volume. You will be prompted to enter a passphrase to maximize security:

# ssm create -s 3G -n vol01_docs -p tecmint_vg --fstype ext4 --encrypt luks /mnt/docs /dev/sd{b,c}1

# ssm create -s 1G -n vol02_logs -p tecmint_vg --fstype ext4 --encrypt luks /mnt/logs /dev/sd{b,c}1

# ssm create -n vol03_homes -p tecmint_vg --fstype ext4 --encrypt luks /mnt/homes /dev/sd{b,c}1

Our next task consists of adding the corresponding entries to /etc/fstab so that those logical volumes are available on boot. Rather than using the device identifier (/dev/something), we will use each LV’s UUID (so that our devices will still be uniquely identified should we add other logical volumes or devices), which we can find out with the blkid utility:

# blkid -s UUID -o value /dev/tecmint_vg/vol01_docs

# blkid -s UUID -o value /dev/tecmint_vg/vol02_logs

# blkid -s UUID -o value /dev/tecmint_vg/vol03_homes

In our case:

Next, create the /etc/crypttab file with the following contents (change the UUIDs for the ones that apply to your setup):

docs UUID=ba77d113-f849-4ddf-8048-13860399fca8 none

logs UUID=58f89c5a-f694-4443-83d6-2e83878e30e4 none

homes UUID=92245af6-3f38-4e07-8dd8-787f4690d7ac none

And insert the following entries in /etc/fstab. Note that device_name (/dev/mapper/device_name) is the mapper identifier that appears in the first column of /etc/crypttab.

# Logical volume vol01_docs

/dev/mapper/docs /mnt/docs ext4 defaults 0 2

# Logical volume vol02_logs

/dev/mapper/logs /mnt/logs ext4 defaults 0 2

# Logical volume vol03_homes

/dev/mapper/homes /mnt/homes ext4 defaults 0 2
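Because each mapper name has to match between the two files, it can help to generate both lines from a single list. The following is a minimal sketch, assuming the names, UUIDs, and mount points from this example; crypttab_line and fstab_line are hypothetical helpers, and nothing here writes to /etc.

```shell
#!/bin/sh
# Hypothetical helpers: print one /etc/crypttab line and one /etc/fstab line
# for an encrypted LV. The output goes to the terminal only.
crypttab_line() {
    # $1 = mapper name, $2 = UUID of the underlying LV
    printf '%s UUID=%s none\n' "$1" "$2"
}

fstab_line() {
    # $1 = mapper name (first column of crypttab), $2 = mount point
    printf '/dev/mapper/%s %s ext4 defaults 0 2\n' "$1" "$2"
}

# name:UUID pairs from the example; replace the UUIDs with your own.
for pair in \
    docs:ba77d113-f849-4ddf-8048-13860399fca8 \
    logs:58f89c5a-f694-4443-83d6-2e83878e30e4 \
    homes:92245af6-3f38-4e07-8dd8-787f4690d7ac
do
    name=${pair%%:*}
    uuid=${pair#*:}
    crypttab_line "$name" "$uuid"
    fstab_line "$name" "/mnt/$name"
done
```

Review the printed lines before copying them into the real files, since a wrong entry in either one can interrupt the boot process.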

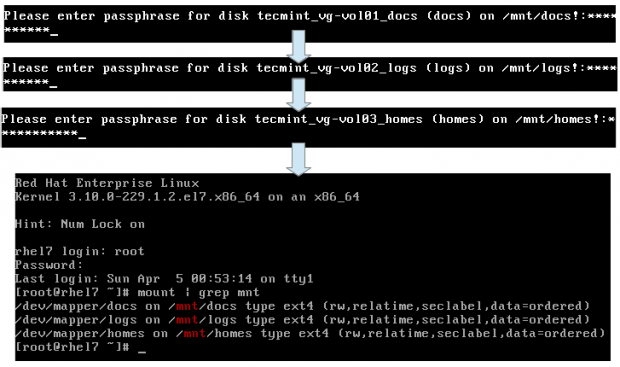

Now reboot (systemctl reboot) and you will be prompted to enter the passphrase for each LV. Afterwards you can confirm that the mount operation was successful by checking the corresponding mount points:

Conclusion

In this tutorial we have started to explore how to set up and configure system storage using classic volume management tools and SSM, which also integrates filesystem and encryption capabilities in one package. This makes SSM an invaluable tool for any sysadmin.

Let us know if you have any questions or comments – feel free to use the form below to get in touch with us!

RHCSA Series: Using ACLs (Access Control Lists) and Mounting Samba / NFS Shares – Part 7

In the last article (RHCSA series Part 6) we started explaining how to set up and configure local system storage using parted and ssm.

We also discussed how to create and mount encrypted volumes with a password during system boot. In addition, we warned you to avoid performing critical storage management operations on mounted filesystems. With that in mind, we will now review the most used file system formats in Red Hat Enterprise Linux 7 and then cover how to mount, use, and unmount network filesystems (CIFS and NFS), both manually and automatically, along with the implementation of access control lists for your system.

Prerequisites

Before proceeding further, please make sure you have a Samba server and an NFS server available (note that NFSv2 is no longer supported in RHEL 7).

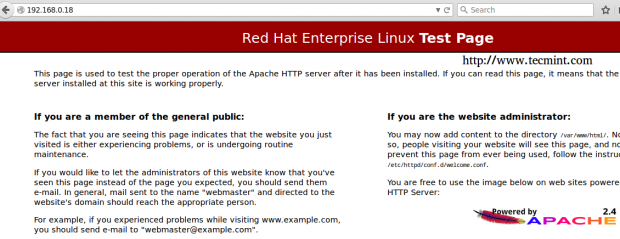

During this guide we will use a machine with IP 192.168.0.10 running both services as the server, and a RHEL 7 box with IP address 192.168.0.18 as the client. Later in the article we will tell you which packages you need to install on the client.

File System Formats in RHEL 7

Beginning with RHEL 7, XFS has been introduced as the default file system for all architectures due to its high performance and scalability. It currently supports a maximum filesystem size of 500 TB as per the latest tests performed by Red Hat and its partners for mainstream hardware.

Also, XFS enables extended user attributes (user_xattr) and POSIX access control lists (acl) by default, which means that you don’t need to specify those options explicitly either on the command line or in /etc/fstab when mounting an XFS filesystem. On ext3 or ext4 (ext2 is considered deprecated as of RHEL 7) these are mount options, and they are disabled explicitly with noacl and nouser_xattr.

Keep in mind that the extended user attributes can be assigned to files and directories for storing arbitrary additional information such as the mime type, character set or encoding of a file, whereas the access permissions for user attributes are defined by the regular file permission bits.

Access Control Lists

Every system administrator, beginner or expert, is well acquainted with regular access permissions on files and directories, which specify certain privileges (read, write, and execute) for the owner, the group, and “the world” (all others). Still, feel free to refer to Part 3 of the RHCSA series if you need to refresh your memory a little bit.

However, since the standard ugo/rwx set does not allow configuring different permissions for different users, ACLs were introduced in order to define more detailed access rights for files and directories than those specified by regular permissions.

In fact, ACL-defined permissions are a superset of the permissions specified by the file permission bits. Let’s see how all of this is applied in the real world.

1. There are two types of ACLs: access ACLs, which can be applied to either a specific file or a directory, and default ACLs, which can only be applied to a directory. If files contained therein do not have an ACL set, they inherit the default ACL of their parent directory.

2. To begin, ACLs can be configured per user, per group, or for users not in the owning group of a file.

3. ACLs are set (and removed) using setfacl, with either the -m or -x options, respectively.

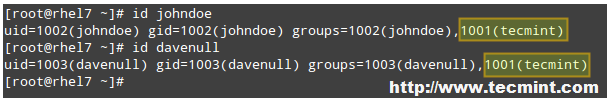

For example, let us create a group named tecmint and add users johndoe and davenull to it:

# groupadd tecmint

# useradd johndoe

# useradd davenull

# usermod -a -G tecmint johndoe

# usermod -a -G tecmint davenull

And let’s verify that both users belong to supplementary group tecmint:

# id johndoe

# id davenull
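If you would rather check membership in a script than read the id output by eye, something along these lines should work. It is a sketch only: list_has and in_group are hypothetical helper names, and the loop assumes the johndoe and davenull accounts created above.

```shell
#!/bin/sh
# list_has "WORD WORD ..." WORD: succeed if WORD is in the space-separated list.
list_has() {
    # $1 is left unquoted on purpose so that it splits into one word per line.
    printf '%s\n' $1 | grep -qxF -- "$2"
}

# in_group USER GROUP: succeed if USER belongs to GROUP.
# `id -nG` prints the names of all groups the user is a member of.
in_group() {
    list_has "$(id -nG "$1" 2>/dev/null)" "$2"
}

for user in johndoe davenull; do
    if in_group "$user" tecmint; then
        echo "$user is in tecmint"
    else
        echo "$user is NOT in tecmint (or does not exist)"
    fi
done
```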

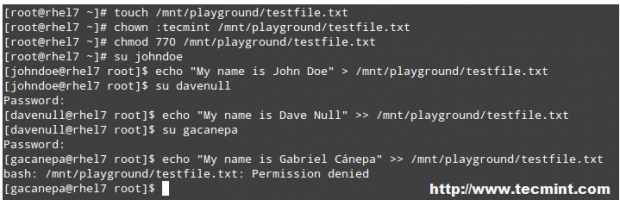

Let’s now create a directory called playground within /mnt, and a file named testfile.txt inside. We will set the group owner to tecmint and change its default ugo/rwx permissions to 770 (read, write, and execute permissions granted to both the owner and the group owner of the file):

# mkdir /mnt/playground

# touch /mnt/playground/testfile.txt

# chown :tecmint /mnt/playground/testfile.txt

# chmod 770 /mnt/playground/testfile.txt

Then switch user to johndoe and davenull, in that order, and write to the file:

$ echo "My name is John Doe" > /mnt/playground/testfile.txt

$ echo "My name is Dave Null" >> /mnt/playground/testfile.txt

So far so good. Now let’s have user gacanepa write to the file – and the write operation will fail, which was to be expected.
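The failure follows directly from the mode: gacanepa is neither the owner nor a member of tecmint, so only the last octal digit of 770 applies to him. The sketch below decodes the write bit for each class; class_can_write is a hypothetical helper written for illustration, and it ignores ACLs and root's ability to bypass these checks.

```shell
#!/bin/sh
# class_can_write MODE CLASS: succeed if the write bit is set for CLASS
# (owner, group, or other) in the octal MODE.
class_can_write() {
    case $2 in
        owner) shift_by=6 ;;
        group) shift_by=3 ;;
        other) shift_by=0 ;;
        *) return 2 ;;
    esac
    # The leading 0 makes the shell read MODE as an octal number.
    bits=$(( (0$1 >> shift_by) & 7 ))
    # Within each rwx triplet, write is the bit with value 2.
    [ $(( bits & 2 )) -ne 0 ]
}

for class in owner group other; do
    if class_can_write 770 "$class"; then
        echo "$class: write allowed"
    else
        echo "$class: write denied"
    fi
done
```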

But what if we actually need user gacanepa (who is not a member of group tecmint) to have write permissions on /mnt/playground/testfile.txt? The first thing that may come to your mind is adding that user account to group tecmint. But that will give him write permissions on ALL files where the write bit is set for the group, and we don’t want that. We only want him to be able to write to /mnt/playground/testfile.txt.

To recap, this is the sequence so far; the last command, run as gacanepa, is the one that fails:

# touch /mnt/playground/testfile.txt

# chown :tecmint /mnt/playground/testfile.txt

# chmod 770 /mnt/playground/testfile.txt

# su johndoe

$ echo "My name is John Doe" > /mnt/playground/testfile.txt

$ su davenull

$ echo "My name is Dave Null" >> /mnt/playground/testfile.txt

$ su gacanepa

$ echo "My name is Gabriel Canepa" >> /mnt/playground/testfile.txt

Let’s give user gacanepa read and write access to /mnt/playground/testfile.txt.

Run as root,

# setfacl -R -m u:gacanepa:rwx /mnt/playground

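and you’ll have successfully added an ACL that allows gacanepa to write to the test file. Note that the -R flag applies the entry recursively to the directory and everything currently inside it, and that rwx also grants execute; to limit the change to the single file, target /mnt/playground/testfile.txt with u:gacanepa:rw instead. Then switch to user gacanepa and try to write to the file again: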

$ echo "My name is Gabriel Canepa" >> /mnt/playground/testfile.txt

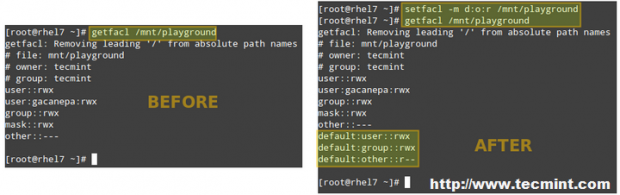

To view the ACLs for a specific file or directory, use getfacl:

# getfacl /mnt/playground/testfile.txt
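The getfacl output is plain text with one colon-separated entry per line, so it is easy to query from a script. The following sketch extracts the permissions of a named user; acl_perms_for_user is a hypothetical helper, and the sample text is an illustrative listing in the usual getfacl layout, not output captured from the system used in this article.

```shell
#!/bin/sh
# acl_perms_for_user NAME: read getfacl-style output on stdin and print the
# permissions of the named-user entry for NAME (nothing if there is none).
acl_perms_for_user() {
    # Fields are type:name:perms; keep only the three permission characters
    # in case an "#effective:" comment follows them.
    awk -F: -v name="$1" '$1 == "user" && $2 == name { print substr($3, 1, 3) }'
}

# Illustrative sample; on a real system, pipe in the output of:
#   getfacl /mnt/playground/testfile.txt
sample='# file: mnt/playground/testfile.txt
# owner: root
# group: tecmint
user::rwx
user:gacanepa:rwx
group::rwx
mask::rwx
other::---'

printf '%s\n' "$sample" | acl_perms_for_user gacanepa
```

The entry with an empty name (user::) belongs to the file owner, which is why the helper matches on the name field as well as the type.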

To set a default ACL on a directory (which its contents will inherit unless overridden), add d: before the rule and specify a directory instead of a file name:

# setfacl -m d:o:r /mnt/playground

The ACL above will allow users not in the owner group to have read access to the future contents of the /mnt/playground directory. Note the difference in the output of getfacl /mnt/playground before and after the change:

Chapter 20 in the official RHEL 7 Storage Administration Guide provides more ACL examples, and I highly recommend you take a look at it and have it handy as reference.

Mounting NFS Network Shares

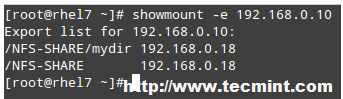

To show the list of NFS shares available on your server, you can use the showmount command with the -e option, followed by the machine name or its IP address. This tool is included in the nfs-utils package:

# yum update && yum install nfs-utils

Then do:

# showmount -e 192.168.0.10

and you will get a list of the available NFS shares on 192.168.0.10:
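When you only need the exported paths, for instance to feed them to a mount loop, the header line and the client column can be filtered out. This is a sketch under assumptions: list_exports is a hypothetical helper, and the sample mirrors the general shape of showmount -e output with a made-up client range.

```shell
#!/bin/sh
# list_exports: read `showmount -e` output on stdin and print only the
# exported paths (the first line is a header, so it is skipped).
list_exports() {
    awk 'NR > 1 && NF > 0 { print $1 }'
}

# Illustrative sample; the client list in the second column is an assumption.
sample='Export list for 192.168.0.10:
/NFS-SHARE 192.168.0.0/24'

printf '%s\n' "$sample" | list_exports
```

On a live client the equivalent would be to pipe showmount -e 192.168.0.10 into list_exports.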

To mount NFS network shares on the local client using the command line on demand, use the following syntax:

# mount -t nfs -o [options] remote_host:/remote/directory /local/directory

which, in our case, translates to:

# mount -t nfs 192.168.0.10:/NFS-SHARE /mnt/nfs

If you get the following error message: “Job for rpc-statd.service failed. See ‘systemctl status rpc-statd.service’ and ‘journalctl -xn’ for details.”, make sure the rpcbind service is enabled and started on your system first:

# systemctl enable rpcbind.socket

# systemctl restart rpcbind.service

and then reboot. That should do the trick and you will be able to mount your NFS share as explained earlier. If you need to mount the NFS share automatically on system boot, add a valid entry to the /etc/fstab file:

remote_host:/remote/directory /local/directory nfs options 0 0

The variables remote_host, /remote/directory, /local/directory, and options (which is optional) are the same ones used when manually mounting an NFS share from the command line. As per our previous example:

192.168.0.10:/NFS-SHARE /mnt/nfs nfs defaults 0 0

Mounting CIFS (Samba) Network Shares

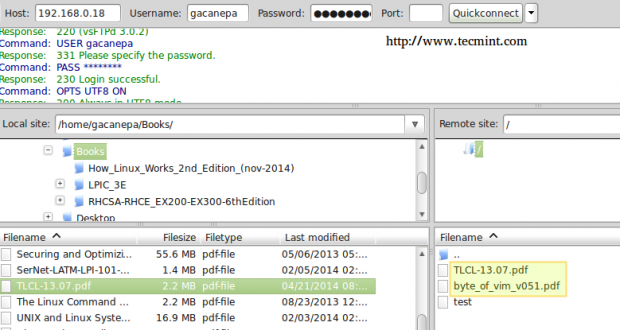

Samba represents the tool of choice to make a network share available in a network with *nix and Windows machines. To show the Samba shares that are available, use the smbclient command with the -L flag, followed by the machine name or its IP address. This tool is included in the samba-client package.

You will be prompted for root’s password on the remote host:

# smbclient -L 192.168.0.10

To mount Samba network shares on the local client you will first need to install the cifs-utils package:

# yum update && yum install cifs-utils

Then use the following syntax on the command line:

# mount -t cifs -o credentials=/path/to/credentials/file //remote_host/samba_share /local/directory

which, in our case, translates to:

# mount -t cifs -o credentials=~/.smbcredentials //192.168.0.10/gacanepa /mnt/samba

where .smbcredentials:

username=gacanepa

password=XXXXXX

is a hidden file inside root’s home (/root/) with permissions set to 600, so that no one else but the owner of the file can read or write to it.
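One way to create such a file without ever leaving it readable by others is sketched below. A scratch directory stands in for /root so the example can be tried safely; the username and the XXXXXX placeholder are the ones used in this article, and the variable names are arbitrary.

```shell
#!/bin/sh
# Create a credentials file with owner-only permissions.
cred_dir=$(mktemp -d)
cred_file="$cred_dir/.smbcredentials"

# Running under umask 077 means the file is created without group or
# world access, so there is no window in which others could read it.
(
    umask 077
    printf 'username=%s\npassword=%s\n' 'gacanepa' 'XXXXXX' > "$cred_file"
)

# Set the mode explicitly as well, in case the file already existed.
chmod 600 "$cred_file"

# The mode column of the listing should start with -rw-------
ls -l "$cred_file"
```

For the real setup, point cred_file at /root/.smbcredentials and run the commands as root.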

Please note that the samba_share is the name of the Samba share as returned by smbclient -L remote_host as shown above.

Now, if you need the Samba share to be available automatically on system boot, add a valid entry to the /etc/fstab file as follows:

//remote_host/samba_share /local/directory cifs options 0 0

The variables remote_host, /samba_share, /local/directory, and options (which is optional) are the same ones used when manually mounting a Samba share from the command line. Following the definitions given in our previous example:

//192.168.0.10/gacanepa /mnt/samba cifs credentials=/root/.smbcredentials,defaults 0 0

Conclusion

In this article we have explained how to set up ACLs in Linux, and discussed how to mount CIFS and NFS network shares in a RHEL 7 client.

I recommend that you practice these concepts and even mix them (go ahead and try to set ACLs in mounted network shares) until you feel comfortable. If you have questions or comments feel free to use the form below to contact us anytime. Also, feel free to share this article through your social networks.

RHCSA Series: Securing SSH, Setting Hostname and Enabling Network Services – Part 8

As a system administrator you will often have to log on to remote systems to perform a variety of administration tasks using a terminal emulator. You will rarely sit in front of a real (physical) terminal, so you need to set up a way to log on remotely to the machines that you will be asked to manage.

In fact, that may be the last thing that you will have to do in front of a physical terminal. For security reasons, using Telnet for this purpose is not a good idea, as all traffic goes through the wire in unencrypted, plain text.

In addition, in this article we will also review how to configure network services to start automatically at boot and learn how to set up network and hostname resolution statically or dynamically.

Installing and Securing SSH Communication

For you to be able to log on remotely to a RHEL 7 box using SSH, you will have to install the openssh, openssh-clients and openssh-server packages. The following command will install not only the remote login program, but also the secure file transfer tool and the remote file copy utility:

# yum update && yum install openssh openssh-clients openssh-server

Note that it’s a good idea to install the server counterparts as you may want to use the same machine as both client and server at some point or another.

After installation, there are a couple of basic things that you need to take into account if you want to secure remote access to your SSH server. The following settings should be present in the /etc/ssh/sshd_config file.

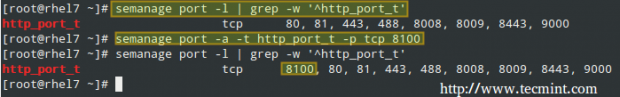

1. Change the port on which the sshd daemon listens from 22 (the default value) to a high port (2000 or greater), but first make sure the chosen port is not being used.

For example, let’s suppose you choose port 2500. Use netstat in order to check whether the chosen port is being used or not:

# netstat -npltu | grep 2500

If netstat does not return anything, you can safely use port 2500 for sshd, and you should change the Port setting in the configuration file as follows:

Port 2500
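A plain grep for 2500 can also match unrelated text, such as a process ID of 2500 or a socket on port 25000. The sketch below checks only the local address column for an exact port match. The helper name port_in_use is hypothetical, and the sample is made-up output in the usual netstat -nplt layout, chosen to include both of those false positives.

```shell
#!/bin/sh
# port_in_use PORT: read netstat-style output on stdin and succeed if some
# socket's local address (column 4) ends in :PORT.
port_in_use() {
    awk -v port="$1" '$4 ~ (":" port "$") { found = 1 } END { exit !found }'
}

# Illustrative sample; the PIDs and program names are invented.
sample='Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      2500/sshd
tcp        0      0 127.0.0.1:25000         0.0.0.0:*               LISTEN      1811/example'

if printf '%s\n' "$sample" | port_in_use 2500; then
    echo "port 2500 is already in use"
else
    echo "port 2500 looks free"
fi
```

On a live system you would pipe netstat -nplt into port_in_use instead of the sample text.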

2. Only allow protocol 2:

Protocol 2

3. Configure the authentication timeout to 2 minutes, do not allow root logins, and restrict to a minimum the list of users which are allowed to login via ssh:

LoginGraceTime 2m

PermitRootLogin no

AllowUsers gacanepa

4. If possible, use key-based instead of password authentication:

PasswordAuthentication no

RSAAuthentication yes

PubkeyAuthentication yes
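Since sshd uses the first value it finds for each keyword, it is worth confirming that your settings are not shadowed by an earlier line in the file. The following sketch looks up the first active occurrence of a keyword. It works on a scratch copy rather than /etc/ssh/sshd_config, and get_setting is a hypothetical helper, not an OpenSSH tool.

```shell
#!/bin/sh
# Write the directives discussed above to a scratch file for inspection.
conf=$(mktemp)
cat > "$conf" <<'EOF'
#Port 22
Port 2500
Protocol 2
LoginGraceTime 2m
PermitRootLogin no
AllowUsers gacanepa
PasswordAuthentication no
PubkeyAuthentication yes
EOF

# get_setting FILE KEY: print the value of the first uncommented KEY line.
# Keywords are matched case-insensitively, as sshd does.
get_setting() {
    awk -v key="$2" 'tolower($1) == tolower(key) { print $2; exit }' "$1"
}

for key in Port PermitRootLogin PasswordAuthentication; do
    echo "$key = $(get_setting "$conf" "$key")"
done
```

To inspect the real configuration, pass /etc/ssh/sshd_config as the first argument; running sshd -t as root afterwards checks the file for syntax errors before you restart the service.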