mshell Workflow Patterns — Reference Guide – Part I (p1-p13).

mshell Workflow Guide, February 22nd, 2026.

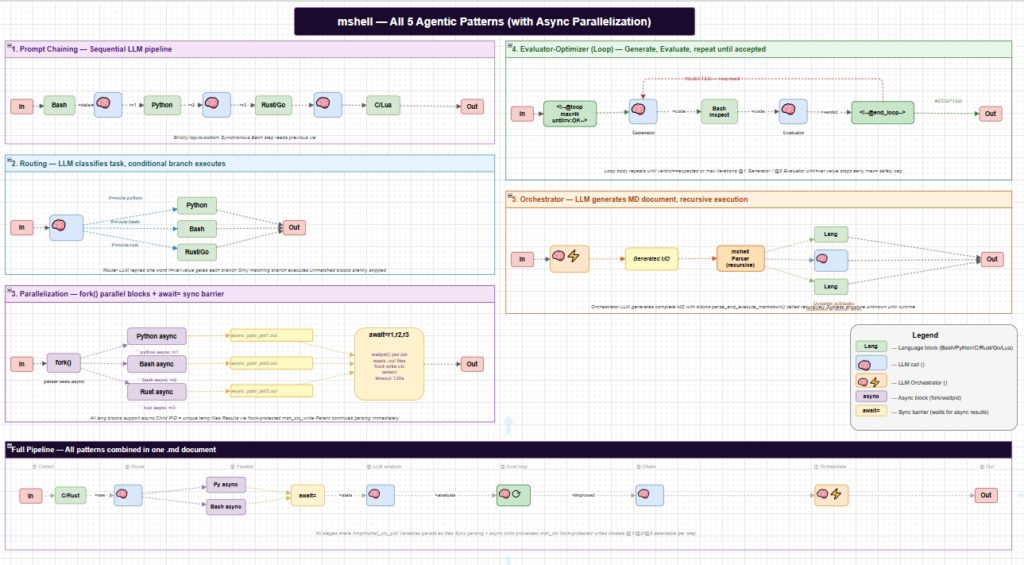

Implementation of all five canonical agentic workflow patterns in mshell

This diagram illustrates the complete implementation of all five canonical agentic workflow patterns in mshell, a polyglot shell environment powered by AI and mathematics. It combines 7 programming languages (Bash, Python, C, C++, Rust, Go, Lua); 3 LLM vendor backends (Ollama, Claude, OpenAI, or a connection through the llm Linux evaluation framework); up to 3 different models active simultaneously within a single workflow; direct execution of pre-written code blocks in any supported language; and native mshell commands, all orchestrated from plain Markdown documents.

Pattern 1 — Prompt Chaining shows a sequential pipeline where language blocks and LLM calls alternate, each step consuming the previous output via <var and producing the next via >var. Different models (@1, @2, @3) can be assigned to different steps in the same chain.
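The chaining idea can be sketched in a few lines of Python. This is only an illustration of the data flow, not mshell's implementation: the session is modeled as a dictionary, and `run_chain`, `compute`, `fake_llm_summarize`, and `shout` are hypothetical names standing in for language blocks and LLM calls.

```python
from typing import Callable, Dict, List, Tuple

# One step = (input variable, output variable, function), mirroring <var and >var.
Step = Tuple[str, str, Callable[[str], str]]


def run_chain(steps: List[Step], session: Dict[str, str]) -> Dict[str, str]:
    """Run steps strictly in order; each one consumes an earlier step's output."""
    for input_var, output_var, func in steps:
        session[output_var] = func(session[input_var])
    return session


# Hypothetical stand-ins: a real chain would run code blocks or call LLM backends.
def compute(raw: str) -> str:
    return ",".join(str(int(x) ** 2) for x in raw.split(","))


def fake_llm_summarize(text: str) -> str:
    return f"summary of [{text}]"


def shout(text: str) -> str:
    return text.upper()


if __name__ == "__main__":
    result = run_chain(
        [
            ("raw", "squares", compute),
            ("squares", "summary", fake_llm_summarize),
            ("summary", "final", shout),
        ],
        {"raw": "1,2,3"},
    )
    print(result["final"])
```

Every intermediate value stays in the session, so a later step could read any earlier variable, not just the one immediately before it.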

Pattern 2 — Routing demonstrates LLM-driven conditional branching: a router model classifies the task and emits a single keyword; the if=var:value fence attribute gates execution so only the matching language branch runs.
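A rough Python sketch of the gating logic follows. It assumes the condition is a plain `var:value` string compared for equality against a session variable; `fake_router`, `gate_matches`, `run_routed`, and `BRANCHES` are hypothetical names, and the router is a trivial rule rather than a model.

```python
from typing import Callable, Dict, List, Tuple


def fake_router(task: str) -> str:
    """Hypothetical stand-in for the router model: emits exactly one keyword."""
    return "math" if any(ch.isdigit() for ch in task) else "text"


def gate_matches(condition: str, session: Dict[str, str]) -> bool:
    """Evaluate a 'var:value' condition against the session variables."""
    var, _, expected = condition.partition(":")
    return session.get(var) == expected


Branch = Tuple[str, Callable[[str], str]]


def run_routed(task: str, branches: List[Branch]) -> List[str]:
    """Classify once, then run only the branches whose gate matches."""
    session = {"route": fake_router(task)}
    return [block(task) for condition, block in branches
            if gate_matches(condition, session)]


# Two illustrative branches, each gated on the router's keyword.
BRANCHES: List[Branch] = [
    ("route:math", lambda t: f"python branch handled: {t}"),
    ("route:text", lambda t: f"bash branch handled: {t}"),
]

if __name__ == "__main__":
    print(run_routed("sum 2 and 3", BRANCHES))
    print(run_routed("say hello", BRANCHES))
```

Because the router emits a single keyword, at most one of the mutually exclusive gates matches and the other branches are skipped entirely.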

Pattern 3 — Parallelization is the newest addition: the async fence attribute triggers a fork(), launching the block in a child process. Results are written to uniquely named temp files (keyed by parent/child PID pair) and collected at an await=var1,var2 barrier via waitpid(). Writes to the shared session context (/tmp/mshell_ctx_<pid>/) are protected by flock().
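The fork, result-file, and barrier mechanics can be approximated with Python's `os` and `fcntl` modules on a POSIX system. This is a sketch under assumptions: the result file naming, the shared log file, and the helper names `result_path`, `run_async`, and `await_all` are invented for illustration and do not describe mshell's actual file layout.

```python
import fcntl
import os
import tempfile
from typing import Callable, List


def result_path(tmpdir: str, parent_pid: int, child_pid: int) -> str:
    """Unique result file keyed by the parent/child PID pair (assumed naming)."""
    return os.path.join(tmpdir, f"result_{parent_pid}_{child_pid}.txt")


def run_async(func: Callable[[int], int], arg: int, tmpdir: str, log_path: str) -> int:
    """Fork a child that runs func(arg), writes its result file, and exits."""
    parent_pid = os.getpid()
    pid = os.fork()
    if pid == 0:  # child process
        status = 1
        try:
            with open(result_path(tmpdir, parent_pid, os.getpid()), "w") as f:
                f.write(str(func(arg)))
            # Shared file: serialize appends with an exclusive advisory lock.
            with open(log_path, "a") as log:
                fcntl.flock(log, fcntl.LOCK_EX)
                log.write(f"{os.getpid()} done\n")
                log.flush()
                fcntl.flock(log, fcntl.LOCK_UN)
            status = 0
        finally:
            os._exit(status)  # never fall through into the parent's code path
    return pid


def await_all(pids: List[int], tmpdir: str) -> List[str]:
    """Barrier: wait for each child in turn, then collect its result file."""
    results = []
    for pid in pids:
        os.waitpid(pid, 0)
        with open(result_path(tmpdir, os.getpid(), pid)) as f:
            results.append(f.read())
    return results


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log_file = os.path.join(tmp, "shared.log")
        children = [run_async(lambda n: n * n, n, tmp, log_file) for n in (2, 3, 4)]
        print(await_all(children, tmp))
```

Keying each result file by the PID pair means children never write to the same path, so only the genuinely shared file needs a lock.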

Pattern 4 — Evaluator-Optimizer implements iterative refinement via <!--@loop max=N until=var:value--> / <!--@end_loop-->. A generator model produces output, an evaluator model scores it, and the loop continues until the verdict matches the expected value or the safety cap is reached.
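The loop semantics (stop when the verdict matches, or when the cap of N iterations is hit) can be sketched as follows. The `refine` function and the two `fake_*` stand-ins are hypothetical; real generator and evaluator steps would be model calls.

```python
from typing import Callable, Tuple


def refine(
    generate: Callable[[str], str],
    evaluate: Callable[[str], Tuple[str, str]],
    max_iters: int,
    until: str = "pass",
) -> Tuple[str, int, bool]:
    """Loop until the evaluator's verdict equals `until` or the cap is reached.

    Returns (last candidate, iterations used, whether the verdict matched).
    """
    candidate, feedback = "", ""
    for attempt in range(1, max_iters + 1):
        candidate = generate(feedback)
        verdict, feedback = evaluate(candidate)
        if verdict == until:
            return candidate, attempt, True
    return candidate, max_iters, False


# Hypothetical stand-ins for the generator and evaluator models.
def fake_generator(feedback: str) -> str:
    return "draft" + feedback


def fake_evaluator(candidate: str) -> Tuple[str, str]:
    if candidate.endswith("++"):
        return "pass", ""
    # Ask for one more improvement marker than the candidate already has.
    return "fail", candidate[len("draft"):] + "+"


if __name__ == "__main__":
    print(refine(fake_generator, fake_evaluator, max_iters=5))
    print(refine(fake_generator, fake_evaluator, max_iters=2))
```

The third return value lets the caller distinguish an accepted result from one that merely ran out of iterations.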

Pattern 5 — Orchestrator uses <!--@Nx_md--> to have an LLM generate a complete Markdown document at runtime, which is then executed recursively by parse_and_execute_markdown(). The subtask structure is entirely dynamic and unknown at authoring time.
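The recursive shape of this pattern can be shown with a deliberately tiny model. The sketch below does not parse Markdown and is not mshell syntax: it uses an invented line format (`RUN <step>` and `PLAN <task>`), and `execute_document`, `fake_planner`, and the depth limit are all assumptions made for illustration.

```python
from typing import Callable, List


def execute_document(
    doc: str,
    planner: Callable[[str], str],
    depth: int = 0,
    max_depth: int = 3,
) -> List[str]:
    """Execute a toy document; a PLAN line expands into a generated sub-document."""
    if depth > max_depth:
        raise RecursionError("generated documents nested too deeply")
    log: List[str] = []
    for line in doc.splitlines():
        line = line.strip()
        if line.startswith("PLAN "):
            # The planner returns a new document, which is executed recursively.
            generated = planner(line[len("PLAN "):])
            log.extend(execute_document(generated, planner, depth + 1, max_depth))
        elif line.startswith("RUN "):
            log.append(f"{'  ' * depth}ran {line[len('RUN '):]}")
    return log


def fake_planner(task: str) -> str:
    """Hypothetical stand-in for the orchestrator model: one RUN line per subtask."""
    return "\n".join(f"RUN {part}" for part in task.split(" and "))


if __name__ == "__main__":
    for entry in execute_document("RUN setup\nPLAN fetch and parse\nRUN report", fake_planner):
        print(entry)
```

The depth limit is a design choice for the sketch: since the generated document is unknown in advance, some bound on nesting guards against a plan that keeps producing further plans.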

The Full Pipeline at the bottom shows all five patterns composing naturally in a single .md document, sharing session state through the context directory.

Because mshell supports all five patterns natively in plain Markdown (across seven programming languages, multiple LLM vendors and models, executable code blocks, and native shell commands), it serves as a universal agentic execution environment that requires no external orchestration framework.

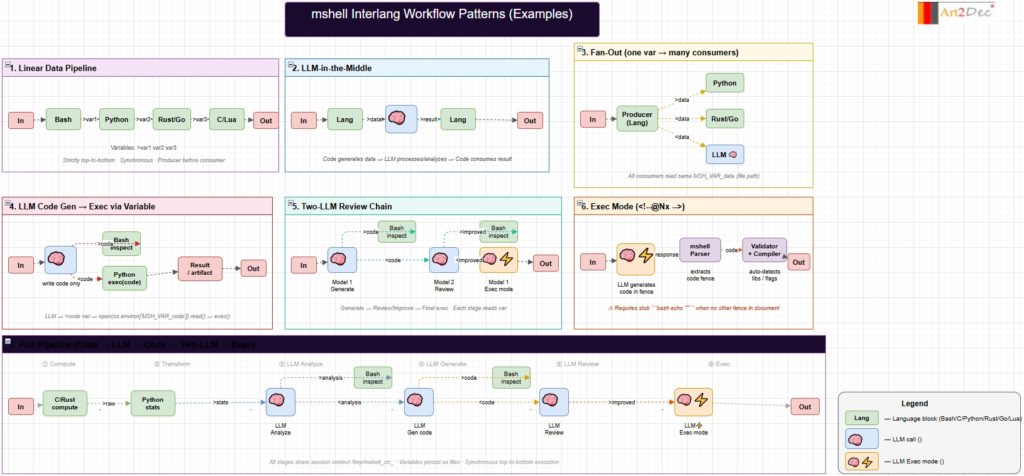

mshell Interlang synchronous Workflow Patterns

This diagram documents the core workflow patterns available in the mshell Ecosystem — an AI-powered polyglot shell that executes Markdown documents containing mixed-language code blocks and inline LLM directives. The diagram covers seven patterns, arranged from simple to complex.

The first three patterns (Linear Data Pipeline, LLM-in-the-Middle, Fan-Out) represent foundational data flow: code blocks in different languages pass data to each other through named session variables (>var / <var), with an optional LLM processing step in the middle, or a single producer writing to multiple consumers simultaneously.

The next two patterns (LLM Code Gen → Exec via Variable, Two-LLM Review Chain) show more advanced LLM integration: a model generates executable code that is stored in a variable and then run via exec(), and a multi-model pipeline where Model 1 generates, Model 2 reviews and improves, and Model 1 finalizes in exec mode — mirroring a human code review cycle.

Exec Mode (<!--@Nx-->) is a unique mshell primitive: the LLM response is intercepted by the mshell Parser, the code fence is extracted, and the Validator automatically resolves compiler flags and library dependencies before running the binary.
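The two steps described here, pulling the code fence out of a model response and deriving linker flags from its include directives, might look roughly like this in Python. The regular expressions, the `INCLUDE_FLAGS` table, and the function names are assumptions for illustration; mshell's Parser and Validator may work quite differently.

```python
import re
from typing import List, Optional, Tuple

FENCE = "`" * 3  # built at runtime so this example nests cleanly inside Markdown

FENCE_RE = re.compile(
    rf"^{FENCE}([\w+-]*)[^\n]*\n(.*?)^{FENCE}\s*$",
    re.DOTALL | re.MULTILINE,
)
INCLUDE_RE = re.compile(r"^\s*#\s*include\s*<([^>]+)>", re.MULTILINE)

# Hypothetical header-to-flag table; a real validator would need far more entries.
INCLUDE_FLAGS = {
    "math.h": ["-lm"],
    "pthread.h": ["-pthread"],
    "GL/gl.h": ["-lGL"],
    "GL/glut.h": ["-lglut", "-lGL"],
}


def extract_first_fence(response: str) -> Optional[Tuple[str, str]]:
    """Return (language tag, code) for the first fenced block, or None."""
    match = FENCE_RE.search(response)
    if match is None:
        return None
    return match.group(1), match.group(2)


def infer_flags(source: str) -> List[str]:
    """Map #include directives to linker flags, preserving order, no duplicates."""
    flags: List[str] = []
    for header in INCLUDE_RE.findall(source):
        for flag in INCLUDE_FLAGS.get(header, []):
            if flag not in flags:
                flags.append(flag)
    return flags


if __name__ == "__main__":
    reply = (
        "Here is the program:\n"
        + FENCE + "c\n#include <math.h>\n#include <GL/glut.h>\n"
        + "int main(void) { return 0; }\n" + FENCE + "\nEnjoy."
    )
    lang, code = extract_first_fence(reply)
    print(lang, infer_flags(code))
```

Only the first fenced block is taken, so any prose the model adds before or after the code is ignored.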

The bottom-spanning Full Pipeline combines all stages into a single chain: C/Rust computes raw data → Python transforms it into statistics → LLM analyzes → LLM generates visualization code → LLM reviews → Exec mode compiles and runs — all driven from one .md file without any manual editing.

All patterns execute strictly top-to-bottom and share a session context at /tmp/mshell_ctx_<pid>, where variables persist as plain files for the duration of the process. These examples cover synchronous usage only, without any extensions.

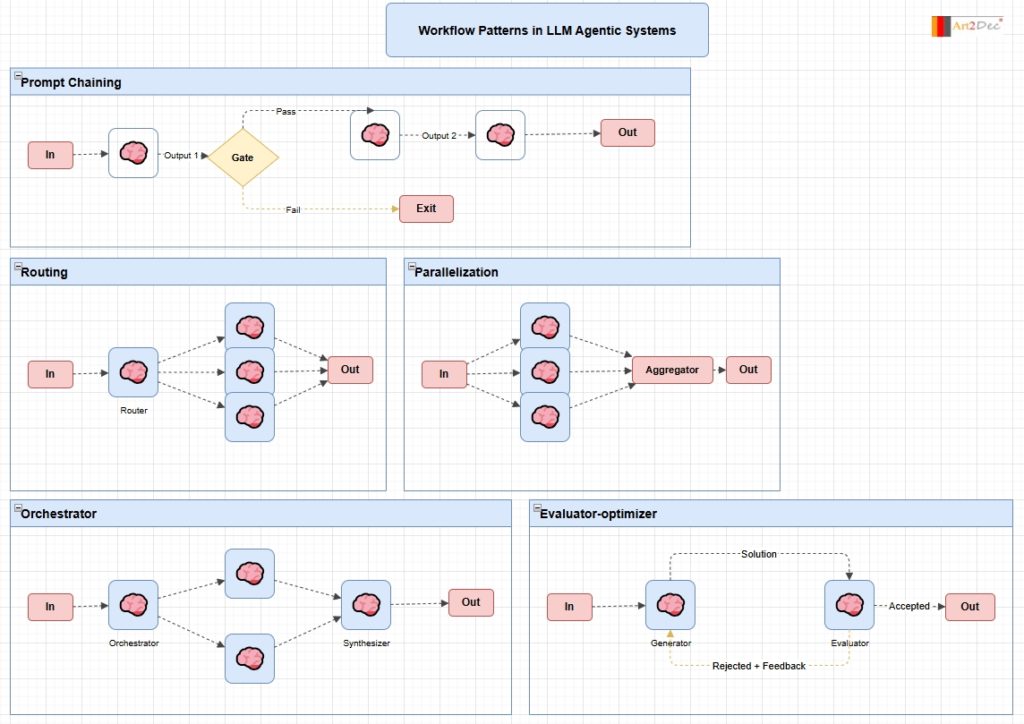

Workflow Patterns in LLM Agentic Systems

This diagram illustrates five canonical architectural patterns for building multi-agent systems with Large Language Models, following the taxonomy popularized in agentic AI research.

Prompt Chaining is the simplest pattern: a sequence of LLM calls where each output feeds the next input. A Gate node introduces conditional logic — on Pass the chain continues through additional LLM stages, on Fail the flow exits early. This is the foundation of any multi-step reasoning pipeline.

Routing uses a dedicated Router LLM to classify the input and dispatch it to one of several specialized downstream agents. Only one branch executes, making this suitable for scenarios where different input types require fundamentally different handling.

Parallelization fans the input out to multiple LLM agents running independently, then collects their outputs through an Aggregator. This is used to increase throughput, generate diverse perspectives, or split a large task into independent subtasks.

Orchestrator extends parallelization with explicit coordination: a central Orchestrator LLM plans and delegates subtasks to worker agents, then a Synthesizer LLM merges the results into a coherent final output. This pattern is common in complex research or code generation pipelines.

Evaluator-Optimizer implements an iterative refinement loop: a Generator LLM produces a candidate solution, an Evaluator LLM scores it, and if the result is rejected the feedback is sent back to the Generator for another attempt. The loop continues until the Evaluator accepts the output, enabling self-correction without human intervention.

Two LLM Pipeline experiment.

This document is an experimental report on a two-model LLM pipeline built with the mshell Ecosystem — an AI-mathematical powered shell developed by Art2Dec SoftLab that supports C, C++, Rust, Go, Python, Lua, Bash and native mshell scripting. The experiment demonstrates how two AI models can collaborate within a single Markdown document to generate, review, improve, and execute a C/OpenGL 3D solar system visualization — without any manual code editing. The pipeline passes code between models through mshell’s Interlang session variable system, with the mshell Validator automatically resolving compiler flags from include directives. The document includes the full pipeline Markdown source, all three versions of the generated code, a step-by-step execution log, and five practical conclusions about multi-model collaboration — including the observation that models evaluate code more critically when reviewing work they did not generate themselves.

Simple example of how to use the mshell Ecosystem.

This note is a practical example of a code generation session within the mshell Ecosystem, an AI-and-Mathematics powered shell environment that supports 7 programming languages (C, C++, Rust, Go, Python, Lua, and Bash) plus native mshell scripting. The session demonstrates how a user interacts with an LLM through a conversational prompt to generate a C/OpenGL application featuring three perpetually bouncing balls rendered in gold, silver, and copper.

Beyond single-language code generation, mshell uses Markdown as the foundation for building documentation, multi-language pipelines, and interlanguage solutions. The core idea is that code blocks in different languages within a single .md document can exchange data through a session variable system — one block writes its output to a named variable, and the next block in any other language reads it as input. This means, for example, a C program can compute data, pass it to Python for statistical processing, then send the result to an LLM for analysis, and finally display the conclusion in Bash — all within one document, executed sequentially. LLM model calls can also be embedded inline, supporting Ollama, OpenAI, and Claude backends.

mshell — Inter-Language Execution Reference Guide.

mshell — Inter-Language Markdown Execution Environment Reference Guide:

mshell reimagines the Markdown document as an active execution environment rather than static text. A single .md file can contain code blocks in seven languages — Bash, Python, C, C++, Rust, Go, and Lua — that run sequentially and exchange data through session variables. The output of a C block becomes the input of a Python block, which feeds an LLM directive, which passes its response to a Rust analyzer. All in one document, no glue code, no temp files managed by hand.

The core idea: treat a document as a pipeline. Variables written with >varname are captured to session context and read by any subsequent block via MSH_VAR_varname. The mechanism is language-agnostic — every language reads a file path from an environment variable, which is the same simple contract for all seven.
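From the point of view of a single block, the contract amounts to "look up an environment variable, open the file it names". The Python sketch below models both sides under assumptions: the context directory is a temporary directory, each variable is stored in a file named after it, and `write_var` and `read_var` are hypothetical helpers rather than mshell functions.

```python
import os
import tempfile


def write_var(ctx_dir: str, name: str, value: str) -> str:
    """Producer side: store the value as a plain file and publish its path."""
    path = os.path.join(ctx_dir, name)  # assumed layout: one file per variable
    with open(path, "w", encoding="utf-8") as f:
        f.write(value)
    os.environ[f"MSH_VAR_{name}"] = path
    return path


def read_var(name: str) -> str:
    """Consumer side: the environment variable holds a file path, not the data."""
    with open(os.environ[f"MSH_VAR_{name}"], encoding="utf-8") as f:
        return f.read()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as ctx:
        write_var(ctx, "stats", "mean=4.5")
        print(read_var("stats"))
```

Passing a path instead of the value keeps the contract identical for every language, since reading an environment variable and opening a file are available in all seven.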

Different algorithms and tasks have their natural homes in different languages — C for raw performance, Python for data and visualization, Rust for safety-critical logic, Go for concurrency, Lua for lightweight scripting. mshell lets each language do what it does best, passing results seamlessly to the next stage rather than forcing everything into a single environment.

LLM integration is first-class. Inline directives call configured models from Ollama, OpenAI, or Claude without leaving the document. Exec mode goes further: the model generates code and mshell executes it immediately, enabling live visualizations, OpenGL windows, and browser charts produced entirely from a natural language prompt.

The notebook application that ships with mshell adds a GTK-based editor with direct PDF export — making the same .md file serve as both a runnable program and a formatted technical document. No separate toolchain, no pandoc pipeline required.

Tested and working on Ubuntu, Debian, Raspberry Pi ARM64, other Linux distributions, and macOS Sequoia (plus some earlier macOS releases).

What makes it unusual is the combination of polyglot execution, LLM as a first-class pipeline stage, and documentation generation, all from a single file format that is itself human-readable. If you have any questions about the Reference Guide, you can ask directly here on LinkedIn or send me an e-mail.

8 bytes (64 items) Python Snippets Collection.

About This Collection

This is a curated set of 64 practical Python code snippets designed for learning and quick reference. Each snippet demonstrates a specific programming concept, algorithm, or common task you’ll encounter in real-world Python development. The documentation was generated with mshell v.1.4.1 (a shell for AI and Mathematics), and the .md file containing the code was verified to run under mshell v.1.4.1.

What you’ll find here:

String manipulation and text processing techniques

Numeric operations, type conversions, and mathematical functions

List operations, comprehensions, and functional programming patterns

Control flow structures (loops, conditionals, pattern matching)

Functions, lambdas, decorators, and closures

Object-oriented programming basics (classes, inheritance, instance vs class members)

Pattern generation and algorithmic challenges

Python-specific features (iterators, generators, *args, **kwargs)

Who is this for:

Beginners learning Python fundamentals

Developers transitioning from other languages

Anyone preparing for coding interviews

Programmers looking for quick syntax references

All code blocks have been verified to run without errors in Python 3.12+. Simply copy, paste, and execute to see immediate results.