Systemd is a system and service manager for Linux systems: an init daemon replacement designed to start processes in parallel at system boot. It is now supported in a number of current mainstream distributions, including Fedora, Debian, Ubuntu, openSUSE, Arch, RHEL, and CentOS.

Earlier on, we explained the story behind ‘init’ and ‘systemd’, where we discussed what the two daemons are, why ‘init’ technically needed to be replaced with ‘systemd’, and the main features of systemd.

One of the main advantages of systemd over other common init systems is its support for centralized management of system and process logging using a journal. In this article, we will learn how to manage and view log messages under systemd using the journalctl command in Linux.

Important: Before moving further in this guide, you may want to learn how to manage ‘Systemd’ services and units using ‘Systemctl’ command, and also create and run new service units in systemd using shell scripts in Linux. However, if you are okay with all the above, continue reading through.

Configuring Journald for Collecting Log Messages Under Systemd

journald is a daemon that gathers and writes journal entries from the entire system. These are essentially boot messages, messages from the kernel, and messages from syslog or various applications. It stores all of them in a central location: the journal file.

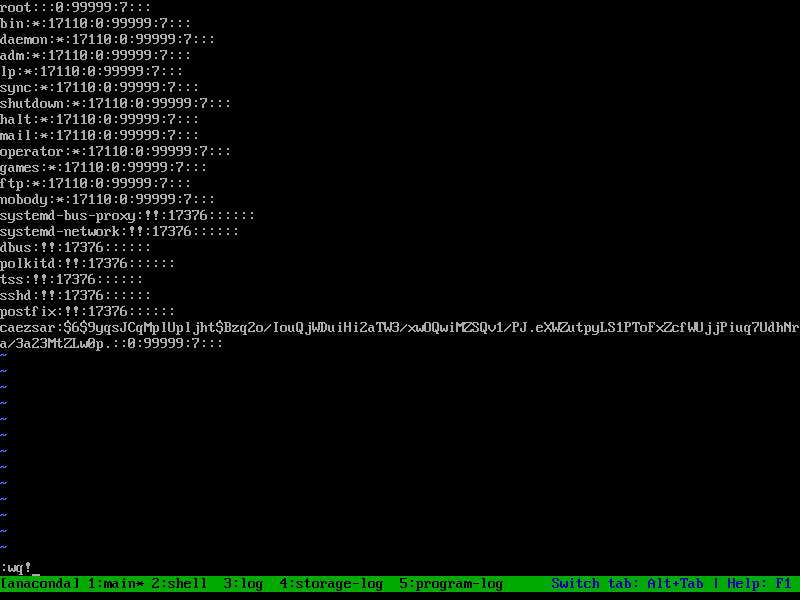

You can control the behavior of journald via its default configuration file, /etc/systemd/journald.conf. The commented-out entries in this file show the defaults, which are set at compile time. You may change these values to suit your local environment.

Below is a sample of what the file looks like, viewed using the cat command.

$ cat /etc/systemd/journald.conf

Journald Configuration File

# See journald.conf(5) for details.

[Journal]

#Storage=auto

#Compress=yes

#Seal=yes

#SplitMode=uid

#SyncIntervalSec=5m

#RateLimitInterval=30s

#RateLimitBurst=1000

#SystemMaxUse=

#SystemKeepFree=

#SystemMaxFileSize=

#SystemMaxFiles=100

#RuntimeMaxUse=

#RuntimeKeepFree=

#RuntimeMaxFileSize=

#RuntimeMaxFiles=100

#MaxRetentionSec=

#MaxFileSec=1month

#ForwardToSyslog=yes

#ForwardToKMsg=no

#ForwardToConsole=no

#ForwardToWall=yes

#TTYPath=/dev/console

#MaxLevelStore=debug

#MaxLevelSyslog=debug

#MaxLevelKMsg=notice

#MaxLevelConsole=info

#MaxLevelWall=emerg

Note that packages may install and use configuration snippets in /usr/lib/systemd/*.conf.d/, and runtime configuration can be found in /run/systemd/journald.conf.d/*.conf. You may not necessarily need either of these.

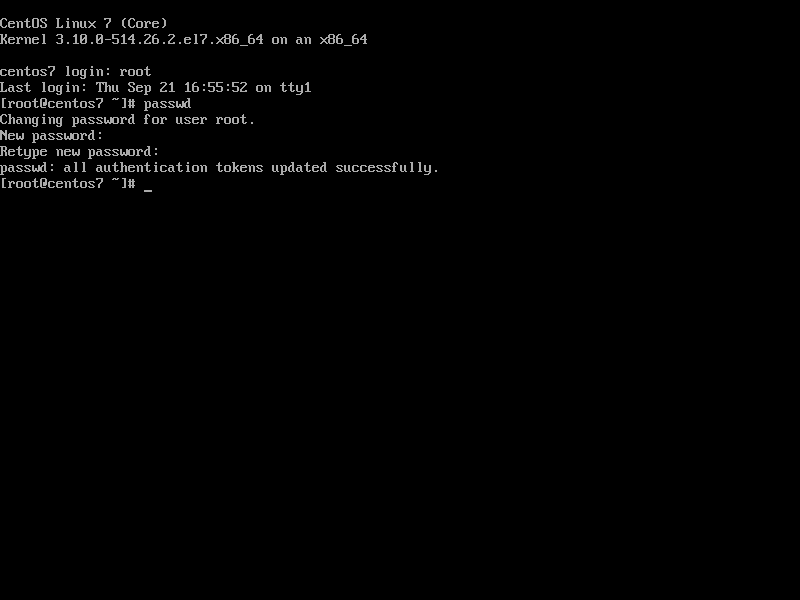

Enable Journal Data Storage On Disk

A number of Linux distributions, including Ubuntu and its derivatives such as Linux Mint, do not enable persistent storage of boot messages on disk by default.

It is possible to enable this by setting the “Storage” option to “persistent” as shown below. This will create the /var/log/journal directory and all journal files will be stored under it.

$ sudo vi /etc/systemd/journald.conf

OR

$ sudo nano /etc/systemd/journald.conf

[Journal]

Storage=persistent
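Instead of editing the main file, the same setting can also live in a drop-in file under /etc/systemd/journald.conf.d/. The sketch below is only an illustration: the function name write_journald_dropin and the file name 50-persistent.conf are our own choices, and it writes to a scratch directory so you can inspect the result before copying it into place as root.

```shell
# write_journald_dropin DIR: write a drop-in that enables persistent storage.
# DIR is a parameter so the sketch can be tried against a scratch directory;
# on a real system it would be /etc/systemd/journald.conf.d (needs root).
write_journald_dropin() {
    mkdir -p "$1" || return 1
    # The file name 50-persistent.conf is a hypothetical choice.
    cat > "$1/50-persistent.conf" <<'EOF'
[Journal]
Storage=persistent
EOF
}

# Try it against a temporary directory first and show the result.
scratch="$(mktemp -d)"
write_journald_dropin "$scratch"
cat "$scratch/50-persistent.conf"
```

Whichever way you change the setting, restart the journal daemon afterwards (sudo systemctl restart systemd-journald) so that it picks up the new configuration.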

For additional settings, you can read the meaning of every option that can be configured under the “[Journal]” section by typing.

$ man journald.conf

Setting Correct System Time Using Timedatectl Command

For reliable log management under systemd using the journald service, ensure that the time settings, including the timezone, are correct on the system.

In order to view the current date and time settings on your system, type.

$ timedatectl

OR

$ timedatectl status

Local time: Thu 2017-06-15 13:29:09 EAT

Universal time: Thu 2017-06-15 10:29:09 UTC

RTC time: Thu 2017-06-15 10:29:09

Time zone: Africa/Kampala (EAT, +0300)

Network time on: yes

NTP synchronized: yes

RTC in local TZ: no

To set the correct timezone and possibly system time, use the commands below.

$ sudo timedatectl set-timezone Africa/Kampala

$ sudo timedatectl set-time "13:50:00"
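One thing to keep in mind: timedatectl set-time typically refuses to change the clock while automatic (network) time synchronization is active. As a rough sketch, the helper below (ntp_on is our own name) checks status text for the “Network time on: yes” line seen in the sample output above. Other systemd releases may label this field differently, so treat the pattern as an assumption.

```shell
# ntp_on: read timedatectl-style status text on stdin and succeed if it
# contains the "Network time on: yes" line shown in the sample output above.
# Assumption: other systemd releases may label this field differently.
ntp_on() {
    grep -Eq '^[[:space:]]*Network time on:[[:space:]]*yes[[:space:]]*$'
}

# Only query the live system when timedatectl is available.
if command -v timedatectl >/dev/null 2>&1 &&
   timedatectl status 2>/dev/null | ntp_on; then
    echo "Network time is on: run 'sudo timedatectl set-ntp false' before set-time"
fi
```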

Viewing Log Messages Using Journalctl Command

journalctl is a utility used to view the contents of the systemd journal (which is written by journald service).

To show all collected logs without any filtering, type.

$ journalctl

View Log Messages

-- Logs begin at Wed 2017-06-14 21:56:43 EAT, end at Thu 2017-06-15 12:28:19 EAT

Jun 14 21:56:43 tecmint systemd-journald[336]: Runtime journal (/run/log/journal

Jun 14 21:56:43 tecmint kernel: Initializing cgroup subsys cpuset

Jun 14 21:56:43 tecmint kernel: Initializing cgroup subsys cpu

Jun 14 21:56:43 tecmint kernel: Initializing cgroup subsys cpuacct

Jun 14 21:56:43 tecmint kernel: Linux version 4.4.0-21-generic (buildd@lgw01-21)

Jun 14 21:56:43 tecmint kernel: Command line: BOOT_IMAGE=/boot/vmlinuz-4.4.0-21-

Jun 14 21:56:43 tecmint kernel: KERNEL supported cpus:

Jun 14 21:56:43 tecmint kernel: Intel GenuineIntel

Jun 14 21:56:43 tecmint kernel: AMD AuthenticAMD

Jun 14 21:56:43 tecmint kernel: Centaur CentaurHauls

Jun 14 21:56:43 tecmint kernel: x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]

Jun 14 21:56:43 tecmint kernel: x86/fpu: Supporting XSAVE feature 0x01: 'x87 flo

Jun 14 21:56:43 tecmint kernel: x86/fpu: Supporting XSAVE feature 0x02: 'SSE reg

Jun 14 21:56:43 tecmint kernel: x86/fpu: Supporting XSAVE feature 0x04: 'AVX reg

Jun 14 21:56:43 tecmint kernel: x86/fpu: Enabled xstate features 0x7, context si

Jun 14 21:56:43 tecmint kernel: x86/fpu: Using 'eager' FPU context switches.

Jun 14 21:56:43 tecmint kernel: e820: BIOS-provided physical RAM map:

Jun 14 21:56:43 tecmint kernel: BIOS-e820: [mem 0x0000000000000000-0x00000000000

Jun 14 21:56:43 tecmint kernel: BIOS-e820: [mem 0x0000000000090000-0x00000000000

Jun 14 21:56:43 tecmint kernel: BIOS-e820: [mem 0x0000000000100000-0x000000001ff

Jun 14 21:56:43 tecmint kernel: BIOS-e820: [mem 0x0000000020000000-0x00000000201

Jun 14 21:56:43 tecmint kernel: BIOS-e820: [mem 0x0000000020200000-0x00000000400

View Log messages Based On Boots

You can display a list of boot numbers (relative to the current boot), their IDs, and the timestamps of the first and last message corresponding to the boot with the --list-boots option.

$ journalctl --list-boots

-1 9fb590b48e1242f58c2579defdbbddc9 Thu 2017-06-15 16:43:36 EAT—Thu 2017-06-15 1

0 464ae35c6e264a4ca087949936be434a Thu 2017-06-15 16:47:36 EAT—Thu 2017-06-15 1

To view the journal entries from the current boot (number 0), use the -b switch like this (same as the sample output above).

$ journalctl -b

To see the journal from the previous boot, use the -1 relative pointer with the -b option as below.

$ journalctl -b -1

Alternatively, use the boot ID like this.

$ journalctl -b 9fb590b48e1242f58c2579defdbbddc9
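If you would rather not copy boot IDs by hand, the listing can be parsed. This is a minimal sketch, assuming the column layout shown in the --list-boots output above (offset first, boot ID second); boot_id_for is a hypothetical helper name.

```shell
# boot_id_for OFFSET: read `journalctl --list-boots` output on stdin and
# print the boot ID (second column) of the line whose first column is OFFSET.
boot_id_for() {
    awk -v want="$1" '$1 == want { print $2 }'
}

# Usage on a systemd host:
#   journalctl -b "$(journalctl --list-boots | boot_id_for -1)"
```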

Filtering Log Messages Based On Time

To display timestamps in Coordinated Universal Time (UTC), add the --utc option as follows.

$ journalctl --utc

To see all of the entries since a particular date and time, e.g. June 15th, 2017 at 8:15 AM, type this command.

$ journalctl --since "2017-06-15 08:15:00"

$ journalctl --since today

$ journalctl --since yesterday
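The --since option can be paired with --until to close the window at the other end. The wrapper below is only a sketch (journal_for_day is our own name): it checks that its first argument looks like a date and then asks journalctl for that whole day, passing any extra options through.

```shell
# journal_for_day YYYY-MM-DD [journalctl options]: show entries logged on
# that calendar day by combining --since and --until.
journal_for_day() {
    case "$1" in
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
        *) echo "usage: journal_for_day YYYY-MM-DD [journalctl options]" >&2
           return 2 ;;
    esac
    day="$1"
    shift
    journalctl --since "$day 00:00:00" --until "$day 23:59:59" "$@"
}

# Usage on a systemd host:
#   journal_for_day 2017-06-15 -u apache2.service
```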

Viewing Recent Log Messages

To view recent log messages (10 by default), use the -n flag as shown below.

$ journalctl -n

$ journalctl -n 20

Viewing Log Messages Generated By Kernel

To see only kernel messages, similar to the dmesg command output, you can use the -k flag.

$ journalctl -k

$ journalctl -k -b

$ journalctl -k -b 9fb590b48e1242f58c2579defdbbddc9

Viewing Log Messages Generated By Units

To view all journal entries for a particular unit, use the -u switch as follows.

$ journalctl -u apache2.service

To narrow this down to the current boot, type this command.

$ journalctl -b -u apache2.service

To show logs from the previous boot, use this.

$ journalctl -b -1 -u apache2.service

Below are some other useful commands:

$ journalctl -u apache2.service

$ journalctl -u apache2.service --since today

$ journalctl -u apache2.service -u nagios.service --since yesterday

Viewing Log Messages Generated By Processes

To view logs generated by a specific process, specify its PID like this.

$ journalctl _PID=19487

$ journalctl _PID=19487 --since today

$ journalctl _PID=19487 --since yesterday
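When several matches name the same field, journalctl treats them as alternatives, so more than one PID can be given at once. As a small sketch, the helper below (pid_matches is a hypothetical name) turns a list of PIDs into _PID= match arguments.

```shell
# pid_matches PID...: print one _PID=<pid> match per argument, one per line.
pid_matches() {
    for pid in "$@"; do
        printf '_PID=%s\n' "$pid"
    done
}

# Usage on a systemd host (nginx is a hypothetical process name):
#   journalctl $(pid_matches $(pgrep nginx)) --since today
```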

Viewing Log Messages Generated By User or Group ID

To view logs generated by a specific user or group, specify its user or group ID (the _UID or _GID field) like this.

$ journalctl _UID=1000

$ journalctl _UID=1000 --since today

$ journalctl _UID=1000 -b -1 --since today
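If you know the user name but not the numeric ID, the standard id command can look it up. The helper below is a minimal sketch (uid_match is our own name) that prints a ready-made _UID= match for a given user.

```shell
# uid_match USER: print a _UID=<n> match for USER, or fail if the user
# does not exist on this system.
uid_match() {
    uid="$(id -u "$1" 2>/dev/null)" || return 1
    printf '_UID=%s\n' "$uid"
}

# Usage on a systemd host (tecmint is the user from the sample output):
#   journalctl "$(uid_match tecmint)" --since today
```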

Viewing Logs Generated By a File

To show all logs generated by a file (possibly an executable), such as the D-Bus executable or bash executables, simply type.

$ journalctl /usr/bin/dbus-daemon

$ journalctl /usr/bin/bash

Viewing Log Messages By Priority

You can also filter output based on message priorities or priority ranges using the -p flag. The possible values are: emerg (0), alert (1), crit (2), err (3), warning (4), notice (5), info (6), and debug (7). A single level shows messages at that priority and all more severe ones:

$ journalctl -p err

To specify a range, use the format below (emerg to warning).

$ journalctl -p 0..4

OR

$ journalctl -p emerg..warning
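The names and numbers are interchangeable, and it is easy to mix them up. As a quick reference, the sketch below (priority_number is a hypothetical helper) maps each priority name to its number.

```shell
# priority_number NAME: print the numeric syslog priority for NAME,
# or fail for an unknown name.
priority_number() {
    case "$1" in
        emerg)   echo 0 ;;
        alert)   echo 1 ;;
        crit)    echo 2 ;;
        err)     echo 3 ;;
        warning) echo 4 ;;
        notice)  echo 5 ;;
        info)    echo 6 ;;
        debug)   echo 7 ;;
        *)       return 1 ;;
    esac
}

# Usage on a systemd host:
#   journalctl -p "$(priority_number emerg)..$(priority_number warning)"
```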

View Log Messages in Real-Time

You can practically watch logs as they are being written with the -f option (similar to tail -f functionality).

$ journalctl -f

Handling Journal Display Formatting

If you want to control the output formatting of the journal entries, add the -o flag and use one of these options: cat, export, json, json-pretty, json-sse, short, short-iso, short-monotonic, short-precise and verbose (check the meaning of each option in the man page).

The cat option shows the actual message of each journal entry without any metadata (timestamp and so on).

$ journalctl -b -u apache2.service -o cat
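Because -o cat prints bare message text, its output is easy to feed into ordinary text tools. As a rough sketch (the helper name top_messages is ours, not part of journalctl), the function below counts repeated lines and lists the most frequent ones.

```shell
# top_messages [N]: read message lines on stdin and print the N most
# frequent ones (default 5), each prefixed with its count.
top_messages() {
    sort | uniq -c | sort -rn | head -n "${1:-5}"
}

# Usage on a systemd host:
#   journalctl -b -u apache2.service -o cat | top_messages 10
```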

Managing Journals On a System

To check the journal file for internal consistency, use the --verify option. If all is well, the output should indicate a PASS.

$ journalctl --verify

PASS: /run/log/journal/2a5d5f96ef9147c0b35535562b32d0ff/system.journal

491f68: Unused data (entry_offset==0)

PASS: /run/log/journal/2a5d5f96ef9147c0b35535562b32d0ff/system@816533ecd00843c4a877a0a962e124f2-0000000000003184-000551f9866c3d4d.journal

PASS: /run/log/journal/2a5d5f96ef9147c0b35535562b32d0ff/system@816533ecd00843c4a877a0a962e124f2-0000000000001fc8-000551f5d8945a9e.journal

PASS: /run/log/journal/2a5d5f96ef9147c0b35535562b32d0ff/system@816533ecd00843c4a877a0a962e124f2-0000000000000d4f-000551f1becab02f.journal

PASS: /run/log/journal/2a5d5f96ef9147c0b35535562b32d0ff/system@816533ecd00843c4a877a0a962e124f2-0000000000000001-000551f01cfcedff.journal

Deleting Old Journal Files

You can also display the current disk usage of all journal files with the --disk-usage option. It shows the sum of the disk usage of all archived and active journal files:

$ journalctl --disk-usage

To delete old (archived) journal files run the commands below:

$ sudo journalctl --vacuum-size=50M #delete files until the disk space they use falls below the specified size

$ sudo journalctl --vacuum-time=1years #delete files so that all journal files contain no data older than the specified timespan

$ sudo journalctl --vacuum-files=4 #delete files so that no more than the specified number of separate journal files remain in storage location

Rotating Journal Files

Last but not least, you can instruct journald to rotate journal files with the --rotate option. Note that this command does not return until the rotation operation is finished:

$ sudo journalctl --rotate

For an in-depth usage guide and options, view the journalctl man page as follows.

$ man journalctl

Do check out some useful articles.

- Managing System Startup Process and Services (SysVinit, Systemd and Upstart)

- Petiti – An Open Source Log Analysis Tool for Linux SysAdmins

- How to Setup and Manage Log Rotation Using Logrotate in Linux

- lnav – Watch and Analyze Apache Logs from a Linux Terminal

That’s it for now. Use the feedback form below to ask any questions or add your thoughts on this topic.
