By Jack M. Germain

Sep 27, 2018 5:00 AM PT

The MakuluLinux distro is now something brand new and very inviting.

MakuluLinux developer Jacque Montague Raymer on Thursday announced the first major release of this year. It is a whole lot more than a mere upgrade of distro packages. MakuluLinux Series 15 offers much more than new artwork and freshly repainted themes and desktop styles.

If you crave a Linux OS that is fresh and independent, MakuluLinux is a must-try Linux solution. The distro itself has been around for a few years and has grown considerably along the way. When it arrived on the Linux scene in 2015, its different approach to implementing Linux OS features disrupted the status quo.

I have reviewed six MakuluLinux releases since 2015. Each one involved a different desktop option. Each one introduced new features and improvements that gave MakuluLinux the potential to challenge long-time major Linux distro communities. Series 15 makes it clear that this South Vietnam-based Linux developer is no longer a small player in the Linux distro game.

MakuluLinux Series 15 is not an update of last year’s editions. It is a complete rip-and-replace rebuild. Series 15 consists of three separate Linux distros: LinDoz is available now; Flash will be released by the end of October; and Core will debut between the end of November and mid-December.

I do mean three *different* distros — not desktop environments you choose within an edition. The first two offerings, LinDoz and Flash, are not new per se. They are rebuilt reincarnations of previous versions. However, LinDoz and Flash are completely reworked from the ground up to give you several big surprises.

MakuluLinux Core, however, is something entirely new. In fact, Raymer had not divulged Core’s development until reaching out to LinuxInsider to discuss the LinDoz release. His plan is to spotlight each distro as a separate entity.

The centerpiece of MakuluLinux Core’s innovative, homegrown user interface is the spin-wheel style circular menu display.

Makulu Unwrapped

Raymer and his developer team spent the last two years building a new base for MakuluLinux Series 15. Their goal is to surpass the functionality of prime competitors such as Linux Mint, Ubuntu and Manjaro, according to Raymer.

All three of the Series 15 editions feature a redesign of the original Ubuntu-based LinDoz OS. The developers spent a major portion of their time over the last two years applying many changes. First, they tackled revamping the LinDoz Ubuntu foundation.

MakuluLinux’s Series 15 LinDoz Edition blends both Microsoft Windows traits and Linux functionality into one OS.

It is “possibly the fastest and most stable base floating around the net at the moment, not to mention it is near bug-free,” Raymer told LinuxInsider. “All three of the builds going live this year will feature this base.”

The new base gets its primary updates from both Debian and Makulu directly. The new strategy is not to borrow the base from Debian or Ubuntu like other big developers. Makulu’s team chose to build its own base instead.

“This way we don’t inherit any known bugs that plague Debian or Ubuntu builds, and since we built the base we know what’s going on inside it,” said Raymer.

It also allowed the developers to optimize builds for speed and stability, he added.

That planning shows. I have been sampling the almost daily builds for the last few weeks. Each one offers a higher level of performance over the previous releases. Stability and speed were evident throughout the process.

What’s Inside

The new base for MakuluLinux resulted from an intense study of the competition, noted Raymer. The developers were determined to surpass Ubuntu; Linux Mint, which borrows from the Ubuntu base except for its separate Linux Mint Debian Edition; and Manjaro Linux, which is a derivative of Arch Linux.

After daily hands-on exposure to the end results of the base changes, I can vouch for the developer team’s success. Clearly, the team members had their priorities in the correct order. The new base is lightning fast. It is also more secure.

Security in Linux is a relative term. The real issue with Linux OSes is how secure you want to make your system. Some distros have higher levels of security that go beyond the upstream patching and package tweaks.

Raymer built in up-to-date security patches along with a reliable firewall and handy virus scanner out of the box. Typical Linux adopters normally do not think about deploying firewalls and virus scanners. Having those two features built into the OS adds to your feeling of safety and instills confidence.

The new base and system structure support a wide range of hardware out of the box. My test bench is stocked with a few old Windows clunkers and some very new rigs. I did not have to give a thought to installing drivers and fiddling with graphics fixes. The audio gear and varied printers and other connected devices I use every day just worked.

One of Raymer’s big demands was a bug-free release. I give him huge credit. I doubt that software can exist without bugs. MakuluLinux does a damn good job of proving that assessment wrong.

Developers can never test every piece of hardware in the wild. That is where the community of build testers and early adopters comes to the rescue. I’m guessing that this large gang of testers found enough bugs in the mix of builds to get a higher percentage of code fixed than generally happens elsewhere.

LinDoz Primer

I always liked the sarcasm hidden in the LinDoz name for the former MakuluLinux flagship OS. It is an ideal alternative to the actual Microsoft Windows platform. However, it does not try to be the next great Windows clone on Linux.

LinDoz does offer the Windows look and feel, thanks to its similar themes. That helps your comfort zone. Still, we are talking Linux here. LinDoz does what the proprietary giant cannot do. LinDoz is highly configurable beyond the look and feel of the themes.

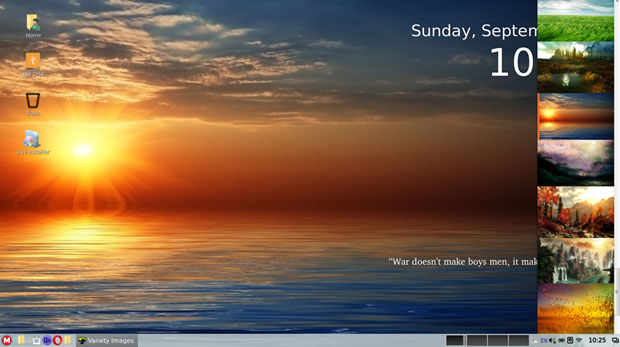

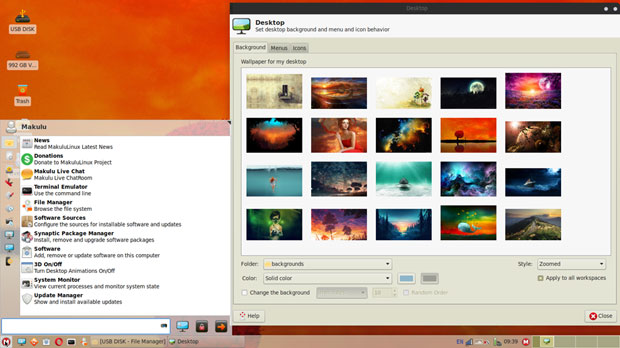

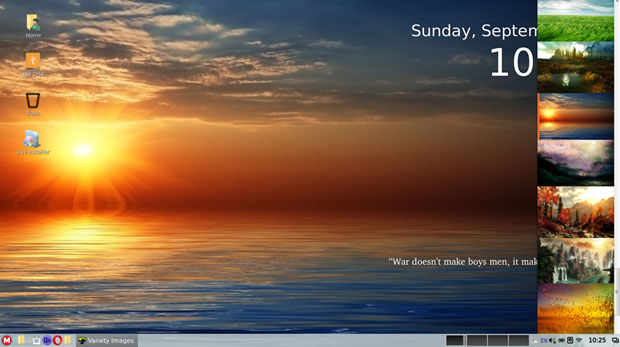

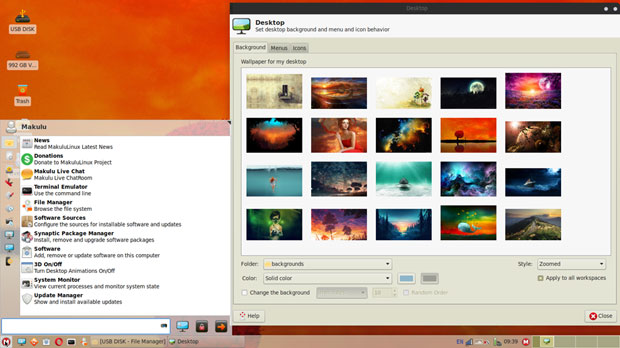

MakuluLinux LinDoz has vivid backgrounds, a classic bottom panel, and a preconfigured workspace switcher applet with a nice collection of desktop desklets.

For instance, LinDoz has a unique menu. It blends both Windows and Linux functionality into one OS. If you are a transplant from Windows World, you will be comfy in the familiar LinDoz surroundings. The Linux World part of the computing experience is so well integrated that you actually enjoy a new and better computing platform that does not come loaded with frustration and useless software.

LinDoz uses a nicely tweaked version of the Cinnamon desktop. I recently reviewed Linux Mint’s Debian-based release, Linux Mint Debian Edition or LMDE. I felt right at home with LinDoz Series 15. It uses a combination of the Debian repositories and its own in-house Makulu repository. Raymer just missed debuting the new LinDoz on Debian ahead of Linux Mint’s release by a matter of weeks.

Flashy and Fast and Splashy

If you fancy a more traditional Linux setting, Flash has much going for it to keep you happy. It runs on the Xfce desktop, only you will swear it is something newer thanks to the snappy integration with other MakuluLinux trappings.

MakuluLinux Flash Edition running on the Xfce desktop is so well tweaked it looks and feels like something new. Flash is fast and splashy.

For example, the desktop has transparency that gives it a modern flavor. The Compiz OpenGL compositing manager is built in, for on-the-fly window dressing and fancy animations. With 3D graphics hardware, you can create fast compositing desktop effects like a minimization animation.

The Flash OS has the old style bottom panel with menu buttons on both sides. If you prefer the old Linux layout still around from 30 years ago, this OS is for you. Unlike many aging Linux distros, though, there is nothing old or sluggish about Makulu Flash. It is fast and splashy.

I especially like how I can turn the Compiz effects off or on with a single click. Flash also exhibits a modern flair that takes the Xfce desktop to a higher level of functionality. You can configure the settings to activate the hot corners features to add actions.

New Core Flagship

What could become the most inviting option in the MakuluLinux OS family — when it becomes available — is Makulu Core. Raymer has this third release positioned to be the new “core” Makulu offering.

Unlike the other two MakuluLinux distros in the Series 15 releases, the Core Edition is a dock-based desktop environment. This approach is innovative and attractive. A bottom dock houses the favorite applications. A side dock along the lower right vertical edge of the screen holds system icons and notifications.

For me, the most exciting eye candy that the Core Edition offers is its dynamic animations that put into play a new way to interact with the OS. The developers forked the classic Xfce desktop as a framework for designing the new Core desktop.

The user interface includes a dual menu and dual dock. It is mouse driven with a touchscreen gesture system.

For instance, the main menu appears in a circular design displaying icons for each software category. Fly over any icon in the circular array to have the contents of that category hang in a larger circle layered over the main menu display in the center of the screen.

The main menu is also based on hot corners. You trigger them by mousing into the top left or bottom left corners of the screen.

MakuluLinux Core is ready to grow and adapt. It is a solid platform for traditional Linux hardware. It will support new computing tools, according to Raymer.

For example, Core will work with touchscreens, and with foldable laptops that turn into tablets. Core will incorporate a way for both to work with ease and without the user having to make any changes.

“We also wanted to make the OS feel a little like Linux, macOS and Microsoft Windows all at the same time, yet offer something new and fresh. This is how we came up with the dual menus, dual dock system. It feels comfortable to use, and it looks and feels a little like everything,” Raymer said.

Bottom Line

Since LinDoz is now officially available for download, I will wrap up with a focus on what makes MakuluLinux LinDoz a compelling computing option. I no doubt will follow the Flash and the Core edition releases when those two distros are available in final form.

One of the more compelling attributes that LinDoz offers is its beautiful form. It is appealing to see. Its themes and wallpapers are stunning.

For the first time, you will be able to install the new LinDoz once and forget about it. LinDoz is now a semi-rolling release. It receives patches directly from Debian Testing and MakuluLinux.

Essential patches are pushed to the system as needed.

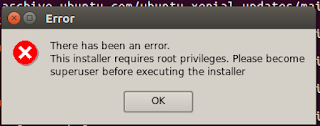

Caution: The LinDoz ISO is not optimized for virtual machines. I tried it and was disappointed. It loads but is extremely slow and mostly nonresponsive. Hopefully, the developer will optimize the ISO soon to provide an additional option for testing or using this distro.

However, I burned the ISO to a DVD and had no issues with the performance in live session. I installed LinDoz to a hard drive with very satisfying results.

Want to Suggest a Review?

Is there a Linux software application or distro you’d like to suggest for review? Something you love or would like to get to know?

Please email your ideas to me, and I’ll consider them for a future Linux Picks and Pans column.

And use the Reader Comments feature below to provide your input!

Jack M. Germain has been an ECT News Network reporter since 2003. His main areas of focus are enterprise IT, Linux and open source technologies. He has written numerous reviews of Linux distros and other open source software.
