Ah, the age-old question: Which Linux distribution is best suited for servers? Typically, when this question is asked, the standard responses pop up:

- RHEL

- SUSE

- Ubuntu Server

- Debian

- CentOS

However, in the name of opening your eyes to something you might not have considered, I'm going to approach this a bit differently. I want to consider a list of distributions that are not only outstanding candidates but also easy to use, and that can serve many functions within your business. In some cases, my choices are drop-in replacements for other operating systems, whereas others require a bit of work to get them up to speed.

Some of my choices are community editions of enterprise-grade servers, which could be considered gateways to purchasing a much more powerful platform. You'll even find one or two duty-specific platforms here. Most importantly, however, what you'll find on this list isn't the usual fare.

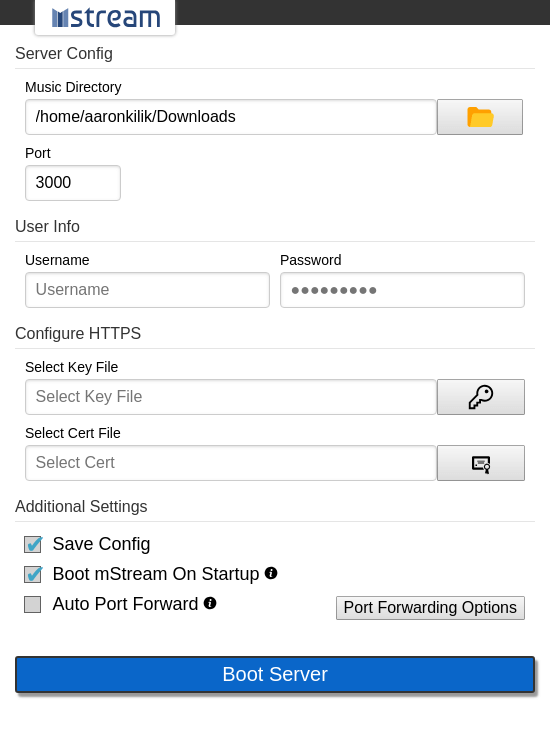

ClearOS

What is ClearOS? For home and small business usage, you might not find a better solution. Out of the box, ClearOS includes tools like intrusion detection, a strong firewall, bandwidth management tools, a mail server, a domain controller, and much more. What makes ClearOS stand out from some of the competition is that it is designed to serve as a simple home and SOHO server with a user-friendly, graphical, web-based interface. From that interface, you'll find an application marketplace (Figure 1) with hundreds of apps (some free, some with an associated cost) that makes it incredibly easy to extend the ClearOS feature set. In other words, you make ClearOS the platform your home or small business needs it to be. Best of all, unlike many other alternatives, you pay only for the software and support you need.

There are three different editions of ClearOS:

- ClearOS Community – the free edition of ClearOS

- ClearOS Home – ideal for home offices

- ClearOS Business – ideal for small businesses, due to the inclusion of paid support

To make the installation of software even easier, the ClearOS marketplace lets you browse apps in three ways:

- By Function (which displays apps according to task)

- By Category (which displays groups of related apps)

- Quick Select File (which allows you to select pre-configured templates to get you up and running fast)

In other words, if you’re looking for a Linux Home, SOHO, or SMB server, ClearOS is an outstanding choice (especially if you don’t have the Linux chops to get a standard server up and running).

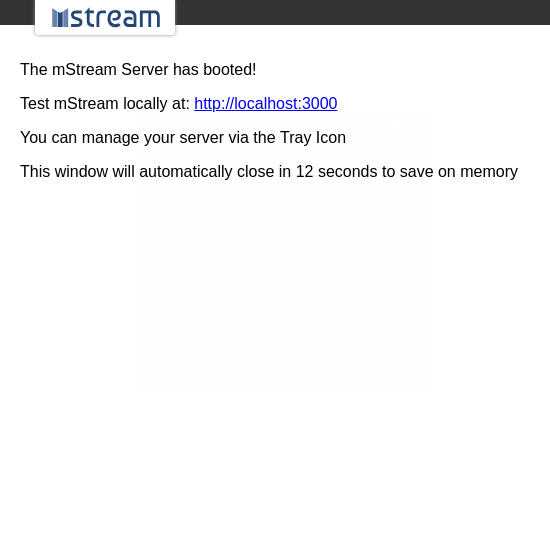

Fedora Server

You've heard of Fedora Linux. Of course you have. It's one of the finest bleeding-edge distributions on the market. But did you know the developers of that excellent Fedora desktop distribution also have a Server edition? The Fedora Server platform is a short-lifecycle, community-supported server OS. This take on the server operating system enables seasoned system administrators, experienced with any flavor of Linux (or any OS at all), to make use of the very latest technologies available in the open source community. There are three key words in that description:

- Seasoned

- System

- Administrators

In other words, new users need not apply. Although Fedora Server is quite capable of handling any task you throw at it, it's going to require someone with a bit more Linux kung fu to make it work, and work well. One very nice inclusion with Fedora Server is that, out of the box, it includes one of the finest open source, web-based interfaces for servers on the market. With Cockpit (Figure 2), you get a quick glance at system resources, logs, storage, and network, as well as the ability to manage accounts, services, applications, and updates.

If you're okay working with bleeding-edge software and want an outstanding admin dashboard, Fedora Server might be the platform for you.

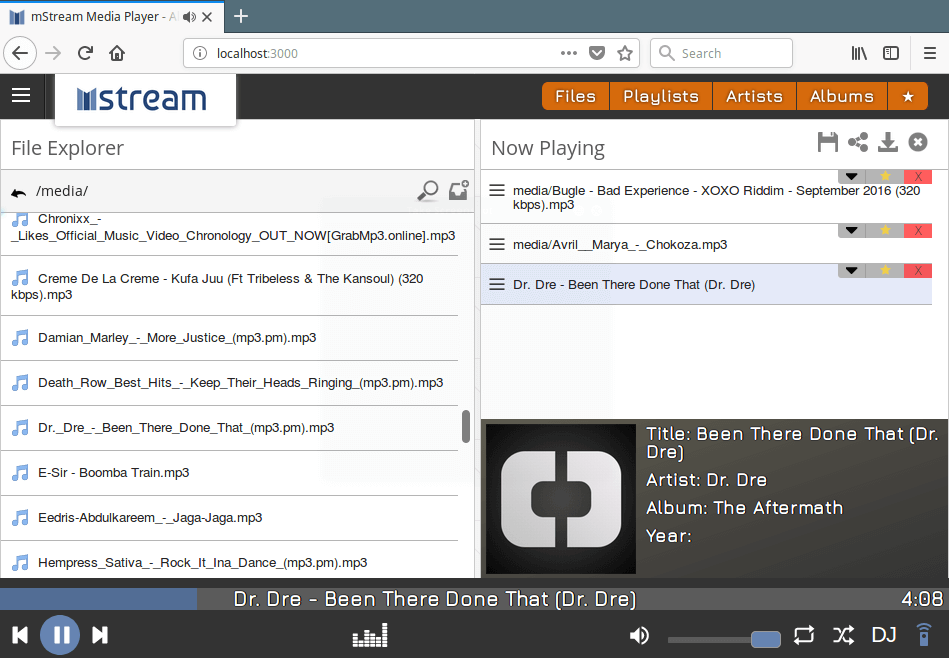

NethServer

NethServer is about as much of a no-brainer drop-in SMB Linux server as you'll find. With the latest iteration of NethServer, your small business will enjoy:

- Built-in Samba Active Directory Controller

- Seamless Nextcloud integration

- Certificate management

- Transparent HTTPS proxy

- Firewall

- Mail server and filter

- Web server and filter

- Groupware

- IPS/IDS

- VPN

All of the included features can be easily configured with a user-friendly, web-based interface that includes single-click installation of modules to expand the NethServer feature set (Figure 3). What sets NethServer apart from ClearOS is that it was designed to make the admin's job easier. To that end, this platform offers much more in the way of flexibility and power. Unlike ClearOS, which is geared more toward home office and SOHO deployments, NethServer is equally at home in small business environments.

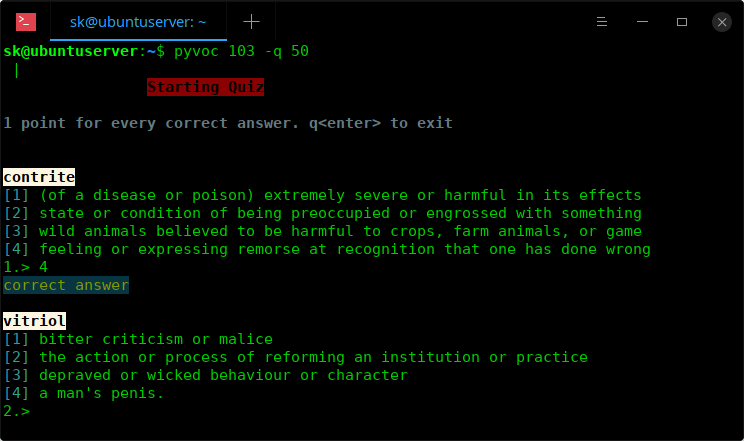

Rockstor

Rockstor is a Linux- and Btrfs-powered advanced Network Attached Storage (NAS) and cloud storage server that can be deployed in home, SOHO, and small- and mid-sized business environments alike. With Rockstor, you get a full-blown NAS/cloud solution with a user-friendly, web-based GUI tool that is just as easy for admins to set up as it is for users to use. Once you have Rockstor deployed, you can create pools, shares, and snapshots, manage replication and users, share files (with the help of Samba, NFS, SFTP, and AFP), and even extend the feature set, thanks to add-ons (called Rock-ons). The list of Rock-ons includes:

- CouchPotato (Movie downloader for Usenet and BitTorrent users)

- Deluge (BitTorrent client)

- EmbyServer (Emby media server)

- Ghost (Publishing platform for professional bloggers)

- GitLab CE (Git repository hosting and collaboration)

- Gogs Go Git Service (Lightweight Git version control server and front end)

- Headphones (An automated music downloader for NZB and Torrent)

- Logitech Squeezebox Server for Squeezebox Devices

- MariaDB (Relational database management system)

- NZBGet (Efficient Usenet downloader)

- OwnCloud-Official (Secure file sharing and hosting)

- Plexpy (Python-based Plex Usage tracker)

- Rocket.Chat (Open Source Chat Platform)

- SABnzbd (Usenet downloader)

- Sickbeard (Internet PVR for TV shows)

- Sickrage (Automatic Video Library Manager for TV Shows)

- Sonarr (PVR for Usenet and BitTorrent users)

- Symform (Backup service)

Rockstor also includes an at-a-glance dashboard that gives admins quick access to all the information they need about their server (Figure 4).

Zentyal

Zentyal is another small business server that does a great job of handling multiple tasks. If you're looking for a Linux distribution that can handle the likes of:

- Directory and Domain server

- Mail server

- Gateway

- DHCP, DNS, and NTP server

- Certification Authority

- VPN

- Instant Messaging

- FTP server

- Antivirus

- SSO authentication

- File sharing

- RADIUS

- Virtualization Management

- And more

Zentyal might be your new go-to. Zentyal has been around since 2004 and is based on Ubuntu Server, so it enjoys a rock-solid base and plenty of applications. And with the help of the Zentyal dashboard (Figure 5), admins can easily manage:

- System

- Network

- Logs

- Software updates and installation

- Users/groups

- Domains

- File sharing

- DNS

- Firewall

- Certificates

- And much more

Adding new components to the Zentyal server is as simple as opening the Dashboard, clicking Software Management > Zentyal Components, selecting what you want to add, and clicking Install. The one issue you might find with Zentyal is that it doesn't offer nearly as many add-ons as you'll find in the likes of NethServer and ClearOS. But the services it does offer, it handles incredibly well.

Plenty More Where These Came From

This list of Linux servers is clearly not exhaustive. What it is, however, is a unique look at five top server distributions you've probably never heard of. Of course, if you'd rather opt for a more traditional Linux server distribution, you can always stick with CentOS, Ubuntu Server, SUSE, Red Hat Enterprise Linux, or Debian… most of which are found on every list of best server distributions on the market. If, however, you're looking for something a bit different, give one of these five distros a try.

Learn more about Linux through the free “Introduction to Linux” course from The Linux Foundation and edX.