In this lesson on the Python NumPy library, we will look at how it lets us work with powerful N-dimensional array objects and the sophisticated functions it provides to manipulate and operate on these arrays. To make this lesson complete, we will cover the following sections:

- What is the Python NumPy package?

- NumPy arrays

- Different operations which can be done over NumPy arrays

- Some more special functions

What is the Python NumPy package?

Simply put, NumPy stands for ‘Numerical Python’, and that is what it aims to deliver: complex numerical operations on N-dimensional array objects, performed easily and intuitively. It is a core library for scientific computing, with functions for linear algebra and statistics.

One of the most fundamental (and attractive) concepts in NumPy is its N-dimensional array object. In the two-dimensional case, we can think of such an array as a collection of rows and columns, much like an MS-Excel sheet. It is possible to convert a Python list into a NumPy array and apply functions to it.

NumPy Array representation

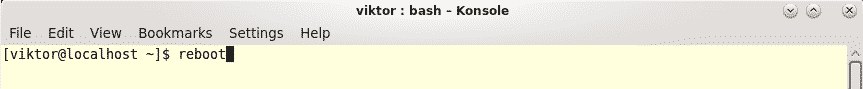

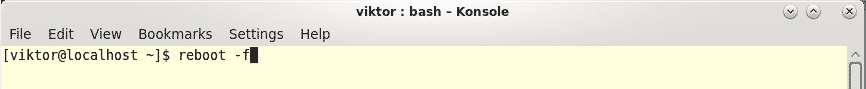

Just a note before starting: we use a virtual environment named numpy for this lesson, which we activate with the following command:

source numpy/bin/activate

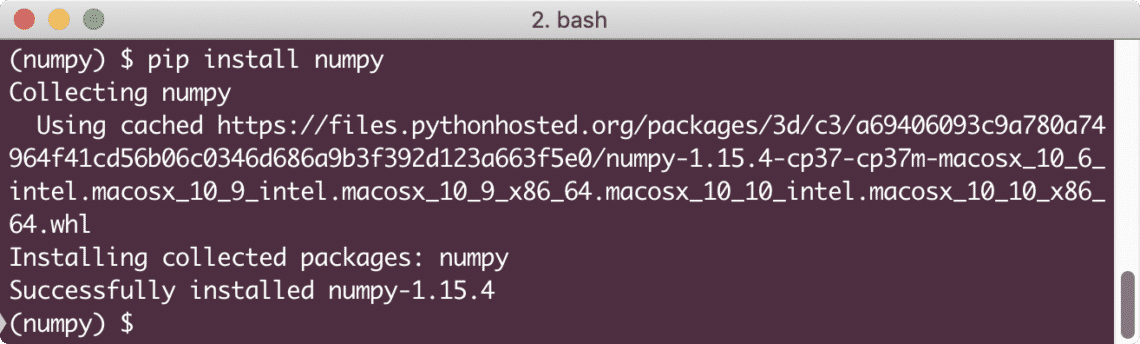

Once the virtual environment is active, we can install the NumPy library inside it so that the examples we create next can be executed:

pip install numpy

We see something like this when we execute the above command:

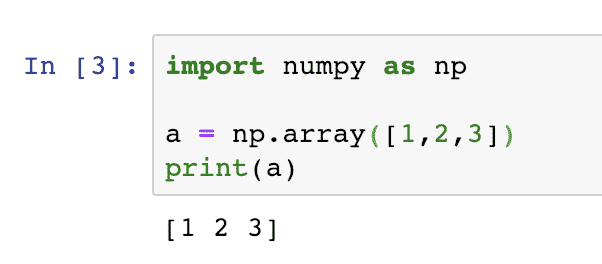

Let’s quickly test if the NumPy package has been installed correctly with the following short code snippet:

import numpy as np

a = np.array([1,2,3])

print(a)

Once you run the above program, you should see the following output:

[1 2 3]

We can also have multi-dimensional arrays with NumPy:

multi_dimension = np.array([(1, 2, 3), (4, 5, 6)])

print(multi_dimension)

This will produce an output like:

[[1 2 3]

[4 5 6]]

You can also use Anaconda to run these examples, which is easier, and that is what we have used above. If you want to install it on your machine, look at the lesson which describes “How to Install Anaconda Python on Ubuntu 18.04 LTS” and share your feedback. Now, let us move on to the various types of operations that can be performed with Python NumPy arrays.

Using NumPy arrays over Python lists

It is fair to ask: when Python already has a sophisticated data structure to hold multiple items, why do we need NumPy arrays at all? NumPy arrays are preferred over Python lists for the following reasons:

- They are convenient for mathematical and compute-intensive operations, thanks to the many NumPy functions that work on them directly

- They are much faster, because items of one type are stored in a contiguous block of memory

- They use less memory
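The speed claim can be checked with a rough timing sketch. The array length and the repeat count below are arbitrary assumptions, and timings vary from machine to machine, so no particular numbers are claimed here:

```python
import timeit

import numpy as np

n = 100_000  # assumed size, chosen only for illustration
python_list = list(range(n))
numpy_arr = np.arange(n)

# Double every item: a list comprehension versus one vectorized expression.
list_seconds = timeit.timeit(lambda: [x * 2 for x in python_list], number=20)
numpy_seconds = timeit.timeit(lambda: numpy_arr * 2, number=20)

print(f"list comprehension: {list_seconds:.4f} s")
print(f"NumPy expression:   {numpy_seconds:.4f} s")

# Both approaches produce the same values.
doubled_list = [x * 2 for x in python_list]
doubled_arr = numpy_arr * 2
```

On most machines the NumPy expression should come out clearly ahead, because the loop over the items runs in compiled code rather than in the Python interpreter.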

Let us prove that NumPy arrays occupy less memory. This can be done by writing a very simple Python program:

import sys

import numpy as np

python_list = range(500)

print(sys.getsizeof(1) * len(python_list))

numpy_arr = np.arange(500)

print(numpy_arr.size * numpy_arr.itemsize)

When we run the above program on a typical 64-bit CPython 3 installation, we will get the following output:

14000

4000

This shows that the integers in the list take more than 3 times as much memory as a NumPy array with the same number of items.
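The calculation above multiplies the size of one integer object by the number of items. A slightly fuller sketch also counts the list container itself and uses the array's nbytes attribute. The explicit int64 dtype is an assumption made here so that the array size is the same on every platform:

```python
import sys

import numpy as np

python_list = list(range(500))
numpy_arr = np.arange(500, dtype=np.int64)

# List: the container (which stores one pointer per item) plus the int objects.
# CPython shares small int objects, so this is an upper-bound style estimate.
list_bytes = sys.getsizeof(python_list) + sum(sys.getsizeof(x) for x in python_list)

# Array: nbytes equals size * itemsize, the raw data buffer only.
array_bytes = numpy_arr.nbytes

print(list_bytes, array_bytes)
```

The exact list figure depends on the Python build, but the array needs only 8 bytes per item.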

Performing NumPy operations

In this section, let us quickly glance over the operations that can be performed on NumPy arrays.

Finding dimensions in array

A NumPy array can have any number of dimensions. We can find the number of dimensions of an array with the following code snippet:

numpy_arr = np.array([(1,2,3),(4,5,6)])

print(numpy_arr.ndim)

We will see the output as “2” as this is a 2-dimensional array.
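As a small sketch of how ndim relates to the shape of an array, the following compares arrays with one, two and three dimensions. The variable names and the example shapes are illustrative assumptions:

```python
import numpy as np

vector = np.array([1, 2, 3])                 # 1 dimension
matrix = np.array([(1, 2, 3), (4, 5, 6)])    # 2 dimensions: 2 rows, 3 columns
cube = np.zeros((2, 3, 4))                   # 3 dimensions

for name, arr in [("vector", vector), ("matrix", matrix), ("cube", cube)]:
    # ndim is always the length of the shape tuple.
    print(name, arr.ndim, arr.shape)
```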

Finding datatype of items in array

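A NumPy array holds items of a single data type, which can be numeric or textual. Let’s now find out the data type of the data an array contains:

# Illustrative array of one-character strings (assumed values)

other_arr = np.array(['a', 'b', 'c'])

print(other_arr.dtype)

numpy_arr = np.array([(1,2,3),(4,5,6)])

print(numpy_arr.dtype)

We used different types of elements in the above code snippet. Here is the output this script will show:

<U1

int64

The first result appears because strings are stored as fixed-width Unicode text (here one character wide). The second is a 64-bit integer, the default integer type on most 64-bit platforms.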
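The data type can also be chosen explicitly or converted afterwards. The following is a minimal sketch with made-up values, using the dtype argument of np.array and the astype method:

```python
import numpy as np

ints = np.array([1, 2, 3], dtype=np.int32)   # request 32-bit integers explicitly
floats = ints.astype(np.float64)             # astype returns a converted copy
mixed = np.array([1, 2.5, 3])                # ints and floats are promoted to float64

print(ints.dtype, floats.dtype, mixed.dtype)  # int32 float64 float64
```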

Reshape items of an array

If a NumPy array consists of 2 rows and 4 columns, it can be reshaped to contain 4 rows and 2 columns. Let’s write a simple code snippet for the same:

original = np.array([['1', 'b', 'c', '4'], ['5', 'f', 'g', '8']])

print(original)

reshaped = original.reshape(4, 2)

print(reshaped)

Once we run the above code snippet, we will get the following output with both arrays printed to the screen:

[['1' 'b' 'c' '4']

['5' 'f' 'g' '8']]

[['1' 'b']

['c' '4']

['5' 'f']

['g' '8']]

Note how NumPy took care of shifting and associating the elements to new rows.
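Two details of reshape are worth a short sketch: one dimension can be given as -1 so that NumPy infers it, and the total number of items must stay the same. The array contents below are illustrative:

```python
import numpy as np

original = np.arange(8).reshape(2, 4)   # 2 rows, 4 columns holding 0..7

inferred = original.reshape(-1, 2)      # -1 lets NumPy infer 4 rows
flat = original.reshape(-1)             # a single dimension of 8 items

print(inferred.shape)   # (4, 2)
print(flat.shape)       # (8,)

# 8 items cannot fill a 3 by 3 array, so NumPy raises ValueError.
try:
    original.reshape(3, 3)
except ValueError as exc:
    print("cannot reshape:", exc)
```

Items are read and written row by row, which is why the second row of the reshaped array continues where the first one ends.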

Mathematical operations on items of an array

Performing mathematical operations on the items of an array is very simple. We will start by writing a simple code snippet to find the maximum, minimum, sum and mean of all items of the array. Here is the code snippet:

numpy_arr = np.array([(1, 2, 3, 4, 5)])

print(numpy_arr.max())

print(numpy_arr.min())

print(numpy_arr.sum())

print(numpy_arr.mean())

print(np.sqrt(numpy_arr))

print(np.std(numpy_arr))

In the last 2 operations above, we also calculated the square root of each item and the standard deviation of all items. The above snippet will provide the following output:

5

1

15

3.0

[[1. 1.41421356 1.73205081 2. 2.23606798]]

1.4142135623730951
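These methods work on the whole array by default, but most of them also accept an axis argument. The following sketch, using an illustrative 2 by 3 array, reduces along columns and along rows:

```python
import numpy as np

grid = np.array([(1, 2, 3), (4, 5, 6)])

total = grid.sum()              # all items: 21
column_sums = grid.sum(axis=0)  # collapse the rows: 5, 7, 9
row_sums = grid.sum(axis=1)     # collapse the columns: 6, 15
row_max = grid.max(axis=1)      # largest item in each row: 3, 6

print(total, column_sums.tolist(), row_sums.tolist(), row_max.tolist())
```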

Converting Python lists to NumPy arrays

If you have been using Python lists in your existing programs and don’t want to change all of that code, you can still use NumPy arrays in your new code, because a Python list is easily converted to a NumPy array. Here is an example:

height = [2.37, 2.87, 1.52, 1.51, 1.70, 2.05]

weight = [91.65, 97.52, 68.25, 88.98, 86.18, 88.45]

# Create 2 numpy arrays from height and weight

np_height = np.array(height)

np_weight = np.array(weight)

Just to check, we can now print the type of one of these variables, which confirms that it is a NumPy array (numpy.ndarray) rather than a list.
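A minimal sketch of such a check, using the height list from this lesson (the choice of np_height rather than np_weight is arbitrary):

```python
import numpy as np

height = [2.37, 2.87, 1.52, 1.51, 1.70, 2.05]
np_height = np.array(height)

print(type(height))      # <class 'list'>
print(type(np_height))   # <class 'numpy.ndarray'>
```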

We can now perform mathematical operations on all the items at once. Let’s see how we can calculate the BMI of the people:

bmi = np_weight / np_height ** 2

# Print the result

print(bmi)

This prints the BMI of every person, computed element-wise in a single expression.

Isn’t that easy and handy? We can even filter data by placing a condition, rather than an index, inside the square brackets. The result contains only the items for which the condition is true.
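A sketch of such a filter on the BMI values follows. The threshold of 23 is an assumption chosen for illustration; the data are the height and weight lists from this lesson:

```python
import numpy as np

height = [2.37, 2.87, 1.52, 1.51, 1.70, 2.05]
weight = [91.65, 97.52, 68.25, 88.98, 86.18, 88.45]

np_height = np.array(height)
np_weight = np.array(weight)
bmi = np_weight / np_height ** 2

threshold = 23              # assumed cut-off, for illustration only
mask = bmi > threshold      # boolean array, one True/False per person
high_bmi = bmi[mask]        # keeps only the items where the mask is True

print(mask)
print(high_bmi)
```

The comparison produces a boolean array of the same length, and indexing with it selects the matching items, here the third, fourth and fifth person.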

Create random sequences & repetitions with NumPy

NumPy has many features for creating sequences and random data and arranging them in a required form, so NumPy arrays are often used to generate test datasets, for example when debugging and testing. For example, to create an array of the integers from 0 up to (but not including) n, we can use the arange function (note the single ‘r’).
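A minimal sketch of this call, where the value of n is an illustrative assumption:

```python
import numpy as np

n = 5  # assumed upper bound, for illustration only
sequence = np.arange(n)

print(sequence)  # [0 1 2 3 4]
```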

The same function also accepts a lower bound, so that the array starts from a number other than 0.
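As a sketch with assumed bounds of 4 and 9, the start value is included and the stop value is not:

```python
import numpy as np

# Start at 4 (included) and stop before 9 (excluded); both values are illustrative.
from_four = np.arange(4, 9)

print(from_four)  # [4 5 6 7 8]
```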

The numbers need not be consecutive; they can advance by a fixed step, passed as a third argument.
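A sketch with an assumed step of 3 between the illustrative bounds 4 and 14:

```python
import numpy as np

# From 4 up to (not including) 14, taking every third number.
stepped = np.arange(4, 14, 3)

print(stepped.tolist())  # [4, 7, 10, 13]
```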

We can also get the numbers in decreasing order with a negative step value, provided the start value is larger than the stop value.
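A sketch of a decreasing sequence, again with illustrative bounds. With a negative step the sequence stops before it reaches the stop value from above:

```python
import numpy as np

# Count down from 14 towards 4 in steps of 3; 4 itself is not reached.
descending = np.arange(14, 4, -3)

print(descending.tolist())  # [14, 11, 8, 5]
```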

It is possible to find n equally spaced numbers between x and y with the linspace function; by default, both end points are included.

Please note that the items are equally spaced only up to floating-point rounding. If an integer data type is requested, the values are converted to whole numbers and the spacing can become uneven, so you should not rely on it in that case.
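The following sketch shows both cases. The ranges and counts are illustrative assumptions; the second call requests an integer dtype to show how the spacing can become uneven:

```python
import numpy as np

# 5 equally spaced floats from 0 to 1, both end points included.
points = np.linspace(0, 1, 5)
print(points.tolist())        # [0.0, 0.25, 0.5, 0.75, 1.0]

# 4 points from 0 to 10 as integers: 3.33... and 6.66... lose their fractions.
int_points = np.linspace(0, 10, 4, dtype=int)
print(int_points.tolist())    # [0, 3, 6, 10]

# The gaps between neighbours are no longer all the same.
int_gaps = np.diff(int_points)
print(int_gaps.tolist())      # [3, 3, 4]
```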

Finally, let us look at how we can generate random numbers with NumPy, which is one of its most used features for testing purposes. We pass NumPy a lower and an upper bound for the random numbers, together with the shape of the array to create.

With a shape of 2 by 2, this creates a two-dimensional NumPy array containing random numbers between 0 and 10. Here is the sample output:

[8 3]]

Please note that, as the numbers are random, the output can differ between two runs even on the same machine.
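One way to sketch this is with NumPy's Generator API, which may differ from the call used for the sample output above. The seed value is an arbitrary assumption; fixing it makes the numbers repeatable, which is often useful in tests:

```python
import numpy as np

# A seeded generator returns the same numbers on every run.
rng = np.random.default_rng(seed=42)

# Integers from 0 (included) up to 10 (excluded), arranged as 2 rows by 2 columns.
random_grid = rng.integers(0, 10, size=(2, 2))
print(random_grid)

# A second generator with the same seed reproduces the same array.
repeat_grid = np.random.default_rng(seed=42).integers(0, 10, size=(2, 2))
```

Leaving out the seed gives different numbers on each run, as described above.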

Conclusion

In this lesson, we looked at various aspects of this computing library, which we can use with Python to solve simple as well as complex mathematical problems that arise in various use cases. NumPy is one of the most important computation libraries when it comes to data engineering and working with numerical data, and definitely a skill we need to have under our belt.
