
The steady crush of data growth is at your doorstep, your storage arrays are showing their age, and it just doesn’t seem like you have the budget, staff or resources to keep up. Whether you recognize it or not, that’s the siren call for Ceph, the open-source distributed storage system designed for performance, reliability and scalability.

The only rub is that, as an IT practitioner familiar with RAID, SANs and proprietary storage solutions of all shapes and sizes, there’s not much about Ceph that feels, well, comfortable. After all, Ceph uses something called “replication” or “erasure coding” instead of RAID; it provides block, object and file storage services all in one; and it scatters data across drives, servers and even geographical locations.

Still, you have that gnawing sense that you need to get on board — even if it feels like the expertise you and your team need is just out of reach.

The real challenge isn’t the technology itself; that commodity stuff — servers, fast networks and loads of drives — is familiar enough. Ceph expertise is really about getting accustomed to abstracting that familiar hardware and willingly handing off routine aspects of the cluster to more automated, DevOps-style approaches. It also helps to get your hands on a cluster of your very own to see just how it works.

SUSE Enterprise Storage can help.

SUSE is a primary contributor to the open-source Ceph project, and we’ve added a lot of upstream features and capabilities that have gone a long way toward shaping the technology for the enterprise. With SUSE Enterprise Storage, we’ve made the technology even more attainable by automating the deployment with Salt and DeepSea, a collection of Salt files for deploying Ceph.

With the latest releases of SUSE Enterprise Storage, you can use DeepSea to deploy Ceph in hours, not days or weeks. With the openATTIC graphical dashboard, newcomers can get a feel for how a Ceph storage cluster works, while more experienced users can use it to manage and maintain the cluster. For example, the dashboard makes adding iSCSI, NFS or other shared storage services straightforward and familiar, which gives you and your team more confidence with the technology.

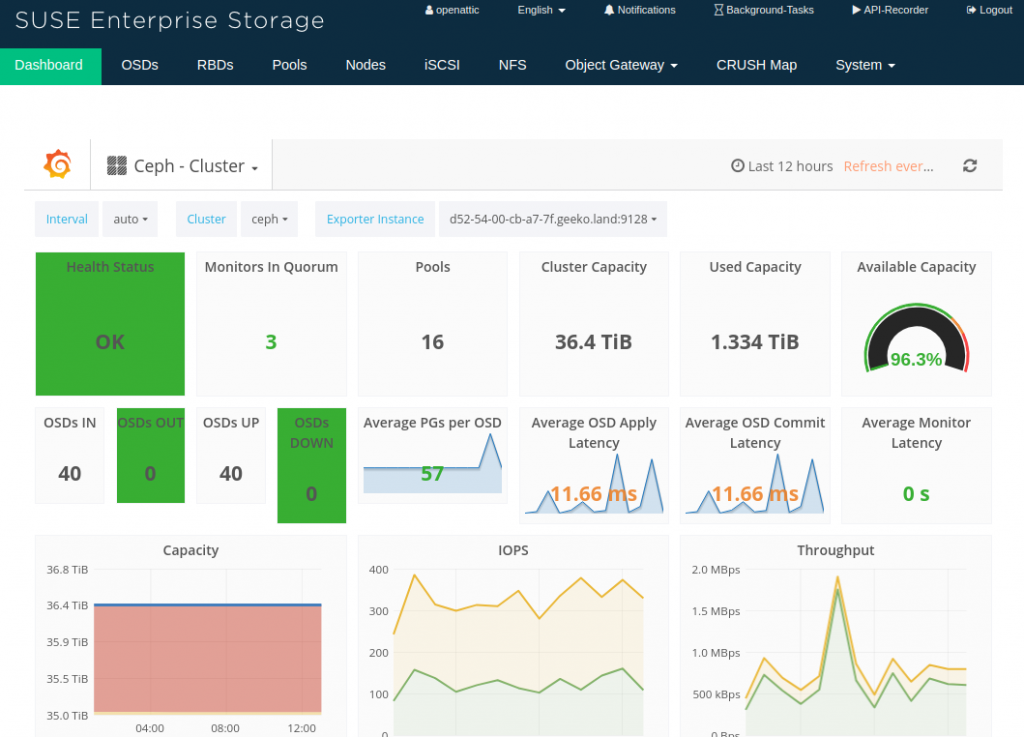

In the image below, you can see how the dashboard offers a real-time view of the cluster, including available storage capacity, health and availability:

The SUSE Enterprise Storage dashboard with openATTIC.

The visual information on the dashboard is just the start. Under the covers, a SUSE Linux Enterprise Server 12 SP3 system runs as the Salt master and controls any number of Salt minions, which provide monitor, manager, storage, RADOS, iSCSI, NFS and other services to your storage cluster.

Instead of wrestling with resources or manually figuring out how to set up an iSCSI gateway, for example, SUSE Enterprise Storage starts by automating the deployment in a predictable, reliable way, then gives you a graphical way to interact with all the components. It also gives you the flexibility to create storage pools and make them available through the gateways you want. Adding other services to your Ceph cluster requires only minor modifications to a straightforward policy.cfg file, which you apply with Salt to add even more capabilities and capacity:

The policy.cfg defines your various nodes, including all your Ceph minions.

In this policy.cfg example, you can see the iSCSI gateway (role-igw) service role applied to any node whose hostname begins with “igw”. Other Ceph cluster roles are assigned to other nodes, which work together to replicate data, set up storage pools and make it all accessible through familiar APIs, the command line and the dashboard.
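If the idea of assigning roles by hostname pattern feels abstract, here is a minimal Python sketch of the matching logic. It is only an illustration, not DeepSea's actual code or file format: the `ROLE_PATTERNS` table, the `roles_for` and `assign_roles` helpers, and every hostname are hypothetical. Only the role-igw role and the “igw” prefix come from the example above; the other role names and patterns are assumptions made for the sake of the sketch.

```python
from fnmatch import fnmatchcase
from typing import Dict, Iterable, List

# Hypothetical role-to-pattern table. Only "role-igw" and the "igw" prefix
# come from the article; the remaining entries are illustrative assumptions.
ROLE_PATTERNS: Dict[str, str] = {
    "role-igw": "igw*",   # iSCSI gateway role for hostnames starting with "igw"
    "role-mon": "mon*",   # assumed monitor role pattern
    "role-mgr": "mon*",   # assumed manager role, co-located with monitors
}


def roles_for(hostname: str) -> List[str]:
    """Return the sorted roles whose glob pattern matches the hostname."""
    return sorted(
        role
        for role, pattern in ROLE_PATTERNS.items()
        if fnmatchcase(hostname, pattern)
    )


def assign_roles(hostnames: Iterable[str]) -> Dict[str, List[str]]:
    """Map each hostname to the roles it would receive."""
    return {host: roles_for(host) for host in hostnames}


if __name__ == "__main__":
    # Hypothetical minion hostnames for a small lab cluster.
    for host, roles in assign_roles(["igw1", "mon1", "data1"]).items():
        print(host, roles)
```

The point of the pattern-based approach is that a new gateway node needs no per-node configuration. Give it a hostname that matches the pattern and it picks up the role the next time the policy is applied.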

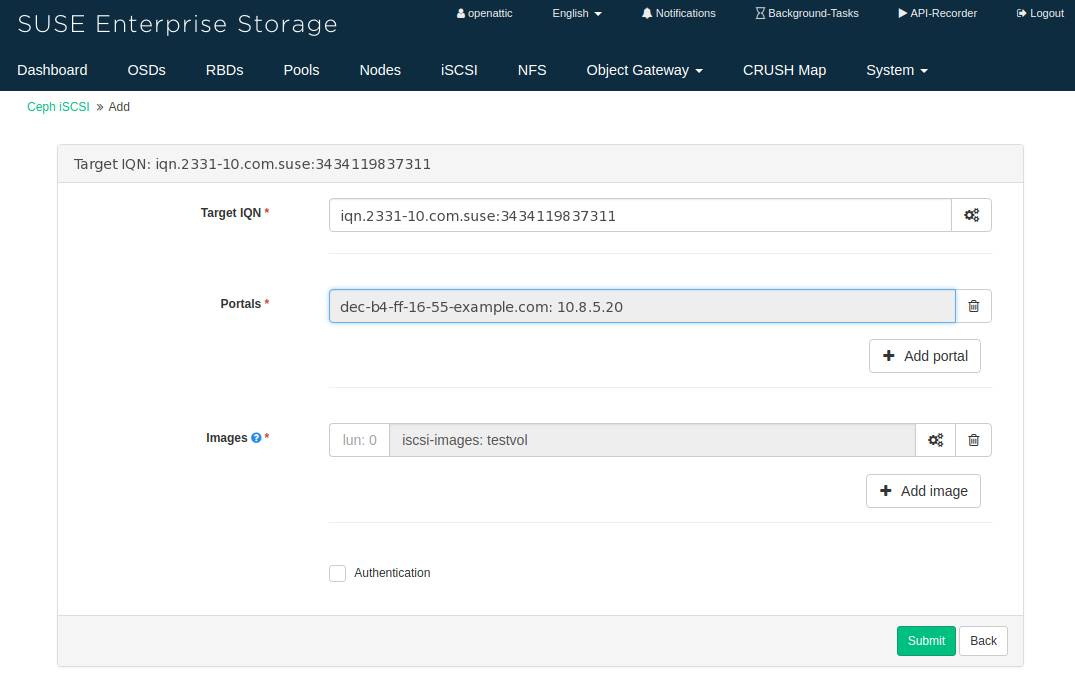

Adding the role-igw role from the example above provides the iSCSI service to your cluster, which lets you add new iSCSI shares from the dashboard at will:

The GUI makes adding iSCSI and other gateways straightforward.

Next steps

Of course, the key to any storage deployment is good planning, and regardless of the tools you use, you need to figure out how your Ceph storage cluster will be used — today and into the future. There’s no shortcut to good planning, but that part should be familiar to anyone who’s managed enterprise storage.

In Part 2 of this SUSE Enterprise Storage series, I’ll show you how to sketch out a small-scale proof-of-concept Ceph plan and deploy SUSE Enterprise Storage in a purely virtual environment. This lab environment won’t be suitable for production purposes, but it will give you a working storage cluster that looks, feels and acts just like a full-blown deployment.
