Kubernetes (pronounced “koo-ber-NET-eez”) is an open-source platform for managing containerized applications, such as those packaged with Docker. Whether you want to automate the deployment of these containers or scale them across multiple hosts, Kubernetes can speed up the process. To do this, it relies on internal components such as the Kubernetes API, and it can be extended with third-party tools that run on top of Kubernetes.

This article explains the basic concepts of Kubernetes and why it is causing such a seismic shift in the server market, with software vendors and cloud providers such as Microsoft Azure and Google Cloud now offering managed Kubernetes services.

Kubernetes: A Brief History

Kubernetes is one of Google’s gifts to the open source community. It grew out of Google’s experience with Borg, an internal cluster manager the company had run for more than a decade. Borg let Google run hundreds of thousands of jobs, each made up of one or more tasks, from thousands of different applications across its clusters; an agent on every machine, called the Borglet, started and monitored those tasks. Borg’s objectives were to use machines efficiently while keeping services highly available, with run-time features that shorten recovery from failures.

The same approach appealed to other companies looking for efficient ways to keep services highly available. In July 2015, alongside the release of Kubernetes 1.0, Google donated the project to the newly formed Cloud Native Computing Foundation (CNCF), which is itself part of the Linux Foundation.

How Kubernetes Works

Borrowing ideas from Borg, Kubernetes groups containers into “pods,” the smallest units that its scheduler places onto machines. Each pod gets its own IP address, which all of its containers share, and the scheduler uses the CPU and memory each container requests to decide where the pod should run. Containers in the same pod can also share storage volumes.
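To make the pod idea concrete, here is a minimal Python sketch that builds a Pod manifest as a plain dictionary and prints it as JSON. The field names (`apiVersion`, `kind`, `metadata`, `spec.containers`, `resources.requests`) follow the Kubernetes Pod API; the helper `make_pod_manifest`, the pod name `web`, the `nginx:1.25` image, and the request values are illustrative assumptions, not recommendations.

```python
import json


def make_pod_manifest(name: str, image: str, cpu: str = "250m", memory: str = "64Mi") -> dict:
    """Build a minimal single-container Pod manifest (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Requests tell the scheduler how much CPU and memory to reserve
                    # on whichever worker node it picks for this pod.
                    "resources": {"requests": {"cpu": cpu, "memory": memory}},
                    "ports": [{"containerPort": 80}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # Redirect this output to pod.json and apply it with `kubectl apply -f pod.json`;
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(make_pod_manifest("web", "nginx:1.25"), indent=2))
```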

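Pods run on machines (physical or virtual) called “worker nodes,” which older documentation also calls “minions.” Because individual pods come and go, Kubernetes puts a Service in front of a group of identical pods and spreads incoming traffic across them; depending on how the cluster’s kube-proxy is configured, backends are picked in round-robin order or at random. A “master node” (today usually called the control plane) manages the entire cluster through the Kubernetes API, deciding which pods should run where. On each worker node, a container runtime such as Docker, or containerd and CRI-O in current releases, pulls images and starts containers.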
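The round-robin idea can be sketched in a few lines of Python. This is a toy model of a Service handing requests to its pods in turn, not how kube-proxy is actually implemented (it programs the node’s networking rules rather than running a loop like this); the class name and the pod IP addresses are hypothetical.

```python
from itertools import cycle
from typing import Sequence


class RoundRobinService:
    """Toy model of a Service spreading requests over pod IPs in turn."""

    def __init__(self, pod_ips: Sequence[str]) -> None:
        if not pod_ips:
            raise ValueError("a service needs at least one backend pod")
        # cycle() repeats the backend list forever: a, b, c, a, b, c, ...
        self._backends = cycle(pod_ips)

    def route(self) -> str:
        # Hand each new request to the next pod in the rotation.
        return next(self._backends)


if __name__ == "__main__":
    svc = RoundRobinService(["10.244.1.5", "10.244.2.7", "10.244.3.9"])
    for request_id in range(5):
        print(request_id, "->", svc.route())
```

In a real cluster the set of backends also changes as pods are created or replaced, which is why the Service, not the client, tracks which pod IPs are currently healthy.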

To talk to the API of a Kubernetes cluster, you use a command-line tool called kubectl. It is central to day-to-day work because nearly every instruction you give the cluster, from deploying an application to inspecting its logs, goes through kubectl to the API server on the master node, which then directs the worker nodes. Mastering kubectl takes some learning, but once you know it, you can start putting Kubernetes clusters to work. Both Kubernetes and Docker are written in the Go programming language.
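Under the hood, kubectl is an HTTP client: a command such as `kubectl get pods -n default` becomes a request to the API server’s `/api/v1/namespaces/default/pods` endpoint. The Python sketch below builds, but does not send, that request using only the standard library. The server address `https://k8s.example.com:6443` and the bearer token are placeholders, and a real client would also need to verify the cluster’s TLS certificate, which this sketch leaves out.

```python
import urllib.request


def list_pods_request(api_server: str, namespace: str, token: str) -> urllib.request.Request:
    """Build the GET request that lists pods in a namespace (roughly what kubectl sends)."""
    url = f"{api_server}/api/v1/namespaces/{namespace}/pods"
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            # Bearer-token authentication; the token value here is a placeholder.
            "Authorization": f"Bearer {token}",
            "Accept": "application/json",
        },
    )


if __name__ == "__main__":
    req = list_pods_request("https://k8s.example.com:6443", "default", "REPLACE_ME")
    # Only print the request; sending it requires real credentials and TLS setup.
    print(req.get_method(), req.full_url)
```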

Applications

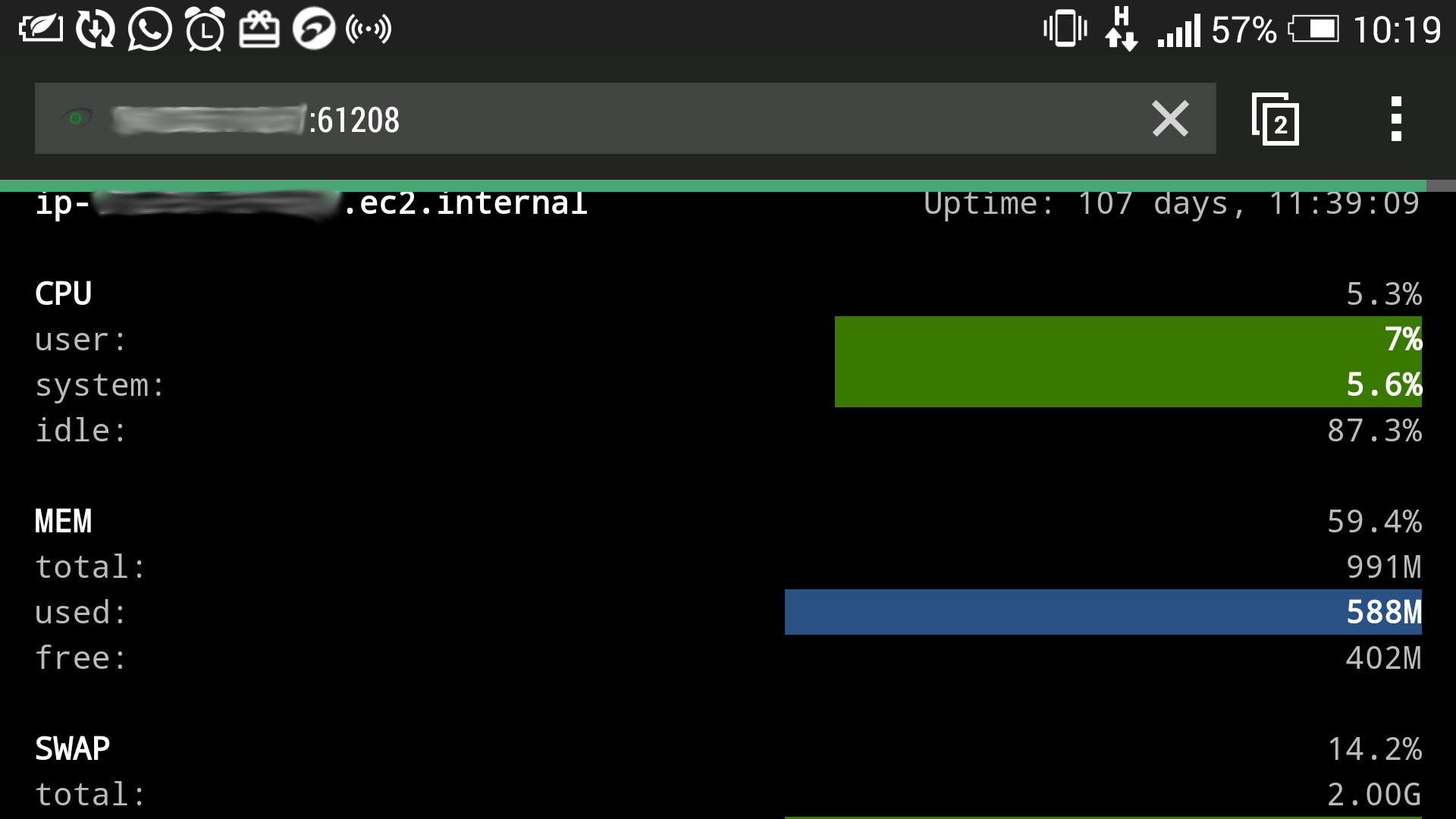

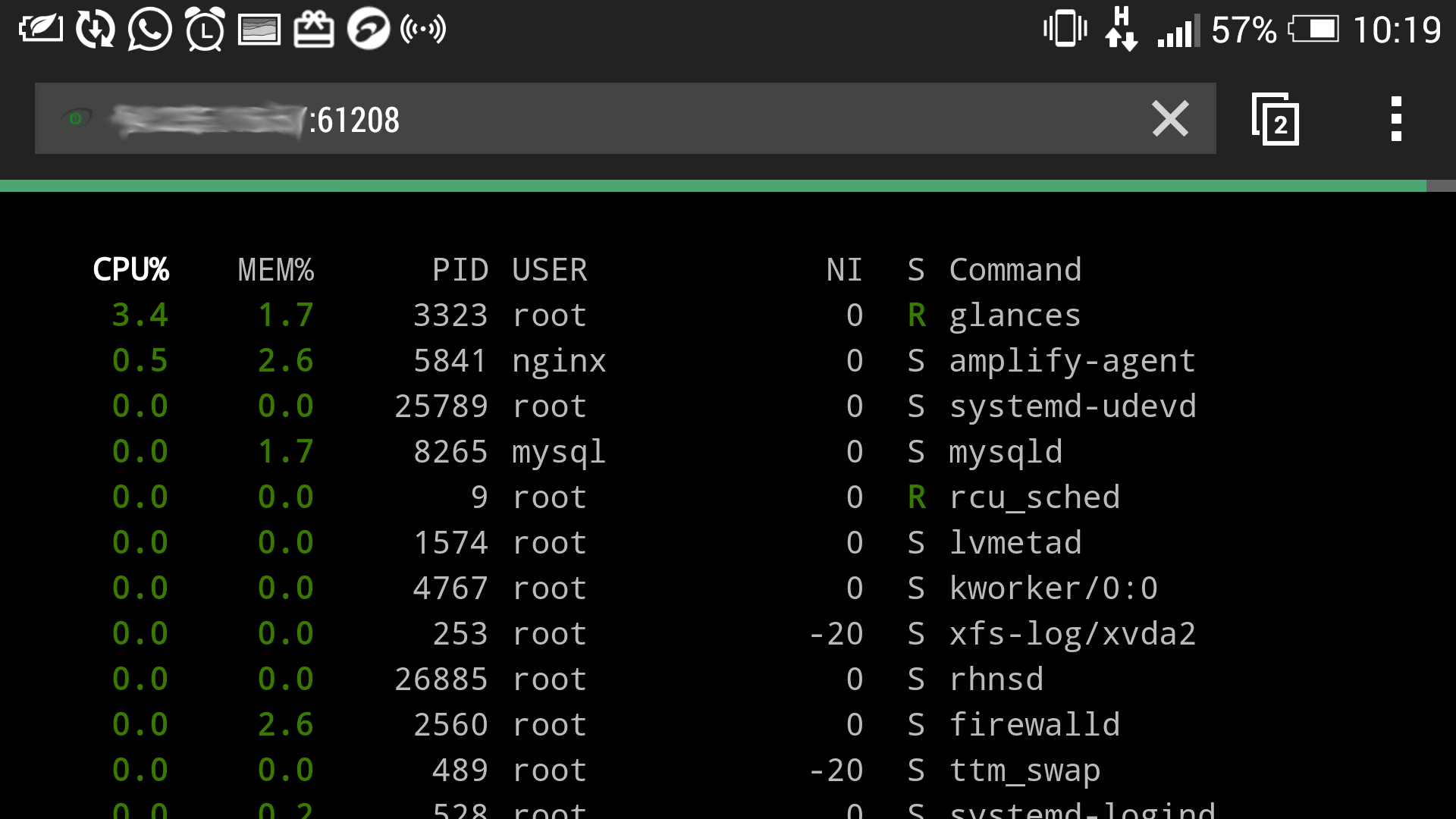

Kubernetes can substantially lower server and data center costs because it packs workloads onto machines efficiently. Some of the common applications of Kubernetes include:

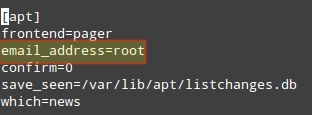

- Managing application servers. Most application servers need security, configuration management, updates, and more, all of which Kubernetes can help manage.

- Automatic rollouts and rollbacks. Kubernetes rolls out changes to your application gradually across many nodes and can roll them back if something goes wrong, so you don’t have to coordinate each node by hand.

- Deploying stateless apps. Web applications that keep no state of their own are easy to run and replicate. For example, Kubernetes can run Nginx web servers as a stateless application deployment.

- Deploying stateful apps. Using persistent volumes for durable storage, Kubernetes can run stateful applications such as a MySQL database.

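- Storing API objects. Kubernetes records every API object, such as pods, Services, and Deployments, in etcd, a distributed key-value store, and it lets applications request durable storage for their own data through PersistentVolumes.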

- Out-of-the-box features. Kubernetes provides service discovery out of the box and integrates with tools for logging, monitoring, and authentication.

- IoT applications. Kubernetes is increasingly used in IoT because of its ability to scale massively.

- Run anywhere. You can run Kubernetes almost anywhere: on bare metal, in virtual machines, in public clouds, or even on a portable cluster that fits inside a suitcase.
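Two of the items above, stateless apps and automatic rollouts, come together in a Deployment. The Python sketch below builds a minimal Deployment manifest for Nginx as a dictionary; the `apps/v1` fields (`replicas`, `selector`, `strategy.rollingUpdate`, `template`) are part of the Kubernetes Deployment API, while the helper name, the `nginx:1.25` image, and the replica and surge values are assumptions chosen for illustration.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build a minimal Deployment for a stateless app such as Nginx (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            # Replace pods a few at a time so the app stays available during a rollout.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_deployment("nginx", "nginx:1.25"), indent=2))
```

After applying the output with `kubectl apply -f`, changing the image and re-applying triggers a rolling update, `kubectl rollout status deployment/nginx` reports its progress, and `kubectl rollout undo deployment/nginx` returns to the previous revision.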

In Summary

The objective of Kubernetes is to use computing resources as fully as possible. Because it orchestrates containers across multiple hosts, it can reschedule work when a node runs short of resources or fails, so individual failures are far less likely to take an application down. Scaling is also simpler: a single command to the master node is enough to change how many copies of an application run, and Kubernetes can adjust that number automatically based on load. For many teams, that is nothing short of revolutionary.
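Automatic scaling is handled by the HorizontalPodAutoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below implements just that rule; it is a simplification that leaves out the real autoscaler’s minimum and maximum replica bounds, stabilization windows, and handling of not-yet-ready pods.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1) -> int:
    """Replica count from the HorizontalPodAutoscaler's core scaling formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the replica count does not flap up and down.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # 4 pods averaging 200m CPU against a 100m target: double the replicas.
    print(desired_replicas(4, 200, 100))  # 8
```

For manual scaling, the one-off command is `kubectl scale deployment/nginx --replicas=5`; `kubectl autoscale deployment nginx --cpu-percent=50 --min=1 --max=10` creates an autoscaler that applies this rule continuously.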

To learn more about Kubernetes, visit its official website, kubernetes.io, which contains tutorials.