This is the latest in our series of articles highlighting essential system tools. These are small, indispensable utilities, useful for system administrators as well as regular users of Linux-based systems. The series examines both graphical and text-based open source utilities. For this article, we’ll look at Krusader, a free and open source graphical file manager. For details of all tools in this series, please check the table at the end of this article.

Krusader is an advanced, twin-panel (commander-style) file manager designed for KDE Plasma. Krusader also runs on other popular Linux desktop environments such as GNOME.

Besides comprehensive file management features, Krusader is almost completely customizable and fast, handles archives seamlessly, and offers a huge feature set.

Krusader is implemented in C++.

Installation

Popular Linux distros provide convenient packages for Krusader, so you shouldn’t need to compile the source code.

If you do want to compile the source code, bear in mind that recent versions of Krusader use libraries like Qt5 and KF5 (KDE Frameworks 5), and don’t work on KDE Plasma 4 or older.

One of our vanilla test machines has neither KDE Plasma nor any KDE applications installed. If you don’t currently use any KDE applications, remember that installing Krusader will drag in many other packages. Krusader’s natural environment is KDE Plasma 5, because it depends on services provided by the KDE Frameworks 5 base libraries.

The image below illustrates this point neatly. Installing Krusader without Plasma requires 36 packages, consuming a whopping 148 MiB of hard disk space.

The image below offers a stark contrast. Here, a different test machine, ‘pluto’, is a vanilla Linux installation running KDE Plasma 5. Installing Krusader doesn’t pull in any other packages and consumes only 14.90 MiB of disk space.

Some of Krusader’s functionality is sourced from external tools. On first run, Krusader searches your $PATH for available tools. Specifically, it checks for a diff utility (kdiff3, kompare or xxdiff), an email client (Thunderbird or KMail), a batch renamer (KRename), and a checksum utility (md5sum). It also searches for (de)compression tools (tar, gzip, bzip2, lzma, xz, lha, zip, unzip, arj, unarj, unace, rar, unrar, rpm, dpkg, and 7z). You’re then presented with a Konfigurator window which lets you configure the file manager.
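We don’t reproduce Krusader’s detection code here, but the idea of a $PATH lookup is easy to illustrate. The Python sketch below uses the standard library’s `shutil.which`. The function names are ours, and grouping the tools by role with the first match winning is our own assumption. It isn’t a description of how Krusader chooses between, say, kdiff3 and kompare.

```python
import shutil

# Candidate tools as listed in the article. Grouping by role and taking the
# first match on $PATH are assumptions for illustration, not Krusader's logic.
TOOL_ROLES = {
    "diff utility": ["kdiff3", "kompare", "xxdiff"],
    "email client": ["thunderbird", "kmail"],
    "batch renamer": ["krename"],
    "checksum utility": ["md5sum"],
}
PACKERS = ["tar", "gzip", "bzip2", "lzma", "xz", "lha", "zip", "unzip",
           "arj", "unarj", "unace", "rar", "unrar", "rpm", "dpkg", "7z"]


def detect_tools(roles=TOOL_ROLES):
    """Map each role to the full path of the first candidate found, or None."""
    result = {}
    for role, candidates in roles.items():
        paths = (shutil.which(name) for name in candidates)
        result[role] = next((p for p in paths if p), None)
    return result


def detect_packers(names=PACKERS):
    """Map every (de)compression tool to its path on $PATH, or None if missing."""
    return {name: shutil.which(name) for name in names}
```

For example, `detect_tools()["diff utility"]` gives the path of whichever of kdiff3, kompare or xxdiff is found first, or `None` if none of them is installed.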

Krusader’s internal editor requires Kate to be installed. Kate is a competent text editor developed by KDE.


In Operation

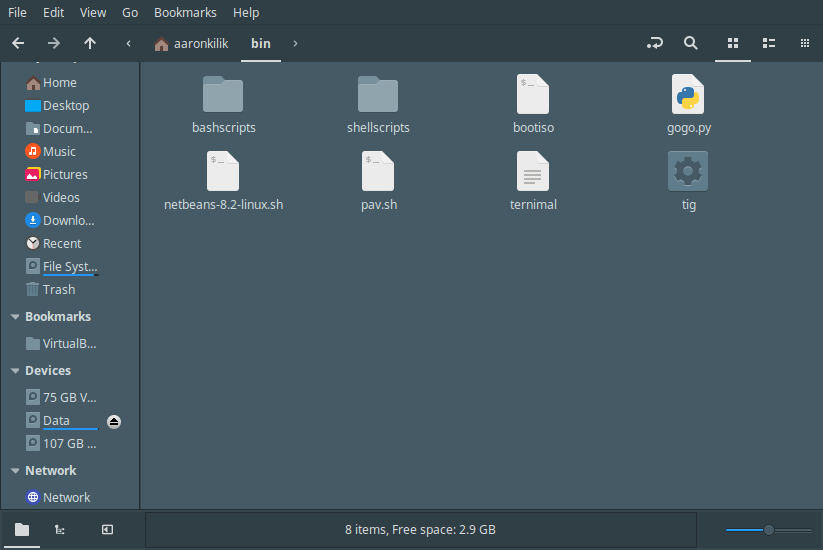

Here’s Krusader in operation.

Let’s break down the user interface. At the top is a standard menu bar which gives access to the features and functions of the file manager. The “Useractions” entry seems a little quirky.

Below that is the main tool bar, which offers access to commonly used functions. There are also a location tool bar, an information label, and panel tool bars. Most of the window is taken up by the left and right panels. Having two panels makes dragging and dropping files easy.

At the bottom are the totals labels, tabs, tab controls, function key buttons, and a status bar. You can also show a command line, but that’s not enabled by default.

Krusader’s tabs let you switch between different directories in one panel, without affecting the directory displayed in the adjacent panel.

Places, favorites and volumes are available in each panel, not on a common side bar.

Krusader offers a wide range of features. We’ll look at some of the standout features for illustration purposes; there are too many to go into great detail on them all! We’re also not going to cover basic functions or basic file management operations. We’ll take them for granted.

KRename is integrated with Krusader. Another highlight is BookMan, Krusader’s bookmark tool, which bookmarks folders and local and remote URLs so you can return to them later with the click of a button.

There’s a built-in tree view, file previews, file split and join, as well as compress/decompress functions.

Clicking a data file in Krusader launches the program associated with it. For example, clicking an R file opens that document in RStudio.

With profiles you can save and restore your favorite settings. Several features support profiles. For example, you can have different panel profiles (work, home, remote connections, etc.), search profiles, and synchroniser profiles.

KruSearcher

One of the strengths of Krusader is its ability to quickly locate files both locally and on remote file systems. There’s a General section which covers most searches you’ll want to perform, and an Advanced section if you need additional functionality.

Let’s take a very simple search. We’re looking to find files in /home/sde/R (and sub-directories) that match the suffix .rdx.

There’s a separate tab that displays the results of the search.

Of course this is an extremely basic search. You can enter multiple patterns in the “Search for” bar, with or without wildcards, and exclude patterns with the | character. You can also specify multiple directories to search or exclude, and search for patterns inside files (like grep). There’s recursive searching, and options to search archives and to follow symbolic links during a search.
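To make these pattern rules concrete, here’s a minimal Python sketch of a recursive search. It accepts several space-separated wildcard patterns, treats anything after `|` as an exclusion, and can optionally require a text match inside each file. It’s modelled on the behaviour described above, not on Krusader’s source. The function names are ours, and quoting, case-sensitivity options and archive searching are left out.

```python
import fnmatch
import os


def parse_patterns(query):
    """Split 'inc1 inc2 | exc1 exc2' into include and exclude pattern lists."""
    include, _, exclude = query.partition("|")
    return include.split() or ["*"], exclude.split()


def file_contains(path, text):
    """grep-like check: True if any line of the file contains `text`."""
    try:
        with open(path, encoding="utf-8", errors="ignore") as f:
            return any(text in line for line in f)
    except OSError:
        return False


def search(roots, query, contains=None, follow_links=False):
    """Yield paths under each root whose file name matches the query."""
    include, exclude = parse_patterns(query)
    for root in roots:
        # followlinks=True can revisit directories if symlinks form a cycle
        for dirpath, _dirnames, filenames in os.walk(root, followlinks=follow_links):
            for name in filenames:
                if not any(fnmatch.fnmatch(name, p) for p in include):
                    continue
                if any(fnmatch.fnmatch(name, p) for p in exclude):
                    continue
                path = os.path.join(dirpath, name)
                if contains is None or file_contains(path, contains):
                    yield path
```

Our simple search above would be `list(search(["/home/sde/R"], "*.rdx"))`, while a query such as `"*.rdx *.rdb | tmp*"` matches both suffixes but skips names starting with `tmp`.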

But that’s not the extent of the search functionality. With the Advanced tab, you can restrict the search by file size or size range, by date, and even by ownership.
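Size, date and ownership restrictions come down to checks on a file’s metadata. Here’s a sketch of such a per-file filter in Python, built on `os.stat`. The function and parameter names are hypothetical. For simplicity we identify the owner by numeric UID and express the date filter as “modified within the last N days”.

```python
import os
import time


def matches_advanced(path, min_size=None, max_size=None,
                     modified_within_days=None, owner_uid=None):
    """Return True if `path` passes every filter that is not None."""
    st = os.stat(path)
    if min_size is not None and st.st_size < min_size:
        return False
    if max_size is not None and st.st_size > max_size:
        return False
    if modified_within_days is not None:
        # anything last modified before the cutoff is filtered out
        cutoff = time.time() - modified_within_days * 86400
        if st.st_mtime < cutoff:
            return False
    if owner_uid is not None and st.st_uid != owner_uid:
        return False
    return True
```

Because each filter is independent and optional, combining them is just a matter of passing more arguments, which mirrors how the Advanced tab lets you mix criteria.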

In the bottom left of each dialog box there’s a Profiles button. This can be a time-saver if you often perform the same search operation, as it allows you to save search settings.

Synchronise Folders

This function compares two directories, including all their subdirectories, and shows the differences between them. It’s accessed from Tools | Synchronise Folders (or with the keyboard shortcut Ctrl+Y).

The tool lets you synchronise the files and directories, and one of the panels can be a remote location.
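The core of a folder comparison can be sketched with Python’s `filecmp.dircmp`. The hypothetical function below lists the files that would need copying from the left directory to the right one, in one direction only. It’s an illustration, not the Synchroniser’s algorithm. It also inherits `dircmp`’s behaviour: files with identical type, size and modification time are assumed equal without reading their contents, and a default ignore list (for example `.git` and `__pycache__`) is skipped.

```python
import filecmp
import os


def files_to_copy(left, right):
    """Yield paths, relative to `left`, that are missing from or differ in `right`."""
    def walk(cmp, prefix):
        for name in cmp.left_only:
            full = os.path.join(cmp.left, name)
            if os.path.isdir(full):
                # a whole directory is missing on the right: list every file in it
                for dirpath, _dirnames, filenames in os.walk(full):
                    for f in filenames:
                        yield os.path.relpath(os.path.join(dirpath, f), left)
            else:
                yield os.path.join(prefix, name)
        for name in cmp.diff_files:
            yield os.path.join(prefix, name)
        # recurse into subdirectories that exist on both sides
        for name, sub in cmp.subdirs.items():
            yield from walk(sub, os.path.join(prefix, name))

    yield from walk(filecmp.dircmp(left, right), "")
```

Counting the results, e.g. `len(list(files_to_copy(a, b)))`, gives a figure comparable to the “files will be copied” total in a left-to-right synchronisation.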

Here’s a comparison of two directories stored on different partitions.

The image below shows that to synchronise the two directories, 45 files will be copied.

The Synchronizer is not the only way to compare files. There are other compare functions available: specifically, you can compare files by content, and compare directories. The compare by content functionality (accessed from the menu bar “File | Compare by Content”) calls an external graphical difference utility: Kompare, KDiff3, or xxdiff.

Disk Usage

A disk usage analyzer is a utility which helps users to visualize the disk space used by each folder and file on a hard disk or other storage media. This type of application often generates a graphical chart to help the visualization process.
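As a rough illustration of what such a tool measures, the Python sketch below totals the apparent size (`st_size`) of the files under each immediate child of a directory and prints a crude text bar chart. The function names are ours. It doesn’t follow symbolic links and reports apparent size rather than allocated blocks, so its figures can differ from those of `du` or Krusader.

```python
import os


def directory_sizes(root):
    """Map each immediate child of `root` to its total apparent size in bytes."""
    sizes = {}
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                total = 0
                # os.walk does not descend into symlinked directories by default
                for dirpath, _dirnames, filenames in os.walk(entry.path):
                    for name in filenames:
                        try:
                            total += os.lstat(os.path.join(dirpath, name)).st_size
                        except OSError:
                            pass  # file vanished or is unreadable; skip it
                sizes[entry.name] = total
            else:
                # regular files and symlinks are counted by their own size
                sizes[entry.name] = entry.stat(follow_symlinks=False).st_size
    return sizes


def print_chart(sizes, width=40):
    """Print children largest first, with a bar scaled to the biggest one."""
    biggest = max(sizes.values(), default=0) or 1
    for name, size in sorted(sizes.items(), key=lambda kv: kv[1], reverse=True):
        bar = "#" * round(width * size / biggest)
        print(f"{size:>12,}  {bar:<{width}}  {name}")
```

Running `print_chart(directory_sizes("/var/log"))`, for instance, would show at a glance which log directories have grown largest.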

Disk usage analyzers are popular with system administrators as one of their essential tools to prevent important directories and partitions from running out of space. Having a hard disk with insufficient free space can often have a detrimental effect on the system’s performance. It can even stop users from logging on to the system, or, in extreme circumstances, cause the system to hang.

However, disk usage analyzers are not just useful tools for system administrators. While modern hard disks are terabytes in size, there are many folk who seem to forever run out of hard drive space. Often the culprit is a large video and/or audio collection, bloated software applications, or games. Sometimes the hard disk is also full of data that users have no particular interest in. For example, left unchecked, log files and package archives can consume large chunks of hard disk space.

Krusader offers built-in disk usage functionality. It’s accessed from Tools | Disk Usage (or with the keyboard shortcut Alt+Shift+S).

Here’s an image of the tool running.

And the output image showing the disk space consumed by each directory.

We’re not convinced that Krusader’s implementation is one of its strong points. We also experienced segmentation faults running the software in GNOME, although no such issues were found with KDE as our desktop environment. There’s definitely room for improvement.

Checksum generation and checking

A checksum is the result of running a checksum algorithm, such as a cryptographic hash function, on an item of data, typically a single file. A hash function is an algorithm that transforms (hashes) an arbitrary set of data elements, such as a text file, into a single fixed-length value (the hash).

Comparing the checksum you generate from your copy of a file with the one provided by its source helps ensure that your copy is intact and error-free. On their own, checksums are often used to verify data integrity, but they are not relied upon to verify data authenticity.
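Here’s a minimal sketch of that comparison in Python using `hashlib`. The file is read in chunks, so large downloads don’t need to fit in memory. The function names are hypothetical, and SHA-256 as the default is simply our choice for the example.

```python
import hashlib


def file_checksum(path, algorithm="sha256", chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks and return the hex digest."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def matches_published(path, published_hex, algorithm="sha256"):
    """Compare against a published checksum, ignoring case and stray whitespace."""
    return file_checksum(path, algorithm) == published_hex.strip().lower()
```

Usage would look like `matches_published("download.iso", "<checksum from the project's website>")`, with the placeholder replaced by the value the source publishes.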

You can create a checksum from File | Create Checksum.

There’s the option to choose the checksum method from a dropdown list. The supported checksum methods are:

- md5 – a widely used hash function producing a 128-bit hash value. It is no longer considered cryptographically secure.

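- sha1 – Secure Hash Algorithm 1, producing a 160-bit (20-byte) hash value, typically rendered as a hexadecimal number, 40 digits long. This cryptographic hash function is no longer considered secure.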

- sha224 – part of the SHA-2 family of cryptographic hash functions. SHA-224 produces a 224-bit (28-byte) hash value, typically rendered as a hexadecimal number, 56 digits long.

- sha256 – produces a 256-bit (32-byte) hash value, typically rendered as a hexadecimal number, 64 digits long.

- sha384 – produces a 384-bit (48-byte) hash value, typically rendered as a hexadecimal number, 96 digits long.

- sha512 – produces a 512-bit (64-byte) hash value, typically rendered as a hexadecimal number, 128 digits long.
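The digest sizes in this list can be checked with Python’s `hashlib`, which implements all six algorithms. Each hexadecimal digit encodes 4 bits, which is why the digit counts are a quarter of the bit lengths. The names `METHODS` and `digest_bits` are ours.

```python
import hashlib

# The six checksum methods listed above.
METHODS = ["md5", "sha1", "sha224", "sha256", "sha384", "sha512"]


def digest_bits(names=METHODS):
    """Digest size in bits for each algorithm; hex length is bits // 4."""
    return {name: hashlib.new(name).digest_size * 8 for name in names}


for name, bits in digest_bits().items():
    print(f"{name:<7} {bits:>3} bits  {bits // 4:>3} hex digits")
```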

When verifying checksums, Krusader checks whether you have a tool that supports the type of checksum in your specified checksum file, then displays any files that failed the check.
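To give a feel for what verification involves, here’s a Python sketch that reads a checksum file in the `md5sum`/`sha256sum` style (`<hex digest>  <file name>`, or `<hex digest> *<file name>` in binary mode) and returns the files that are missing or don’t match. Inferring the algorithm from the digest length is our assumption. It works because the six lengths are all different, but it isn’t necessarily how Krusader picks a tool. `verify_checksum_file` is a hypothetical name.

```python
import hashlib
import os

# Hex-digest length -> algorithm; the six lengths (32, 40, 56, 64, 96, 128) are distinct.
BY_LENGTH = {hashlib.new(n).digest_size * 2: n
             for n in ("md5", "sha1", "sha224", "sha256", "sha384", "sha512")}


def verify_checksum_file(checksum_path):
    """Return the names listed in the file that are missing or fail verification."""
    base = os.path.dirname(os.path.abspath(checksum_path))
    failed = []
    with open(checksum_path, encoding="utf-8") as f:
        for line in f:
            line = line.rstrip("\n")
            if not line.strip() or line.startswith("#"):
                continue
            digest, _, name = line.partition(" ")
            # strip the second space (text mode) or '*' (binary mode) marker
            if name[:1] in (" ", "*"):
                name = name[1:]
            algorithm = BY_LENGTH.get(len(digest))
            path = os.path.join(base, name)
            if algorithm is None or not os.path.isfile(path):
                failed.append(name)
                continue
            h = hashlib.new(algorithm)
            with open(path, "rb") as data:
                for chunk in iter(lambda: data.read(1 << 20), b""):
                    h.update(chunk)
            if h.hexdigest() != digest.lower():
                failed.append(name)
    return failed
```

File names are resolved relative to the checksum file’s own directory, which is the usual convention for files produced by `md5sum` and friends.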

Custom commands

Krusader can be extended with custom add-ons called User Actions.

User Actions are a way to call external programs with variable parameters.
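Krusader has its own placeholder syntax for User Actions, which we don’t reproduce here. As a generic sketch of the idea, the Python code below uses hypothetical `$name` placeholders (via `string.Template`). It substitutes them in each argument after splitting the command line, then runs the result without a shell. The function names and placeholder style are our assumptions.

```python
import shlex
import string
import subprocess


def build_command(template, values):
    """Split the command template into arguments, then fill placeholders in each."""
    return [string.Template(arg).substitute(values) for arg in shlex.split(template)]


def run_user_action(template, values):
    """Run the expanded command directly (no shell) and capture its output."""
    argv = build_command(template, values)
    return subprocess.run(argv, capture_output=True, text=True, check=False)
```

For example, `build_command("du -sh $path", {"path": "/tmp/My Files"})` yields `["du", "-sh", "/tmp/My Files"]`. Substituting after splitting means a path containing spaces stays a single argument, and nothing in a value is interpreted by a shell.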

A few example User Actions are provided to help you get started. And KDE’s store offers, at the time of writing, 45 community-created add-ons, which help to illustrate the possibilities.

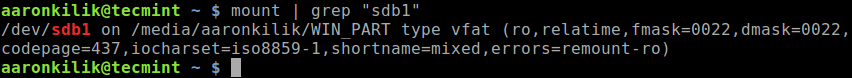

MountMan

MountMan is a tool which helps you manage your mounted file systems. Mount or unmount file systems of all types with a single mouse click.

When started from the menu (Tools | MountMan), it displays a list of all mounted file systems.

For each file system, MountMan displays its name (the actual device name, e.g. /dev/sda1 for the first partition on the first hard disk drive), its file system type (ext4, ext3, ntfs, vfat, ReiserFS, etc.) and its mount point on your system (the directory on which the file system is mounted).

MountMan also displays usage information: total size, free space, and the percentage of space that is free. You can sort by any column, in ascending or descending order, by clicking its title.
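On Linux, similar information can be gathered from `/proc/mounts` and a usage query per mount point. The Python sketch below is not MountMan’s implementation, and its function names are ours. It parses mount lines and asks `shutil.disk_usage` for sizes. It skips pseudo file systems that report a total size of zero, and it sorts by the numeric free percentage rather than by the percentage’s displayed text.

```python
import shutil


def parse_mounts(text):
    """Parse /proc/mounts-style lines into (device, mount point, fs type) tuples."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 3:
            device, mount_point, fs_type = fields[:3]
            # /proc/mounts escapes a space as \040; other escapes are not handled here
            entries.append((device, mount_point.replace("\\040", " "), fs_type))
    return entries


def usage_rows(entries, usage=shutil.disk_usage):
    """Return (device, type, mount point, total, free, free %) rows, most free first."""
    rows = []
    for device, mount_point, fs_type in entries:
        try:
            u = usage(mount_point)
        except OSError:
            continue  # mount point not accessible
        if u.total == 0:
            continue  # pseudo file systems such as proc report zero size
        rows.append((device, fs_type, mount_point, u.total, u.free,
                     100.0 * u.free / u.total))
    rows.sort(key=lambda r: r[5], reverse=True)  # numeric sort on free %
    return rows
```

On a live system you would pass the contents of `/proc/mounts` to `parse_mounts` and hand the result to `usage_rows`. The `usage` parameter exists so the sizing function can be swapped out, for example in tests.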

Here’s an image from one of our test systems.

On this test system, sorting by the Free % column doesn’t list the partitions in the correct order.

Highly Configurable

Krusader offers a wealth of configuration options. From the menu bar, choose “Settings | Configure Krusader”. This opens a dialog box with many options for configuring the software.

In the Startup section, you can choose a startup profile, which can be a real time-saver. There’s also an option to restore the last session.

The Panel section has a whole raft of configuration options, with subsections for General, View, Buttons, Selection Mode, Media Menu and Layout.

By default the software uses KDE colours, but you can configure the colours of every element to your heart’s content.

The General section covers basic operations, including the external terminal, the viewer/editor, and atomic extensions.

In the Advanced section, you can automount filesystems, turn off specific user confirmations (not recommended), and even fine-tune the icon cache size (which alters the memory footprint of Krusader).

The Archives section lets you change the way the software deals with archives. We don’t recommend enabling write support for archives, as there’s a risk of data loss in the event of a power failure.

The Dependencies section is where you define the location of external applications, including general tools, packers and checksum utilities.

The User Actions section lets you configure settings relating to User Actions, including the font used for the output collection.

The final section links MIME types to protocols.

Website: krusader.org

Support: Krusader Handbook

Developer: Krusader Krew

License: GNU General Public License v2

———————————————————————————————–

Other tools in this series:

| Essential System Tools | Description |
|---|---|
| ps_mem | Accurate reporting of software’s memory consumption |
| gtop | System monitoring dashboard |
| pet | Simple command-line snippet manager |
| Alacritty | Innovative, hardware-accelerated terminal emulator |
| inxi | Command-line system information tool that’s a time-saver for everyone |
| BleachBit | System cleaning software. Quick and easy way to service your computer |
| catfish | Versatile file searching software |
| journalctl | Query and display messages from the journal |
| Nmap | Network security tool that builds a “map” of the network |
| ddrescue | Data recovery tool, retrieving data from failing drives as safely as possible |
| Timeshift | Similar to Windows’ System Restore functionality and the Time Machine tool in Mac OS |
| GParted | Resize, copy, and move partitions without data loss |
| Clonezilla | Partition and disk cloning software |
| fdupes | Find or delete duplicate files |
| Krusader | Advanced, twin-panel (commander-style) file manager |
| nmon | Systems administrator, tuner, and benchmark tool |
| f3 | Detect and fix counterfeit flash storage |
| QJournalctl | Graphical User Interface for systemd’s journalctl |
