With traffic and everyday Internet speeds now measured in tens of gigabits in the blink of an eye, even for ordinary Internet clients, you may ask: what is the purpose of setting up a local repository cache on your LAN?

One reason is to reduce Internet bandwidth usage and to pull packages at high speed from the local cache. Another major reason is privacy. Imagine that clients in your organization are restricted from the Internet, but their Linux boxes need regular software and security updates or simply need new software packages. To go further, picture a server that runs on a private network, holds and serves sensitive information only to a restricted network segment, and should never be exposed to the public Internet.

These are just a few reasons why you should build a local repository mirror on your LAN, delegate an edge server for this job, and configure internal clients to pull software from its mirror cache.

Ubuntu provides the apt-mirror package to synchronize a local cache with the official Ubuntu repositories. The mirror can then be served through an HTTP or FTP server to share its software packages with local clients.

For a complete mirror cache, your server needs at least 120 GB of free space reserved for local repositories.

Requirements

- Min 120G free space

- Proftpd server installed and configured in anonymous mode.
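Before going further, it may help to confirm that the target filesystem really has the required space. The following is a minimal sketch and not part of apt-mirror; the function name `has_free_space` is hypothetical, and the 120 GB threshold in the example is simply the requirement listed above.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the filesystem holding DIR has at least
# MIN_GB gigabytes available. DIR must already exist.
#   $1 = directory, $2 = minimum free space in GB
has_free_space() {
    dir=$1
    min_gb=$2
    # df -P prints one POSIX-format line per filesystem; -k reports 1K blocks.
    # Column 4 of the second line is the available space.
    avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ "$avail_kb" -ge $((min_gb * 1024 * 1024)) ]
}

# Example: check /opt, the parent of the mirror path used later in this tutorial.
# has_free_space /opt 120 && echo "enough space" || echo "not enough space"
```

The function only reads `df` output, so it can be run safely as an ordinary user.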

Step 1: Configure Server

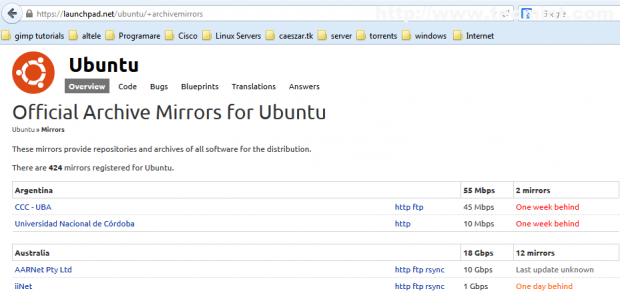

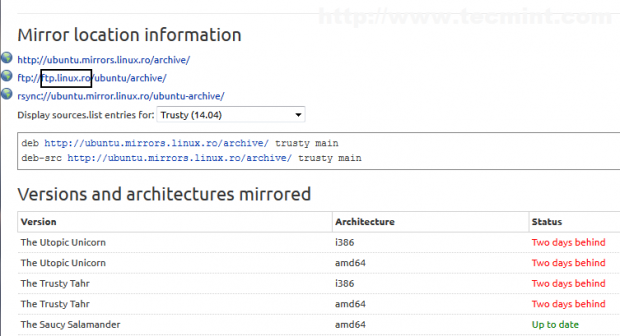

1. The first thing you may want to do is identify the closest and fastest Ubuntu mirrors near your location by visiting the Ubuntu Archive Mirror page and selecting your country.

If your country provides several mirrors, note their addresses and compare them using ping or traceroute results.
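The ping comparison can be sketched roughly as follows. This assumes the Linux iputils `ping`, whose summary line starts with `rtt min/avg/max/mdev`; the function names `parse_avg_rtt` and `rank_mirrors` are hypothetical, and the hostnames in the example are placeholders for the mirrors listed for your country.

```shell
#!/bin/sh
# Hypothetical helper: read ping output on stdin and print the average
# round-trip time in milliseconds (Linux iputils summary-line format).
parse_avg_rtt() {
    # Summary line: rtt min/avg/max/mdev = 11.021/11.532/12.104/0.412 ms
    # Split on "/": field 5 is the average.
    awk -F'/' '/^rtt / { print $5 }'
}

# Print "AVG_MS HOST" for each mirror given as an argument, fastest first.
rank_mirrors() {
    for host in "$@"; do
        avg=$(ping -c 3 -q "$host" 2>/dev/null | parse_avg_rtt)
        # Skip hosts that did not answer.
        [ -n "$avg" ] && echo "$avg $host"
    done | sort -n
}

# Example (placeholder hostnames):
# rank_mirrors us.archive.ubuntu.com de.archive.ubuntu.com
```

Latency is only a rough proxy for download speed, so treat the ranking as a first filter rather than a final answer.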

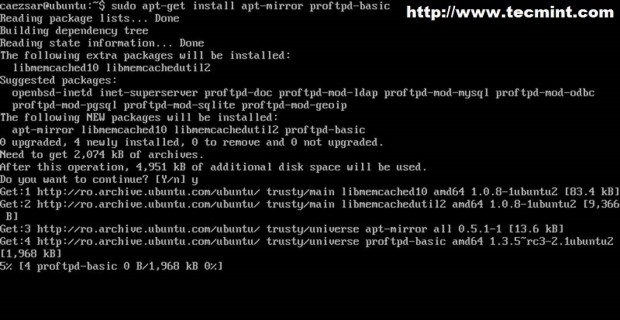

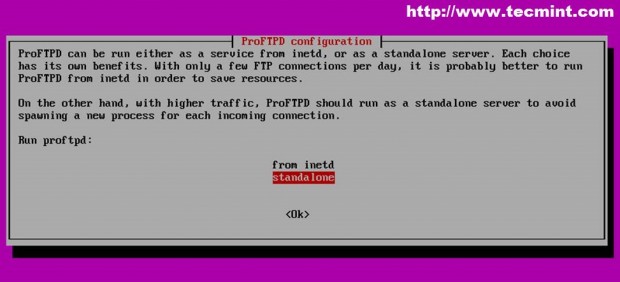

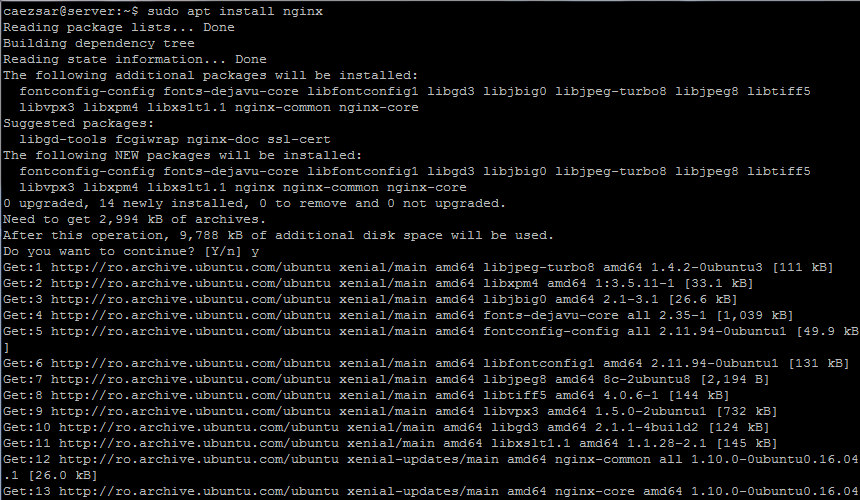

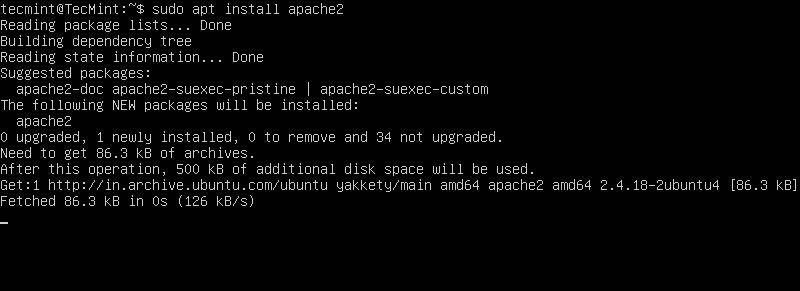

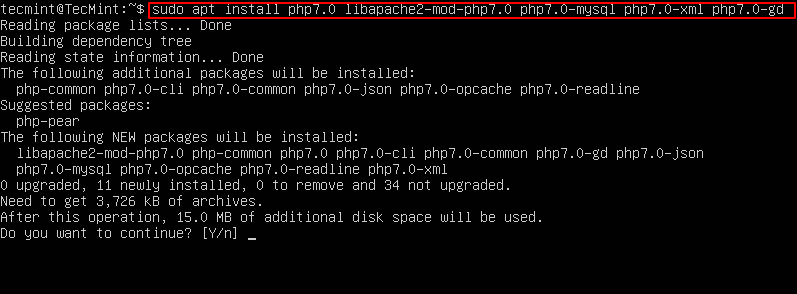

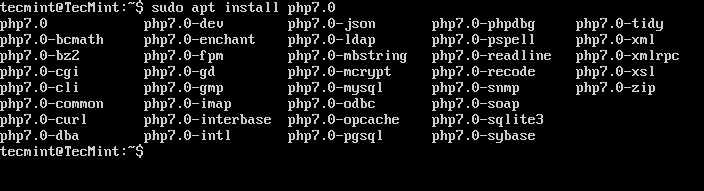

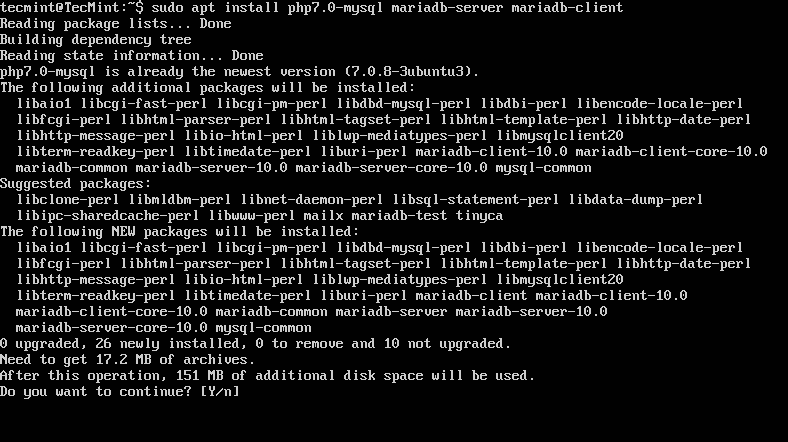

2. The next step is to install the software required for setting up the local mirror repository. Install the apt-mirror and proftpd packages, and configure proftpd as a standalone system daemon.

$ sudo apt-get install apt-mirror proftpd-basic

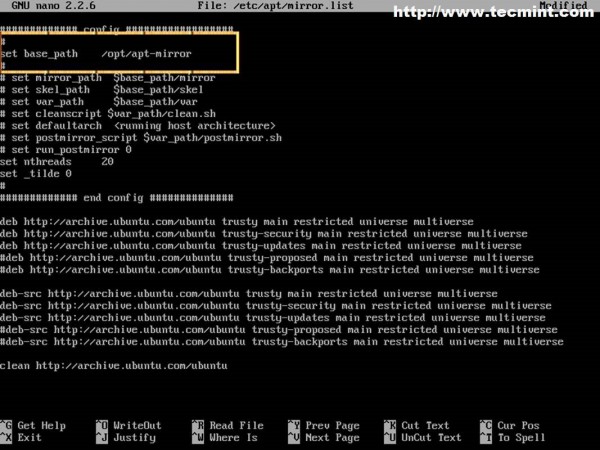

3. Now it’s time to configure the apt-mirror server. Open and edit the /etc/apt/mirror.list file, add your nearest mirrors from step 1 (optional if the default mirrors are fast enough or you’re not in a hurry), and choose the system path where packages should be downloaded. By default apt-mirror uses /var/spool/apt-mirror for its local cache, but in this tutorial we change the path by setting the base_path directive to /opt/apt-mirror.

$ sudo nano /etc/apt/mirror.list

You can also uncomment or add other source lines before the clean directive, including Debian sources, depending on which Ubuntu versions your clients use. You can add sources for 12.04 if you like, but be aware that adding more sources requires more free space.

For Debian source lists, visit the Debian Wiki or the Debian Sources List Generator.
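For orientation, here is a minimal sketch of what a trimmed-down mirror.list could contain, written as a small shell function that prints the file so you can review it first. It is modeled on the default file shipped with apt-mirror, not copied from this tutorial; the function name `print_mirror_list` is hypothetical, and the trusty release and archive.ubuntu.com mirror in the example are assumptions you should adapt.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal mirror.list for one Ubuntu release.
#   $1 = base_path, $2 = mirror URL, $3 = release codename
print_mirror_list() {
    base_path=$1
    mirror=$2
    release=$3
    cat <<EOF
set base_path    $base_path
set nthreads     20
set _tilde 0

deb $mirror $release main restricted universe multiverse
deb $mirror $release-security main restricted universe multiverse
deb $mirror $release-updates main restricted universe multiverse

clean $mirror
EOF
}

# Example: review the output, then write it to /etc/apt/mirror.list as root.
# print_mirror_list /opt/apt-mirror http://archive.ubuntu.com/ubuntu trusty
```

Each additional deb line (another release, or a Debian source) goes before the clean line and adds to the disk space needed.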

4. All you need to do now is create the directory and run the apt-mirror command to synchronize the official Ubuntu repositories with the local mirror.

$ sudo mkdir -p /opt/apt-mirror

$ sudo apt-mirror

As you can see, apt-mirror proceeds with indexing and downloading archives, presenting the total number of downloaded packages and their size. As you can imagine, 110-120 GB takes some time to download.

You can run the ls command to view the directory content.

Once the initial download is completed, future downloads will be small.

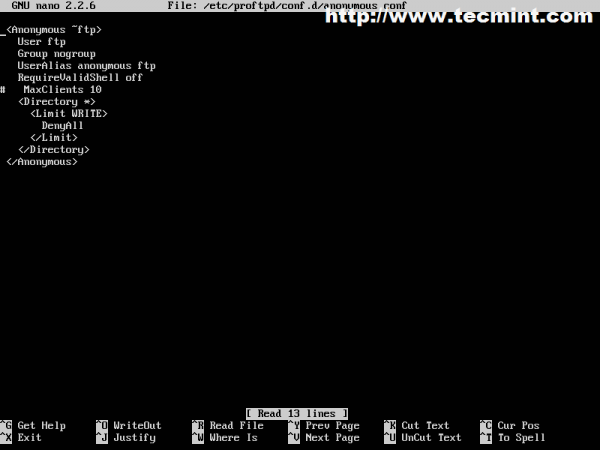

5. While apt-mirror downloads packages, you can configure your Proftpd server. The first thing you need to do is create an anonymous configuration file for proftpd by running the following command.

$ sudo nano /etc/proftpd/conf.d/anonymous.conf

Then add the following content to the anonymous.conf file and restart the proftpd service.

<Anonymous ~ftp>

User ftp

Group nogroup

UserAlias anonymous ftp

RequireValidShell off

# MaxClients 10

<Directory *>

<Limit WRITE>

DenyAll

</Limit>

</Directory>

</Anonymous>

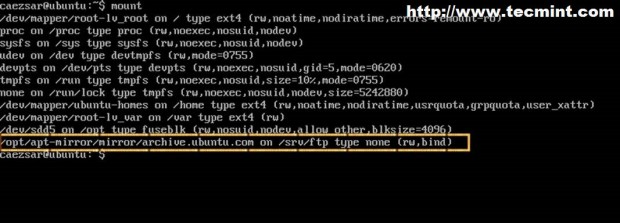

6. The next step is to link the apt-mirror path to the proftpd path with a bind mount, by issuing the following command.

$ sudo mount --bind /opt/apt-mirror/mirror/archive.ubuntu.com/ /srv/ftp/

To verify it, run the mount command with no parameters or options.

$ mount

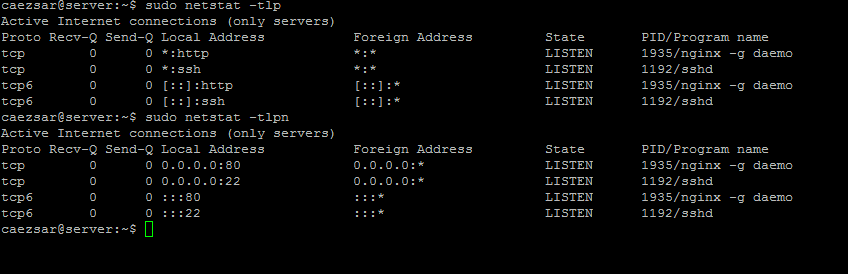

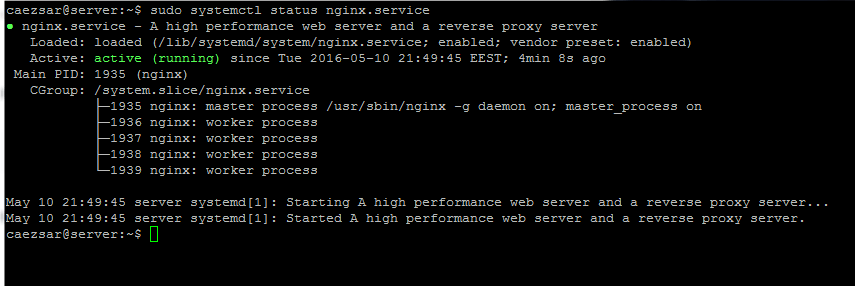

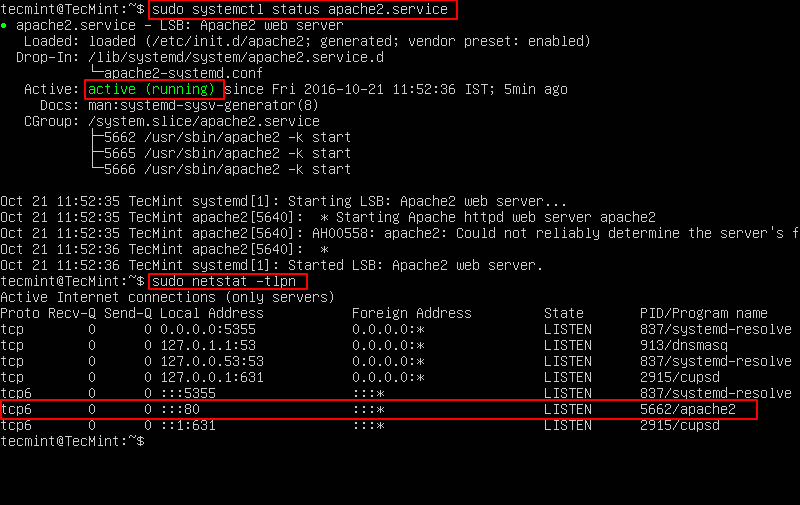

7. The last step is to make sure that the Proftpd server starts automatically after a system reboot and that the mirror cache directory is also mounted automatically on the FTP server path. To enable proftpd at boot, run the following command.

$ sudo update-rc.d proftpd enable

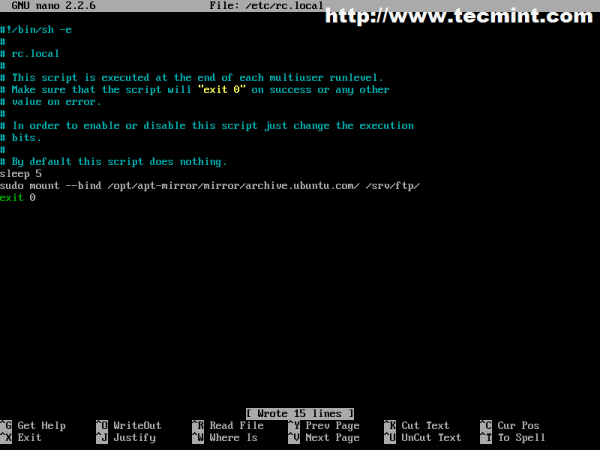

To automatically mount the apt-mirror cache on the proftpd path, open and edit the /etc/rc.local file.

$ sudo nano /etc/rc.local

Add the following lines before the exit 0 directive. The 5-second delay gives the system some time before the mount is attempted.

sleep 5

sudo mount --bind /opt/apt-mirror/mirror/archive.ubuntu.com/ /srv/ftp/

If you pull packages from Debian repositories, run the following commands and make sure the corresponding mount line is also added to the rc.local file above.

$ sudo mkdir /srv/ftp/debian

$ sudo mount --bind /opt/apt-mirror/mirror/ftp.us.debian.org/debian/ /srv/ftp/debian/
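As an alternative to rc.local, a bind mount can also be declared in /etc/fstab. This is a different technique from the one used in this tutorial, shown only as a sketch; the function name `fstab_bind_entry` is hypothetical, and it only prints the entry so you can review it before adding it.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab entry that bind-mounts SRC on DST.
#   $1 = source directory, $2 = mount point
fstab_bind_entry() {
    # Fields: source, mount point, type "none", option "bind", dump 0, fsck pass 0
    printf '%s %s none bind 0 0\n' "$1" "$2"
}

# Example: review the line, then append it to /etc/fstab as root.
# fstab_bind_entry /opt/apt-mirror/mirror/archive.ubuntu.com/ /srv/ftp/
```

If you go this route, leave the mount line out of rc.local so the directory is not mounted twice.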

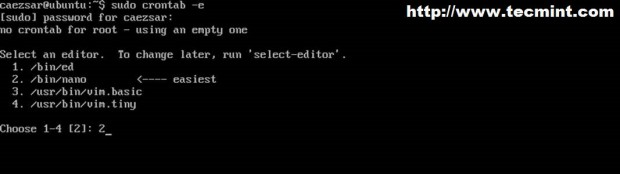

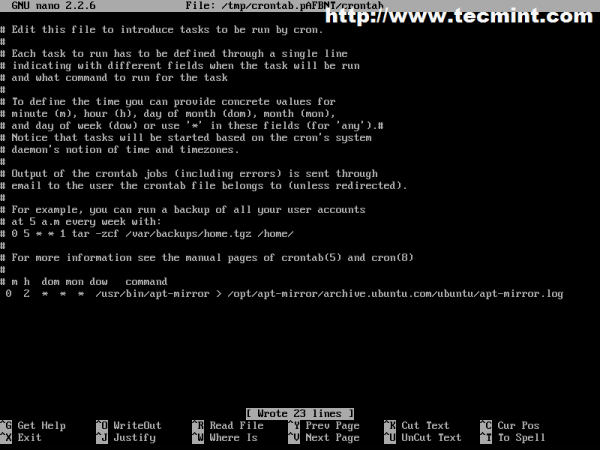

8. For daily apt-mirror synchronization, you can also create a scheduled job that runs at 2 AM every day. Run the crontab command and select your preferred editor.

$ sudo crontab -e

Add the following line at the end of the file.

0 2 * * * /usr/bin/apt-mirror >> /opt/apt-mirror/mirror/archive.ubuntu.com/ubuntu/apt-mirror.log

Now every day at 2 AM your system repository cache will synchronize with Ubuntu official mirrors and create a log file.
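The cron line above appends only standard output to the log. If you also want error messages and timestamps, one option is a small wrapper like the sketch below. The function name `run_logged` and the log path in the example are hypothetical and not part of apt-mirror.

```shell
#!/bin/sh
# Hypothetical wrapper: run a command and append its stdout and stderr,
# framed by a start timestamp and the exit status, to a log file.
#   $1 = log file, remaining arguments = command to run
run_logged() {
    log=$1
    shift
    {
        echo "=== started $(date '+%Y-%m-%d %H:%M:%S') ==="
        "$@" 2>&1
        status=$?
        echo "=== finished with status $status ==="
    } >> "$log"
    return "$status"
}

# Example (hypothetical log path):
# run_logged /var/log/apt-mirror-sync.log /usr/bin/apt-mirror
```

Saved as a script with the example line uncommented, it could replace the apt-mirror command in the crontab entry.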

Step 2: Configure clients

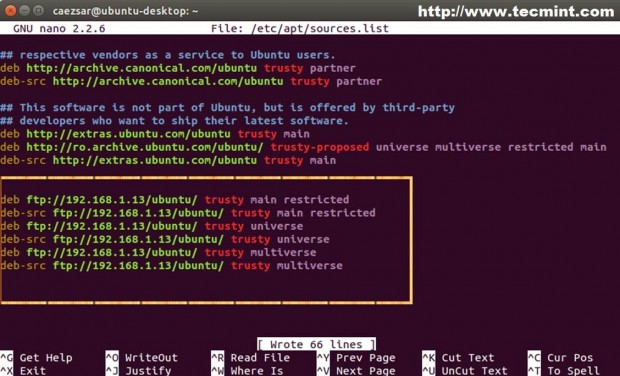

9. To configure local Ubuntu clients, edit /etc/apt/sources.list on the client computers to point to the IP address or hostname of the apt-mirror server, replacing the http protocol with ftp, then update the system.

deb ftp://192.168.1.13/ubuntu trusty universe

deb ftp://192.168.1.13/ubuntu trusty main restricted

deb ftp://192.168.1.13/ubuntu trusty-updates main restricted

## And so on...
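When there are many clients, the entries can be generated rather than typed by hand. The sketch below assumes the mirror carries the release, -updates and -security suites with all four components; the function name `print_client_sources` is hypothetical, and 192.168.1.13 is the example server address used above.

```shell
#!/bin/sh
# Hypothetical helper: print sources.list entries that point at a local FTP mirror.
#   $1 = mirror server IP or hostname, $2 = release codename
print_client_sources() {
    server=$1
    release=$2
    for suite in "$release" "$release-updates" "$release-security"; do
        echo "deb ftp://$server/ubuntu $suite main restricted universe multiverse"
    done
}

# Example: review the output before replacing /etc/apt/sources.list on a client.
# print_client_sources 192.168.1.13 trusty
```

Drop any suite or component from the loop that your mirror.list does not actually mirror, otherwise the client update will report missing indexes.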

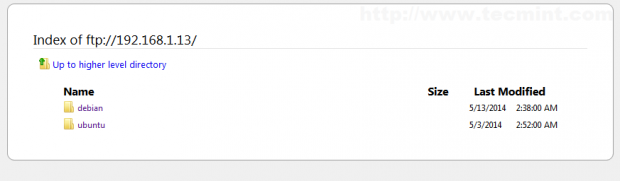

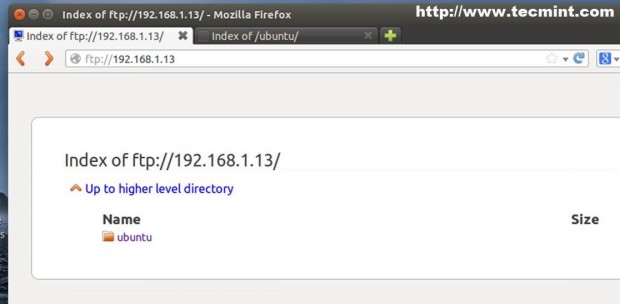

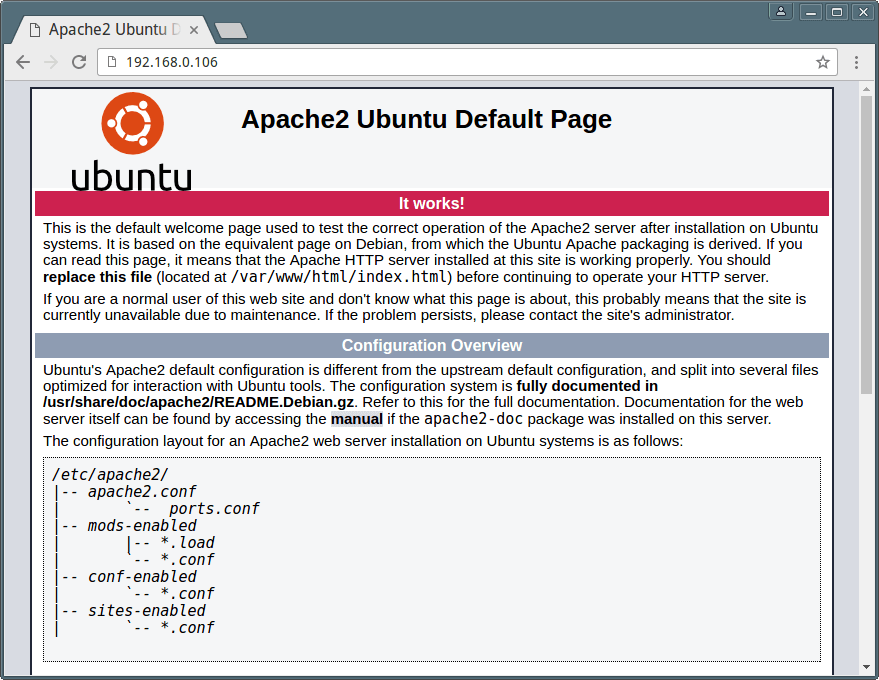

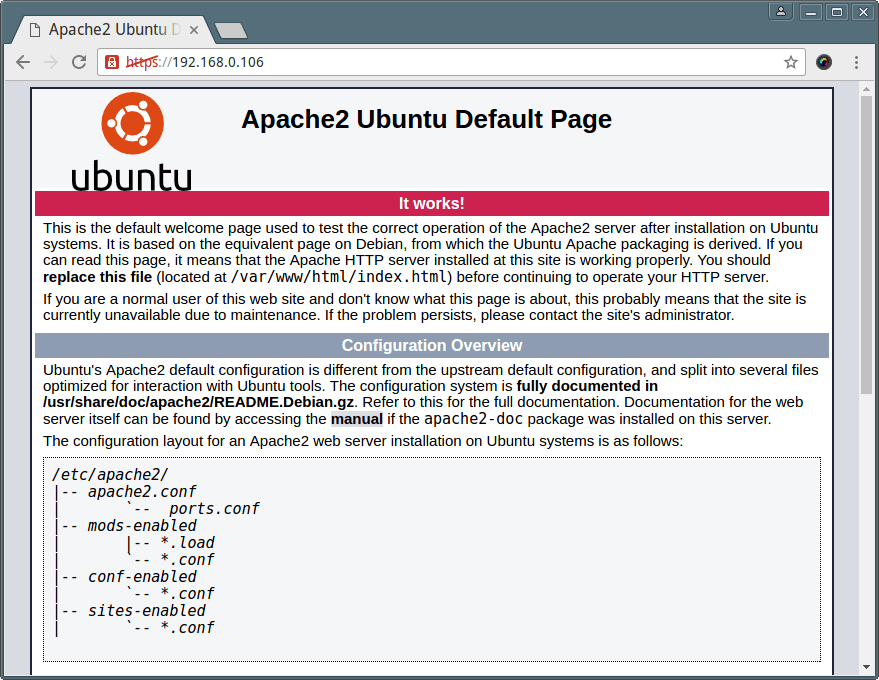

10. To view the repositories, you can open a browser and point it to your server’s IP address or domain name using the FTP protocol.

The same setup also applies to Debian clients and servers; the only changes needed are the Debian mirror and sources list.

Also, if you install a fresh Ubuntu or Debian system, provide your local mirror manually with the ftp protocol when the installer asks which repository to use.

The great thing about having your own local mirror is that you’re always current, and your local clients don’t have to connect to the Internet to install updates or software.