Snaps are the new Linux Apps that work on every Distro

Ask anyone who uses an operating system that isn’t mainstream, be it on a PC or on mobile, and their biggest gripe is apps. Finding useful, functional apps on anything other than macOS, Windows, Android or iOS is a serious hassle. Those of us finding our feet in the murky Linux ecosystem are not spared.

For a long time, getting apps for your Linux computer was an exercise in futility. The fragmentation of the Linux ecosystem made the problem even worse. This drove most of us to the more mainstream distros like Ubuntu and Linux Mint for their relatively active developer communities and support.


See, when using Linux, you couldn’t exactly Google the name of a program you wanted, download the .exe file, double-click it and have it installed like you would on Windows (although technically you can do that now with .deb files). You had to know your way around the Terminal. On Ubuntu, for example, you needed to add the software source to your repositories with sudo apt commands, then update the cache, then finally install the app you wanted with sudo apt-get install. In most cases, the dependencies would be all messed up and you’d have to scroll through endless forums trying to figure out how to fix that one pesky dependency that just wouldn’t allow your app to run well.

You’d jump through all these hoops and then finally the app would run, but then it would look all weird because maybe it wasn’t made for your distro. Bottom line, it takes patience and resilience to install Linux Apps.

Snaps

Snaps are essentially applications that are compressed together with their dependencies and with descriptions of how to run and how to interact with other software on the system they are installed on. Snaps are secure in that they are designed to be sandboxed and isolated from other system software.

Snaps are easy to install, upgrade, downgrade and remove, irrespective of the underlying system. For this reason, they can be installed on basically any Linux-based system. Canonical is even developing Snaps as the new packaging medium for Ubuntu Core, its version of Ubuntu for Internet of Things devices and large container deployments.

How to Install Snap in Linux

In this section, I will show you how to install Snap in Linux and how to use snap to install, update or remove packages. Ubuntu has shipped with Snap pre-installed since Ubuntu 16.04, so any Linux distro based on Ubuntu 16.04 or newer doesn’t need to install it again. For other distributions, follow the instructions shown below:

On Arch Linux

$ sudo yaourt -S snapd

$ sudo systemctl start snapd.socket

On Fedora

$ sudo dnf copr enable zyga/snapcore

$ sudo dnf install snapd

$ sudo systemctl enable --now snapd.service

$ sudo setenforce 0
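If you are not sure which of the cases above applies to your machine, a small script can help. This is a minimal sketch, assuming your distro ships the standard /etc/os-release file with ID and ID_LIKE fields. The function names snapd_section_for and detect_ids are made up for this example and are not part of snapd.

```shell
#!/bin/sh
# Sketch: map the ID / ID_LIKE values from /etc/os-release to the
# matching case in this article. Function names are hypothetical.

snapd_section_for() {
    # $1 is a space-separated list of distro IDs, e.g. "linuxmint ubuntu".
    for id in $1; do
        case "$id" in
            ubuntu)
                echo "Ubuntu-based: snapd should already be installed (16.04 or newer)"
                return 0 ;;
            arch)
                echo "Arch-based: follow the Arch Linux steps"
                return 0 ;;
            fedora)
                echo "Fedora-based: follow the Fedora steps"
                return 0 ;;
        esac
    done
    echo "No matching steps in this article" >&2
    return 1
}

detect_ids() {
    [ -r /etc/os-release ] || return 1
    # Source the file in a subshell so its variables do not leak out.
    ( . /etc/os-release && printf '%s %s\n' "$ID" "$ID_LIKE" )
}

# Usage on a real system:
#   snapd_section_for "$(detect_ids)"
```

Derivatives are matched through ID_LIKE, so a distro that reports itself as based on Ubuntu, Arch or Fedora lands in the same case as its parent.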

Once snap has been installed and started, you can list all available packages in the snap store as shown.

$ snap find

To search for a particular package, just specify package name as shown.

$ snap find package-name

To install a snap package, specify the package by name.

$ sudo snap install package-name

To update an installed snap package, specify the package by name.

$ sudo snap refresh package-name

To remove an installed snap package, run:

$ sudo snap remove package-name
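The install and refresh commands can be combined into one helper that picks the right one. This is only a sketch: the function name ensure_snap is invented for this example, and it relies on snap list exiting with a non-zero status when the named package is not installed.

```shell
#!/bin/sh
# Sketch: install a snap if it is missing, otherwise refresh it.
# ensure_snap is a hypothetical helper name, not a snap subcommand.

ensure_snap() {
    pkg="$1"
    if ! command -v snap >/dev/null 2>&1; then
        echo "snap command not found; install snapd first" >&2
        return 1
    fi
    if snap list "$pkg" >/dev/null 2>&1; then
        # Already installed: pull the latest revision.
        sudo snap refresh "$pkg"
    else
        sudo snap install "$pkg"
    fi
}

# Usage:
#   ensure_snap package-name
```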

To learn more about snap packages, go through Snapcraft’s official page or head on out to the Snap Store to explore the bunch of apps that are already available.

I feel like Snaps are growing to be more like the Google Play Store: a central place where Linux users, irrespective of which distro they’re running, can come to get apps that just work, and do so with little to no fuss at all. At the moment, there are thousands of snaps that are used by millions of people across 41 Linux distributions. This number is only going to grow bigger. If there’s ever a good time to switch to Linux, it is now. The platform really has come of age.

5 Easy Tips for Linux Web Browser Security | Linux.com

If you use your Linux desktop and never open a web browser, you are a special kind of user. For most of us, however, a web browser has become one of the most-used digital tools on the planet. We work, we play, we get news, we interact, we bank… the number of things we do via a web browser far exceeds what we do in local applications. Because of that, we need to be cognizant of how we work with web browsers, and do so with a nod to security. Why? Because there will always be nefarious sites and people, attempting to steal information. Considering the sensitive nature of the information we send through our web browsers, it should be obvious why security is of utmost importance.

So, what is a user to do? In this article, I’ll offer a few basic tips, for users of all sorts, to help decrease the chances that your data will end up in the hands of the wrong people. I will be demonstrating on the Firefox web browser, but many of these tips cross the application threshold and can be applied to any flavor of web browser.

1. Choose Your Browser Wisely

Although most of these tips apply to most browsers, it is imperative that you select your web browser wisely. One of the more important aspects of browser security is the frequency of updates. New issues are discovered quite frequently and you need to have a web browser that is as up to date as possible. Of major browsers, here is how they rank with updates released in 2017:

- Chrome released 8 updates (with Chromium following up with numerous security patches throughout the year).

- Firefox released 7 updates.

- Edge released 2 updates.

- Safari released 1 update (although Apple does release 5-6 security patches yearly).

But even if your browser of choice releases an update every month, if you (as a user) don’t upgrade, that update does you no good. This can be problematic with certain Linux distributions. Although many of the more popular flavors of Linux do a good job of keeping web browsers up to date, others do not. So, it’s crucial that you manually keep on top of browser updates. This might mean your distribution of choice doesn’t include the latest version of your web browser of choice in its standard repository. If that’s the case, you can always manually download the latest version of the browser from the developer’s download page and install from there.

If you like to live on the edge, you can always use a beta or daily build version of your browser. Do note that a daily build or beta version comes with the possibility of unstable software. Say, however, you’re okay with using a daily build of Firefox on an Ubuntu-based distribution. To do that, add the necessary repository with the command:

sudo apt-add-repository ppa:ubuntu-mozilla-daily/ppa

Update apt and install the daily Firefox with the commands:

sudo apt-get update

sudo apt-get install firefox

What’s most important here is to never allow your browser to get far out of date. You want to have the most updated version possible on your desktop. Period. If you fail this one thing, you could be using a browser that is vulnerable to numerous issues.
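On a Debian or Ubuntu-based system, one way to see whether the browser has fallen behind is to compare the Installed and Candidate lines printed by apt-cache policy. The sketch below does only that comparison. The function name update_pending is made up for this example, and a difference between the two versions is treated as a pending update, which is a simplification.

```shell
#!/bin/sh
# Sketch: read `apt-cache policy <package>` output on stdin.
# Exit status: 0 = installed version differs from the candidate,
#              1 = installed version equals the candidate,
#              2 = package not installed or output not understood.
# update_pending is a hypothetical helper name.

update_pending() {
    awk '
        $1 == "Installed:" { inst = $2 }
        $1 == "Candidate:" { cand = $2 }
        END {
            if (inst == "" || cand == "" || inst == "(none)") exit 2
            if (inst == cand) exit 1
            exit 0
        }'
}

# Usage (after running: sudo apt-get update):
#   apt-cache policy firefox | update_pending && echo "Firefox update pending"
```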

2. Use A Private Window

Now that you have your browser updated, how do you best make use of it? If you happen to be of the really concerned type, you should consider always using a private window. Why? Private browser windows don’t retain your data: No passwords, no cookies, no cache, no history… nothing. The one caveat to browsing through a private window is that (as you probably expect), every time you go back to a web site, or use a service, you’ll have to re-type any credentials to log in. If you’re serious about browser security, never saving credentials should be your default behavior.

This leads me to a reminder that everyone needs: Make your passwords strong! In fact, at this point in the game, everyone should be using a password manager to store very strong passwords. My password manager of choice is Universal Password Manager.

3. Protect Your Passwords

For some, having to retype those passwords every single time might be too much. So what do you do if you want to protect those passwords, while not having to type them constantly? If you use Firefox, there’s a built-in tool, called Master Password. With this enabled, none of your browser’s saved passwords are accessible, until you correctly type the master password. To set this up, do the following:

- Open Firefox.

- Click the menu button.

- Click Preferences.

- In the Preferences window, click Privacy & Security.

- In the resulting window, click the checkbox for Use a master password (Figure 1).

- When prompted, type and verify your new master password (Figure 2).

- Close and reopen Firefox.

4. Know your Extensions

There are plenty of privacy-focused extensions available for most browsers. What extensions you use will depend upon what you want to focus on. For myself, I choose the following extensions for Firefox:

- Firefox Multi-Account Containers – Allows you to configure certain sites to open in a containerized tab.

- Facebook Container – Always opens Facebook in a containerized tab (Firefox Multi-Account Containers is required for this).

- Avast Online Security – Identifies and blocks known phishing sites and displays a website’s security rating (curated by the Avast community of over 400 million users).

- Mining Blocker – Blocks all CPU-Crypto Miners before they are loaded.

- PassFF – Integrates with pass (A UNIX password manager) to store credentials safely.

- Privacy Badger – Automatically learns to block trackers.

- uBlock Origin – Blocks trackers based on known lists.

Of course, you’ll find plenty more security-focused extensions for each of the major browsers.

Not every web browser offers extensions. Some, such as Midori, offer a limited number of built-in plugins that can be enabled or disabled (Figure 3). However, you won’t find third-party plugins available for the majority of these lightweight browsers.

5. Virtualize

For those that are concerned about releasing locally stored data to prying eyes, one option would be to only use a browser on a virtual machine. To do this, install the likes of VirtualBox, install a Linux guest, and then run whatever browser you like in the virtual environment. If you then apply the above tips, you can be sure your browsing experience will be safe.

The Truth of the Matter

The truth is, if the machine you are working from is on a network, you’re never going to be 100% safe. However, if you use that web browser intelligently, you’ll get more bang out of your security buck and be less prone to having data stolen. The silver lining with Linux is that the chances of getting malicious software installed on your machine are far lower than if you were using another platform. Just remember to always use the latest release of your browser, keep your operating system updated, and use caution with the sites you visit.

How To Install Atom Text Editor on Ubuntu 18.04

Atom is an open source cross-platform code editor developed by GitHub. It has a built-in package manager, embedded Git control, smart autocompletion, syntax highlighting and multiple panes.

Under the hood Atom is a desktop application built on Electron using HTML, JavaScript, CSS, and Node.js.

The easiest and recommended way to install Atom on Ubuntu machines is to enable the Atom repository and install the Atom package through the command line.

Although this tutorial is written for Ubuntu 18.04 the same instructions apply for Ubuntu 16.04 and any Debian based distribution, including Debian, Linux Mint and Elementary OS.

Prerequisites

The user you are logging in as must have sudo privileges to be able to install packages.

Installing Atom on Ubuntu

Perform the following steps to install Atom on your Ubuntu system:

- Start by updating the packages list and install the dependencies by typing:

sudo apt update

sudo apt install software-properties-common apt-transport-https wget

- Next, import the Atom Editor GPG key using the following wget command:

wget -q https://packagecloud.io/AtomEditor/atom/gpgkey -O- | sudo apt-key add -

And enable the Atom repository by typing:

sudo add-apt-repository "deb [arch=amd64] https://packagecloud.io/AtomEditor/atom/any/ any main"

- Once the repository is enabled, install the latest version of Atom with:

sudo apt install atom

Starting Atom

Now that Atom is installed on your Ubuntu system, you can launch it either from the command line by typing atom or by clicking on the Atom icon (Activities -> Atom).
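Before launching the editor, you may want to confirm that the installation put a launcher on your PATH. The snippet below is a minimal sketch that assumes the launcher is called atom and that it accepts a --version flag. The function name atom_status is made up for this example.

```shell
#!/bin/sh
# Sketch: report the installed Atom version, or fail if no launcher is found.
# atom_status is a hypothetical helper name.

atom_status() {
    if command -v atom >/dev/null 2>&1; then
        atom --version
    else
        echo "atom not found on PATH" >&2
        return 1
    fi
}

# Usage:
#   atom_status
```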

When you start the Atom editor for the first time, a window like the following should appear:

You can now start installing themes and extensions and configuring Atom according to your preferences.

Upgrading Atom

To upgrade your Atom installation when new releases are published, you can use the normal upgrade procedure of the apt package manager:

sudo apt update

sudo apt upgrade

Conclusion

You have successfully installed Atom on your Ubuntu 18.04 machine. To learn more about how to use Atom, from beginner basics to advanced techniques, visit their official documentation page.

If you have any questions, please leave a comment below.

Official Google Twitter account hacked in Bitcoin scam

Source: Naked Security/Sophos


The epidemic of Twitter-based Bitcoin scams took another twist this week as attackers tweeted scams directly from two verified high-profile accounts. Criminals sent posts from both Google’s G Suite account and Target’s official Twitter account. Cryptocurrency giveaway scams work by offering money to victims. There’s a catch, of course: They must first send a small amount of money to verify their address. The money in return never shows up and the attackers cash out.

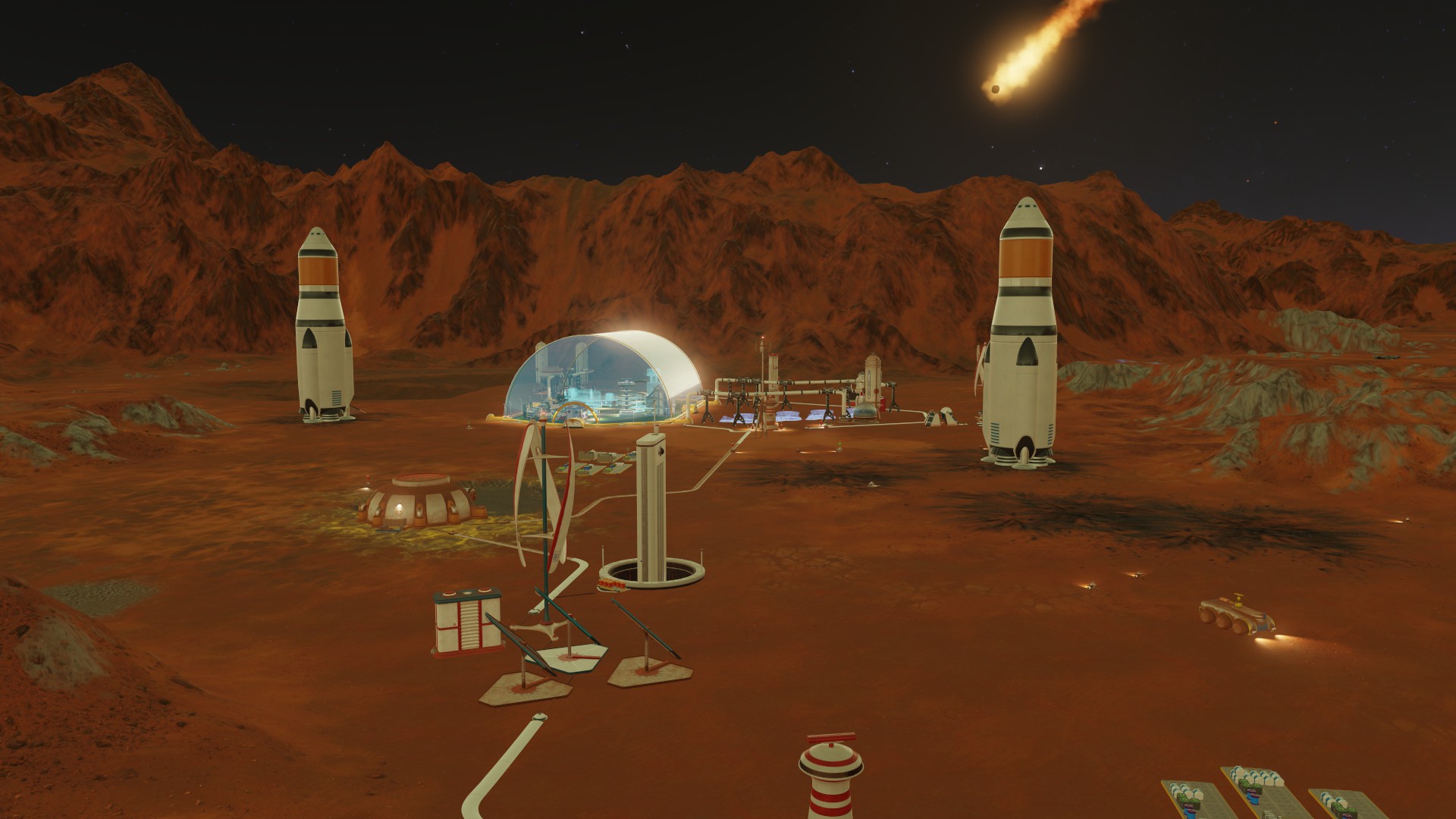

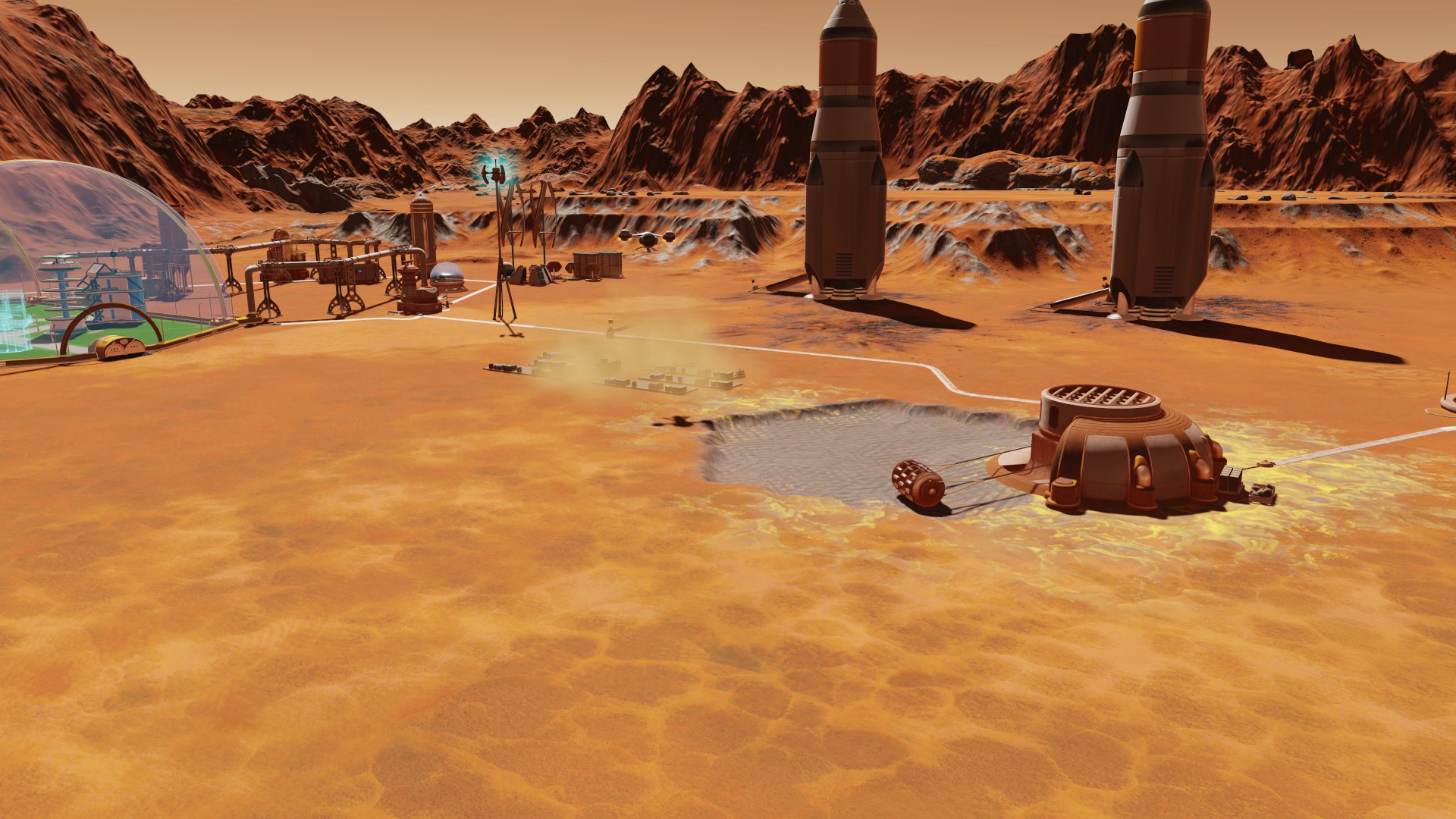

Surviving Mars: Space Race expansion and Gagarin free update have released, working well on Linux

Haemimont Games along with Paradox Interactive have released the Surviving Mars: Space Race expansion today and it’s great.

Note: DLC key provided by TriplePoint PR.

The Surviving Mars: Space Race expansion expands the game in some rather interesting ways. One of the biggest obviously being the AI controlled colonies which are optional. Being able to trade with them, deal with distress calls and do covert ops against them has certainly added an interesting layer into the game. While I enjoyed the game anyway, this actually gives it a little bit more of a purpose outside of trying to stave off starvation and not get blown into tiny pieces by meteorites. It’s interesting though, that this could pave the way for a full multiplayer feature, since they have things you can actually do with/against other colonies now. Although, you can’t directly view AI colonies, only see them on the world map which is a bit of a shame.

There’s also the free Gagarin content update which has released at the same time, which includes some interesting goals from each Sponsor. They each have their own special list of goals for you to achieve, which will give you rewards for completing them. For example, with the International Mars Mission sponsor, one such goal is to have a colonist born on Mars which will give you a bunch of supply pods for free. They’re worth doing, but not essential.

Another fun free feature is planetary anomalies, which require you to send a rocket stocked with Drones, Rovers or Colonists across Mars, outside of your colony, to investigate. They’re pretty good too, since they offer some pretty big rewards at times. There are also Supply Pods now, which cost a bit more, but you don’t need to wait for them to get prepped, so they’re really good in an emergency and can end up saving your colony should the worst happen.

Bringing Surviving Mars closer to games like Stellaris, there’s also now special events that will happen. The game was a little, how do I put it, empty? Empty is perhaps too harsh, it’s hard to explain properly. I enjoyed the game in the initially released form, but it did feel lacking in places. Not so much now since there’s around 250 events that will come up at various points throughout the game. Some good, some bad, some completely terrible and so it just makes the game a lot more fresh. Considering this was added in free, it’s quite a surprise. It goes to show how much they care about the game I think.

I was actually having the game freeze up on me a few times in previous versions. I’ve put many hours into this latest update and haven’t seen a single freeze, so that’s really welcome. The game has been running like an absolute dream on maximum settings, incredibly smooth and (as weird as it is to say this about Mars) it looks great too. On top of that, the Linux version got a fix for switching between fullscreen and windowed mode with the patch.

One thing to note, is that there’s a few new keybinds and so I do suggest resetting them or checking them over as I had one or two that were doubled up.

As a whole, the game has changed quite dramatically with this expansion and free update. I liked it a lot before, now I absolutely love it. Even going with just the free update, it’s so much more worthwhile playing! I’m very content with it and I plan to play a lot more in my own personal free time now.

This exciting expansion will be available from Humble Store, GOG and Steam. It’s also having a free weekend on Steam.

Canonical Extends Ubuntu 18.04 LTS Linux Support to 10 Years

BERLIN — In a keynote at the OpenStack Summit here, Mark Shuttleworth, founder and CEO of Canonical Inc and Ubuntu, detailed the progress made by his Linux distribution in the cloud and announced new extended support.

Ubuntu 18.04 LTS (Long Term Support) debuted back on April 26, providing new server and cloud capabilities. An LTS release comes with five years of support, but during his keynote Shuttleworth announced that 18.04 would have support available for up to 10 years.

“I’m delighted to announce that Ubuntu 18.04 will be supported for a full 10 years,” Shuttleworth said. “In part because of the very long time horizons in some of industries like financial services and telecommunications but also from IOT where manufacturing lines for example are being deployed that will be in production for at least a decade.”

OpenStack

Long-term, stable support for the OpenStack cloud is something that Shuttleworth has been committed to for some time. In April 2014, the OpenStack Icehouse release came out and it is still being supported by Canonical.

“The OpenStack community is an amazing community and it attracts amazing technology, but that won’t be meaningful if it doesn’t deliver for everyday businesses,” Shuttleworth said. “We actually manage more OpenStack clouds for more different industries, more different architectures than any other company.”

Shuttleworth said that when Icehouse was released, he committed to supporting it for five years, because long term support matters.

“What matters isn’t day two, what matters is day 1,500,” Shuttleworth said. “Living with OpenStack scaling it, upgrading it, growing it, that is important to master to really get the value for your business.”

IBM Red Hat

Shuttleworth also provided some color about his views on the $34 billion acquisition of Red Hat by IBM, which was announced on Oct. 28.

“I wasn’t surprised to see Red Hat sell,” Shuttleworth said. “But I was surprised at the amount of debt that IBM took on to close the deal.”

He added that he would be worried for IBM, except for the fact that the public cloud is a huge opportunity.

“I guess it makes sense if you think of IBM being able to steer a large amount of on prem RHEL workloads to the cloud, then that deal might make sense,” he said.

Sean Michael Kerner is a senior editor at ServerWatch and InternetNews.com. Follow him on Twitter @TechJournalist.

Download GNOME Shell Extensions Linux 3.31.2

GNOME Shell Extensions is an open source and freely distributed project that provides users with a modest collection of extensions for the GNOME Shell user interface of the GNOME desktop environment. It contains a handful of extensions, carefully selected by technical members of the GNOME project. These extensions are designed to enhance users’ experience with the GNOME desktop environment.

Designed for GNOME

In general, GNOME Shell extensions can be used to customize the look and feel of the controversial desktop environment, in other words, to make your life a lot easier when working in GNOME. While the software is distributed as part of the GNOME project and is installable from the default software channels of most Linux distributions, it is also available for download as a source archive, so advanced users can configure, compile and install the software on any Linux OS.

Includes a wide variety of extensions for GNOME Shell

At the moment, the following GNOME-Shell extensions are included in this package: Alternate Tab, Apps Menu, Auto Move Windows, Drive Menu, Launch New Instance, Native Window Placement, Places Menu, systemMonitor, User Theme, Window List, windowsNavigator, and Workspace Indicator.

While some of them are self-explanatory, like systemMonitor, Window List, Workspace Indicator, Apps Menu or Alternate Tab, we should mention that the User Theme allows you to add a custom theme (skin) for the GNOME Shell, and Alternate Tab replaces the default ALT+TAB functionality with a sophisticated one.

In addition, the windowsNavigator extension allows you to select windows and workspaces in the GNOME Shell overlay mode using your keyboard, Native Window Placement will arrange windows in the overview mode in a more compact way, and Auto Move Windows will automatically move apps to a specific workspace when they are opened.
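To see whether one of these extensions is currently switched on, you can query the GNOME Shell settings from a terminal. The sketch below assumes the list of active extensions is stored under the org.gnome.shell enabled-extensions key and that the User Theme extension uses the UUID user-theme@gnome-shell-extensions.gcampax.github.com. Check the UUIDs on your own system, since they may differ between releases. The function name extension_enabled is made up for this example.

```shell
#!/bin/sh
# Sketch: succeed if the given extension UUID appears in the list of
# enabled GNOME Shell extensions. extension_enabled is a hypothetical name.

extension_enabled() {
    uuid="$1"
    # gsettings prints the list as ['uuid-one', 'uuid-two', ...]
    gsettings get org.gnome.shell enabled-extensions 2>/dev/null |
        grep -qF "'$uuid'"
}

# Usage (the UUID is an assumption; verify it on your system):
#   extension_enabled 'user-theme@gnome-shell-extensions.gcampax.github.com' \
#       && echo "User Theme is enabled"
```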

Bottom line

Overall, GNOME Shell Extensions is yet another important component of the GNOME desktop environment, especially when using the GNOME Shell user interface, making your life much easier and helping you achieve your goals faster. However, we believe that there are many other useful extensions out there that should be included in this package.

Install Etcher on Linux | Linux Hint

Etcher is a free tool for flashing microSD cards with operating system images for Raspberry Pi single board computers. The user interface of Etcher is simple and really easy to use. It is a must-have tool if you’re working on a Raspberry Pi project. I highly recommend it. Etcher is available for Windows, macOS and Linux, so you get the same user experience no matter which operating system you’re using.

In this article, I will show you how to install and use Etcher on Linux. I will be using Debian 9 Stretch for the demonstration. But this article should work on any other Debian based Linux distributions such as Ubuntu without any modification. With slight modification, it should work on other Linux distributions as well. So, let’s get started.

You can download Etcher from the official website of Etcher. First, go to the official website of Etcher at https://www.balena.io/etcher/ and you should see the following page. You can click on the download link as marked in the screenshot below to download Etcher for Linux but it may not work all the time. It did not work for me.

If that is the case for you as well, scroll down a little bit and click on the link as marked in the screenshot below.

Your browser should prompt you to save the file. Just click on Save File.

Your download should start as you can see in the screenshot below.

Installing Etcher on Linux:

Now that you have downloaded Etcher for Linux, you are ready to install Etcher on Linux. In order to run Etcher on Linux, you need to have zenity or Xdialog or kdialog package installed on your desired Linux distribution. On Ubuntu, Debian, Linux Mint and other Debian based Linux distributions, it is a lot easier to install zenity as zenity is available in the official package repository of these Linux distributions. As I am using Debian 9 Stretch for the demonstration, I will cover Debian based distributions here only.

First, update the package repository cache of your Ubuntu or Debian machine with the following command:

$ sudo apt update

Now, install zenity with the following command:

$ sudo apt install zenity

Now, press y and then press <Enter> to continue.

zenity should be installed.
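If you want to confirm that at least one of the three dialog helpers is available before running Etcher, a quick check like the one below will do. It is only a sketch: the function name find_dialog_tool is made up for this example, and it simply looks for each program on your PATH in the order given.

```shell
#!/bin/sh
# Sketch: print the first dialog helper found on PATH, or fail if none is.
# find_dialog_tool is a hypothetical helper name.

find_dialog_tool() {
    for tool in zenity Xdialog kdialog; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            return 0
        fi
    done
    echo "none of zenity, Xdialog or kdialog found" >&2
    return 1
}

# Usage:
#   find_dialog_tool || sudo apt install zenity
```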

Now, navigate to the ~/Downloads directory where you downloaded Etcher with the following command:

$ cd ~/Downloads

As you can see, the Etcher zip archive file is here.

Now, unzip the file with the following command:

$ unzip etcher-electron-1.4.6-linux-x64.zip

The zip file should be extracted and a new AppImage file should be generated as you can see in the screenshot below.

Now, move the AppImage file to the /opt directory with the following command:

$ sudo mv etcher-electron-1.4.6-x86_64.AppImage /opt

Now, run Etcher with the following command:

$ /opt/etcher-electron-1.4.6-x86_64.AppImage

You should see the following dialog box. Just click on Yes.

Etcher should start as you can see in the screenshot below.

Now, you don’t have to start Etcher from the command line anymore. You can start Etcher from the Application Menu as you can see in the screenshot below.

Using Etcher on Linux:

You can now flash microSD cards using Etcher for your Raspberry Pi. First, open Etcher and click on Select image.

A file picker should be opened. Now, select the operating system image file that you want to flash your microSD card with and click on Open.

The image should be selected.

Now, insert the microSD card or USB storage device that you want to flash with Etcher. It may be selected by default. If you have multiple USB storage devices or microSD cards attached to your computer, and the right one is not selected by default, then you can click on Change as marked in the screenshot below to change it.

Now, select the one you want to flash using Etcher from the list and click on Continue.

NOTE: You can also flash multiple USB devices or microSD cards at the same time with Etcher. Just select the ones that you want to flash from the list and click on Continue.

It should be selected as you can see in the screenshot below.

You can also change Etcher settings to control how Etcher will flash the microSD cards or USB storage devices as well. To do that, click on the gear icon as marked in the screenshot below.

The Etcher settings panel is very clear and easy to use. All you have to do is check or uncheck the options you want and click on the Back button. Normally you don’t have to change anything here; the default settings are good. But unchecking Validate write on success will save you a lot of time, because this option checks whether everything was written to the microSD cards or USB storage devices correctly. That check puts a lot of stress on your microSD cards or USB devices and takes a long time to complete. Unless you have a faulty microSD card or USB storage device, unchecking this option should do you no harm. It’s up to you to decide what you want.
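If you do turn validation off, you can still spot-check a card by hand afterwards. The sketch below shows the general idea of such a check: read back as many bytes from the device as the image contains and compare them with the image file. It does not show how Etcher itself validates a write. The function name verify_flash is made up for this example, /dev/sdX is a placeholder for your own device, and the sketch assumes an uncompressed image file.

```shell
#!/bin/sh
# Sketch: compare an image file with the first bytes of a flashed target.
# verify_flash is a hypothetical helper name.

verify_flash() {
    image="$1"
    target="$2"
    # Image size in bytes; the arithmetic expansion strips any padding
    # that wc may add around the number.
    size=$(( $(wc -c < "$image") ))
    # The card is usually larger than the image, so compare only the
    # first $size bytes of the target.
    head -c "$size" "$target" | cmp -s - "$image"
}

# Usage (run as root so the raw device can be read; /dev/sdX is a placeholder):
#   verify_flash image.img /dev/sdX && echo "card matches image"
```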

Finally, click on Flash!

Etcher should start flashing your microSD card or USB storage device.

Once the microSD card or the USB storage device is flashed, you should see the following window. You can now close Etcher and eject your microSD card or USB storage device and use it on your Raspberry Pi device.

So that’s how you install and use Etcher on Linux (Ubuntu/Debian specifically). Thanks for reading this article.