This article explains how to embed Google Calendar on your Linux desktop background. It also includes some customization hints.

Conky and gcalcli are used to display your Google Calendar events on top of your desktop wallpaper:

- Conky is a tool that displays information on your desktop. It can act as a system monitor, with built-in functions for displaying CPU usage, RAM usage, and so on, and it can also display the output of custom commands.

- gcalcli is a command line interface for Google Calendar. Using OAuth2 to connect with your Google account, the tool can list your Google Calendars, add, edit and delete calendar events, and much more.

Follow the steps below to install Conky and gcalcli, and use these tools to embed Google Calendar on the desktop background. There are optional steps for customizing Conky, the calendar colors, and more, as well as adding this widget to startup.

The Conky configuration file in this article uses the Conky 1.10 syntax, so you’ll need Conky 1.10 or newer to use it.
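To check the installed version before continuing, a small shell sketch along these lines may help. The version_ge helper is a hypothetical name introduced here, it relies on the version sort (-V) from GNU coreutils, and the parsing assumes the first line of conky --version looks like "conky 1.10.8 compiled ...", which may differ on your build.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if version $1 is greater than or equal to version $2.
# Relies on the version sort (-V) provided by GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Only query Conky if it is installed.
if command -v conky >/dev/null 2>&1; then
    # Assumption: the version number is the second word of the first output line.
    installed=$(conky --version | head -n 1 | awk '{print $2}')
    if version_ge "$installed" "1.10"; then
        echo "Conky $installed supports the 1.10 configuration syntax"
    else
        echo "Conky $installed is too old for the configuration in this article"
    fi
fi
```

If the version cannot be parsed, the comparison simply fails and the script reports the version as too old, so treat the result as a hint rather than a guarantee.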

1. Install gcalcli and Conky.

In Debian, Ubuntu or Linux Mint, use:

sudo apt install gcalcli conky-all

You’ll also need to install the Ubuntu Mono font (or you can change the font in the .conkyrc code below). This should be installed by default in Ubuntu.

2. Connect gcalcli with your Google account.

Running any gcalcli command starts the OAuth2 authentication process. Let’s run the list command, like this:

gcalcli list

gcalcli should open a new page in your default web browser which asks if you want to authorize gcalcli with your Google account. Allow it and proceed to the next step.

3. Create and populate the Conky configuration file (~/.conkyrc).

Create a file called .conkyrc in your home folder (use Ctrl + H to toggle between hiding and showing hidden files and folders) and paste the following in this file:

conky.config = {
    background = true,
    update_interval = 1.5, -- seconds between refreshes
    cpu_avg_samples = 2,
    net_avg_samples = 2,
    out_to_console = false,
    override_utf8_locale = true,
    double_buffer = true,
    no_buffers = true,
    text_buffer_size = 32768,
    imlib_cache_size = 0,
    own_window = true,
    own_window_type = 'desktop',
    own_window_argb_visual = true,
    own_window_argb_value = 120, -- background opacity, 0 (transparent) to 255 (opaque)
    own_window_hints = 'undecorated,below,sticky,skip_taskbar,skip_pager',
    border_inner_margin = 10,
    border_outer_margin = 0,
    xinerama_head = 1, -- monitor on which the calendar is drawn
    alignment = 'top_right',
    gap_x = 90, -- horizontal distance from the screen edge
    gap_y = 90, -- vertical distance from the screen edge
    draw_shades = true,
    draw_outline = false,
    draw_borders = false,
    draw_graph_borders = false,
    use_xft = true,
    font = 'Ubuntu Mono:size=12',
    xftalpha = 0.8,
    uppercase = false,
    default_color = '#FFFFFF',
    own_window_colour = '#000000',
    minimum_width = 0,
    minimum_height = 0,
};

conky.text = [[

$

]];

Now run Conky with this configuration by typing this in a terminal:

conky

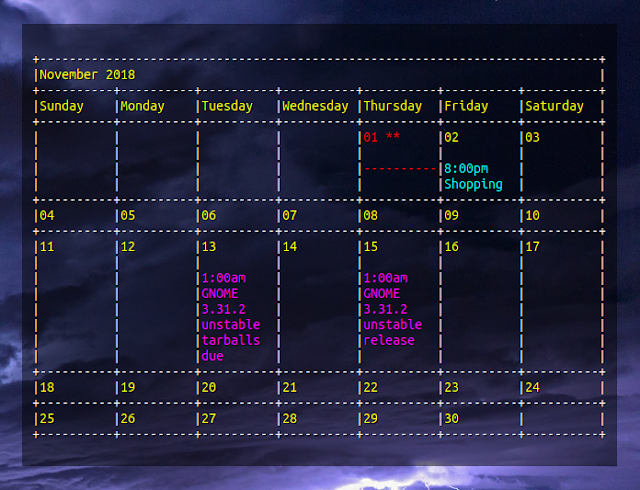

You should now see Google Calendar embedded in your desktop background, like this:

If you already have a Conky configuration, name the file .conkyrc2 (or .conkyrc3, etc.), and each time you see a “conky” command in this article (when running it or adding it to startup), append -c ~/.conkyrc2 (or whatever you’ve named the file). For example, to run a second Conky instance that uses ~/.conkyrc2 as its configuration file, use this command:

conky -c ~/.conkyrc2

In case you want to close all running Conky instances, use:

killall -9 conky

4. (Optional) Customize gcalcli and Conky.

I. Basic Conky configuration

You can modify the contents of the .conkyrc file to suit your needs. The Google Calendar displayed on your desktop using Conky should be automatically updated each time you save the .conkyrc configuration file. In case this does not happen, kill all running Conky processes by using killall -9 conky, then run Conky again.

For example, change the gap_x and gap_y values to move the calendar closer to or further from the top right corner of the screen. The top right corner position is set by the alignment = 'top_right' option, so change that to move the calendar to a different corner of the screen.

Most options are self-explanatory, like the font value, which is set to Ubuntu Mono in the Conky code above. Make sure you have the Ubuntu Mono font installed, or change the font value to a monospaced font you have installed on your system.

If you have multiple monitors and you want to move the calendar to a different monitor, change the xinerama_head value.

Other than that, the values used in the sample Conky configuration from step 3 should work as they are for most users. Consult the Conky help (conky --help) for more info.
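Numeric options such as gap_x and gap_y can also be changed from a terminal instead of a text editor. The following is only a sketch: set_conky_option is a hypothetical helper introduced here, it assumes GNU sed, and it only handles options that start a line and have a numeric value.

```shell
#!/bin/sh
# Hypothetical helper: set_conky_option FILE OPTION VALUE
# Rewrites the numeric value of an option that starts a line, e.g. "gap_x = 90,".
# Assumes GNU sed (-i edits the file in place, -E enables extended regexes).
set_conky_option() {
    sed -i -E "s/^([[:space:]]*${2}[[:space:]]*=[[:space:]]*)[0-9.]+/\1${3}/" "$1"
}

# Example calls (commented out so nothing is changed by accident):
# set_conky_option "$HOME/.conkyrc" gap_x 200
# set_conky_option "$HOME/.conkyrc" gap_y 50
```

Because the pattern is anchored to the start of a line, an option that shares a line with another one is left untouched unless it comes first.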

II. Changing the calendar colors

Using the .conkyrc code listed in step 3, the Google Calendar is displayed using the default gcalcli colors. The colors can be changed though.

For example, you can add --nocolor to the execpi line in ~/.conkyrc (the second-to-last line) so that gcalcli doesn’t use any colors and the text color comes from Conky, by changing the line to look like this:

$

Then you can specify the calendar text color by changing the default_color value (you can use hex or color names) in the ~/.conkyrc file. Here’s how it looks using default_color = 'green', for example:

This only allows using one color for the whole calendar though. If you want to change individual colors, like the color of the date, the now marker, etc., make sure you don’t add --nocolor to the execpi line, and instead add these options with the color you want to use:

--color_border: Color of line borders (default: 'white')

--color_date: Color for the date (default: 'yellow')

--color_freebusy: Color for free/busy calendars (default: 'default')

--color_now_marker: Color for the now marker (default: 'brightred')

--color_owner: Color for owned calendars (default: 'cyan')

--color_reader: Color for read-only calendars (default: 'magenta')

--color_writer: Color for writable calendars (default: 'green')

There aren’t many supported colors though. A comment on this bug report mentions black, red, green, yellow, blue, magenta, cyan and white as being supported.

For example, to change the calendar color for the now marker to blue, and the date color to white, while the other elements keep their default colors, you could change the execpi line to this:

$

III. gcalcli options

gcalcli has a large number of options. For example, the --monday option, which is included in our .conkyrc file, sets the first day of the week to Monday. Remove it from the execpi line (in the .conkyrc file) to set the first day of the week to Sunday.

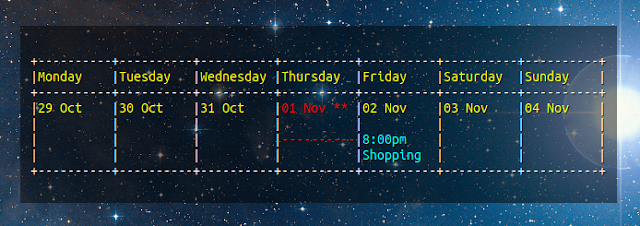

The calm option sets gcalcli to display the current month agenda in a calendar format. To display the current week instead of month, use calw instead of calm, like this:

$

This is how it will look on your desktop:

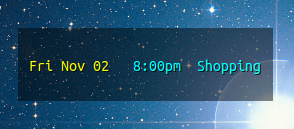

Another possible view is agenda, which by default starts at 12am on the current day (and displays events for the next 5 days), but can accept custom dates. Replace calm with agenda to use it, and also remove --monday if it’s there (there’s no need for it in this view, and gcalcli will throw an error), like this:

$

This is how it looks with only 1 event in the next 5 days:

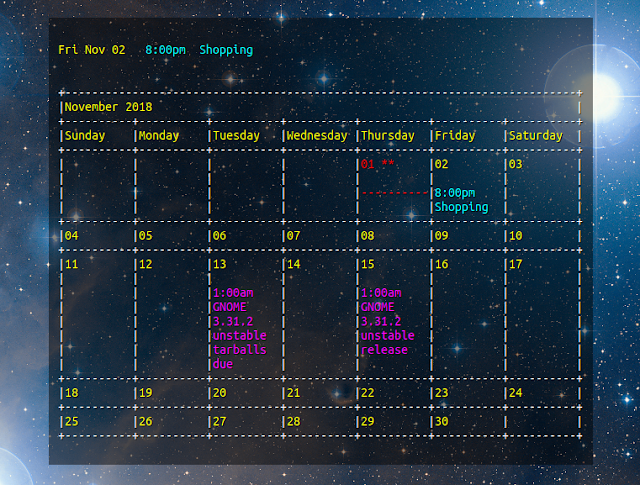

You can also display both the current month calendar and a 5-day agenda on top of it, by adding two execpi lines instead of one to the ~/.conkyrc file, like this:

conky.text = [[

$

$

]];

This is how it looks on the desktop:

For even more customization, check gcalcli --help and the GitHub project page.

As a side note, gcalcli is run using PYTHONIOENCODING=utf8 to avoid some possible issues with the calendar display; you can remove this if the calendar is displayed correctly for you. Also, I used the --nolineart gcalcli option, which disables line art, because Conky can’t display gcalcli’s line art properly.

5. (Optional) Add the Google Calendar Conky desktop widget to startup.

To add it to startup, open Startup Applications or equivalent from your application launcher, add a new startup program, enter Conky Google Calendar as its name, and use the following in the command field:

conky --daemonize --pause=5

Alternatively you can create a file called conky.desktop in ~/.config/autostart/ with the following contents:

[Desktop Entry]
Type=Application
Exec=conky --daemonize --pause=5
Hidden=false
NoDisplay=false
X-GNOME-Autostart-enabled=true
Name=Conky Google Calendar
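
If you prefer to create this file from a terminal, a minimal shell sketch could look like the following. The write_conky_autostart function name and its optional directory argument are hypothetical, introduced only for this example; the file contents are the ones listed above.

```shell
#!/bin/sh
# Hypothetical helper: write_conky_autostart [DIR]
# Writes conky.desktop into DIR (default: ~/.config/autostart),
# creating the directory first if it does not exist.
write_conky_autostart() {
    dir="${1:-$HOME/.config/autostart}"
    mkdir -p "$dir" || return 1
    cat > "$dir/conky.desktop" <<'EOF'
[Desktop Entry]
Type=Application
Exec=conky --daemonize --pause=5
Hidden=false
NoDisplay=false
X-GNOME-Autostart-enabled=true
Name=Conky Google Calendar
EOF
}

# Example call (commented out so nothing is written by accident):
# write_conky_autostart
```

Note that the function overwrites an existing conky.desktop in the target directory. If you use a separate configuration file such as ~/.conkyrc2, add -c ~/.conkyrc2 to the Exec line.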