High performance computing (HPC), the use of supercomputers and parallel processing techniques to solve complex computational problems, has traditionally been limited to large research institutions, academia, governments and massive enterprises. But now, advanced analytics applications built on artificial intelligence (AI), machine learning (ML), deep learning and cognitive computing are increasingly being used in the intelligence community, in engineering and across commercial industries.

The need to analyze massive amounts of data and to run transaction-intensive workloads is driving HPC into the business arena and making these tools mainstream across a variety of industries. Commercial users are adopting high performance applications for fraud detection, personalized medicine, manufacturing, smart cities, autonomous vehicles and many other areas. To run these workloads effectively and efficiently, SUSE has built a comprehensive and cohesive OS platform. In this blog post, I will outline five things you should know about SUSE solutions for AI over HPC.

Stronger partnerships

The first thing to know is how vital partnerships are to SUSE’s HPC business. While the SLE HPC product can be obtained through direct sales, it has historically been made available via our IHV and ISV partners. But obtaining the OS and associated HPC tools is only half of the story. Our key partnerships also provide opportunities to innovate and contribute to open source development in AI/ML/DL and leading-edge advanced analytics applications.

Hewlett Packard Enterprise’s HPC software includes open source, HPE-developed and commercial HPC software that is validated, integrated and performance-optimized for HPE systems. SUSE is the preferred HPE partner for Linux, HPC, OpenStack and Cloud Foundry solutions, and SUSE technology is embedded in every HPE ProLiant server to power the Intelligent Provisioning feature. We have several joint papers that describe how SUSE and HPE together deliver HPC power to enterprises.

Arm System on a Chip (SoC) partners are driving new HPC adoption in the modern data center. SUSE is helping transform the 64-bit Arm platform into an enterprise computing platform, having been the first commercial Linux distributor to fully support Arm servers. In fact, SUSE provides Arm HPC functionality as part of SLE HPC. The increased server density of the latest 64-bit Arm processors helps optimize overall infrastructure costs, making Arm-based supercomputers more affordable. Arm SoC partners include Marvell (formerly Cavium), AMD, HPE, Cray, MACOM, Huawei HiSilicon, Mellanox, Xilinx, Gigabyte, Qualcomm and more.

Cray builds its own Cray Linux Environment (CLE), an adaptive operating system purpose-built for HPC and designed for performance, reliability and compatibility. CLE is built on SUSE Linux Enterprise. Cray supercomputers continue to hold a significant share of the Top500 sites around the world, and Cray is a key player in HPC, producing both Intel-based and Arm-powered supercomputers.

Lenovo’s strategy is to provide open access to clusters running on the latest highly efficient processors. SUSE and Lenovo jointly defined the scope of the Lenovo HPC stack using SUSE HPC components. In turn, Lenovo created LiCO (Lenovo Intelligent Computing Orchestration), a premier AI/HPC package tailored to power AI/ML/DL workloads.

Those are just a few highlights of key partnerships for SUSE and HPC. Others include NVIDIA, Microsoft Azure, Fujitsu, Intel, Univa, Dell Technologies, Altair, ANSYS, MathWorks, Supermicro and Bright Computing. Another aspect of partnering in open source is our continued role as a major contributor to communities that guide parallel computing, including OpenHPC (where SUSE is a founding member), OpenMP and many others involved in shaping HPC tools.

More differentiators

The second thing to know is the clear and concise set of HPC platform differentiators. This list encompasses what’s available in the SUSE OS as well as for HPC storage and HPC in the cloud:

- SUSE Enterprise Storage is a Ceph-based, software-defined storage solution that provides easy-to-manage backup and archival storage for HPC environments

- SLE HPC is enabled for Microsoft Azure and Amazon Web Services (AWS)

- SLE HPC and associated HPC packages are fully supported on the AArch64 (Arm) and x86-64 architectures

- Supported HPC packages, such as Slurm for cluster workload management, are included with SLE HPC subscriptions. The same HPC Module also includes Ganglia for cluster monitoring, Open MPI, OpenBLAS, FFTW, HDF5, MUNGE, MVAPICH and more.

- SLE HPC is priced very competitively and uses a simple, one-price-per-cluster-node model

- SLE HPC provides ESPOS (Extended Service Pack Overlay Support) for longer service life for each service pack

- SUSE Linux Enterprise runs on roughly 40% of the top 100 HPC systems around the world

- SUSE Package Hub includes SUSE-curated and community-supported packages for HPC.

AI/ML focus

The third thing to know is our increased focus on the AI/ML market space and how we are providing the most efficient and effective HPC platform for these new workloads in a parallel computing environment. Technologies like cognitive computing, the Internet of Things and smart cities are powered by high performance computing and fueled by advanced data analytics. Businesses around the world are recognizing that a Linux-based HPC infrastructure is vital to supporting the analytics applications of tomorrow. We are also finding that HPC is no longer just for scientific research; it is being adopted across banking, healthcare, retail, utilities and manufacturing.

In healthcare, an HPC platform underlies applications such as AI for precision medicine, diagnosis and treatment planning, cancer research, genomics and drug research. In the automotive world, HPC is used for aerodynamic design, engine performance and timing, fuel consumption, safety systems and AI-driven autonomous operation. In manufacturing, HPC is vital for computational fluid dynamics, heat dissipation system design, AI-powered advanced robotics, automated systems and other high-performance designs. And in energy, HPC is the basis for airflow design in renewable energy, wind turbines and heating/cooling efficiency.

SUSE Linux Enterprise HPC is integral to a highly scalable parallel computing infrastructure for supporting AI/ML and analytics applications being used across industries.

Restructured product

The fourth thing to know is that we have restructured the SLE HPC product to make HPC easier to adopt, implement and maintain. Recent changes include:

- Introduced a simple, one-price-per-cluster-node model with significantly reduced list prices that can be used by IHVs, ISVs and direct sales

- SLE HPC is available for x86 and Arm HPC clusters

- SLE HPC has a new “level 3 support” SKU specifically for partners

- There are multiple service life options, including Extended Service Pack Overlay Support (ESPOS) and Long-Term Service Pack Support (LTSS)

- There are revised terms and conditions for smaller cluster sizes and increased clarity on defining compute nodes

- More frequent updates on demand for popular HPC packages, supported by SUSE

Growing market share

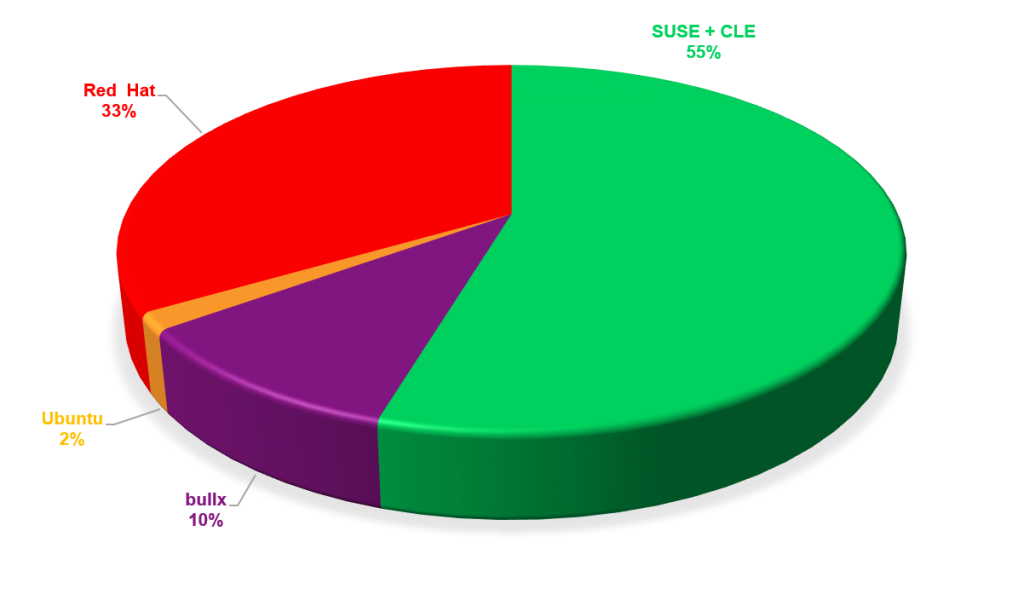

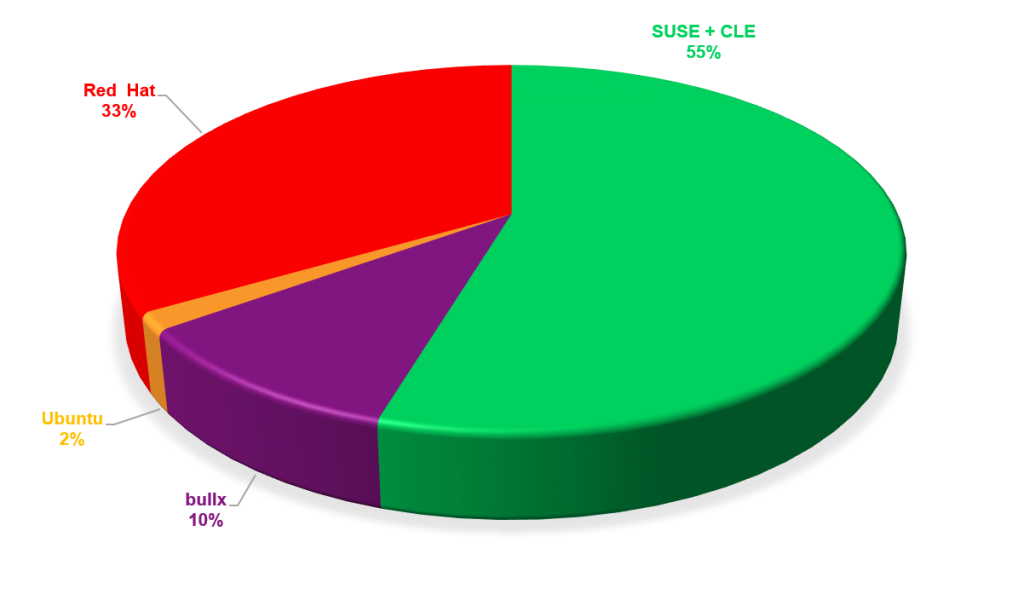

The fifth and final thing to know is that SUSE continues to grow its market share in the supercomputing arena, as evidenced by the latest Top500 report. Our analysis of the latest Top500 supercomputer sites report shows that half of the top 30 systems run SUSE, as do 40% of the top 100. One of the most compelling statistics is the vendor share of paid operating systems, a segment that covers 116 of the supercomputers on the Top500 list. More than half of the supercomputers in that paid Linux OS segment run SUSE.

From the same segment, we also calculated the paid OS “performance share”, which is based on the total number of cores across those 116 supercomputers. Here again, SUSE systems account for more than half of the paid Linux OS segment.
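
To make the difference between the two metrics concrete, here is a minimal Python sketch. It assumes that “vendor share” counts each system once and that “performance share” is each vendor’s fraction of the total core count in the paid OS segment, as described above. The vendor names and core counts are hypothetical placeholders, not figures from the Top500 report.

```python
from collections import Counter, defaultdict

# Hypothetical records: (OS vendor, total cores) for each system in the
# paid OS segment. The real segment has 116 systems; these four entries
# are illustrative placeholders, NOT Top500 data.
systems = [
    ("SUSE", 300_000),
    ("SUSE", 150_000),
    ("VendorB", 200_000),
    ("VendorC", 50_000),
]

def count_share(records):
    """Vendor share: fraction of systems run by each vendor (one vote per system)."""
    counts = Counter(vendor for vendor, _ in records)
    total = len(records)
    return {vendor: n / total for vendor, n in counts.items()}

def core_share(records):
    """Performance share (assumed definition): fraction of all cores per vendor."""
    cores = defaultdict(int)
    for vendor, n_cores in records:
        cores[vendor] += n_cores
    total = sum(cores.values())
    return {vendor: c / total for vendor, c in cores.items()}

print(count_share(systems))  # SUSE runs 2 of 4 systems
print(core_share(systems))   # SUSE runs 450,000 of 700,000 cores
```

With these made-up numbers, SUSE runs half of the systems but about 64% of the cores. This shows how a count-based share and a core-weighted share can differ for the same segment.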

I will be providing more specifics on all of the areas I talked about in this blog post over the next several months, but hopefully I’ve given you a decent “first look”.

With our open and highly collaborative approach through our strong partner ecosystem, we can help deliver the required knowledge, skills and capabilities that will shape the adoption of HPC and AI technologies today and power the new analytics applications of tomorrow.

For more information about SUSE’s solutions for HPC, please visit https://www.suse.com/programs/high-performance-computing/, https://www.suse.com/products/server/hpc/ and https://www.suse.com/solutions/hpc-storage/ .

Thanks for reading!

Resin.io changed its name to balena and released an open source version of its IoT fleet management platform for Linux devices called openBalena. Targets include the Intel NUC, Jetson TX2, Raspberry Pi, and a new RPi CM3 carrier called the balenaFin.