For a person new to Linux, getting productive is still not easy, even with user-friendly distributions like Ubuntu and Mint. The fact remains that some configuration will always have to be done manually by the user.

60 Linux Commands

To start with, the first thing a user should know is the basic commands of the terminal. The graphical desktop is only one layer on top of the system: even when no GUI is running, Linux remains fully usable through the shell. Commands are the means of interacting with the shell. For a beginner, some of the basic tasks are to:

- View the contents of a directory: a directory may contain visible and hidden files with different file permissions.

- Viewing blocks, HDD partition, External HDD

- Checking the integrity of Downloaded/Transferred Packages

- Converting and copying a file

- Know your machine name, OS and Kernel

- Viewing history

- Being root

- Make Directory

- Make Files

- Changing the file permission

- Own a file

- Install, Update and maintain Packages

- Uncompressing a file

- See current date, time and calendar

- Print contents of a file

- Copy and Move

- See the working directory for easy navigation

- Change the working directory, etc…

We described all of the above basic tasks in our first article.

That was the first article of this series. We tried to give a detailed description of these commands with explicit examples, which our readers appreciated highly in terms of likes, comments and traffic.

What comes after these initial commands? We moved on to the next part of the series, where we covered commands for tasks like:

- Finding a file in a given directory

- Searching a file with the given keywords

- Finding online documentation

- See the current running processes

- Kill a running process

- See the location of installed Binaries

- Starting, Ending, Restarting a service

- Making and removing of aliases

- View the disk and space usages

- Removing a file and/or directory

- Print/echo a custom output on standard output

- Changing your own password and, if you are root, other users’ passwords

- View Printing queue

- Compare two files

- Download a file, the Linux way (wget)

- Mount a block / partition / external HDD

- Compile and Run a code written in ‘C’, ‘C++’ and ‘Java’ Programming Language

This second article was again highly appreciated by the readers of Tecmint.com. It was elaborated with suitable examples and output.

After giving users a glimpse of the commands used by a middle-level user, we put our effort into a write-up of the commands used at the system administrator level.

In the third and last article of this series, we tried to cover the commands required for tasks like:

- Configuring Network Interface

- Viewing custom Network Related information

- Getting information about Internet Server with customisable switches and Results

- Digging DNS

- Knowing Your System uptime

- Sending an occasional Information to all other logged-in users

- Send text messages directly to a user

- Combination of commands

- Renaming a file

- Seeing the processes of a CPU

- Creating newly formatted ext4 partition

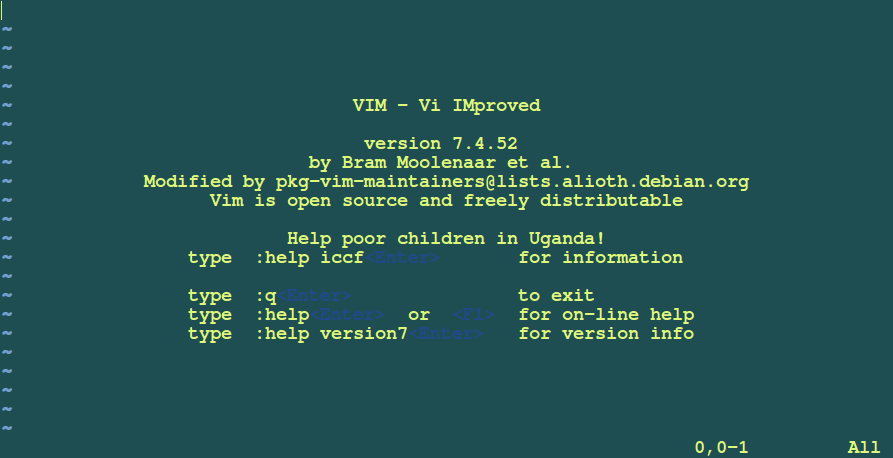

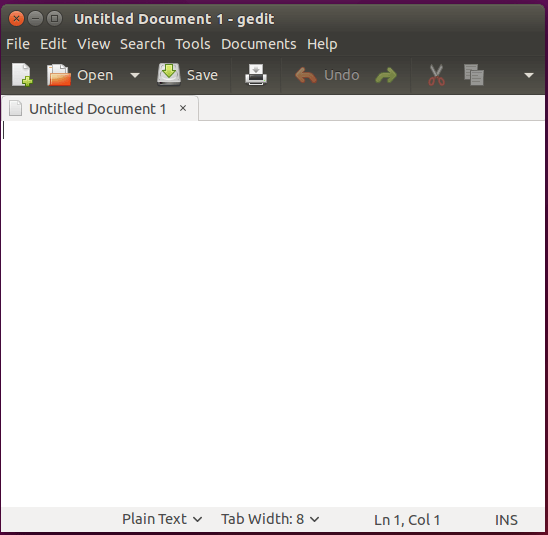

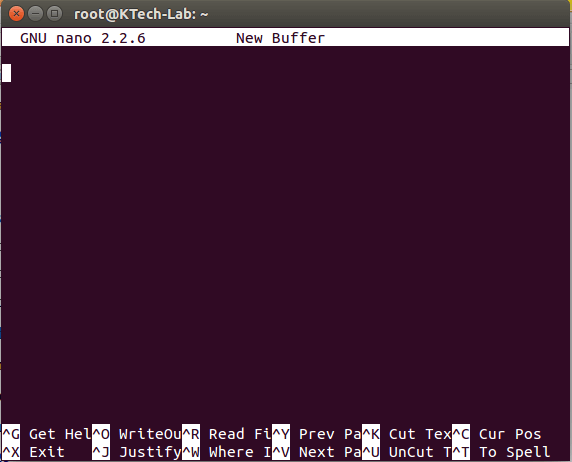

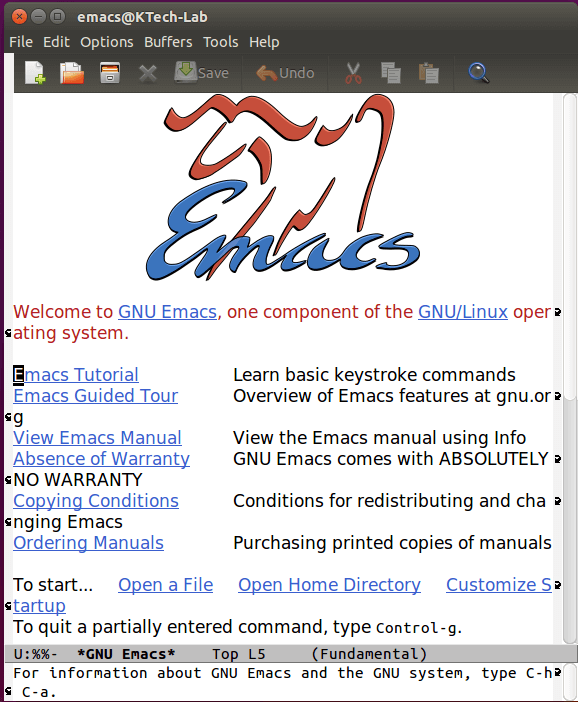

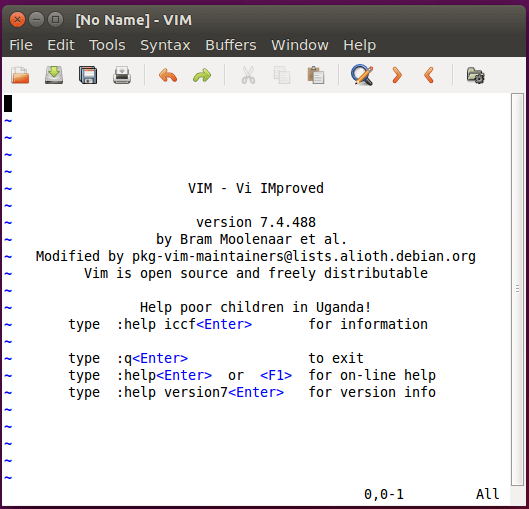

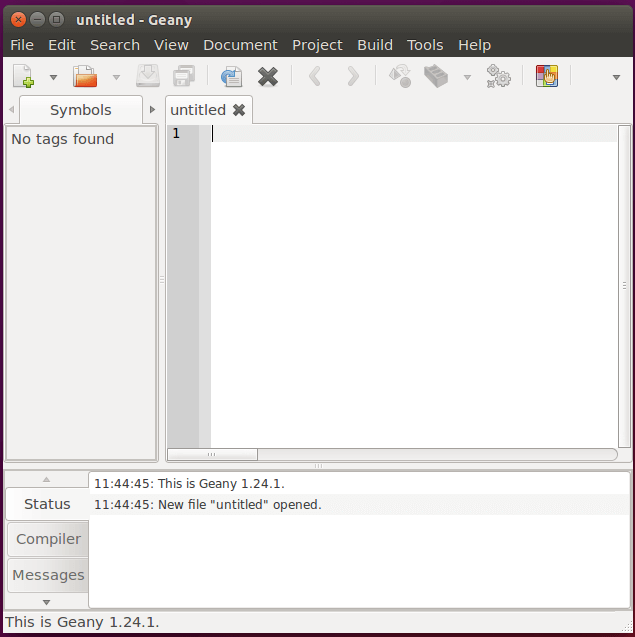

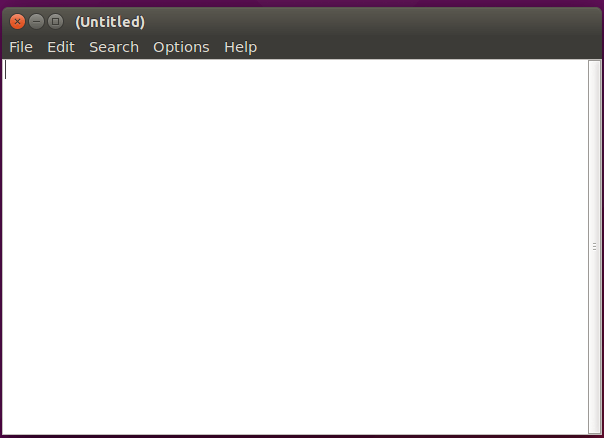

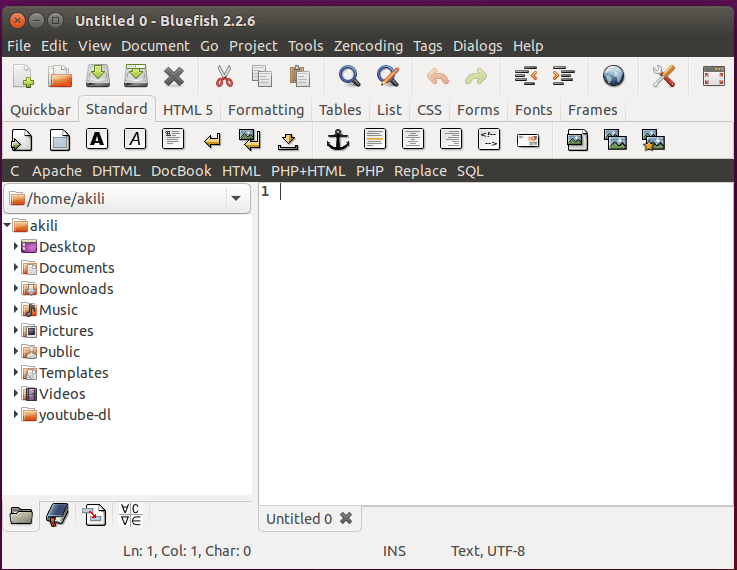

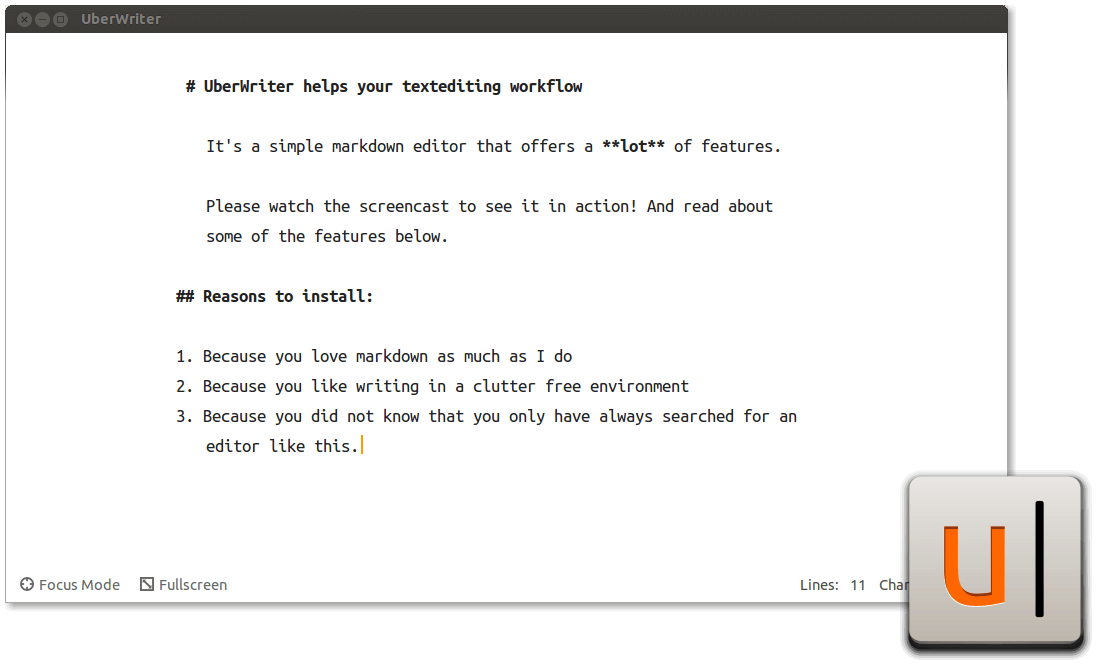

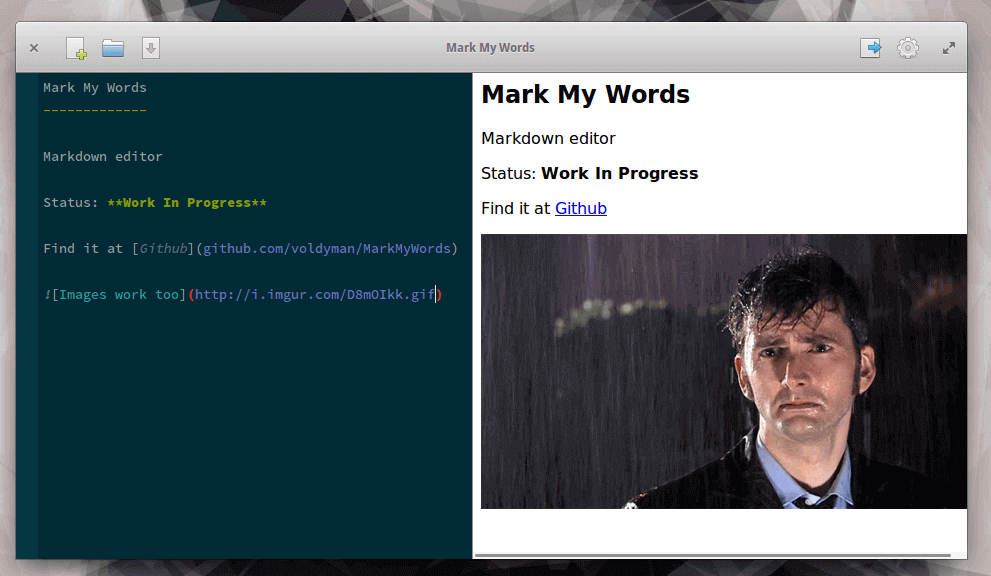

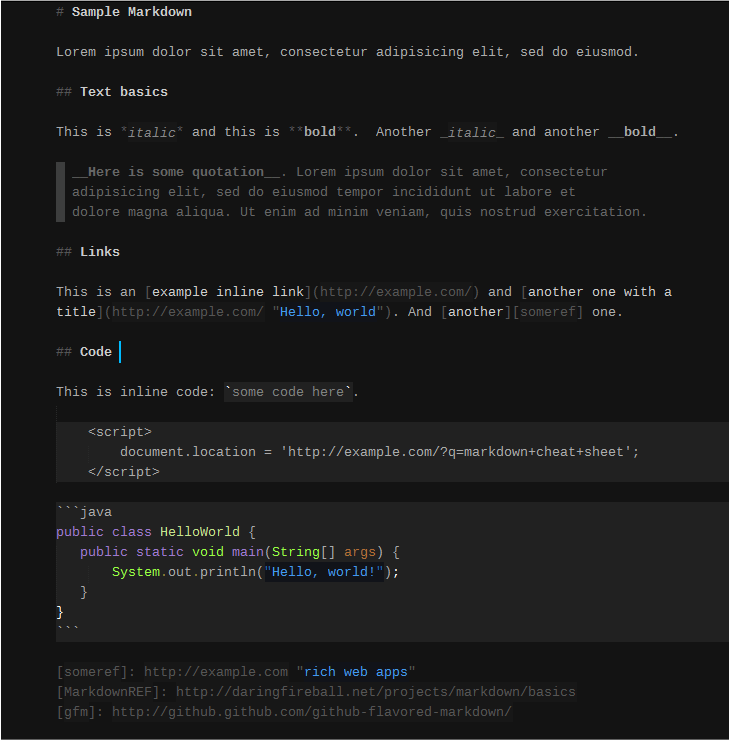

- Text File editors like vi, emacs and nano

- Copying a large file/folder with progress bar

- Keeping track of free and available memory

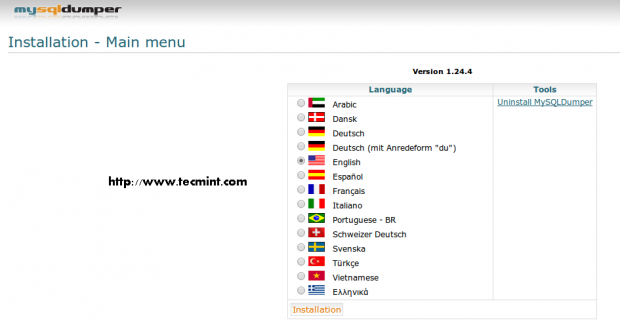

- Backup a mysql database

- Make difficult to guess – random password

- Merge two text files

- List of all the opened files

Writing this series and choosing the commands to go with each article was a little cumbersome. We chose 20 commands per article and hence gave a lot of thought to which commands should be included in, and which excluded from, each post. I personally selected the commands on the basis of their usefulness (as I use them and am used to them), from both a user’s and an administrator’s point of view.

This article aims to bring together all the articles of the series and give you, in one place, everything you can do with the commands covered in them.

Linux offers a very long list of commands. We have provided the 60 that are most commonly used; a user who knows these 60 commands can work in the terminal smoothly.

That’s all for now from me. I will soon be coming up with another tutorial that you will love to go through. Till then, stay tuned!

Switching From Windows to Nix or a Newbie to Linux – 20 Useful Commands for Linux Newbies

So you are planning to switch from Windows to Linux, or have just switched? Oops, what am I asking! For what other reason would you be here? When I was new to Linux, commands and the terminal really scared me; I worried about how many commands I would have to remember and memorise to become fully functional with Linux. No doubt online documentation, books, man pages and the user community helped me a lot, but I strongly believed there should be an article explaining the commands in easy-to-learn and easy-to-understand language. This motivated me to master Linux and to make it easy to use. This article is a step towards that.

20 Linux Commands for Newbies

1. Command: ls

The “ls” command (list directory contents) lists the files and folders in the directory from which it is run.

root@tecmint:~# ls

Android-Games Music

Pictures Public

Desktop Tecmint.com

Documents TecMint-Sync

Downloads Templates

The command “ls -l” lists the contents of the folder in long-listing format.

root@tecmint:~# ls -l

total 40588

drwxrwxr-x 2 ravisaive ravisaive 4096 May 8 01:06 Android Games

drwxr-xr-x 2 ravisaive ravisaive 4096 May 15 10:50 Desktop

drwxr-xr-x 2 ravisaive ravisaive 4096 May 16 16:45 Documents

drwxr-xr-x 6 ravisaive ravisaive 4096 May 16 14:34 Downloads

drwxr-xr-x 2 ravisaive ravisaive 4096 Apr 30 20:50 Music

drwxr-xr-x 2 ravisaive ravisaive 4096 May 9 17:54 Pictures

drwxrwxr-x 5 ravisaive ravisaive 4096 May 3 18:44 Tecmint.com

drwxr-xr-x 2 ravisaive ravisaive 4096 Apr 30 20:50 Templates

The command “ls -a” lists the contents of the folder, including hidden files whose names start with ‘.’.

root@tecmint:~# ls -a

. .gnupg .dbus .goutputstream-PI5VVW .mission-control

.adobe deja-dup .grsync .mozilla .themes

.gstreamer-0.10 .mtpaint .thumbnails .gtk-bookmarks .thunderbird

.HotShots .mysql_history .htaccess .apport-ignore.xml .ICEauthority

.profile .bash_history .icons .bash_logout .fbmessenger

.jedit .pulse .bashrc .liferea_1.8 .pulse-cookie

.Xauthority .gconf .local .Xauthority.HGHVWW .cache

.gftp .macromedia .remmina .cinnamon .gimp-2.8

.ssh .xsession-errors .compiz .gnome teamviewer_linux.deb

.xsession-errors.old .config .gnome2 .zoncolor

Note: In Linux, a file whose name starts with ‘.’ is hidden. In Linux, everything (file, folder, device, command) is treated as a file. The fields in the output of ls -l are:

- d (stands for directory).

- rwxr-xr-x is the file permission of the file/folder for owner, group and world.

- The 1st ravisaive in the above example means that file is owned by user ravisaive.

- The 2nd ravisaive in the above example means file belongs to user group ravisaive.

- 4096 means file size is 4096 Bytes.

- May 8 01:06 is the date and time of last modification.

- And at the end is the name of the File/Folder.

For more “ls” command examples read 15 ‘ls’ Command Examples in Linux.
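The difference between the plain and the hidden-file listings is easy to try without touching your real files. The following is a minimal sketch that assumes a throwaway directory created with mktemp; the names visible.txt, .hidden and docs are made up for illustration.

```shell
# Work in a throwaway directory so nothing in your home folder is touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

touch visible.txt .hidden     # one normal file and one hidden (dot) file
mkdir docs                    # one directory

plain=$(ls)                   # hidden entries are left out
with_hidden=$(ls -A)          # like -a, but without the '.' and '..' entries
type_char=$(ls -ld docs | cut -c1)   # first character of the long listing

echo "$plain"
echo "$with_hidden"
echo "$type_char"             # 'd' marks a directory
```

The -d option used in the last listing tells ls to describe the directory itself rather than its contents.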

2. Command: lsblk

The “lsblk” command (list block devices) prints block devices by their assigned name (RAM disks are not shown) on standard output in a tree-like fashion.

root@tecmint:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 232.9G 0 disk

├─sda1 8:1 0 46.6G 0 part /

├─sda2 8:2 0 1K 0 part

├─sda5 8:5 0 190M 0 part /boot

├─sda6 8:6 0 3.7G 0 part [SWAP]

├─sda7 8:7 0 93.1G 0 part /data

└─sda8 8:8 0 89.2G 0 part /personal

sr0 11:0 1 1024M 0 rom

The “lsblk -l” command lists block devices in ‘list’ format (not tree-like).

root@tecmint:~# lsblk -l

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 232.9G 0 disk

sda1 8:1 0 46.6G 0 part /

sda2 8:2 0 1K 0 part

sda5 8:5 0 190M 0 part /boot

sda6 8:6 0 3.7G 0 part [SWAP]

sda7 8:7 0 93.1G 0 part /data

sda8 8:8 0 89.2G 0 part /personal

sr0 11:0 1 1024M 0 rom

Note: lsblk is a very useful and easy way to find the name of a new USB device you have just plugged in, especially when you have to deal with disks/blocks in the terminal.

3. Command: md5sum

The “md5sum” command computes and checks MD5 message digests. An MD5 checksum (commonly called a hash) is used to verify the integrity of files that may have changed as a result of a faulty file transfer, a disk error or non-malicious interference.

root@tecmint:~# md5sum teamviewer_linux.deb

47790ed345a7b7970fc1f2ac50c97002 teamviewer_linux.deb

Note: The user can match the generated md5sum with the one provided officially. Md5sum is considered less secure than sha1sum, which we will discuss later.
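Matching checksums by eye is error-prone, so md5sum can do the comparison itself with its -c option. Below is a small sketch under stated assumptions: it works in a temporary directory, and sample.txt stands in for a downloaded package together with the checksum file a publisher would normally provide.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

printf 'hello tecmint\n' > sample.txt
md5sum sample.txt > sample.txt.md5      # plays the role of the official checksum file

# -c re-computes the hash and compares it with the recorded one
if md5sum -c sample.txt.md5 >/dev/null 2>&1; then
    result_ok="match"
else
    result_ok="mismatch"
fi

# Simulate a corrupted transfer by changing the file, then check again
printf 'tampered\n' >> sample.txt
if md5sum -c sample.txt.md5 >/dev/null 2>&1; then
    result_bad="match"
else
    result_bad="mismatch"
fi

echo "$result_ok $result_bad"
```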

4. Command: dd

The “dd” command (convert and copy a file) can be used to convert and copy a file. Most of the time it is used to copy an ISO file (or any other file) to a USB device (or any other location), and thus to make a bootable USB stick.

root@tecmint:~# dd if=/home/user/Downloads/debian.iso of=/dev/sdb bs=512M; sync

Note: In the above example the USB device is assumed to be sdb. Write to the whole device (sdb) and not to a partition such as sdb1, otherwise the stick will generally not boot. Verify the name with lsblk first, or you may overwrite your disk and OS. Use the device name very cautiously!

The dd command takes from a few seconds to several minutes to run, depending on the size and type of the file and on the read and write speed of the USB stick.

5. Command: uname

The “uname” command (Unix name) prints detailed information about the machine name, operating system and kernel.

root@tecmint:~# uname -a

Linux tecmint 3.8.0-19-generic #30-Ubuntu SMP Wed May 1 16:36:13 UTC 2013 i686 i686 i686 GNU/Linux

Note: uname on its own shows the kernel name; uname -a outputs detailed information. The fields in the above output of uname -a are:

- “Linux“: The machine’s kernel name.

- “tecmint“: The machine’s node name.

- “3.8.0-19-generic“: The kernel release.

- “#30-Ubuntu SMP Wed May 1 16:36:13 UTC 2013“: The kernel version (build number and build date).

- “i686“: The architecture of the processor.

- “GNU/Linux“: The operating system name.

6. Command: history

The “history” command prints the list of commands previously executed in the terminal.

root@tecmint:~# history

1 sudo add-apt-repository ppa:tualatrix/ppa

2 sudo apt-get update

3 sudo apt-get install ubuntu-tweak

4 sudo add-apt-repository ppa:diesch/testing

5 sudo apt-get update

6 sudo apt-get install indicator-privacy

7 sudo add-apt-repository ppa:atareao/atareao

8 sudo apt-get update

9 sudo apt-get install my-weather-indicator

10 pwd

11 cd && sudo cp -r unity/6 /usr/share/unity/

12 cd /usr/share/unity/icons/

13 cd /usr/share/unity

Note: Press “Ctrl + R” and start typing to search through already executed commands; the matching command is completed for you.

(reverse-i-search)`if': ifconfig

7. Command: sudo

The “sudo” (super user do) command allows a permitted user to execute a command as the superuser or another user, as specified by the security policy in the sudoers list.

root@tecmint:~# sudo add-apt-repository ppa:tualatrix/ppa

Note: sudo allows a user to borrow superuser privileges, while the similar command ‘su’ lets a user actually log in as the superuser. sudo is safer than su.

It is not advisable to use sudo or su for normal day-to-day work, as an accidental mistake can result in serious damage. That is why a very popular saying in the Linux community is:

“To err is human, but to really foul up everything, you need root password.”

8. Command: mkdir

The “mkdir” (make directory) command creates a new directory at the given path. However, if the directory already exists, it fails with an error message saying that the directory cannot be created because the file exists.

root@tecmint:~# mkdir tecmint

Note: A directory can only be created inside a folder in which the user has write permission. Running the command a second time prints:

mkdir: cannot create directory ‘tecmint’: File exists

(Don’t be confused by “File” in the above output; remember what I said at the beginning: in Linux every file, folder, drive, command and script is treated as a file.)
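In scripts, the "File exists" failure is usually handled either by checking the exit status or by using mkdir's -p option, which creates missing parent directories and stays silent when the directory is already there. A minimal sketch, assuming a temporary working directory and made-up directory names:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

mkdir tecmint                      # first call succeeds

# Second call fails because the directory already exists
if mkdir tecmint 2>/dev/null; then
    second="created"
else
    second="exists"
fi

# -p creates the whole path and does not complain about existing parts
mkdir -p tecmint/a/b
mkdir -p tecmint/a/b
p_status=$?

echo "$second $p_status"
```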

9. Command: touch

The “touch” command updates the access and modification times of each file to the current time. touch creates the file only if it doesn’t exist; if the file already exists, it updates the timestamps but not the contents of the file.

root@tecmint:~# touch tecmintfile

Note: touch can create a file only in a directory on which the user has write permission, and only if the file doesn’t already exist there.

10. Command: chmod

The Linux “chmod” command stands for change file mode bits. chmod changes the file mode (permissions) of each given file, folder, script, etc. according to the mode asked for.

There are 3 types of permission on a file (or a folder, or anything else, but to keep things simple we will talk about files).

Read (r)=4

Write(w)=2

Execute(x)=1

So if you want to give only read permission on a file, it is assigned a value of ‘4’; for write permission only, a value of ‘2’; and for execute permission only, a value of ‘1’. For read and write permission, 4+2 = ‘6’ is given, and so on.

Now permissions need to be set for 3 kinds of users: first the owner, then the user group and finally the world.

rwxr-x--x abc.sh

Here the owner’s permission is rwx (read, write and execute),

that of the user group to which the file belongs is r-x (read and execute only, no write permission), and

that of the world is --x (execute only).

To change its permissions and give read, write and execute permission to owner, group and world:

root@tecmint:~# chmod 777 abc.sh

Only read and write permission to all three:

root@tecmint:~# chmod 666 abc.sh

Read, write and execute to the owner and only execute to group and world:

root@tecmint:~# chmod 711 abc.sh

Note: This is one of the most important commands for sysadmins and users alike. In a multi-user environment or on a server this command comes to the rescue; setting a wrong permission will either make a file inaccessible or give someone unauthorized access.
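To see how the numeric values map onto the rwx letters, you can set a mode and read it back. The sketch below assumes GNU stat (its -c option, with %a for the octal mode and %A for the letter form) and uses an empty abc.sh in a temporary directory as a stand-in file.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
touch abc.sh

chmod 711 abc.sh                        # owner 4+2+1, group 1, world 1
mode_711=$(stat -c '%a %A' abc.sh)

chmod 640 abc.sh                        # owner 4+2, group 4, world 0
mode_640=$(stat -c '%a %A' abc.sh)

chmod u=rwx,g=rx,o=x abc.sh             # symbolic form of 751
mode_sym=$(stat -c '%a' abc.sh)

echo "$mode_711"
echo "$mode_640"
echo "$mode_sym"
```

The symbolic form (u for owner, g for group, o for others) does the same job as the digits and can be easier to read in scripts.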

11. Command: chown

The Linux “chown” command stands for change file owner and group. Every file belongs to an owner and to a group of users. Run ‘ls -l’ in your directory and you will see something like this.

root@tecmint:~# ls -l

drwxr-xr-x 3 server root 4096 May 10 11:14 Binary

drwxr-xr-x 2 server server 4096 May 13 09:42 Desktop

Here the directory “Binary” is owned by user “server” and belongs to user group “root”, whereas the directory “Desktop” is owned by user “server” and belongs to user group “server”.

The “chown” command is used to change file ownership and is thus useful for managing files and making them available to authorised users and user groups only.

root@tecmint:~# chown server:server Binary

drwxr-xr-x 3 server server 4096 May 10 11:14 Binary

drwxr-xr-x 2 server server 4096 May 13 09:42 Desktop

Note: “chown” changes the user and group ownership of each given FILE to NEW-OWNER or to the user and group of an existing reference file.

12. Command: apt

The Debian-based “apt” command stands for Advanced Package Tool. Apt is an advanced package manager for Debian-based systems (Ubuntu, Kubuntu, etc.) that automatically and intelligently searches for, installs and updates packages and resolves their dependencies on a GNU/Linux system from the command line.

root@tecmint:~# apt-get install mplayer

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following package was automatically installed and is no longer required:

java-wrappers

Use 'apt-get autoremove' to remove it.

The following extra packages will be installed:

esound-common libaudiofile1 libesd0 libopenal-data libopenal1 libsvga1 libvdpau1 libxvidcore4

Suggested packages:

pulseaudio-esound-compat libroar-compat2 nvidia-vdpau-driver vdpau-driver mplayer-doc netselect fping

The following NEW packages will be installed:

esound-common libaudiofile1 libesd0 libopenal-data libopenal1 libsvga1 libvdpau1 libxvidcore4 mplayer

0 upgraded, 9 newly installed, 0 to remove and 8 not upgraded.

Need to get 3,567 kB of archives.

After this operation, 7,772 kB of additional disk space will be used.

Do you want to continue [Y/n]? y

root@tecmint:~# apt-get update

Hit http://ppa.launchpad.net raring Release.gpg

Hit http://ppa.launchpad.net raring Release.gpg

Hit http://ppa.launchpad.net raring Release.gpg

Hit http://ppa.launchpad.net raring Release.gpg

Get:1 http://security.ubuntu.com raring-security Release.gpg [933 B]

Hit http://in.archive.ubuntu.com raring Release.gpg

Hit http://ppa.launchpad.net raring Release.gpg

Get:2 http://security.ubuntu.com raring-security Release [40.8 kB]

Ign http://ppa.launchpad.net raring Release.gpg

Get:3 http://in.archive.ubuntu.com raring-updates Release.gpg [933 B]

Hit http://ppa.launchpad.net raring Release.gpg

Hit http://in.archive.ubuntu.com raring-backports Release.gpg

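Note: The above commands result in system-wide changes and hence require root privileges (check for ‘#‘ and not ‘$’ as the prompt). Apt is often considered more advanced and intelligent than the yum command.

apt-get install installs the named package (here mplayer) together with its dependencies. apt-get update refreshes the package lists from the repositories; upgrading the already installed packages to their newest versions is done by apt-get upgrade. As the name suggests, apt-cache search looks through the package cache, e.g. for packages whose name or description contains mplayer.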

Read more about apt-get and apt-cache commands at 25 APT-GET and APT-CACHE Commands

13. Command: tar

The “tar” (tape archive) command is useful for creating archives in a number of file formats and for extracting them.

root@tecmint:~# tar -zxvf abc.tar.gz (Remember 'z' for .tar.gz)

root@tecmint:~# tar -jxvf abc.tar.bz2 (Remember 'j' for .tar.bz2)

root@tecmint:~# tar -zcvf archive.tar.gz /path/to/folder/abc (Use 'j' instead of 'z' to create a .tar.bz2)

Note: ‘tar.gz‘ means the archive is gzipped. ‘tar.bz2‘ is compressed with bzip2, which uses a better but slower compression method.
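A full round trip (create, list, extract) shows how the option letters combine: c creates, t lists, x extracts, z selects gzip and f names the archive file. This is a minimal sketch that assumes a temporary directory and a made-up folder abc with two small files.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

mkdir abc
echo "one" > abc/1.txt
echo "two" > abc/2.txt

tar -zcf abc.tar.gz abc                  # create a gzipped archive of the folder
listing=$(tar -ztf abc.tar.gz)           # list the contents without extracting

mkdir restore
tar -zxf abc.tar.gz -C restore           # extract into another directory (-C)
restored=$(cat restore/abc/2.txt)

echo "$listing"
echo "$restored"
```

Listing an archive with t before extracting it is a cheap way to check where the files will land.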

Read more about “tar command” examples at 18 Tar Command Examples

14. Command: cal

The “cal” (calendar) command displays the calendar of the present month, or of any other month of any year, past or future.

root@tecmint:~# cal

May 2013

Su Mo Tu We Th Fr Sa

1 2 3 4

5 6 7 8 9 10 11

12 13 14 15 16 17 18

19 20 21 22 23 24 25

26 27 28 29 30 31

Show the calendar for February 1835, a month that has already passed.

root@tecmint:~# cal 02 1835

February 1835

Su Mo Tu We Th Fr Sa

1 2 3 4 5 6 7

8 9 10 11 12 13 14

15 16 17 18 19 20 21

22 23 24 25 26 27 28

Show the calendar for July 2145, a month that is still to come.

root@tecmint:~# cal 07 2145

July 2145

Su Mo Tu We Th Fr Sa

1 2 3

4 5 6 7 8 9 10

11 12 13 14 15 16 17

18 19 20 21 22 23 24

25 26 27 28 29 30 31

Note: You need not turn the calendar back 50 years, nor make complex mathematical calculations, to know on what day you were born or on which day your coming birthday will fall.

15. Command: date

The “date” command prints the current date and time on standard output, and can also be used to set them.

root@tecmint:~# date

Fri May 17 14:13:29 IST 2013

root@tecmint:~# date --set='14 may 2013 13:57'

Tue May 14 13:57:00 IST 2013

Note: This command is very useful in scripting, especially in time- and date-based scripts. Moreover, changing the date and time from the terminal will make you feel like a GEEK! (Obviously you need to be root to set the date, as it is a system-wide change.)
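In scripts, date is mostly used with a format string rather than for setting the clock. The sketch below needs no root access; it assumes GNU date for the -d option (which parses a given date instead of using the current one), and the backup file name is purely illustrative.

```shell
today=$(date +%Y-%m-%d)                 # e.g. 2013-05-17
stamp=$(date +%Y%m%d-%H%M%S)            # compact timestamp for file names
backup_name="backup-$stamp.tar.gz"      # hypothetical file name

# Ask which weekday a given date fell on (LC_ALL=C forces English names)
weekday=$(LC_ALL=C date -d '2013-05-17' +%A)

echo "$today"
echo "$backup_name"
echo "$weekday"
```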

16. Command: cat

The “cat” command stands for concatenate. It joins two or more plain files and/or prints the contents of a file on standard output.

root@tecmint:~# cat a.txt b.txt c.txt d.txt >> abcd.txt

root@tecmint:~# cat abcd.txt

....

contents of file abcd

...

Note: “>” and “>>” are redirection operators. They send the output to a file instead of standard output. “>” creates the file, or overwrites it if it already exists, while “>>” appends the output to the end of the file. Hence, for safety, use “>>” whenever the existing contents of the file must be kept.
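The difference between the two redirections is easiest to see by counting lines after each step. A minimal sketch, assuming a temporary directory and two made-up one-line files:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

echo "first"  > a.txt
echo "second" > b.txt

cat a.txt b.txt > ab.txt        # '>' creates ab.txt with two lines
cat a.txt >> ab.txt             # '>>' appends a third line
lines_after_append=$(wc -l < ab.txt)

cat b.txt > ab.txt              # '>' again: the old contents are replaced
final=$(cat ab.txt)

echo "$lines_after_append"
echo "$final"
```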

Before proceeding further, I must let you know about wildcards (you may know the term “wildcard entry” from television shows). Wildcards are a shell feature that makes the command line much more powerful than any GUI file manager. You see, if you want to select a big group of files in a graphical file manager, you usually have to select them with your mouse. This may seem simple, but in some cases it can be very frustrating.

For example, suppose you have a directory with a huge number of files and subdirectories of all kinds, and you decide to move all the HTML files that have the word “Linux” somewhere in the middle of their names from that big directory into another directory. What’s a simple way to do this? If the directory contains a huge number of differently named HTML files, your task is anything but simple!

In the Linux CLI that task is just as simple to perform as moving a single HTML file, and it is so easy because of the shell wildcards. These are special characters that allow you to select file names that match certain patterns of characters. This helps you select even a big group of files by typing just a few characters, and in most cases it is easier than selecting the files with a mouse.

Here’s a list of the most commonly used wildcards :

| Wildcard | Matches |
|---|---|
| * | zero or more characters |
| ? | exactly one character |
| [abcde] | exactly one character listed |
| [a-e] | exactly one character in the given range |
| [!abcde] | any character that is not listed |
| [!a-e] | any character that is not in the given range |
| {debian,linux} | exactly one entire word in the options given |

‘!’ is the negation symbol: the pattern matches any character other than those listed after the ‘!’.
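The HTML example described above can be tried in a few lines. This is a sketch under stated assumptions: a temporary directory, invented file names, and a shell such as bash (the brace form in the last line is expanded by bash but printed literally by some simpler shells).

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

touch intro-linux-1.html my-linux-notes.html windows.html linux.txt a1 b1 f1
mkdir dest

mv *linux*.html dest/            # only HTML files with "linux" in the name
moved=$(ls dest | wc -l)

one_char=$(echo ?1)              # any single character followed by 1
in_range=$(echo [a-e]1)          # first character between a and e
negated=$(echo [!a-e]1)          # first character NOT between a and e

echo "$moved"
echo "$one_char"
echo "$in_range"
echo "$negated"
echo {debian,linux}.iso          # brace expansion (bash): two words, no files needed
```

Using echo with a pattern is a safe way to preview what a wildcard will match before handing it to mv or rm.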

Read more examples of Linux “cat command” at 13 Cat Command Examples in Linux

17. Command: cp

The “cp” command stands for copy; it copies a file from one location to another.

root@tecmint:~# cp /home/user/Downloads/abc.tar.gz /home/user/Desktop (Returns 0 on success)

Note: cp is one of the most commonly used commands in shell scripting, and it can be used with wildcard characters (described in the block above) for customised file copying.

18. Command: mv

The “mv” command moves a file from one location to another location.

root@tecmint:~# mv /home/user/Downloads/abc.tar.gz /home/user/Desktop (Returns 0 on success)

Note: The mv command can be used with wildcard characters. mv should be used with caution, as moving system files or files you are not authorised to handle may lead to security problems or even break the system.
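The "returns 0 on success" remark refers to the exit status, which the shell keeps in the special variable $? after each command. A minimal sketch, assuming a temporary directory with made-up Downloads, Desktop and Backup folders:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir Downloads Desktop Backup
echo "data" > Downloads/abc.tar.gz

cp Downloads/abc.tar.gz Desktop/     # the source stays in place
cp_status=$?

mv Downloads/abc.tar.gz Backup/      # the source is gone afterwards
mv_status=$?

cp Downloads/missing.txt Desktop/ 2>/dev/null   # no such file: non-zero status
fail_status=$?

echo "$cp_status $mv_status $fail_status"
```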

19. Command: pwd

The command “pwd” (print working directory) prints the full path name of the current working directory.

root@tecmint:~# pwd

/home/user/Desktop

Note: This command won’t be used much in scripting, but it is an absolute life saver for a newbie who gets lost in the terminal in their early days with *nix. (Linux and other Unix-like systems are often informally referred to as *nix.)

20. Command: cd

Finally, the frequently used “cd” command (change directory) changes the working directory, so that you can execute, copy, move, write, read, etc. from the terminal itself.

root@tecmint:~# cd /home/user/Desktop

root@tecmint:/home/user/Desktop# pwd

/home/user/Desktop

Note: cd comes to the rescue when switching between directories in the terminal. “cd ~” changes the working directory to the user’s home directory and is very useful if a user finds himself lost in the terminal. “cd ..” changes the working directory to the parent of the current working directory. Note that commands are case sensitive: it is cd, not Cd.
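A short navigation round trip shows cd and pwd working together. The sketch assumes a temporary directory tree with the invented names projects and demo; "cd -", which jumps back to the previous directory, is a standard shell shortcut not covered above.

```shell
workdir=$(mktemp -d)
mkdir -p "$workdir/projects/demo"

cd "$workdir/projects/demo" || exit 1
cd ..                               # up to the parent directory
parent=$(basename "$(pwd)")

cd - > /dev/null                    # back to the previous directory
back=$(basename "$(pwd)")

echo "$parent"
echo "$back"
```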

These commands will surely make you comfortable with Linux. But it’s not the end. Very soon I will be coming up with other commands that will be useful for the ‘Middle Level User‘, i.e., you! No, don’t be surprised: once you get used to these commands, you will notice your promotion from newbie to middle-level user. In the next article, I will cover commands like ‘kill‘, ‘ps‘ and ‘grep‘. Wait for the article; I don’t want to spoil your interest.

20 Advanced Commands for Middle Level Linux Users

You might have found the first article very useful; this article is an extension of 20 Useful Commands for Linux Newbies. The first article was intended for newbies, while this one is for middle-level and advanced users. Here you will find how to customise a search, how to see the running processes and kill them, how to make your Linux terminal more productive, and how to compile C, C++ and Java programs on *nix.

20 Linux Advanced & Expert Commands

21. Command: find

The ‘find‘ command searches for files in the given directory hierarchy, starting at the top directory and descending into its sub-directories.

root@tecmint:~# find -name "*.sh"

./Desktop/load.sh

./Desktop/test.sh

./Desktop/shutdown.sh

./Binary/firefox/run-mozilla.sh

./Downloads/kdewebdev-3.5.8/quanta/scripts/externalpreview.sh

./Downloads/kdewebdev-3.5.8/admin/doxygen.sh

./Downloads/kdewebdev-3.5.8/admin/cvs.sh

./Downloads/kdewebdev-3.5.8/admin/ltmain.sh

./Downloads/wheezy-nv-install.sh

Note: The ‘-name‘ option makes the search case sensitive. You can use the ‘-iname‘ option to find something regardless of case. (* is a wildcard and matches all files having the extension ‘.sh‘; you can use a file name or part of a file name to customise the output. Quote the pattern so that the shell passes it to find unexpanded.)

root@tecmint:~# find -iname "*.SH" (or: find -iname "*.Sh" / find -iname "*.sH")

./Desktop/load.sh

./Desktop/test.sh

./Desktop/shutdown.sh

./Binary/firefox/run-mozilla.sh

./Downloads/kdewebdev-3.5.8/quanta/scripts/externalpreview.sh

./Downloads/kdewebdev-3.5.8/admin/doxygen.sh

./Downloads/kdewebdev-3.5.8/admin/cvs.sh

./Downloads/kdewebdev-3.5.8/admin/ltmain.sh

./Downloads/wheezy-nv-install.sh

root@tecmint:/# find -name "*.tar.gz"

./var/www/modules/update/tests/aaa_update_test.tar.gz

./var/cache/flashplugin-nonfree/install_flash_player_11_linux.i386.tar.gz

./home/server/Downloads/drupal-7.22.tar.gz

./home/server/Downloads/smtp-7.x-1.0.tar.gz

./home/server/Downloads/noreqnewpass-7.x-1.2.tar.gz

./usr/share/gettext/archive.git.tar.gz

./usr/share/doc/apg/php.tar.gz

./usr/share/doc/festival/examples/speech_pm_1.0.tar.gz

./usr/share/doc/argyll/examples/spyder2.tar.gz

./usr/share/usb_modeswitch/configPack.tar.gz

Note: Run from the root directory (/), the above command searches for all files having the extension ‘tar.gz‘ in the root directory and all its sub-directories, including mounted devices.

Read more examples of Linux ‘find‘ command at 35 Find Command Examples in Linux
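The case-sensitivity difference between -name and -iname, and the effect of quoting the pattern, can be checked on a tiny directory tree. This sketch assumes a temporary directory and invented file names; -type f, which restricts matches to regular files, is a standard find test not covered above.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir -p Desktop Downloads/admin
touch Desktop/load.sh Downloads/admin/CVS.SH notes.txt

by_name=$(find . -name "*.sh")               # case sensitive: lower-case .sh only
by_iname=$(find . -iname "*.sh" | wc -l)     # ignores case: matches .SH too
files_only=$(find . -type f -iname "*.sh" | wc -l)   # regular files only

echo "$by_name"
echo "$by_iname"
echo "$files_only"
```

Without the quotes, the shell would try to expand *.sh itself against the current directory before find ever sees it, which can silently change the search.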

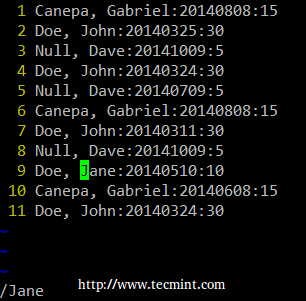

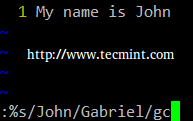

22. Command: grep

The ‘grep‘ command searches the given file for lines containing a match to the given strings or words. For example, search ‘/etc/passwd‘ for the ‘tecmint‘ user.

root@tecmint:~# grep tecmint /etc/passwd

tecmint:x:1000:1000:Tecmint,,,:/home/tecmint:/bin/bash

Ignore letter case, matching every upper- and lower-case combination, with the ‘-i‘ option.

root@tecmint:~# grep -i TECMINT /etc/passwd

tecmint:x:1000:1000:Tecmint,,,:/home/tecmint:/bin/bash

Search recursively (-r), i.e. read all files under each directory, for the string “127.0.0.1“.

root@tecmint:~# grep -r "127.0.0.1" /etc/

/etc/vlc/lua/http/.hosts:127.0.0.1

/etc/speech-dispatcher/modules/ivona.conf:#IvonaServerHost "127.0.0.1"

/etc/mysql/my.cnf:bind-address = 127.0.0.1

/etc/apache2/mods-available/status.conf: Allow from 127.0.0.1 ::1

/etc/apache2/mods-available/ldap.conf: Allow from 127.0.0.1 ::1

/etc/apache2/mods-available/info.conf: Allow from 127.0.0.1 ::1

/etc/apache2/mods-available/proxy_balancer.conf:# Allow from 127.0.0.1 ::1

/etc/security/access.conf:#+ : root : 127.0.0.1

/etc/dhcp/dhclient.conf:#prepend domain-name-servers 127.0.0.1;

/etc/dhcp/dhclient.conf:# option domain-name-servers 127.0.0.1;

/etc/init/network-interface.conf: ifconfig lo 127.0.0.1 up || true

/etc/java-6-openjdk/net.properties:# localhost & 127.0.0.1).

/etc/java-6-openjdk/net.properties:# http.nonProxyHosts=localhost|127.0.0.1

/etc/java-6-openjdk/net.properties:# localhost & 127.0.0.1).

/etc/java-6-openjdk/net.properties:# ftp.nonProxyHosts=localhost|127.0.0.1

/etc/hosts:127.0.0.1 localhost

Note: You can use the following options along with grep.

- -w to match whole words only (egrep -w ‘word1|word2‘ /path/to/file).

- -c for a count of the matching lines (grep -c ‘word‘ /path/to/file).

- --color for coloured output (grep --color server /etc/passwd).
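The options can be tried safely on a copy-like sample instead of the real /etc/passwd. This is a sketch under stated assumptions: passwd.sample and its three entries are invented, and grep -E is used as the modern spelling of egrep.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# A small stand-in for /etc/passwd (hypothetical entries)
cat > passwd.sample <<'EOF'
root:x:0:0:root:/root:/bin/bash
tecmint:x:1000:1000:Tecmint,,,:/home/tecmint:/bin/bash
server:x:1001:1001::/home/server:/bin/sh
EOF

match=$(grep -i TECMINT passwd.sample)             # -i ignores case
bash_users=$(grep -c 'bash$' passwd.sample)        # -c counts matching lines
whole_word=$(grep -wc 'root' passwd.sample)        # -w matches whole words only
either=$(grep -E -c '^(root|server):' passwd.sample)   # alternation needs -E

echo "$match"
echo "$bash_users $whole_word $either"
```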

23. Command: man

The ‘man‘ command is the system’s manual pager. It provides on-system documentation for a command, covering all its options and their usage. Almost every command comes with a corresponding manual page. For example,

root@tecmint:~# man man

MAN(1) Manual pager utils MAN(1)

NAME

man - an interface to the on-line reference manuals

SYNOPSIS

man [-C file] [-d] [-D] [--warnings[=warnings]] [-R encoding] [-L locale] [-m system[,...]] [-M path] [-S list] [-e extension] [-i|-I]

[--regex|--wildcard] [--names-only] [-a] [-u] [--no-subpages] [-P pager] [-r prompt] [-7] [-E encoding] [--no-hyphenation] [--no-justification] [-p

string] [-t] [-T[device]] [-H[browser]] [-X[dpi]] [-Z] [[section] page ...] ...

man -k [apropos options] regexp ...

man -K [-w|-W] [-S list] [-i|-I] [--regex] [section] term ...

man -f [whatis options] page ...

man -l [-C file] [-d] [-D] [--warnings[=warnings]] [-R encoding] [-L locale] [-P pager] [-r prompt] [-7] [-E encoding] [-p string] [-t] [-T[device]]

[-H[browser]] [-X[dpi]] [-Z] file ...

man -w|-W [-C file] [-d] [-D] page ...

man -c [-C file] [-d] [-D] page ...

man [-hV]

The above is the manual page for man itself; similarly, ‘man cat‘ shows the manual page for the cat command and ‘man ls‘ the one for the ls command.

Note: Man pages are intended for command reference and learning.

24. Command: ps

The ‘ps‘ (process status) command reports the status of running processes, each with a unique ID called the PID.

root@tecmint:~# ps

PID TTY TIME CMD

4170 pts/1 00:00:00 bash

9628 pts/1 00:00:00 ps

To list the status of all processes along with their PIDs, use the ‘-A‘ option.

root@tecmint:~# ps -A

PID TTY TIME CMD

1 ? 00:00:01 init

2 ? 00:00:00 kthreadd

3 ? 00:00:01 ksoftirqd/0

5 ? 00:00:00 kworker/0:0H

7 ? 00:00:00 kworker/u:0H

8 ? 00:00:00 migration/0

9 ? 00:00:00 rcu_bh

....

Note: This command is very useful when you want to know which processes are running, or when you need a PID, for example to kill a process. You can use it with the ‘grep‘ command to get customised output. For example,

root@tecmint:~# ps -A | grep -i ssh

1500 ? 00:09:58 sshd

4317 ? 00:00:00 sshd

Here ‘ps‘ is piped into the ‘grep‘ command to filter the output down to what we need.

25. Command: kill

OK, you might have guessed what this command is for from its name. It is used to kill a process that is no longer needed or is not responding. It is a very useful command, rather a very, very useful command. You might be familiar with frequent Windows restarts: a misbehaving process often can’t be killed there, and if it is killed, Windows may need a restart for the changes to take effect. In the world of Linux there is usually no such need; here you can kill a process and start it again without restarting the whole system.

You need a process’s PID (obtained with ps) to kill it.

Let’s suppose you want to kill the program ‘apache2‘, which might not be responding. Run ‘ps -A‘ along with the grep command.

root@tecmint:~# ps -A | grep -i apache2

1285 ? 00:00:00 apache2

Find the process ‘apache2‘, note its PID and kill it. For example, in my case the PID of ‘apache2‘ is ‘1285‘.

root@tecmint:~# kill 1285 (to kill the process apache2)

Note: Every time you restart a process or boot the system, each process gets a new PID. You can see the currently running processes and their PIDs with the ‘ps‘ command.

Another way to kill the same process is:

root@tecmint:~# pkill apache2

Note: ‘kill‘ needs a job ID or process ID to send a signal to, whereas ‘pkill‘ lets you select processes by name pattern, owner and so on.
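
The find-the-PID-then-signal sequence can be tried safely on a throwaway process. The sketch below is only an illustration: it starts a ‘sleep‘ in the background as a stand-in for a stuck program, takes the PID from the shell's ‘$!‘ variable instead of ‘ps‘, and sends the default TERM signal.

```shell
# Start a harmless long-running process to practise on
# (an assumed stand-in for a program such as apache2).
sleep 300 &
pid=$!                      # $! holds the PID of the last background job
echo "started sleep with PID $pid"

# kill sends SIGTERM (signal 15) unless another signal is named.
kill "$pid"

# wait collects the exit status of the job; in bash and dash a process
# ended by a signal reports 128 + the signal number.
status=0
wait "$pid" || status=$?
echo "exit status: $status"
```

If a process ignores TERM, ‘kill -9‘ (KILL) cannot be ignored, but it gives the program no chance to clean up, so it is best kept as a last resort.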

26. Command: whereis

The ‘whereis‘ command locates the binary, source and manual page files of a command. For example, to locate them for the commands ‘ls‘ and ‘kill‘:

root@tecmint:~# whereis ls

ls: /bin/ls /usr/share/man/man1/ls.1.gz

root@tecmint:~# whereis kill

kill: /bin/kill /usr/share/man/man2/kill.2.gz /usr/share/man/man1/kill.1.gz

Note: This is useful when you need to know where a binary is installed, for example before replacing or inspecting it by hand.

27. Command: service

The ‘service‘ command starts, stops or restarts a service. It makes it possible to apply changes to a service without restarting the whole system.

Starting an apache2 server on Ubuntu

root@tecmint:~# service apache2 start

* Starting web server apache2 apache2: Could not reliably determine the server's fully qualified domain name, using 127.0.1.1 for ServerName

httpd (pid 1285) already running [ OK ]

Restarting an apache2 server on Ubuntu

root@tecmint:~# service apache2 restart

* Restarting web server apache2 apache2: Could not reliably determine the server's fully qualified domain name, using 127.0.1.1 for ServerName

... waiting .apache2: Could not reliably determine the server's fully qualified domain name, using 127.0.1.1 for ServerName [ OK ]

Stopping an apache2 server on Ubuntu

root@tecmint:~# service apache2 stop

* Stopping web server apache2 apache2: Could not reliably determine the server's fully qualified domain name, using 127.0.1.1 for ServerName

... waiting [ OK ]

Note: All the service scripts live in ‘/etc/init.d‘, and on some systems the full path has to be given, i.e., instead of running “service apache2 start” you would run “/etc/init.d/apache2 start”.

28. Command: alias

alias is a shell built-in that lets you assign a short name to a long or frequently used command.

I use the ‘ls -l‘ command frequently, and it takes 5 characters including the space. Hence I created the alias ‘l‘ for it.

root@tecmint:~# alias l='ls -l'

Check whether it works.

root@tecmint:~# l

total 36

drwxr-xr-x 3 tecmint tecmint 4096 May 10 11:14 Binary

drwxr-xr-x 3 tecmint tecmint 4096 May 21 11:21 Desktop

drwxr-xr-x 2 tecmint tecmint 4096 May 21 15:23 Documents

drwxr-xr-x 8 tecmint tecmint 4096 May 20 14:56 Downloads

drwxr-xr-x 2 tecmint tecmint 4096 May 7 16:58 Music

drwxr-xr-x 2 tecmint tecmint 4096 May 20 16:17 Pictures

drwxr-xr-x 2 tecmint tecmint 4096 May 7 16:58 Public

drwxr-xr-x 2 tecmint tecmint 4096 May 7 16:58 Templates

drwxr-xr-x 2 tecmint tecmint 4096 May 7 16:58 Videos

To remove alias ‘l‘, use the following ‘unalias‘ command.

root@tecmint:~# unalias l

Check whether ‘l‘ is still an alias.

root@tecmint:~# l

bash: l: command not found

Now for a little fun with this command: alias one important command to a different important command.

alias cd='ls -l' (set alias of ls -l to cd)

alias su='pwd' (set alias of pwd to su)

....

(You can create your own)

....

Now when your friend types ‘cd‘, just think how funny it would be when he gets a directory listing instead of a change of directory. And when he tries ‘su‘, all he gets is the path of the working directory. You can remove the aliases later using the ‘unalias‘ command as explained above.
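
Aliases are meant for interactive use; bash does not expand them inside scripts unless told to. A shell function is one alternative that behaves the same way in both settings. The sketch below defines a function ‘l‘ that mirrors the alias; the scratch directory and the file name are made up for the demonstration.

```shell
# Function equivalent of: alias l='ls -l'
l() {
    ls -l "$@"          # "$@" passes along any arguments, e.g. a directory
}

# Try it on a scratch directory (hypothetical contents).
demo_dir=$(mktemp -d)
touch "$demo_dir/notes.txt"
listing=$(l "$demo_dir")
echo "$listing"

# Function equivalent of: unalias l
unset -f l
```

To keep either an alias or a function across sessions, it would normally go into a startup file such as ‘~/.bashrc‘.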

29. Command: df

Reports the disk usage of file systems. It is useful for users as well as system administrators who need to keep track of disk usage. ‘df‘ gets its figures from the file system itself rather than by walking through directories, so it reports totals per mounted file system.

root@tecmint:~# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda1 47929224 7811908 37675948 18% /

none 4 0 4 0% /sys/fs/cgroup

udev 1005916 4 1005912 1% /dev

tmpfs 202824 816 202008 1% /run

none 5120 0 5120 0% /run/lock

none 1014120 628 1013492 1% /run/shm

none 102400 44 102356 1% /run/user

/dev/sda5 184307 79852 94727 46% /boot

/dev/sda7 95989516 61104 91045676 1% /data

/dev/sda8 91953192 57032 87218528 1% /personal

For more examples of ‘df‘ command, read the article 12 df Command Examples in Linux.

30. Command: du

Estimates file space usage. By default it prints the disk usage of every directory below the current one, recursively.

root@tecmint:~# du

8 ./Daily Pics/wp-polls/images/default_gradient

8 ./Daily Pics/wp-polls/images/default

32 ./Daily Pics/wp-polls/images

8 ./Daily Pics/wp-polls/tinymce/plugins/polls/langs

8 ./Daily Pics/wp-polls/tinymce/plugins/polls/img

28 ./Daily Pics/wp-polls/tinymce/plugins/polls

32 ./Daily Pics/wp-polls/tinymce/plugins

36 ./Daily Pics/wp-polls/tinymce

580 ./Daily Pics/wp-polls

1456 ./Daily Pics

36 ./Plugins/wordpress-author-box

16180 ./Plugins

12 ./May Articles 2013/Xtreme Download Manager

4632 ./May Articles 2013/XCache

Note: ‘df‘ only reports usage statistics on file systems, while ‘du‘, on the other hand, measures directory contents. For more ‘du‘ command examples and usage, read 10 du (Disk Usage) Commands.
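
To make the difference concrete, here is a small sketch that asks both tools about the same directory. The scratch directory and the sample file are assumptions for the demo; ‘-k‘ fixes the unit at 1 KiB blocks and ‘-P‘ keeps each ‘df‘ record on a single line so it is easy to split.

```shell
work=$(mktemp -d)
# Write 200 KiB of zeros so there is something to measure.
head -c 204800 /dev/zero > "$work/sample.bin"

# du walks the directory tree and adds up what the files occupy.
du_kb=$(du -sk "$work" | cut -f1)

# df asks the file system that holds the directory for its overall totals.
# Fields of the data line: device, total, used, available, use%, mount point.
set -- $(df -Pk "$work" | tail -n 1)
fs_total_kb=$2
fs_avail_kb=$4

echo "directory uses ${du_kb} KiB; its file system has ${fs_avail_kb} of ${fs_total_kb} KiB available"
```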

31. Command: rm

The command ‘rm‘ stands for remove. It is used to remove file(s) and directories.

Removing a directory

root@tecmint:~# rm PassportApplicationForm_Main_English_V1.0

rm: cannot remove `PassportApplicationForm_Main_English_V1.0': Is a directory

A directory can’t be removed with plain ‘rm‘; you have to add the ‘-r‘ (recursive) switch. The ‘-f‘ switch used below additionally suppresses prompts and error messages.

root@tecmint:~# rm -rf PassportApplicationForm_Main_English_V1.0

Warning: “rm -rf” is a destructive command if you accidentally run it on the wrong directory. Once you ‘rm -rf‘ a directory, the directory and all the files in it are lost for good. Use it with caution.
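
Because deletion is permanent, it is worth rehearsing on a scratch directory first. This sketch (the directory and file names are invented for the demo) shows plain ‘rm‘ refusing a directory and ‘rm -r‘ removing it; ‘-f‘ is left out on purpose so that rm still reports problems.

```shell
scratch=$(mktemp -d)
mkdir "$scratch/old_forms"
touch "$scratch/old_forms/form.pdf"

# Plain rm refuses to remove a directory and returns a non-zero status.
if rm -- "$scratch/old_forms" 2>/dev/null; then
    plain_rm="removed"
else
    plain_rm="refused"
fi
echo "rm without -r: $plain_rm"

# -r removes the directory and everything below it; "--" guards against
# names that begin with a dash being read as options.
rm -r -- "$scratch/old_forms"
```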

32. Command: echo

echo, as the name suggests, echoes text to the standard output. The shell does not read or interpret what echo prints; in an interactive script, echo simply passes a message to the user through the terminal. It is one of the most commonly used commands in scripting.

root@tecmint:~# echo "Tecmint.com is a very good website"

Tecmint.com is a very good website

Creating a small interactive script

1. Create a file named ‘interactive_shell.sh‘ on the desktop. (The ‘.sh‘ extension is a convention, not a requirement.)

2. Copy and paste the script below into it, exactly as shown.

#!/bin/bash

echo "Please enter your name:"

read name

echo "Welcome to Linux $name"

Next, set execute permission and run the script.

root@tecmint:~# chmod 755 interactive_shell.sh

root@tecmint:~# ./interactive_shell.sh

Please enter your name:

Ravi Saive

Welcome to Linux Ravi Saive

Note: ‘#!/bin/bash‘ tells the system to run the script with bash, and it is always a good idea to include it at the top of a script. ‘read‘ reads a line of input into the given variable.
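
The same logic can also be driven without a keyboard, because ‘read‘ takes its input from standard input. A small sketch, with the function name ‘greet‘ chosen only for illustration:

```shell
#!/bin/bash
# Same logic as the script above, wrapped in a function.
greet() {
    echo "Please enter your name:"
    read -r name                 # -r keeps backslashes in the input literal
    echo "Welcome to Linux $name"
}

# Feed the answer through a pipe instead of typing it.
reply=$(printf 'Ravi Saive\n' | greet)
echo "$reply"
```

This is handy when a script that normally asks questions has to run unattended.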

33. Command: passwd

This important command changes your own password from the terminal. For security reasons, you need to know your current password.

root@tecmint:~# passwd

Changing password for tecmint.

(current) UNIX password: ********

Enter new UNIX password: ********

Retype new UNIX password: ********

Password unchanged [Here the password remains unchanged because the new password was the same as the old one]

Enter new UNIX password: #####

Retype new UNIX password:#####

34. Command: lpr

This command prints the files named on the command line to the named printer.

root@tecmint:~# lpr -P deskjet-4620-series 1-final.pdf

Note: The ‘lpq‘ command lets you view the status of a printer (whether it’s up or not), and the jobs (files) waiting to be printed.

35. Command: cmp

Compares two files of any type and writes the result to the standard output. By default, ‘cmp‘ prints nothing and returns 0 if the files are the same; if they differ, it reports the byte and line number of the first difference.

To provide examples for this command, let’s consider two files:

file1.txt

root@tecmint:~# cat file1.txt

Hi My name is Tecmint

file2.txt

root@tecmint:~# cat file2.txt

Hi My name is tecmint [dot] com

Now, let’s compare the two files and see the output of the command.

root@tecmint:~# cmp file1.txt file2.txt

file1.txt file2.txt differ: byte 15, line 1
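
In scripts, the exit status is usually more useful than the message. The sketch below recreates the two files in a temporary directory (an assumption, to avoid touching real files) and uses ‘-s‘, which silences the output so that only the status is left.

```shell
tmp=$(mktemp -d)
printf 'Hi My name is Tecmint\n' > "$tmp/file1.txt"
printf 'Hi My name is tecmint [dot] com\n' > "$tmp/file2.txt"

# cmp exits with 0 when the files are identical and 1 when they differ.
if cmp -s "$tmp/file1.txt" "$tmp/file2.txt"; then
    result="same"
else
    result="different"
fi
echo "file1.txt vs file2.txt: $result"

# A file compared with itself is always identical.
if cmp -s "$tmp/file1.txt" "$tmp/file1.txt"; then
    self="same"
else
    self="different"
fi
echo "file1.txt vs itself: $self"
```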

36. Command: wget

Wget is a free utility for non-interactive (i.e., can work in background) download of files from the Web. It supports HTTP, HTTPS, FTP protocols and HTTP proxies.

Download ffmpeg-php using wget

root@tecmint:~# wget http://downloads.sourceforge.net/project/ffmpeg-php/ffmpeg-php/0.6.0/ffmpeg-php-0.6.0.tbz2

--2013-05-22 18:54:52-- http://downloads.sourceforge.net/project/ffmpeg-php/ffmpeg-php/0.6.0/ffmpeg-php-0.6.0.tbz2

Resolving downloads.sourceforge.net (downloads.sourceforge.net)... 216.34.181.59

Connecting to downloads.sourceforge.net (downloads.sourceforge.net)|216.34.181.59|:80... connected.

HTTP request sent, awaiting response... 302 Found

Location: http://kaz.dl.sourceforge.net/project/ffmpeg-php/ffmpeg-php/0.6.0/ffmpeg-php-0.6.0.tbz2 [following]

--2013-05-22 18:54:54-- http://kaz.dl.sourceforge.net/project/ffmpeg-php/ffmpeg-php/0.6.0/ffmpeg-php-0.6.0.tbz2

Resolving kaz.dl.sourceforge.net (kaz.dl.sourceforge.net)... 92.46.53.163

Connecting to kaz.dl.sourceforge.net (kaz.dl.sourceforge.net)|92.46.53.163|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 275557 (269K) [application/octet-stream]

Saving to: ‘ffmpeg-php-0.6.0.tbz2’

100%[===========================================================================>] 2,75,557 67.8KB/s in 4.0s

2013-05-22 18:55:00 (67.8 KB/s) - ‘ffmpeg-php-0.6.0.tbz2’ saved [275557/275557]

37. Command: mount

mount is an important command used to attach a filesystem that is not mounted automatically. You need root permission to mount a device.

First run ‘lsblk‘ after plugging in your device, identify it in the list and note down the name assigned to it.

root@tecmint:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 931.5G 0 disk

├─sda1 8:1 0 923.6G 0 part /

├─sda2 8:2 0 1K 0 part

└─sda5 8:5 0 7.9G 0 part [SWAP]

sr0 11:0 1 1024M 0 rom

sdb 8:16 1 3.7G 0 disk

└─sdb1 8:17 1 3.7G 0 part

From this output it is clear that I plugged in a 4 GB pen drive, so ‘sdb1‘ is the filesystem to be mounted. Become root to perform this operation and change to the ‘/mnt‘ directory, the conventional place for temporary mount points. (‘/dev‘ only holds the device files such as ‘/dev/sdb1‘; it is not the place to create mount points.)

root@tecmint:~# su

Password:

root@tecmint:~# cd /mnt

Create a directory with any name, though a relevant one is easier to remember.

root@tecmint:~# mkdir usb

Now mount the filesystem ‘sdb1‘ on the directory ‘usb‘.

root@tecmint:~# mount /dev/sdb1 /mnt/usb

Now you can navigate to /mnt/usb from the terminal or the X Window System and access files in the mounted directory.
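
To confirm that a mount succeeded, the kernel's list of mounted file systems in ‘/proc/mounts‘ can be checked. A minimal sketch; the function name ‘is_mounted‘ is hypothetical, and mount points containing spaces are not handled because the kernel writes them in an escaped form in that file.

```shell
is_mounted() {
    # Each line of /proc/mounts reads: device mount-point type options ...
    # $1 is the directory to look for in the mount-point column.
    while read -r _device mount_point _rest; do
        if [ "$mount_point" = "$1" ]; then
            return 0
        fi
    done < /proc/mounts
    return 1
}

if is_mounted /; then
    echo "/ is mounted"
fi
```

When you are done with the device, ‘umount‘ followed by the mount point detaches it again.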

Time for code developers to see how rich the Linux environment is

38. Command: gcc

gcc is the standard compiler for the ‘C‘ language in the Linux environment. Here is a simple C program; save it on your desktop as Hello.c (the ‘.c‘ extension tells gcc that the file is C source).

#include <stdio.h>

int main()

{

printf("Hello world\n");

return 0;

}

Compile it

root@tecmint:~# gcc Hello.c

Run it

root@tecmint:~# ./a.out

Hello world

Note: When a C program is compiled, the output is written to a file named “a.out” by default, and every time you compile another C program the same “a.out” is overwritten. Hence it is good practice to name the output file at compile time, so there is no risk of overwriting it.

Compile it this way

root@tecmint:~# gcc -o Hello Hello.c

Here ‘-o‘ sends the output to ‘Hello‘ file and not ‘a.out‘. Run it again.

root@tecmint:~# ./Hello

Hello world

39. Command: g++

g++ is the standard compiler for ‘C++‘, a widely used object-oriented programming language. Here is a simple C++ program; save it on your desktop as Add.cpp (the ‘.cpp‘ extension marks the file as C++ source).

#include <iostream>

using namespace std;

int main()

{

int a;

int b;

cout<<"Enter first number:\n";

cin >> a;

cout <<"Enter the second number:\n";

cin>> b;

cin.ignore();

int result = a + b;

cout<<"Result is"<<" "<<result<<endl;

cin.get();

return 0;

}

Compile it

root@tecmint:~# g++ Add.cpp

Run it

root@tecmint:~# ./a.out

Enter first number:

...

...

Note: When a C++ program is compiled, the output is written to a file named “a.out” by default, and every time you compile another C++ program the same “a.out” is overwritten. Hence it is good practice to name the output file at compile time, so there is no risk of overwriting it.

Compile it this way

root@tecmint:~# g++ -o Add Add.cpp

Run it

root@tecmint:~# ./Add

Enter first number:

...

...

40. Command: java

Java is one of the world’s most widely used programming languages and is considered fast, secure, and reliable. Many of today’s web-based services run on Java.

Create a simple Java program by pasting the text below into a file named tecmint.java (the ‘.java‘ extension is required by the compiler).

class tecmint {

public static void main(String[] arguments) {

System.out.println("Tecmint ");

}

}

Compile it using javac

root@tecmint:~# javac tecmint.java

Run it

root@tecmint:~# java tecmint

Note: Almost every distribution ships with the gcc compiler, and most also include g++ and a Java compiler, though some do not. You can install the required package with apt or yum.

Don’t forget to leave your valuable comments and mention the type of article you want to see here. I will soon be back with an interesting topic about the lesser-known facts about Linux.

20 Advanced Commands for Linux Experts

Thanks for all the likes, good words and support you gave us for the first two parts of this article. In the first article we discussed commands for users who have just switched to Linux and need the basic knowledge to get started.

- 20 Useful Commands for Linux Newbies

In the second article we discussed the commands that a middle-level user needs to manage his own system.

- 20 Advanced Commands for Middle Level Linux Users

What next? In this article I will explain the commands required for administering a Linux server.

Linux Expert Commands

41. Command: ifconfig

ifconfig is used to configure the kernel-resident network interfaces. It is used at boot time to set up interfaces as necessary. After that, it is usually only needed when debugging or when system tuning is needed.

Check Active Network Interfaces

[avishek@tecmint ~]$ ifconfig

eth0 Link encap:Ethernet HWaddr 40:2C:F4:EA:CF:0E

inet addr:192.168.1.3 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::422c:f4ff:feea:cf0e/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:163843 errors:0 dropped:0 overruns:0 frame:0

TX packets:124990 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:154389832 (147.2 MiB) TX bytes:65085817 (62.0 MiB)

Interrupt:20 Memory:f7100000-f7120000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:78 errors:0 dropped:0 overruns:0 frame:0

TX packets:78 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:4186 (4.0 KiB) TX bytes:4186 (4.0 KiB)

Check All Network Interfaces

Display details of All interfaces including disabled interfaces using “-a” argument.

[avishek@tecmint ~]$ ifconfig -a

eth0 Link encap:Ethernet HWaddr 40:2C:F4:EA:CF:0E

inet addr:192.168.1.3 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::422c:f4ff:feea:cf0e/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:163843 errors:0 dropped:0 overruns:0 frame:0

TX packets:124990 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:154389832 (147.2 MiB) TX bytes:65085817 (62.0 MiB)

Interrupt:20 Memory:f7100000-f7120000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:78 errors:0 dropped:0 overruns:0 frame:0

TX packets:78 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:4186 (4.0 KiB) TX bytes:4186 (4.0 KiB)

virbr0 Link encap:Ethernet HWaddr 0e:30:a3:3a:bf:03

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

Disable an Interface

[avishek@tecmint ~]$ ifconfig eth0 down

Enable an Interface

[avishek@tecmint ~]$ ifconfig eth0 up

Assign IP Address to an Interface

Assign “192.168.1.12” as the IP address for the interface eth0.

[avishek@tecmint ~]$ ifconfig eth0 192.168.1.12

Change Subnet Mask of Interface eth0

[avishek@tecmint ~]$ ifconfig eth0 netmask 255.255.255.

Change Broadcast Address of Interface eth0

[avishek@tecmint ~]$ ifconfig eth0 broadcast 192.168.1.255

Assign IP Address, Netmask and Broadcast to Interface eth0

[avishek@tecmint ~]$ ifconfig eth0 192.168.1.12 netmask 255.255.255.0 broadcast 192.168.1.255

Note: If using a wireless network you need to use command “iwconfig“. For more “ifconfig” command examples and usage, read 15 Useful “ifconfig” Commands.
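
The broadcast address used with the netmask 255.255.255.0 above is not arbitrary: it is the IP address with every host bit (the zero bits of the netmask) set to 1. A small sketch of that arithmetic in plain shell; the function name ‘broadcast_of‘ is made up for this example, and only dotted-quad IPv4 input is handled.

```shell
broadcast_of() {
    # $1 = IPv4 address, $2 = netmask, both in dotted-quad form.
    saved_ifs=$IFS
    IFS=.
    set -- $1 $2            # split on dots: $1..$4 = address, $5..$8 = mask
    IFS=$saved_ifs
    # For each octet, OR the address with the inverted mask (255 - mask).
    echo "$(( $1 | (255 - $5) )).$(( $2 | (255 - $6) )).$(( $3 | (255 - $7) )).$(( $4 | (255 - $8) ))"
}

broadcast_of 192.168.1.12 255.255.255.0     # prints 192.168.1.255
broadcast_of 10.0.5.77 255.255.255.224      # prints 10.0.5.95
```

Passing a broadcast address that does not match the netmask is a common cause of odd network behaviour, so it is worth checking the two together.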

42. Command: netstat

The netstat command displays various network-related information such as network connections, routing tables, interface statistics, masquerade connections and multicast memberships.

List All Network Ports

[avishek@tecmint ~]$ netstat -a

Active UNIX domain sockets (servers and established)

Proto RefCnt Flags Type State I-Node Path

unix 2 [ ACC ] STREAM LISTENING 741379 /run/user/user1/keyring-I5cn1c/gpg

unix 2 [ ACC ] STREAM LISTENING 8965 /var/run/acpid.socket

unix 2 [ ACC ] STREAM LISTENING 18584 /tmp/.X11-unix/X0

unix 2 [ ACC ] STREAM LISTENING 741385 /run/user/user1/keyring-I5cn1c/ssh

unix 2 [ ACC ] STREAM LISTENING 741387 /run/user/user1/keyring-I5cn1c/pkcs11

unix 2 [ ACC ] STREAM LISTENING 20242 @/tmp/dbus-ghtTjuPN46

unix 2 [ ACC ] STREAM LISTENING 13332 /var/run/samba/winbindd_privileged/pipe

unix 2 [ ACC ] STREAM LISTENING 13331 /tmp/.winbindd/pipe

unix 2 [ ACC ] STREAM LISTENING 11030 /var/run/mysqld/mysqld.sock

unix 2 [ ACC ] STREAM LISTENING 19308 /tmp/ssh-qnZadSgJAbqd/agent.3221

unix 2 [ ACC ] STREAM LISTENING 436781 /tmp/HotShots

unix 2 [ ACC ] STREAM LISTENING 46110 /run/user/ravisaive/pulse/native

unix 2 [ ACC ] STREAM LISTENING 19310 /tmp/gpg-zfE9YT/S.gpg-agent

....

List All TCP Ports

[avishek@tecmint ~]$ netstat -at

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 localhost:mysql *:* LISTEN

tcp 0 0 *:5901 *:* LISTEN

tcp 0 0 *:5902 *:* LISTEN

tcp 0 0 *:x11-1 *:* LISTEN

tcp 0 0 *:x11-2 *:* LISTEN

tcp 0 0 *:5938 *:* LISTEN

tcp 0 0 localhost:5940 *:* LISTEN

tcp 0 0 ravisaive-OptiPl:domain *:* LISTEN

tcp 0 0 ravisaive-OptiPl:domain *:* LISTEN

tcp 0 0 localhost:ipp *:* LISTEN

tcp 0 0 ravisaive-OptiPle:48270 ec2-23-21-236-70.c:http ESTABLISHED

tcp 0 0 ravisaive-OptiPle:48272 ec2-23-21-236-70.c:http TIME_WAIT

tcp 0 0 ravisaive-OptiPle:48421 bom03s01-in-f22.1:https ESTABLISHED

tcp 0 0 ravisaive-OptiPle:48269 ec2-23-21-236-70.c:http ESTABLISHED

tcp 0 0 ravisaive-OptiPle:39084 channel-ecmp-06-f:https ESTABLISHED

...

Show Statistics for All Ports

[avishek@tecmint ~]$ netstat -s

Ip:

4994239 total packets received

0 forwarded

0 incoming packets discarded

4165741 incoming packets delivered

3248924 requests sent out

8 outgoing packets dropped

Icmp:

29460 ICMP messages received

566 input ICMP message failed.

ICMP input histogram:

destination unreachable: 98

redirects: 29362

2918 ICMP messages sent

0 ICMP messages failed

ICMP output histogram:

destination unreachable: 2918

IcmpMsg:

InType3: 98

InType5: 29362

OutType3: 2918

Tcp:

94533 active connections openings

23 passive connection openings

5870 failed connection attempts

7194 connection resets received

....

If for some reason you do not want host, port and user names to be resolved in the netstat output, add ‘-n‘:

[avishek@tecmint ~]$ netstat -an

You may also want netstat to print its output continuously until it is interrupted (Ctrl+C):

[avishek@tecmint ~]$ netstat -c

For more “netstat” command examples and usage, see the article 20 Netstat Command Examples.

43. Command: nslookup

A network utility program used to obtain information about Internet servers. As its name suggests, the utility finds name server information for domains by querying DNS.

[avishek@tecmint ~]$ nslookup tecmint.com

Server: 192.168.1.1

Address: 192.168.1.1#53

Non-authoritative answer:

Name: tecmint.com

Address: 50.16.67.239

Query Mail Exchanger Record

[avishek@tecmint ~]$ nslookup -query=mx tecmint.com

Server: 192.168.1.1

Address: 192.168.1.1#53

Non-authoritative answer:

tecmint.com mail exchanger = 0 smtp.secureserver.net.

tecmint.com mail exchanger = 10 mailstore1.secureserver.net.

Authoritative answers can be found from:

Query Name Server

[avishek@tecmint ~]$ nslookup -type=ns tecmint.com

Server: 192.168.1.1

Address: 192.168.1.1#53

Non-authoritative answer:

tecmint.com nameserver = ns3404.com.

tecmint.com nameserver = ns3403.com.

Authoritative answers can be found from:

Query DNS Record

[avishek@tecmint ~]$ nslookup -type=any tecmint.com

Server: 192.168.1.1

Address: 192.168.1.1#53

Non-authoritative answer:

tecmint.com mail exchanger = 10 mailstore1.secureserver.net.

tecmint.com mail exchanger = 0 smtp.secureserver.net.

tecmint.com nameserver = ns06.domaincontrol.com.

tecmint.com nameserver = ns3404.com.

tecmint.com nameserver = ns3403.com.

tecmint.com nameserver = ns05.domaincontrol.com.

Authoritative answers can be found from:

Query Start of Authority

[avishek@tecmint ~]$ nslookup -type=soa tecmint.com

Server: 192.168.1.1

Address: 192.168.1.1#53

Non-authoritative answer:

tecmint.com

origin = ns3403.hostgator.com

mail addr = dnsadmin.gator1702.hostgator.com

serial = 2012081102

refresh = 86400

retry = 7200

expire = 3600000

minimum = 86400

Authoritative answers can be found from:

Query Port Number

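Change the port number on which you want to connect. Note that nslookup options take the form ‘-port=56‘; written with a space as below, nslookup treats ‘56‘ as the name to look up and ‘tecmint.com‘ as the server, which is what the output (still on port 53) shows.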

[avishek@tecmint ~]$ nslookup -port 56 tecmint.com

Server: tecmint.com

Address: 50.16.76.239#53

Name: 56

Address: 14.13.253.12

Read Also : 8 Nslookup Commands

44. Command: dig

dig is a tool for querying DNS nameservers for information about host addresses, mail exchanges, nameservers, and related information. This tool can be used from any Linux (Unix) or Macintosh OS X operating system. The most typical use of dig is to simply query a single host.

[avishek@tecmint ~]$ dig tecmint.com

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.el6 <<>> tecmint.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<

Turn Off Comment Lines

[avishek@tecmint ~]$ dig tecmint.com +nocomments

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.el6 <<>> tecmint.com +nocomments

;; global options: +cmd

;tecmint.com. IN A

tecmint.com. 14400 IN A 40.216.66.239

;; Query time: 418 msec

;; SERVER: 192.168.1.1#53(192.168.1.1)

;; WHEN: Sat Jun 29 13:53:22 2013

;; MSG SIZE rcvd: 45

Turn Off Authority Section

[avishek@tecmint ~]$ dig tecmint.com +noauthority

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.el6 <<>> tecmint.com +noauthority

;; global options: +cmd

;; Got answer:

;; ->>HEADER<

Turn Off Additional Section

[avishek@tecmint ~]$ dig tecmint.com +noadditional

; <<>> DiG 9.9.2-P1 <<>> tecmint.com +noadditional

;; global options: +cmd

;; Got answer:

;; ->>HEADER<

Turn Off Stats Section

[avishek@tecmint ~]$ dig tecmint.com +nostats

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.el6 <<>> tecmint.com +nostats

;; global options: +cmd

;; Got answer:

;; ->>HEADER<

Turn Off Answer Section

[avishek@tecmint ~]$ dig tecmint.com +noanswer

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.el6 <<>> tecmint.com +noanswer

;; global options: +cmd

;; Got answer:

;; ->>HEADER<

Disable All Sections at Once

[avishek@tecmint ~]$ dig tecmint.com +noall

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.el6 <<>> tecmint.com +noall

;; global options: +cmd

Read Also : 10 Linux Dig Command Examples

45. Command: uptime

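Suppose you have just connected to your Linux server and found something unusual or malicious. What will you do? Guess? No, definitely not. You could run uptime to see the current time, how long the system has been running, how many users are logged in and what the load has been, which tells you whether the server rebooted while it was unattended.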

[avishek@tecmint ~]$ uptime

14:37:10 up 4:21, 2 users, load average: 0.00, 0.00, 0.04
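
On Linux the “up” figure comes from ‘/proc/uptime‘, whose first field is the number of seconds since boot. A rough sketch of the conversion into days, hours and minutes (the variable names are illustrative only):

```shell
# First field of /proc/uptime: seconds since boot, with a fractional part.
read -r up_raw _idle < /proc/uptime
up_seconds=${up_raw%%.*}         # drop the fraction

days=$((up_seconds / 86400))
hours=$((up_seconds % 86400 / 3600))
minutes=$((up_seconds % 3600 / 60))
echo "up ${days} days, ${hours}:$(printf '%02d' "$minutes")"
```

A value much smaller than you expect is a quick hint that the machine has been restarted recently.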

46. Command: wall

One of the most important commands for an administrator, wall sends a message to everybody logged in whose mesg permission is set to “yes“. The message can be given as an argument to wall, or it can be sent to wall’s standard input.

[avishek@tecmint ~]$ wall "we will be going down for maintenance for one hour sharply at 03:30 pm"

Broadcast message from root@localhost.localdomain (pts/0) (Sat Jun 29 14:44:02 2013):

we will be going down for maintenance for one hour sharply at 03:30 pm

47. Command: mesg

Lets you control whether other people can use the “write” command to send text to your screen.

mesg [n|y]

n - prevents the message from others popping up on the screen.

y – Allows messages to appear on your screen.

48. Command: write

Lets you send text directly to the screen of another user logged in to the same machine, if that user’s ‘mesg’ is set to ‘y’.

[avishek@tecmint ~]$ write ravisaive

49. Command: talk

An enhancement to write command, talk command lets you talk to the logged in users.

[avishek@tecmint ~]$ talk ravisaive

Note: If talk command is not installed, you can always apt or yum the required packages.

[avishek@tecmint ~]$ yum install talk

OR

[avishek@tecmint ~]$ apt-get install talk

50. Command: w

Does the command ‘w’ seem funny to you? It actually is a real command, even if it is just one letter long! The output of “w” combines that of the uptime and who commands, in that order.

[avishek@tecmint ~]$ w

15:05:42 up 4:49, 3 users, load average: 0.02, 0.01, 0.00

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

server tty7 :0 14:06 4:43m 1:42 0.08s pam: gdm-passwo

server pts/0 :0.0 14:18 0.00s 0.23s 1.65s gnome-terminal

server pts/1 :0.0 14:47 4:43 0.01s 0.01s bash

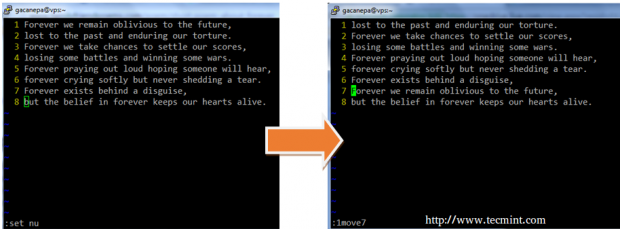

51. Command: rename

As the name suggests, this command renames files. In the util-linux version the syntax is ‘rename from to files‘: in each listed file name, the first occurrence of ‘from‘ is replaced with ‘to‘.

Given files named a1, a2, a3, a4.....1213

Just type the commands.

rename a1 a0 a?

rename a1 a0 a??
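
Because ‘rename‘ ships in incompatible variants (the util-linux tool takes ‘from to files‘, while the Perl version common on Debian and Ubuntu takes an expression), a plain ‘mv‘ loop is a portable fallback. The sketch below zero-pads names such as a1, a2, a10 to a001, a002, a010; the function name, the three-digit width and the sample files are all assumptions for illustration.

```shell
pad_names() {
    # $1 = directory holding files named a<number>.
    # Assumes the numbers are written without leading zeros.
    for path in "$1"/a[0-9]*; do
        [ -e "$path" ] || continue          # the glob matched nothing
        number=${path##*/a}                 # strip the directory and the leading "a"
        target="$1/$(printf 'a%03d' "$number")"
        [ "$path" = "$target" ] || mv -- "$path" "$target"
    done
}

demo=$(mktemp -d)
touch "$demo/a1" "$demo/a2" "$demo/a10"
pad_names "$demo"
ls "$demo"
```

Padded names have the advantage that ‘ls‘ lists them in numeric order.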

52. Command: top

Displays the running processes along with their CPU and memory usage. By default the display refreshes automatically and keeps running until it is interrupted (press ‘q‘ or Ctrl+C).

[avishek@tecmint ~]$ top

top - 14:06:45 up 10 days, 20:57, 2 users, load average: 0.10, 0.16, 0.21

Tasks: 240 total, 1 running, 235 sleeping, 0 stopped, 4 zombie

%Cpu(s): 2.0 us, 0.5 sy, 0.0 ni, 97.5 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem: 2028240 total, 1777848 used, 250392 free, 81804 buffers

KiB Swap: 3905532 total, 156748 used, 3748784 free, 381456 cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

23768 ravisaiv 20 0 1428m 571m 41m S 2.3 28.9 14:27.52 firefox

24182 ravisaiv 20 0 511m 132m 25m S 1.7 6.7 2:45.94 plugin-containe

26929 ravisaiv 20 0 5344 1432 972 R 0.7 0.1 0:00.07 top

24875 ravisaiv 20 0 263m 14m 10m S 0.3 0.7 0:02.76 lxterminal

1 root 20 0 3896 1928 1228 S 0.0 0.1 0:01.62 init

2 root 20 0 0 0 0 S 0.0 0.0 0:00.06 kthreadd

3 root 20 0 0 0 0 S 0.0 0.0 0:17.28 ksoftirqd/0

5 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kworker/0:0H

7 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kworker/u:0H

8 root rt 0 0 0 0 S 0.0 0.0 0:00.12 migration/0

9 root 20 0 0 0 0 S 0.0 0.0 0:00.00 rcu_bh

10 root 20 0 0 0 0 S 0.0 0.0 0:26.94 rcu_sched

11 root rt 0 0 0 0 S 0.0 0.0 0:01.95 watchdog/0

12 root rt 0 0 0 0 S 0.0 0.0 0:02.00 watchdog/1

13 root 20 0 0 0 0 S 0.0 0.0 0:17.80 ksoftirqd/1

14 root rt 0 0 0 0 S 0.0 0.0 0:00.12 migration/1

16 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kworker/1:0H

17 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 cpuset

18 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 khelper

19 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kdevtmpfs

20 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 netns

21 root 20 0 0 0 0 S 0.0 0.0 0:00.04 bdi-default

22 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kintegrityd

23 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kblockd

24 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 ata_sff

Read Also : 12 TOP Command Examples

53. Command: mkfs.ext4

This command creates a new ext4 file system on the specified device. If the wrong device is given, that whole partition will be wiped and formatted, so do not run this command unless you understand what you are doing.

mkfs.ext4 /dev/sda1 (sda1 block will be formatted)

mkfs.ext4 /dev/sdb1 (sdb1 block will be formatted)

Read More: What is Ext4 and How to Create and Convert

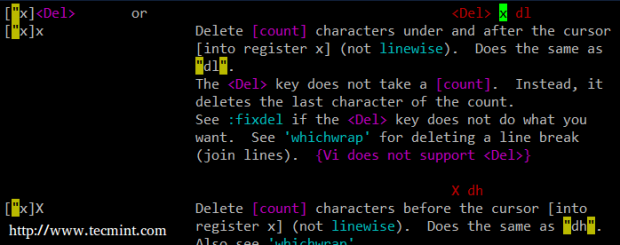

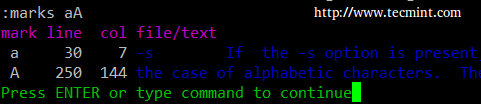

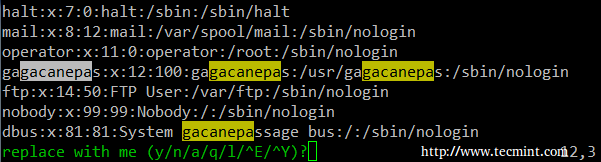

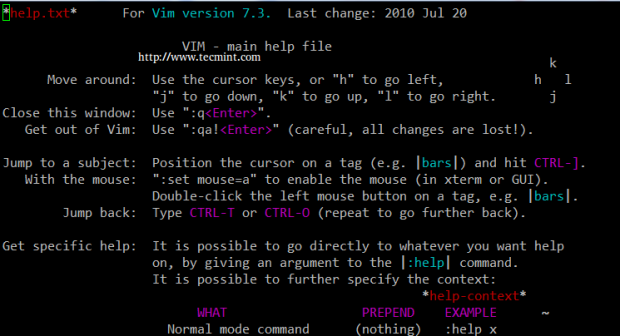

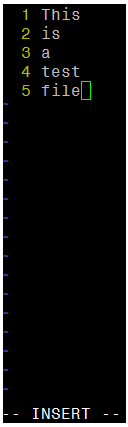

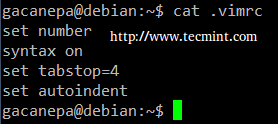

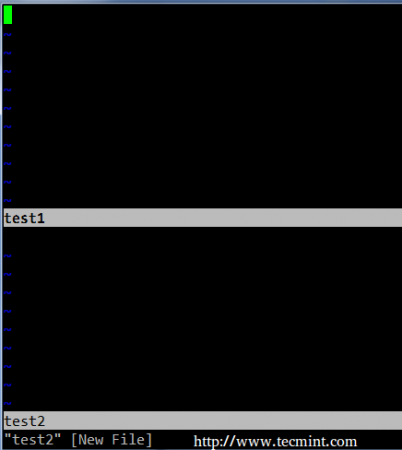

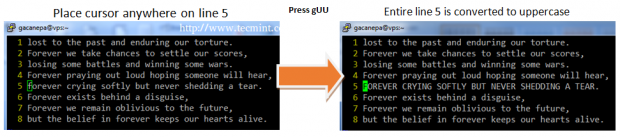

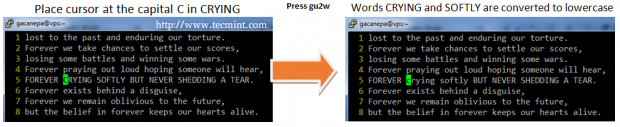

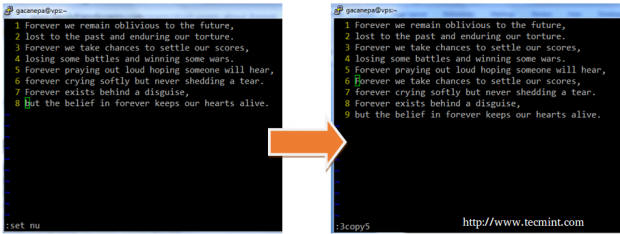

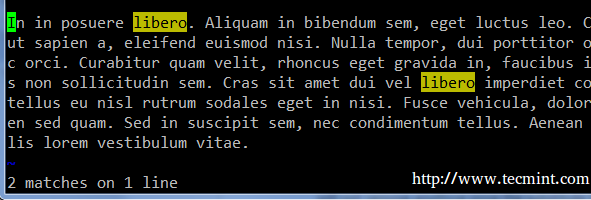

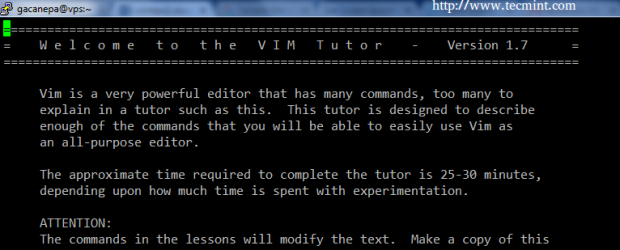

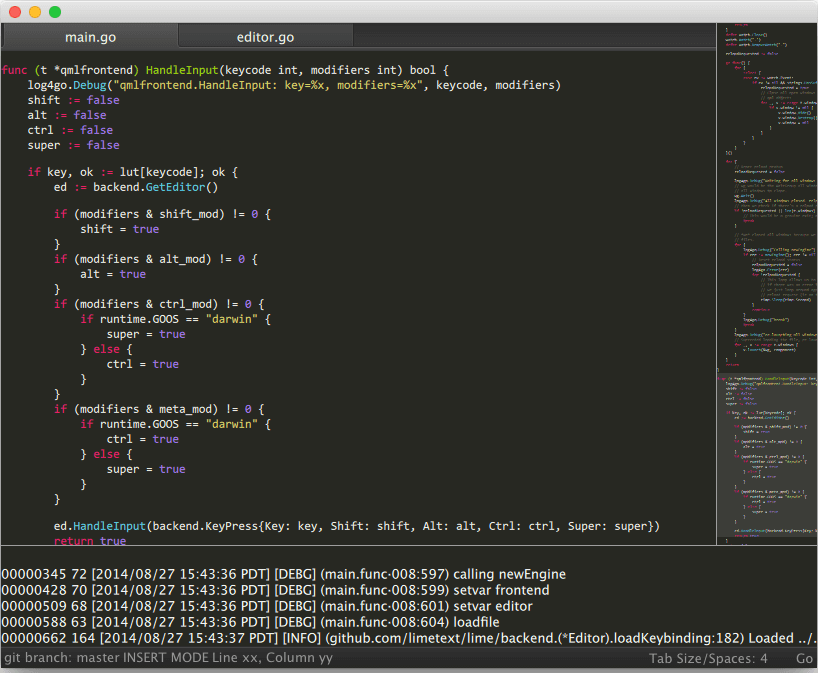

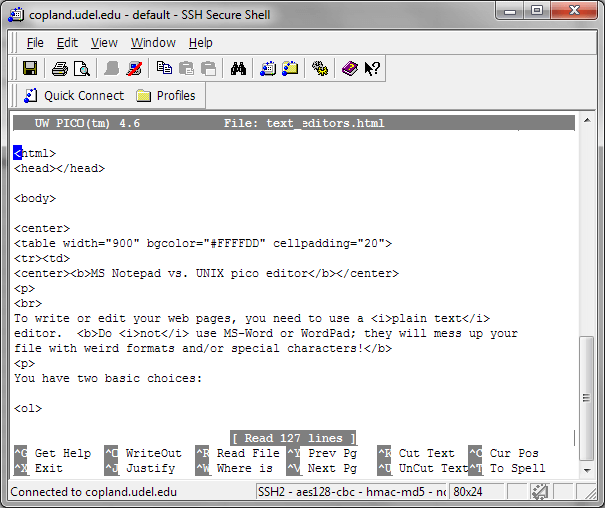

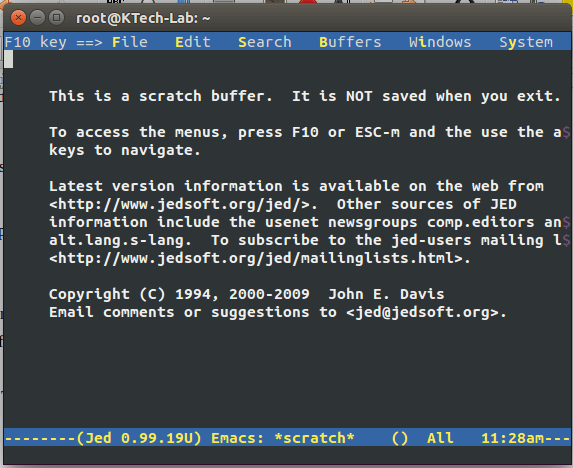

54. Command: vi/emacs/nano

vi (visual), emacs and nano are some of the most commonly used editors in Linux. They are often used to edit text and configuration files. A quick guide to working with vi and nano follows; emacs is not covered here.

vi-editor

[avishek@tecmint ~]$ touch a.txt (creates a text file a.txt)

[avishek@tecmint ~]$ vi a.txt (open a.txt with vi editor)

[press ‘i’ to enter insert mode, or you won’t be able to type in anything]

echo "Hello" (your text here for the file)

- Esc (exit insert mode and return to command mode).

- :wq! (saves the file with the current text and quits; ‘!’ is to override).

nano editor

[avishek@tecmint ~]$ nano a.txt (open a.txt file to be edited with nano)

Edit the content as required.

Ctrl+X (to close the editor). It will show the following prompt:

Save modified buffer (ANSWERING "No" WILL DESTROY CHANGES) ?

Y Yes

N No ^C Cancel

Press ‘y’ for yes, confirm the file name with Enter, and you are done.

55. Command: rsync

rsync copies files and has a ‘-P‘ switch that shows progress. So if you have rsync installed, you could use a simple alias.

alias cp='rsync -aP'

Now try to copy a large file in the terminal and watch the output, which shows the remaining data much like a progress bar.

Moreover, keeping and maintaining backups is one of the most important, and most boring, jobs a system administrator has to perform. rsync is a very nice tool (there are several others) for creating and maintaining backups from the terminal.

[avishek@tecmint ~]$ rsync -zvr IMG_5267\ copy\=33\ copy\=ok.jpg ~/Desktop/

sending incremental file list

IMG_5267 copy=33 copy=ok.jpg

sent 2883830 bytes received 31 bytes 5767722.00 bytes/sec

total size is 2882771 speedup is 1.00

Note: -z for compression, -v for verbose and -r for recursive.

56. Command: free

Keeping track of memory and resources is as important as any other task performed by an administrator, and the ‘free‘ command comes to the rescue here.

Current Usage Status of Memory

[avishek@tecmint ~]$ free

total used free shared buffers cached

Mem: 2028240 1788272 239968 0 69468 363716

-/+ buffers/cache: 1355088 673152

Swap: 3905532 157076 3748456

Tuned Output in Bytes, KB, MB, or GB

[avishek@tecmint ~]$ free -b

total used free shared buffers cached

Mem: 2076917760 1838272512 238645248 0 71348224 372670464

-/+ buffers/cache: 1394253824 682663936

Swap: 3999264768 160845824 3838418944

[avishek@tecmint ~]$ free -k

total used free shared buffers cached

Mem: 2028240 1801484 226756 0 69948 363704

-/+ buffers/cache: 1367832 660408

Swap: 3905532 157076 3748456

[avishek@tecmint ~]$ free -m

total used free shared buffers cached

Mem: 1980 1762 218 0 68 355

-/+ buffers/cache: 1338 641

Swap: 3813 153 3660

[avishek@tecmint ~]$ free -g

total used free shared buffers cached

Mem: 1 1 0 0 0 0

-/+ buffers/cache: 1 0

Swap: 3 0 3

Check Current Usage in Human Readable Format

[avishek@tecmint ~]$ free -h

total used free shared buffers cached

Mem: 1.9G 1.7G 208M 0B 68M 355M

-/+ buffers/cache: 1.3G 632M

Swap: 3.7G 153M 3.6G

Check Status Continuously at Regular Intervals

[avishek@tecmint ~]$ free -s 3

total used free shared buffers cached

Mem: 2028240 1824096 204144 0 70708 364180

-/+ buffers/cache: 1389208 639032

Swap: 3905532 157076 3748456

total used free shared buffers cached

Mem: 2028240 1824192 204048 0 70716 364212

-/+ buffers/cache: 1389264 638976

Swap: 3905532 157076 3748456
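In scripts, the output of free is often reduced to a single figure. The sketch below assumes the column layout shown above, where the second field of the "Mem:" row is total and the third is used; the function name mem_used_percent is made up for this example. Note that in the older output format shown in this article, "used" includes buffers and cache.

```shell
#!/bin/sh
# Print used memory as a whole-number percentage of total.
# Reads `free` output on standard input and uses the "Mem:" row,
# where column 2 is total and column 3 is used.
mem_used_percent() {
    awk '/^Mem:/ { printf "%d\n", $3 * 100 / $2 }'
}

# Typical use: pipe live output into the function, if free is installed.
if command -v free >/dev/null 2>&1; then
    free -k | mem_used_percent
fi
```

Fed the first "Mem:" row from the example above (2028240 total, 1788272 used), the function prints 88.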

Read Also : 10 Examples of Free Command

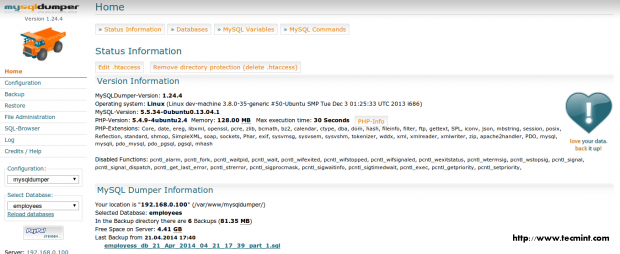

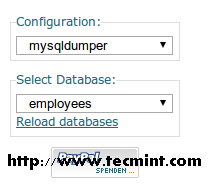

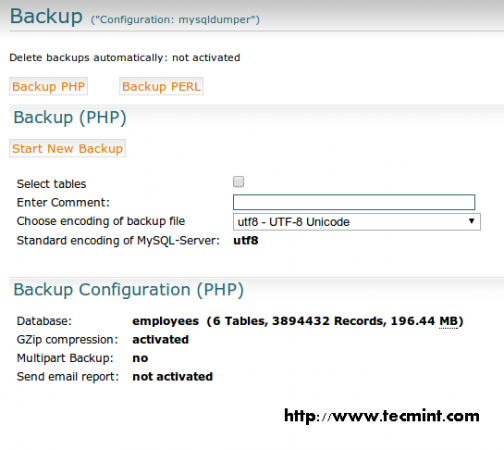

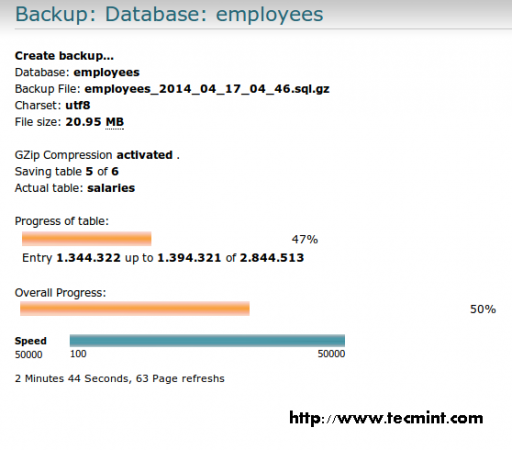

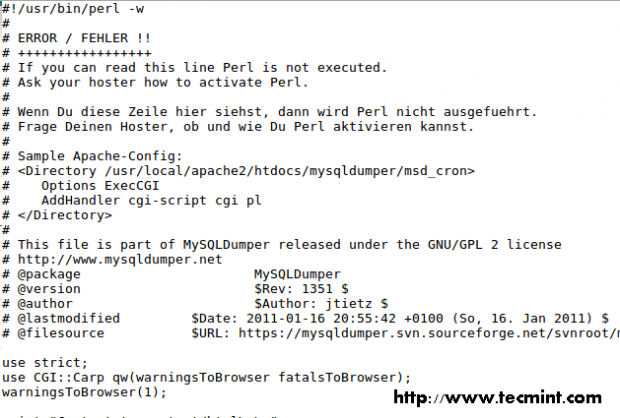

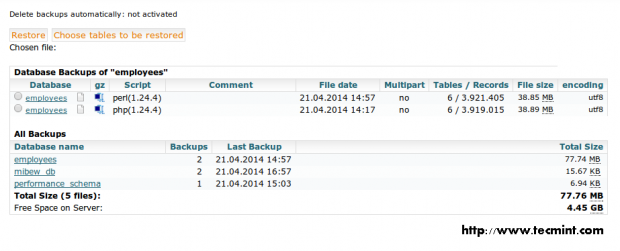

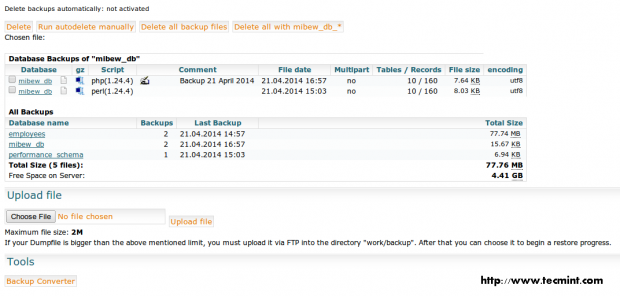

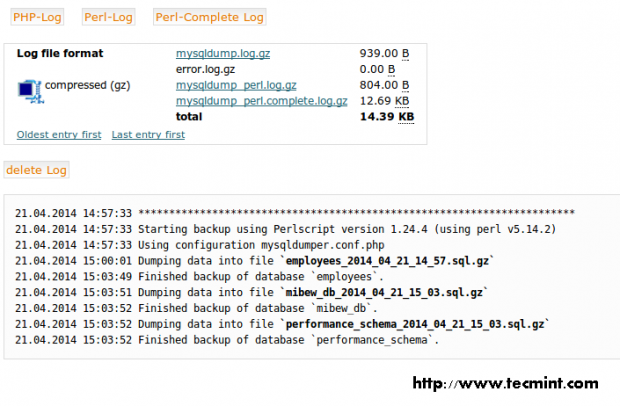

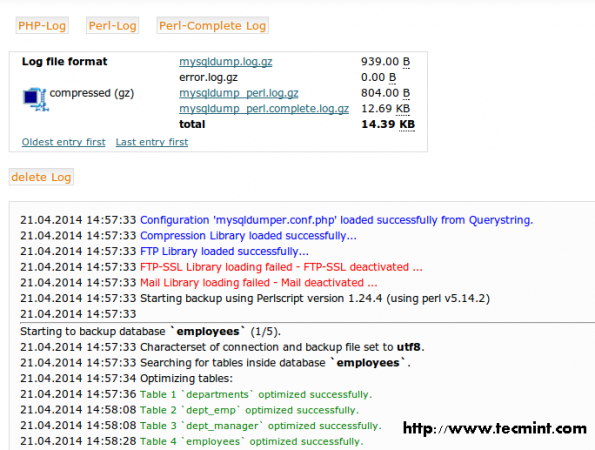

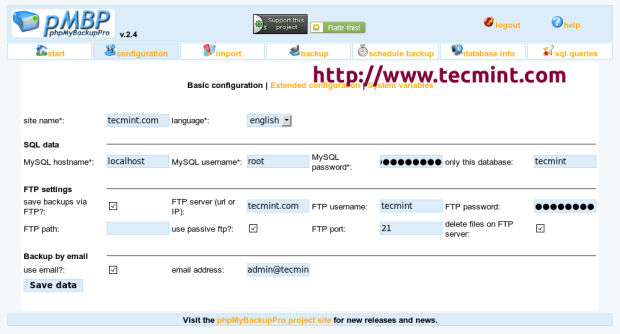

57. Command: mysqldump

By now you have probably guessed what this command does from its name. The mysqldump command dumps (backs up) all databases, or one particular database, into a given file. For example,

[avishek@tecmint ~]$ mysqldump -u root -p --all-databases > /home/server/Desktop/backupfile.sql

Note: mysqldump requires the MySQL server to be running and the correct password for authorisation. We have covered some useful “mysqldump” commands in Database Backup with mysqldump Command.
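To back up a single database rather than all of them, pass its name instead of --all-databases. The following is only a sketch: the helper names dump_file_name and dump_database and the database name mydb are hypothetical, and it assumes the MySQL root account as in the example above.

```shell
#!/bin/sh
# dump_file_name DB: print a dated file name, e.g. mydb-YYYY-MM-DD.sql
dump_file_name() {
    printf '%s-%s.sql\n' "$1" "$(date +%F)"
}

# dump_database DB: back up one database to a dated file in the
# current directory. -p makes mysqldump prompt for the password.
dump_database() {
    mysqldump -u root -p "$1" > "$(dump_file_name "$1")"
}

# Example (hypothetical database name):
# dump_database mydb
```

Such a dump can later be loaded into an existing database with the mysql client, for example mysql -u root -p mydb < dumpfile.sql.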

58. Command: mkpasswd

Generate a hard-to-guess, random password of the specified length.

[avishek@tecmint ~]$ mkpasswd -l 10

zI4+Ybqfx9

[avishek@tecmint ~]$ mkpasswd -l 20

w0Pr7aqKk&hmbmqdrlmk

Note: -l 10 generates a random password of 10 characters, while -l 20 generates one of 20 characters; the length can be set to whatever you need. This command is very useful and is often used in scripts to generate random passwords. You might need to install the ‘expect’ package with yum or apt to use this command.

[root@tecmint ~]# yum install expect

OR

[root@tecmint ~]# apt-get install expect
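If installing ‘expect’ is not an option, a similar result can be approximated with standard tools. This is a different technique from mkpasswd, shown only as a sketch; the function name gen_password and the chosen character set are assumptions.

```shell
#!/bin/sh
# gen_password LENGTH: print LENGTH random characters drawn from
# letters, digits and a few symbols, read from /dev/urandom.
# LENGTH defaults to 10 when omitted.
gen_password() {
    length=${1:-10}
    # tr -dc deletes every byte that is NOT in the listed set;
    # head -c stops reading once enough characters have been kept.
    LC_ALL=C tr -dc 'A-Za-z0-9+&%@#' < /dev/urandom | head -c "$length"
    echo
}

gen_password 10
gen_password 20
```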

59. Command: paste

Merge two or more text files line by line. For example, if the content of file1 was:

1

2

3

and file2 was:

a

b

c

d

then running ‘paste file1 file2 > file3’ would produce a file3 containing:

1 a

2 b

3 c

d
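The merge described above can be reproduced with the short script below, which works in a temporary directory so that no existing files are touched. By default paste separates the columns with a tab, so the last line of file3 starts with an empty field followed by "d".

```shell
#!/bin/sh
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Recreate the two input files from the example.
printf '1\n2\n3\n' > file1
printf 'a\nb\nc\nd\n' > file2

# paste joins corresponding lines, separated by a tab by default.
paste file1 file2 > file3
cat file3

# -d changes the delimiter, here to a comma.
paste -d ',' file1 file2
```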

60. Command: lsof

lsof stands for “list open files” and displays all the files that are currently open on your system. It is very useful for figuring out which processes are using a certain file, or for displaying all the files opened by a single process. You might also be interested in reading 10 lsof Command examples.

[avishek@tecmint ~]$ lsof

COMMAND PID TID USER FD TYPE DEVICE SIZE/OFF NODE NAME

init 1 root cwd DIR 8,1 4096 2 /

init 1 root rtd DIR 8,1 4096 2 /

init 1 root txt REG 8,1 227432 395571 /sbin/init

init 1 root mem REG 8,1 47080 263023 /lib/i386-linux-gnu/libnss_files-2.17.so

init 1 root mem REG 8,1 42672 270178 /lib/i386-linux-gnu/libnss_nis-2.17.so

init 1 root mem REG 8,1 87940 270187 /lib/i386-linux-gnu/libnsl-2.17.so

init 1 root mem REG 8,1 30560 263021 /lib/i386-linux-gnu/libnss_compat-2.17.so

init 1 root mem REG 8,1 124637 270176 /lib/i386-linux-gnu/libpthread-2.17.so

init 1 root mem REG 8,1 1770984 266166 /lib/i386-linux-gnu/libc-2.17.so

init 1 root mem REG 8,1 30696 262824 /lib/i386-linux-gnu/librt-2.17.so

init 1 root mem REG 8,1 34392 262867 /lib/i386-linux-gnu/libjson.so.0.1.0

init 1 root mem REG 8,1 296792 262889 /lib/i386-linux-gnu/libdbus-1.so.3.7.2

init 1 root mem REG 8,1 34168 262840 /lib/i386-linux-gnu/libnih-dbus.so.1.0.0

init 1 root mem REG 8,1 95616 262848 /lib/i386-linux-gnu/libnih.so.1.0.0

init 1 root mem REG 8,1 134376 270186 /lib/i386-linux-gnu/ld-2.17.so

init 1 root 0u CHR 1,3 0t0 1035 /dev/null

init 1 root 1u CHR 1,3 0t0 1035 /dev/null

init 1 root 2u CHR 1,3 0t0 1035 /dev/null

init 1 root 3r FIFO 0,8 0t0 1714 pipe

init 1 root 4w FIFO 0,8 0t0 1714 pipe

init 1 root 5r 0000 0,9 0 6245 anon_inode

init 1 root 6r 0000 0,9 0 6245 anon_inode

init 1 root 7u unix 0xf5e91f80 0t0 8192 @/com/ubuntu/upstart

init 1 root 8w REG 8,1 3916 394 /var/log/upstart/teamviewerd.log.1 (deleted)
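The full listing is long, so in practice lsof is usually narrowed down. The sketch below wraps the "which processes use this file" case in a small helper; the function name pids_using_file and the PID, user name and port in the comments are hypothetical examples.

```shell
#!/bin/sh
# pids_using_file FILE: print the PID of every process that has FILE open.
# -t asks lsof for terse output (PIDs only), which is convenient in scripts.
pids_using_file() {
    lsof -t -- "$1"
}

# Other common forms:
# lsof -p 1234      # files opened by process 1234
# lsof -u avishek   # files opened by user avishek
# lsof -i :80       # processes using network port 80
```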

This is not the end. A system administrator does a lot of work to provide you with the nice interface you work on. System administration is an art of learning and of implementing what you learn as well as possible. We will try to cover all the other necessary topics a Linux professional must learn; Linux, at its core, is itself a process of continuous learning. Your kind words are always welcome and encourage us to put in more effort to give you knowledgeable articles. “Like and share us, to help us spread.”
