In this lesson, we will look at how to install and start using the R programming language on Ubuntu 18.04. R is an open-source language for statistical computing and graphics. After Python, it is one of the most widely used languages for Data Science and Machine Learning, and it works well with tools such as Jupyter Notebooks.

We will start by installing the R programming language on Ubuntu 18.04 and continue with a very simple program in this language. Let’s get started.

Add GPG Keys

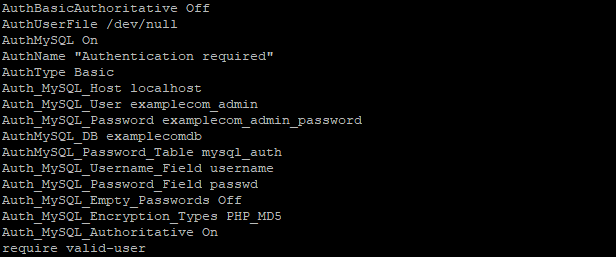

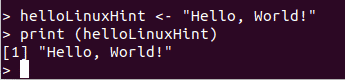

We first need to add the relevant GPG keys:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys E298A3A825C0D65DFD57CBB651716619E084DAB9

Here is what we get back with this command:

[Screenshot: Add GPG Keys]

Add R Repositories

We can now add the CRAN repository that serves R 3.5 packages for Ubuntu 18.04 (bionic):

sudo add-apt-repository 'deb https://cloud.r-project.org/bin/linux/ubuntu bionic-cran35/'

Here is what we get back with this command:

[Screenshot: Add R repositories]

Update Package List

Let’s update the Ubuntu package list so that apt picks up the new repository:

sudo apt update

Install R

We can finally install the R programming language, which Ubuntu packages as r-base:

sudo apt install r-base

Verify Installation

Run the following command to verify your install:

R

Once we run the above command, the R console opens:

[Screenshot: Verify R Installation]
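If you would rather check the installation without opening the interactive console, one option is to ask R for its version banner instead. The following is a minimal sketch that assumes R is on the PATH; the variable name and the fallback message are only illustrative:

```shell
# Capture the first line of the version banner; the variable stays empty if R is missing
r_version=$(R --version 2>/dev/null | head -n 1 || true)

# Report what was found, with an illustrative fallback message
if [ -n "$r_version" ]; then
  echo "Found: $r_version"
else
  echo "R is not installed or not on the PATH"
fi
```

This is handy in setup scripts, where an interactive console would block.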

Start using R programming with Hello World

Once we have a working installation of the R programming language on Ubuntu 18.04, we can start using it with a very simple and traditional “Hello World” program. To do so, open a terminal and type the following command to open the R console:

R

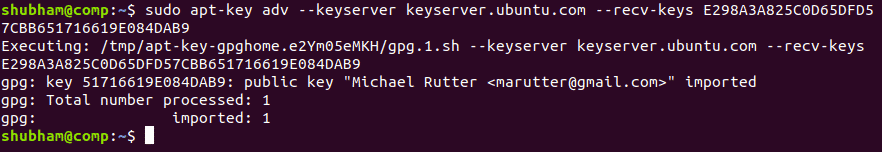

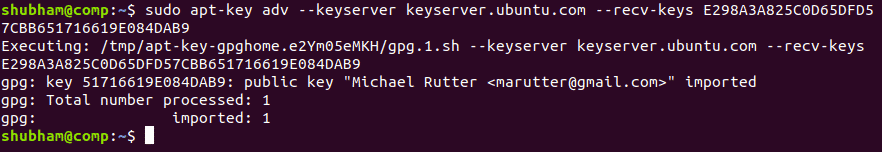

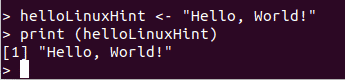

We can now start writing simple statements in the console:

> helloLinuxHint <- "Hello World"

> print(helloLinuxHint)

Here is what we get back with this command:

[Screenshot: R Hello World]

Running R-based scripts

It is also possible to run R-based scripts using the R command line tool. To do this, make a new file ‘linuxhint.R’ with the following content:

helloLinuxHint <- "Hello from the script, World!"

print(helloLinuxHint)

Here is what we get back when we run this script:

[Screenshot: Running R program from Rscript]

Here is the command we used:
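The command itself is not preserved in this copy of the lesson. Given the file name above, it was most likely `Rscript linuxhint.R`. The sketch below assumes the script lives in the current directory and that `Rscript` is on the PATH. It also recreates the file, so it can be pasted as-is:

```shell
# Create linuxhint.R with the two lines shown earlier
# (this overwrites any existing file of that name)
cat > linuxhint.R <<'EOF'
helloLinuxHint <- "Hello from the script, World!"
print(helloLinuxHint)
EOF

# Run the script non-interactively with Rscript, which is installed along with R
if command -v Rscript >/dev/null 2>&1; then
  Rscript linuxhint.R
else
  echo "Rscript not found; install R first"
fi
```

With R installed, this prints `[1] "Hello from the script, World!"`.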

Finally, we will demonstrate another simple program, which calculates the factorial of a number with R:

num <- 5

factorial <- 1

# check whether the number is negative, zero or positive

if (num < 0) {

  print("Sorry, number cannot be negative.")

} else if (num == 0) {

  print("The factorial of 0 is 1.")

} else {

  for (i in 1:num) {

    factorial <- factorial * i

  }

  print(paste("The factorial of", num, "is:", factorial))

}

We can run the above script with the following command:
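The exact command is not preserved here, and the lesson does not say what the file was called. As a sketch, assuming the program was saved as `factorial.R` (a hypothetical name) in the current directory, it could be run like this:

```shell
# factorial.R is a hypothetical file name: substitute the name you saved the program under
script="factorial.R"

if ! command -v Rscript >/dev/null 2>&1; then
  echo "Rscript not found; install R first"
elif [ ! -f "$script" ]; then
  echo "$script not found in the current directory"
else
  # Run the factorial program non-interactively
  Rscript "$script"
fi
```

For num set to 5, the expected factorial is 120.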

Once we run the provided script, we can see the factorial calculated for a given number:

[Screenshot: Calculating factorial of a number]

Now, you’re ready to write your own R programs.

Python vs R for Data Science

If you are a beginner, it is hard to choose between Python and R for data analysis and visualisation. The two languages have more libraries in common than you might imagine. Almost every task can be done in either language, whether it is data wrangling, engineering, feature selection, web scraping, building apps and so on. Some points to consider for Python include:

- Python is well suited to deploying and implementing machine learning at a large scale

- Python code tends to be more scalable and maintainable

- Most data science work can be done with five Python libraries: NumPy, pandas, SciPy, scikit-learn and Seaborn. These have matured considerably over the past few years and are catching up with the R programming language

What makes R more useful is the availability of many statistical packages that produce excellent output for business use cases, which we will explore in coming posts.

Conclusion: Installing R on Ubuntu 18.04

In this lesson, we studied how to install and start using the R programming language on Ubuntu 18.04 with very simple programs in the language. This is just a short introduction; many more lessons on the R programming language are to come. Share your feedback on the lesson with me or with the LinuxHint Twitter handle.
