By Jack M. Germain

Jul 17, 2018 11:06 AM PT

Pinguy OS 18.04 is an Ubuntu-based distribution that offers a non-standard GNOME desktop environment intended to be friendlier for new Linux users.

This distro is a solid Linux OS with a focus on simple and straightforward usability for the non-geek desktop user. If you do not like tinkering with settings or having numerous power-grabbing fancy screen animations, Pinguy OS could be a good choice.

The GNOME desktop is the only user interface option, but Pinguy OS’ developer, Antoni Norman, tweaked the desktop environment with some different software options not usually packaged with GNOME.

His refusal to settle for the run-of-the-mill software typical of mainstream GNOME choices is one of this distro’s strongest features. The developer gives you better application options to create the best user experience within the modified GNOME environment.

Pinguy OS is a great pick for beginning Linux users because it is easy to use and offers a satisfying experience. It is also a no-nonsense computing platform for seasoned Linux users who want a GNOME environment that makes more sense.

Pinguy OS comes with user-friendly enhancements and out-of-the-box support for multimedia codecs and browser plugins. The modified GNOME user interface has enhanced menus, panels and dock bars. It includes a handpicked selection of popular desktop applications for many common computing tasks.

Sensible Modernizing

I last looked at Pinguy OS four years ago and found it both useful and easy to use. The developer offers a major upgrade about once yearly. This latest release, which arrived earlier this month, shows significant updating.

For instance, it includes GNOME 3.28. The developer tweaked many of the components to ensure a fast and modern OS. The gEdit text editor is gone in favor of Pluma. In addition to providing better performance, Pluma is a suitable clone replacement. The file manager app is Nemo 3.8.3.

No email client is bundled with this latest release, but Thunderbird is readily available from repositories. The developer suggests using the GNOME email notifications, which are part of the GNOME desktop and work once you enter online account info into the GNOME account panel.

One of the benefits of running Pinguy OS used to be its support for 32-bit systems. However, the latest tweaking done to Pinguy OS made 32-bit versions a bad user experience. This latest release does not run on very old hardware.

Changes That Work

Earlier versions of Pinguy OS ran Docky, an aging launch dock app. It did not mesh well with the latest Pinguy OS build, so gone it is. In its place are Simple Dock and Places Status Indicator.

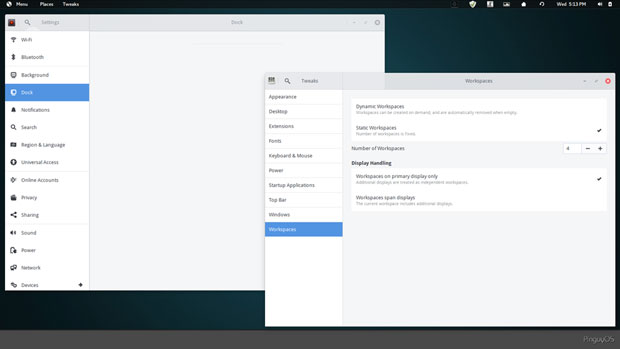

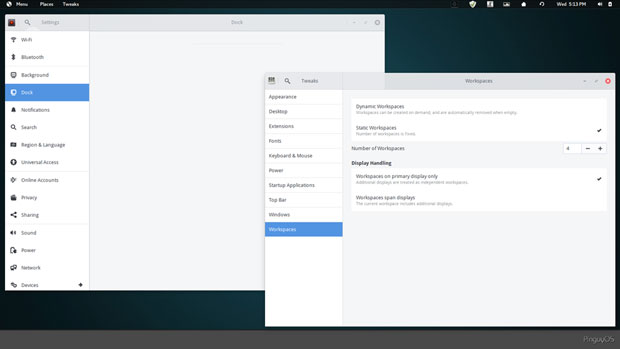

Pinguy OS 18.04 combines application listings, system panel bar tools and workspace switcher into one multifunction panel. Plus, it provides a panel bar for notifications and a Simple Dock for quick launch.

Simple Dock and Places Status Indicator are GNOME extensions. Like Docky, Simple Dock places a quick launch bar at the bottom of the screen. Places Status Indicator adds a menu for quickly navigating places in the system.

The Simple Dock at the bottom of the screen and the panel bar across the top of the screen provide easy access to all system tools. The menu button at the left of the top panel has additional tweaks and improvements.

Some of the default GNOME apps have been replaced with MATE versions. This is another example of why Pinguy OS is not just another retread built on standard GNOME 3.

Earlier versions came with the Conky desktop applets, but all the adjusting done in Pinguy OS 18.04 made it a distraction at best. The developer reasoned that the OS did not need Conky because it confused new users.

I could not agree more. I have found Conky to be clunky. Most of its displays focused on system readouts. Putting them on a desktop just adds to the clutter.

Under the Hood

Pinguy OS is basically Linux Mint infrastructure under the covers, but the GNOME 3 environment is redesigned with many nice usability features. The tweaking in this latest Pinguy OS goes well beyond the GNOME 3 you see in Linux Mint, however.

Pinguy OS has only one desktop flavor. It comes in two options, though: full version or the mini edition. This supports the developer’s goal of making an uncomplicated desktop environment.

The mini option gives you less prepackaged software, but you can add the software not included with a few mouse clicks.

This release uses Linux Kernel 4.15.0-23-generic. It also includes OpenGL version string 3.1 Mesa 18.1.1.

If you are a game player who fancies Windows games, you will like the inclusion of Winepak’s repository. This makes it easy to install your favorites.

Pinguy OS 18.04 also ships with a new GDM and GTK Theme, which contributes greatly to giving the OS a more modern look.

Look and Feel

The desktop itself is clutter-free. You cannot place icons there. That is a feature (or not) of the GNOME 3 desktop.

However, it also reinforces one of the distro’s driving principles. The goal of Pinguy OS is to give users a clean desktop with a fine-tuned interface that works without confusion. This distro does that.

Simplicity is not the only distinguishing trait. Pinguy OS is a thing of beauty. It comes with an eye-catching collection of artwork that randomly displays as a new background every five minutes or so.

Pinguy OS has a clutter-free desktop and a handy weather applet built into the top panel.

This process is controlled by the Variety application. You can change the timing interval and other options for the background images in the Variety Preferences panel.

Pinguy provides a reasonably solid out-of-the-box experience, but the GNOME 3 desktop limits functionality for the sake of simplicity. That is an important distinction.

A panel bar sits at the top of the screen. It holds the traditional menu button in the left corner and system notification icons on the right half of the bar. You cannot add or remove any items from the bar.

A Matter of Taste

Do not get me wrong. Placing simplicity above functionality is a point of user perspective about the GNOME 3 desktop — I do not mean that as a criticism.

GNOME 3 is the foundation under several popular desktop environments. What you can see and do with it is a matter of what the developer does. This developer does a lot.

Pinguy OS is not your typical plain-Jane GNOME desktop. Pinguy OS is a solid, functional OS.

New Linux users will not be frustrated by it, but seasoned Linux users might want an advanced setting tool, which does not exist.

My personal preference is a bottom panel that puts notifications, quick launch icons, and a virtual workspace switcher applet a single mouse click at hand. I’d like to see a few icon launchers on the desktop for added convenience.

That is my comfort zone. Standard GNOME 3 dumbs down the process of navigating quickly. It unnecessarily hides access to moving around open applications on numerous virtual workspaces.

Pinguy OS has enough tweaking to build in a suitable workaround for such limitations. So in that regard, this distro gives you a better integration of the GNOME desktop.

Change for the Better

Earlier versions of Pinguy OS used the default full-screen display to show installed applications. The current release has a much better menu system. The far left corner of the panel bar has a Menu button and a Places button.

Click Places for a dropdown listing of folders such as downloads, documents, music, pictures and videos. Clicking on any of these opens a file manager with more options.

Click the Menu button to open a trilogy of functionality. This is a handy mechanism that pulls together what usually is done with several clicks in standard GNOME.

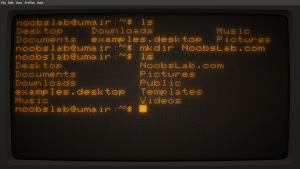

The Simple Dock provides quick access to a few essentials. The apps there include the Web browser, software store, terminal, trash and system monitoring tools.

Multipurpose Panel Bar

When you click the Main Menu button, a panel drops down from the top left corner of the screen. Across the top of this panel are buttons to restart the GNOME shell, suspend/shut down/log out user, lock screen, view Web bookmarks, view recent files, toggle startup apps view, and view applications in list/grid view.

A search window makes finding applications fast. As you type letters, a list of icons for matching applications appears. Click the gear button in the far right of this top row to open a GNOME Menu settings panel. It is here that you can turn on/off numerous features such as activating hot corners.

Down the left edge of the main menu panel is a list of categories that includes Frequent Apps and Favorite Apps. You see that list in the large display area in the center of the dropdown panel. Depending on whether you set grid or list view, the display area shows either a vertical list of program titles or a mini version of the full-screen display that you see in standard GNOME 3.

Built-in Workspace Switcher

What I really dislike about the usual display for virtual workspaces is having to push the mouse pointer into the top left hot corner to slide out the panel from the right edge of the screen. Pinguy OS has a much better solution.

The right edge of the Main Menu panel automatically shows the virtual workspaces in thumbnail view. What a concept! It is simple and efficient.

This approach makes it very handy to navigate among different virtual desktops with a single mouse click. Other features let you use window actions to move an application to another workspace or jump to a new location using shortcuts.

Settings Supremacy

The top panel bar in GNOME (including Pinguy OS) does not dock open applications or provide any panel applets. That short-circuits many of the special features the panel provides in other Linux desktop environments.

However, Pinguy OS makes up for that by providing a consolidation of system settings. This is a very useful alternative.

Access the system settings from the Main Menu/System Tools/Settings. The list of settings and preferences resembles the dropdown top panel on an Android device. It is very straightforward and complete.

A design based on simplicity puts nearly all of the system settings into an Android-style set of preference panels.

A second settings panel of sorts is available by clicking the Gear button at the far right top of the Main Menu. Click on a category to see a full panel view of preferences to turn on/off. This settings panel provides much of the functionality that would otherwise be provided in a fully functional panel bar at the top (or bottom) of the Linux screen.

Bottom Line

Pinguy OS may not satisfy power users who like to control navigation with keyboard shortcuts and advanced system settings. However, if you just want your system to work from the start, Pinguy OS has a lot going for it.

Do not let this distro’s self-avowed fervor for simplicity lead you to misjudge its power and usability. If you think it is too basic for serious users, your thinking might be skewed.

Even if you do not prefer the GNOME 3 desktop, give Pinguy OS a try. It is not your standard GNOME. This OS improves upon most of GNOME 3’s shortcomings. It offers a solid, better GNOME integration.

Want to Suggest a Review?

Is there a Linux software application or distro you’d like to suggest for review? Something you love or would like to get to know?

Please email your ideas to me, and I’ll consider them for a future Linux Picks and Pans column.

And use the Reader Comments feature below to provide your input!

Jack M. Germain has been an ECT News Network reporter since 2003. His main areas of focus are enterprise IT, Linux and open source technologies. He has written numerous reviews of Linux distros and other open source software.