Arm unveiled an “Mbed Linux OS” distro that mixes Yocto and Mbed code and works with its Pelion IoT Platform. Arm also extended Pelion to support x86 devices that use Intel’s SDO provisioning scheme.

Politics and international relations may be fraught with acrimony these days, but the tech world seems a bit friendlier of late. Last week Microsoft joined the Open Invention Network and agreed to grant a royalty-free, unrestricted license of its 60,000-patent portfolio to other OIN members, thereby enabling Android and Linux device manufacturers to avoid exorbitant patent payments. This week, Arm and Intel kept up the happy talk by agreeing to a partnership involving IoT device provisioning.

Arm’s recently announced Pelion IoT Platform will align with Intel’s Secure Device Onboard (SDO) provisioning technology to make it easier for IoT vendors and customers to onboard both x86 and Arm-based devices using a common Pelion platform. Arm also announced Pelion-related partnerships with myDevices and Arduino (see farther below).

In another nod to Intel, Arm unveiled a new, IoT-focused Mbed Linux OS distribution that combines the Linux kernel with tools and recipes from the Intel-backed Yocto Project. The distro also integrates security and IoT connectivity code from Arm’s open source Mbed RTOS.

Pelion IoT Platform architecture

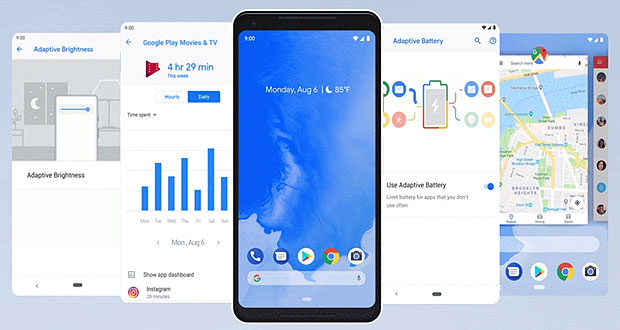

When Pelion was announced, Arm mentioned cross-platform support, but there were few details. Now with the Intel SDO deal and the launch of Mbed Linux OS, Arm has formally expanded Pelion from an MCU-only IoT data aggregation platform to one that supports more advanced x86 and Cortex-A based systems.

Mbed Linux OS

The early stage Mbed Linux OS will be released by the end of the year as an invitation-only developer preview. Both the OS source code and related test suites will eventually be open sourced.

In the Mbed Linux OS blog announcement, Arm’s Mark Wright pitches the distro as a secure, IoT-focused, Cortex-A-oriented “sibling” to the Cortex-M-focused Mbed. Arm will support Mbed Linux with its MCU-oriented Mbed community of 350,000 developers and will offer support for popular Linux development boards and modules. The Softbank-owned company will also supply optional commercial support.

Like Mbed, Mbed Linux will be “deeply integrated” with the Pelion IoT System in order “to simplify lifecycle management.” The Pelion support provides device provisioning, connectivity, and updates, thereby enabling development teams to update the OS and the applications independently, says Wright. Working with the Pelion Device Management Application, Mbed Linux OS can “simplify in-field provisioning and eradicate the need for legacy serial connections for initial device configuration,” says Arm.

Mbed Linux will support Arm’s Platform Security Architecture and hardware based TrustZone security to enable secure, signed boot and signed updates. It will also enable deployment of applications in secure, OCI-compliant containers.

Arm did not specify which components of the Yocto Project code it would integrate with Mbed. In late August, Arm and Facebook joined Intel and TI as Platinum members of the Yocto Project. The Linux Foundation hosted project was launched by Intel but is now widely used on Arm as well as x86 based IoT devices.

Despite common references to “Yocto Linux,” Yocto Project is not a distribution, but rather a collection of open source templates, tools, and methods for creating custom embedded Linux-based systems. A Yocto foundation underlies most major commercial Linux distributions such as Wind River Linux and Mentor Embedded Linux and is often spun into custom builds by DIY developers, especially for resource constrained IoT devices.

We saw no mention of a contribution from the Arm-backed Linaro initiative to either Mbed Linux or Pelion. Linaro, which oversees the 96Boards project, develops open source embedded Linux and Android software components. The Yocto and Linaro projects were initially seen as rivals, but they have grown increasingly complementary. Linaro’s Arm toolchain can be used within Yocto Project, as well as with the related OpenEmbedded build environment and Bitbake build engine.

Developers can sign up for the limited number of invites to participate in the upcoming developer preview of Mbed Linux OS here.

Arm’s Pelion partnerships

Arm’s Pelion IoT Platform will soon run on devices with Intel’s recently launched Secure Device Onboard (SDO) service, enabling customers to deploy both Arm and x86 based systems controlled by the common Pelion platform. “We believe this collaboration is a big step forward for greater customer choice, fewer device SKUs, higher volume and velocity through IoT supply chains and lower deployment cost,” says Arm.

Intel’s Secure Device Onboard provisioning used within Pelion IoT Platform

The SDO “zero-touch onboarding service” depends on Intel Enhanced Privacy ID (EPID) data embedded in chips to validate IoT devices. SDO automatically discovers and provisions compliant devices during installation. This “late binding” approach cuts provisioning time from between 20 minutes and an hour to a few minutes, says Intel.

Unlike PKI based authentication methods, “SDO does not insert Intel into the authentication path.” Instead, it brokers a rendezvous URL to the Intel SDO service where Intel EPID opens a private authentication channel between the device and the customer’s IoT platform.
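The late-binding idea can be illustrated with a toy model. This is a minimal sketch, not Intel's SDO protocol or API: the `RendezvousService` and `Device` classes, their methods, the device ID, and the example URL are all hypothetical, and the EPID attestation and every cryptographic step are omitted. It shows only that a device ships without knowing its owner and learns its target IoT platform from a rendezvous lookup at first power-on.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class RendezvousService:
    """Hypothetical stand-in for a rendezvous service.

    It only maps a device ID to the URL of the owner's IoT platform.
    Real onboarding would also attest the device; that is omitted here.
    """
    _owners: Dict[str, str] = field(default_factory=dict)

    def register_owner(self, device_id: str, platform_url: str) -> None:
        # The owner claims the device after manufacture ("late binding").
        self._owners[device_id] = platform_url

    def lookup(self, device_id: str) -> Optional[str]:
        # Returns None while no owner has claimed the device.
        return self._owners.get(device_id)


@dataclass
class Device:
    """Hypothetical device that ships knowing only the rendezvous service."""
    device_id: str
    rendezvous: RendezvousService
    platform_url: Optional[str] = None

    def power_on(self) -> bool:
        # On boot, an unprovisioned device asks where it should onboard.
        if self.platform_url is None:
            self.platform_url = self.rendezvous.lookup(self.device_id)
        return self.platform_url is not None


if __name__ == "__main__":
    rv = RendezvousService()
    dev = Device("device-0001", rv)
    print(dev.power_on())  # no owner registered yet
    rv.register_owner("device-0001", "https://iot.example.com/onboard")
    print(dev.power_on())  # owner registered, device learns its platform
    print(dev.platform_url)
```

Because the owner mapping is registered after manufacture, the same device SKU could in principle be bound to any customer platform, which is the point Arm makes about fewer SKUs.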

Pelion device management conceptual diagram (from Arm’s Aug. announcement)

The Pelion IoT Platform offers its own scheme for provisioning and configuration of devices using cryptographic identities built into Cortex-M MCUs running Mbed. With the new Mbed Linux, Pelion will also be able to accept devices that run on Cortex-A chips with TrustZone security.

Pelion combines Arm’s Mbed Cloud-connected Mbed IoT Device Management Platform with technologies it acquired via two 2018 acquisitions. The new Treasure Data unit supplies data management services to Pelion. Meanwhile, Stream Technologies provides Pelion-managed gateway services for wireless technologies including cellular, LoRa, and satellite communications.

The partnership with myDevices extends Pelion support to devices that run myDevices’ new IoT in a Box turnkey IoT software for LoRa gateways and nodes. myDevices, which is known for its Linux- and Arduino-friendly Cayenne drag-and-drop IoT development and management platform, launched IoT in a Box to enable easy setup of a LoRa gateway and LoRa sensor nodes. Different IoT in a Box versions target specific applications ranging from home and building management to storage lockers to refrigeration systems. Developers can try out Pelion services together with IoT in a Box using a new $199 IoT Starter Kit.

The Arduino partnership is a bit less clear. It appears to extend Arm’s Pelion Connectivity Management stack, based on the Stream Technologies acquisition, to Arduino devices. The partnership gives users the option of selecting “competitive global data plans” for cellular service, says Arm.

More details on this and the other Pelion announcements should emerge at Arm TechCon in San Jose, Calif. and IoT Solutions World Congress in Barcelona, both of which run Oct. 16-18. Intel also offers a video overview of the Pelion/SDO mashup.

This article is copyright © 2018 Linux.com and was originally published here. It has been reproduced by this site with the permission of its owner. Please visit Linux.com for up-to-date news and articles about Linux and open source.

John P. Mello Jr. has been an ECT News Network reporter