While many user interface designers advocate simplicity and simplified decision-making for users (which often results in no decision-making at all), the KDE community [1] has stubbornly gone the other way, jam-packing all manner of features and doodads into its Plasma [2] desktop (see the “KDE Is Not a Desktop” box).

Table 1

| Desktop | RAM Used | Bootup | Firefox Startup | LibreOffice Startup |
| --- | --- | --- | --- | --- |
| Gnome | 542MiB | ~51sec | 7.41sec | 10.75sec |
| Xfce | 530MiB | ~45sec | 7.66sec | 10.10sec |
| Plasma | 489MiB | ~60sec | 7.40sec | 8.07sec |

KDE Is Not a Desktop

This has been the subject of much controversy and confusion, but, no, KDE is not the name of a desktop environment anymore and hasn’t been for some time now.

The desktop is called Plasma. KDE, on the other hand, is the name given to the community of developers, artists, translators, and so on who create the software. The reason for this shift is that the KDE community builds many things, like Krita, Kdenlive, digiKam, GCompris, and so on, not just Plasma. Many of these applications are not even tied to Linux, much less to the Plasma desktop, and can be run on many other graphical environments, including Mac OS X, Windows, Android, and others.

Also, much like KFC does not stand for Kentucky Fried Chicken anymore, neither does KDE stand for Kool Desktop Environment. KDE is not an acronym for anything. It is just … KDE.

That said, if you want simple, Plasma can do simple, too. You can ignore all the bells and whistles and just get on with your life. But where is the fun in that?

Camouflage

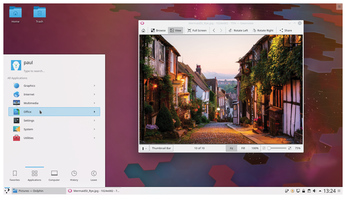

A default Plasma desktop looks like Figure 1. Usually, you will find a panel at the bottom of the screen, a start button holding menus at the bottom left, and a tray on the right – all quite conventional, boring, and even Windows-y.

Figure 1: Out of the box, Plasma’s default layout looks pretty conventional …

But Plasma can be configured to look like anything, even like Ubuntu’s defunct Unity (Figure 2), Gnome, Mac OS, or whatever else rocks your boat.

Figure 2: … but Plasma can adopt any layout that tickles your fancy. Here, Plasma looks like Ubuntu’s defunct Unity desktop.

To illustrate Plasma’s flexibility, I’ll show you some tricks you can use to emulate other desktops, starting with global menus. Both Unity and Mac OS use a global menu: It is the menu that appears in a bar at the top of the screen and shows a selected application’s options, instead of having them in a bar along the top of the application.

To create global menus in Plasma, first right-click in any free space on the Plasma desktop and select + Add Panel | Empty Panel from the pop-up menu. Usually, the panel will appear across the top of the screen, because the bottom is already filled with the default Plasma panel. If it has popped up anywhere else, click on the hamburger menu (the button with three horizontal lines at one end of the panel) and then click and hold the Screen Edge button and drag the panel to the top.

Once you have placed the panel, click on the hamburger menu on the right of the panel again and click on + Add Widgets. A bar with all the available widgets will show up on the left of the screen. You can narrow your search down by typing global into the search box. When you see the Global Menu widget, double-click on it, and it will be added to the panel.

Initially, you may not see any difference: Applications that are already open keep their own menubars. Close and reopen the applications, and you’ll see that their menus have moved from the application window to the upper panel you just made.

To make the effect even more striking, click the Start menu on the bottom panel and pick System Settings. Under Workspace Theme, choose Breeze Dark and click Apply. You will end up with something like Figure 3.

Figure 3: Creating a panel with a global menu is easy.

But theming is just one of the things you can do to tweak Plasma’s look and feel. You can also configure the look, size, and location of individual applications and even individual windows to absurd lengths.

Window Hacking

Right-click on any window’s titlebar, and a menu will pop up. Apart from the options to minimize, maximize, and close the window, you’ll notice the More Actions option. The Keep Above Others and Keep Below Others options are self-explanatory, but you can also make a window Fullscreen, and it will be maximized; the application’s titlebar and any other desktop element (like panels) will disappear, giving you maximum workspace. If the application doesn’t offer you a way to exit full screen, press Alt+F3 and use the menu to deactivate full-screen mode.

You can also “shade” the window, which means it will roll up like a blind, leaving only the titlebar visible. Another alternative is to remove the border and titlebar, leaving a bare window with no decorations. To recover borders and the titlebar, select the window and press Alt+F3 again to open the window’s configuration menu.

Within Window Specific Settings and Application Specific Settings, you have all manner of options to fix the application window’s position and size. You can make a window stick to a certain area of your screen and become unmovable. At the same time, you can adjust its size to the pixel. You can configure things so that, when you launch a certain application, it always opens in a certain place, maximized, or shaded. You can make the application so it won’t close, or you can choose actions from dozens of other options.

Window Manager Settings opens another cornucopia of options for adjusting windows. From here, you can adjust things like the screen edges, for example. These are “live” areas that react and carry out an action when your cursor moves into them. Move your cursor into the upper left-hand corner of your screen, and you will see all the windows spread out and go a shade darker, showing everything you have open. You can use this trick to pick a window on which to focus, especially if you have several open (Figure 4).

Figure 4: Screen edges allow you to activate actions when you mouse over them.

As with everything Plasma, screen edges can be configured to execute a wide variety of other actions: You can minimize all the windows to show the desktop, open KRunner to run a command (you’ll learn more about how KRunner works below), or open the window-switching panel on the left of the screen. The switching panel allows you to scroll through all the open windows to select the one you want.

Another of the more interesting configuration tabs in Window Manager Settings is the Effects tab. Old timers will remember things like wobbly windows and rotating cubes that plagued Linux desktops of yore. Those still live on in Plasma (Figure 5) and can be activated from this tab, but other effects are more intriguing and worth a second look.

Figure 5: Rotating cubes are back and can be activated from the Effects tab in Window Manager Settings.

Take, for example, the Thumbnail Aside effect. Activate this effect, maximize a window, and press Alt+Meta+T (the Meta key usually has a Windows logo on it), and you will see a small preview of the window appear in the bottom right-hand corner of your screen. You can make these previews translucent (the level of translucency is, of course, adjustable), so you can still see what is under the preview. You can also adjust the size to whatever you want. Click on another window, maximize it, press Alt+Meta+T again, and, lo and behold: Another preview will pop up above the first one (Figure 6).

Figure 6: The Thumbnail Aside effect allows you to open previews of window activities in the bottom right corner of your screen.

Because these previews do not interfere with the cursor or the other windows, you can carry on with your work while you keep an eye on a video streaming in VLC or a complex install running in a terminal.

Certain caveats apply, though: As mentioned above, the windows you want to preview must be maximized. If you minimize a previewed window, the preview will disappear, but not close, leaving an empty space in the column of previews. Also, as shown above, you can remove borders and the titlebar from windows quite easily, and the Thumbnail Aside effect will not work with windows without borders.

Select the previewed window and press Alt+Meta+T again to close its preview.

Being Productive

While on the subject of shortcuts, you always have a terminal close at hand in Plasma: Hit F12 and a Yakuake terminal [3] unfolds from the top of the screen. You can run anything you need from this console. When you’re done, hit F12 again, or click on a window, and the terminal folds back up, moving out of the way. If you are carrying out a long process, like compiling an application, folding the terminal up won’t interrupt it: It will carry on in the background, so at any moment you can hit F12 again and check on its progress.

With regard to productivity/shortcuts/stuff that unfolds from the top of the screen, try pressing Alt+F2 (or Alt+Space, or simply click on an empty area in the desktop and start typing). A text box for searches appears at the top of the screen. This is KRunner [4], a built-in application originally designed to help search for (and run) applications. Nowadays, KRunner indexes everything: applications, for sure, but also email addresses, packages available from your software center, bookmarks from your web browser, folders, and documents (Figure 7). It is blindingly fast and coughs up results as you type.

Figure 7: KRunner is your one-stop solution to finding the answer to everything.

KRunner can also be used as a media player (type play and the name of the song), a clock (type time), a calendar (date), a regular and scientific calculator (try typing =solve( 2*x^2 + 3*x - 2 = 0 ); the first result will be the solution to that polynomial equation), a unit and currency converter (type 100 GBP to see the value of 100 British pounds in different currencies), and a way to connect to remote computers (type fish://<your remote server> to open your SSH server in your file explorer).
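If you want to check the calculator example by hand, the following is a minimal Python sketch that applies the quadratic formula to the same equation, 2x² + 3x - 2 = 0. The function name solve_quadratic is made up for this illustration and has nothing to do with how KRunner works internally; KRunner may also present its result in a different form.

```python
import math


def solve_quadratic(a, b, c):
    """Return the real roots of a*x^2 + b*x + c = 0, smallest first."""
    if a == 0:
        raise ValueError("not a quadratic: a must be non-zero")
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return []  # no real roots
    root = math.sqrt(discriminant)
    # A set collapses the two roots into one when the discriminant is zero.
    return sorted({(-b - root) / (2 * a), (-b + root) / (2 * a)})


# The equation from the KRunner example: 2*x^2 + 3*x - 2 = 0
print(solve_quadratic(2, 3, -2))  # prints [-2.0, 0.5]
```

The discriminant here is 3² - 4·2·(-2) = 25, so the two roots are (-3 - 5)/4 = -2 and (-3 + 5)/4 = 0.5.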

KRunner is the epitome of what Plasma is all about.

Misconceptions

You might be thinking that all these goodies are cool and all, but at what cost? Surely Plasma will slow down your average machine to a crawl. Turns out that is not the case at all. In fact, some not-very-scientific research I carried out showed Plasma to be lighter in many areas than even Xfce, a desktop environment whose main claim to fame is that it is light.

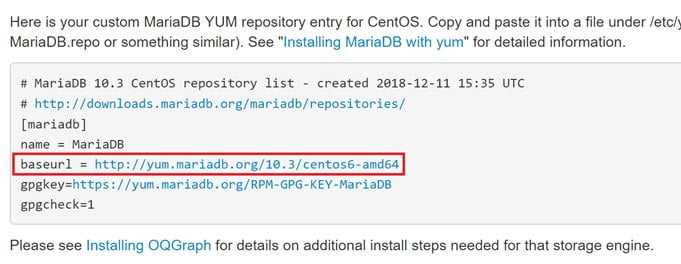

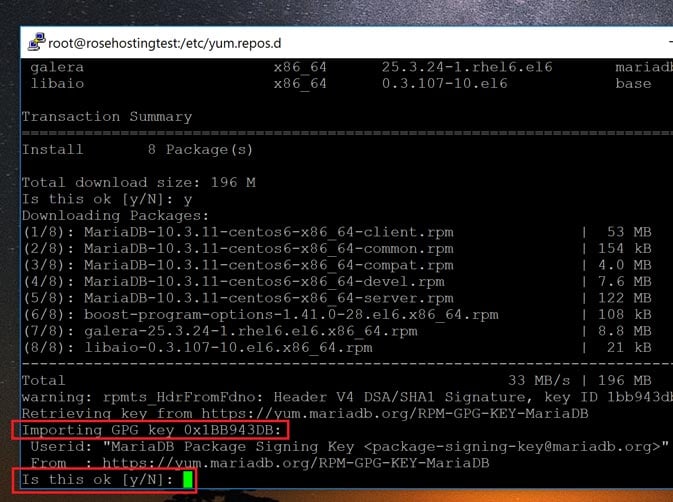

Using three Live Manjaro flavors, KDE (with Plasma desktop), Gnome, and Xfce, I ran some tests and concocted Table 1.

If the numbers in the first column seem a bit high, it is because I was running the desktops off of a Live distribution, so, apart from the graphical environment, the RAM was also loaded with a lot of in-memory systems. Notwithstanding, Plasma is the lightest of the three by quite a measure.

Bootup, which was timed from the GRUB screen to a fully loaded desktop, does show Plasma to be slower, but starting up external applications shows Plasma to be faster: only marginally so with Firefox, but much faster in the case of LibreOffice. This exercise suggests that you can expect Plasma to take longer to load, but, once loaded, it will run lighter and snappier than many other desktops.
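To put the differences in perspective, here is a rough Python sketch that turns the figures from Table 1 into percentages. The dictionary layout and the percent_saving helper are invented for this illustration, and the bootup times are only approximate readings, so treat the output as indicative rather than precise.

```python
# Figures copied from Table 1 (RAM in MiB, times in seconds).
# Bootup values are the approximate (~) readings from the table.
RESULTS = {
    "Gnome":  {"ram": 542, "boot": 51, "firefox": 7.41, "libreoffice": 10.75},
    "Xfce":   {"ram": 530, "boot": 45, "firefox": 7.66, "libreoffice": 10.10},
    "Plasma": {"ram": 489, "boot": 60, "firefox": 7.40, "libreoffice": 8.07},
}


def percent_saving(metric, desktop, baseline="Plasma"):
    """Percentage by which the baseline's figure is lower than the desktop's.

    Positive means the baseline used less RAM or time; negative means more.
    """
    other = RESULTS[desktop][metric]
    ours = RESULTS[baseline][metric]
    return (other - ours) / other * 100


for desktop in ("Gnome", "Xfce"):
    for metric in ("ram", "boot", "firefox", "libreoffice"):
        saving = percent_saving(metric, desktop)
        print(f"Plasma vs. {desktop}, {metric}: {saving:+.1f}%")
```

A positive figure means Plasma needed less RAM or time than the other desktop; the bootup rows come out negative, which matches the slower start noted above.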

Conclusion

If you have not tried Plasma or remember the bad old days when it was clunky and buggy, you should really give it a chance. Not only is it configurable to absurd extremes and packed with more features than you would ever need, but it is also lighter and snappier than most other desktops out there.

Figure 1. Go “Hello, World” Program in Portuguese

Figure 1. Go “Hello, World” Program in Portuguese

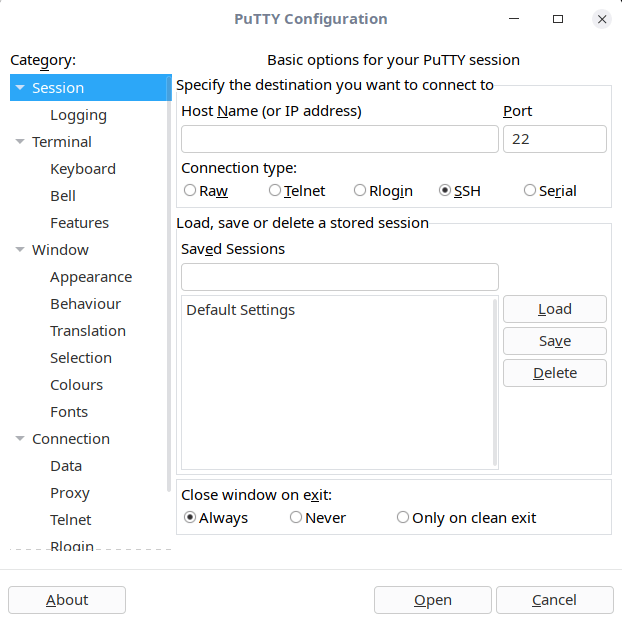

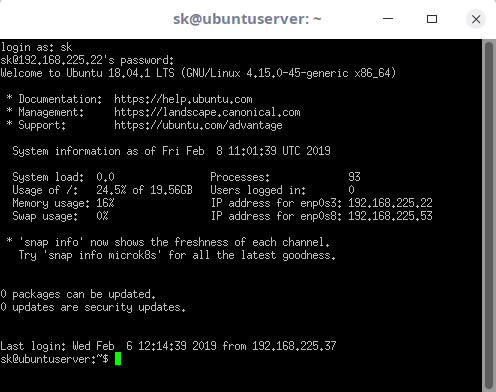

Double click on the downloaded .deb file to start installation

Double click on the downloaded .deb file to start installation The installation of deb file will be carried out via Software Center

The installation of deb file will be carried out via Software Center Installing deb files using dpkg command in Ubuntu

Installing deb files using dpkg command in Ubuntu