Jan 11, 2019 10:53 AM PT

Keeping up to date with multiple daily activity calendars, tons of information, and long must-do lists can be a never-ending challenge. This week’s Linux Picks and Pans reviews the best open source Personal Information Managers (PIMs) that will serve you well on whatever Linux distribution you run.

In theory, computer tools should make managing a flood of personal and business information child’s play. In practice, however, many PIM tool sets are isolated from your other devices. This, of course, makes it difficult, if not impossible, to share essential information across your smartphone, desktop, laptop and tablet.

There are some obvious cloud solutions that ease the hassle of accessing personal and business information across devices. For instance, you can access Microsoft’s proprietary OneNote software for free via the cloud on your Linux gear, including Android and Chromebook devices.

As long as you have a free Microsoft email account, you can access your OneNote content directly from your browser or via the OneNote app available for most platforms. The only roadblock with Microsoft is using it on portable devices (laptops and tablets) beyond a certain screen size.

Google offers similar cloud-based PIM solutions with its Keep note-taking and Tasks to-do list services. Keep has numerous features for cataloging notes and imported images using labels and color options. Tasks lets you enter a simple event to track, as well as drill down to storing details and due dates.

If you use Google’s Chrome Web browser, you can integrate both the Keep and Tasks content as part of the Google Calendar display for added flexibility.

OneNote, Tasks and Keep serve different purposes and let you take the PIM process only so far. All three solutions lack specific tracking and reminder features that true PIM packages provide. Still, they do provide a reliable measure of cross-platform access for basic PIM functionality.

You already may be using these Microsoft or Google cloud-based tools. However, if your needs do not require sharing information on multiple devices, one of the following more traditional Linux PIM packages may be more to your liking.

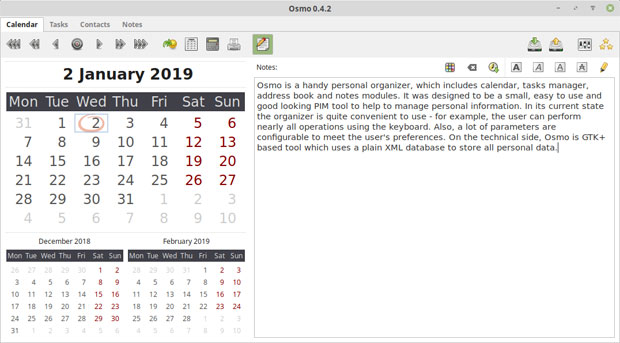

Osmo: Info Management Done Simple

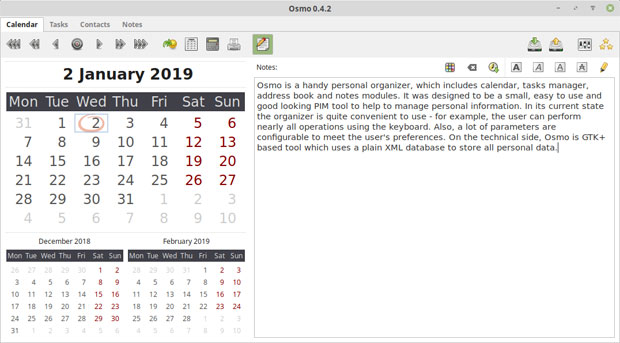

Osmo is a lightweight yet feature-heavy do-it-all PIM for any Linux desktop. It is an ideal all-around PIM that manages appointments, tasks, contacts and notes.

Osmo’s design is not unlike other datebook-style calendars. You can choose a horizontal or vertical orientation. The preferences panel lets you juggle several appearance and functionality options for each of the components. These include the Calendar, Task List, Contacts and Notes databases. You even can hide PIM components to match the way you use Osmo.

Osmo employs a plain XML database to store all personal data. Find this file on the hard drive and copy it to a thumb drive to make Osmo portable, and to update the PIM on other Linux devices. Osmo does not have a real file storage exchange mechanism. A backup and restore feature helps to automate this process.
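If you would rather script the copy-to-thumb-drive routine than do it by hand, a minimal Python sketch might look like the following. It is only an illustration, not part of Osmo: the function name `copy_pim_data` is hypothetical, and both example paths in the comments are assumptions, so check where your Osmo version actually keeps its XML files and where your thumb drive is mounted.

```python
import shutil
import time
from pathlib import Path


def copy_pim_data(data_dir: Path, target_root: Path) -> Path:
    """Copy everything under data_dir into a new timestamped folder in target_root."""
    if not data_dir.is_dir():
        raise FileNotFoundError(f"no data directory at {data_dir}")
    # A timestamp in the folder name keeps earlier copies from being overwritten.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    destination = target_root / f"osmo-data-{stamp}"
    shutil.copytree(data_dir, destination)
    return destination


# Example call (both paths are assumptions, adjust them for your system):
# copy_pim_data(Path.home() / ".local" / "share" / "osmo", Path("/media/usb"))
```

To update another Linux machine, you would copy the folder back the other way. Close Osmo first so that it does not overwrite the files while they are being copied.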

Moving around the app is simple. Click the tab for the desired component. The display shows the current month with markers indicating days with events entered. Below the current month’s display is a selector arrow to show previous and next month.

The day note icon pops up a note entry screen for the selected date and shows it at the bottom of the app window. The day note panel has a tool row of buttons to modify the text display of information you enter.

The Notes panel is surprisingly flexible. For instance, the opening note screen shows a file-list type of directory display. You can use its dropdown menus to select a note category. A handy search window lets you find information in the notes database rapidly. Icons let you add a new note, select an existing note for editing, or delete a note from the list.

The contact component in Osmo is fairly slick. It has an icon and tool row along with a search window similar to the Notes component. These include New, Remove and Edit buttons. The search box finds matches as you type. The contact panel also has options to show birthdays, and buttons to import and export contacts.

A nice touch is a globe button that shows a contact’s location on a map. Osmo lets you choose Google, Bing or OpenStreetMap as the map provider.

While Osmo does not sync with other computers or a Web-based calendar, it does much of what you would expect from a solid PIM. Osmo does very well what it was designed to do — keep track of your lists, calendar events and contacts.

Osmo’s Last Update: 8-26-2018

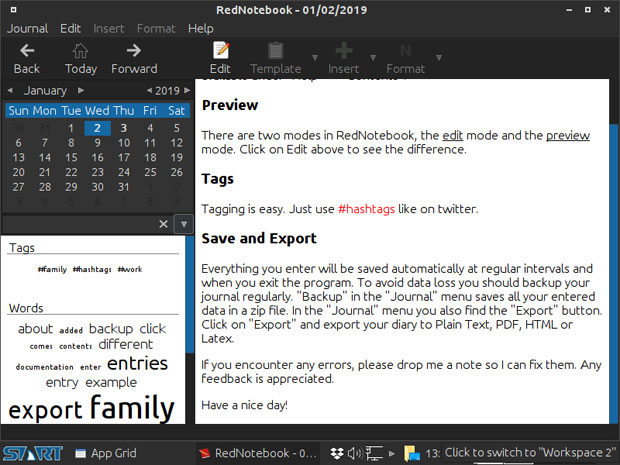

Journal Life With RedNotebook

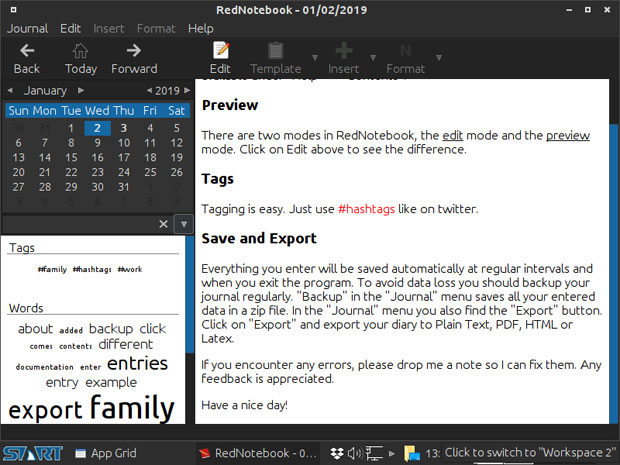

RedNotebook is built around the concept of a simple design with enhanced features. This application is much more than a daily diary maker. Its flexible design is a perfect platform for storing notes and information tracking.

It is an information magnet that lets you add files, links, images and notes divided into categories. Assigning tags to your entries adds a sophisticated way to organize the content. The ability to insert images, files and links to websites makes it very viable as a general note-taking program.

The design incorporates tags and other cool navigational features that drive RedNotebook’s functionality. Its interface is divided into three parts.

On the left is the calendar. Click a day within any month to see the content appear in the display panel in the center. The annotations panel is to the right. Annotations are notes that elaborate on the basic diary entry. You can sort annotations into categories easily.

RedNotebook’s features include easy calendar navigation, numerous customizable templates, export functionality and word clouds. It also lets you format, tag and search your entries, something that other diary and note apps do not offer.

Along with spell-checking capability, RedNotebook has some nice advanced-level features, including the ability to export in PDF format, drag and drop content between entries, and display markup highlighting. Plus, it automatically saves at set intervals and upon exit.

To facilitate use on multiple computers, you can save your journals on a remote server. By default, the application makes zipped backup copies of all entries upon exit.

Another cool feature is Word Cloud. RedNotebook keeps track of your most-often-used words in the note entries. Click on the Clouds tab to view this list. Select your category or tag clouds by clicking on the scroll-down menu. Right-click any word in the cloud that you want removed. Alternatively, add the word to the blacklist in the Preferences menu to filter it out.
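The idea behind a word cloud with a blacklist is simple enough to sketch in a few lines of Python. This is not RedNotebook's own code, only an illustration of the technique: the function name `word_frequencies` and the `min_length` cutoff for skipping short words are assumptions made for the example.

```python
import re
from collections import Counter


def word_frequencies(entries, blacklist=(), min_length=4, top=10):
    """Return the `top` most common words across entries, skipping blacklisted ones."""
    ignored = {word.lower() for word in blacklist}
    counts = Counter()
    for text in entries:
        # Pull out runs of letters only, so digits and punctuation are dropped.
        for word in re.findall(r"[^\W\d_]+", text.lower()):
            if len(word) >= min_length and word not in ignored:
                counts[word] += 1
    return counts.most_common(top)


entries = [
    "Meeting with Linux team about backup",
    "Backup notes for Linux laptop",
    "Linux meeting moved",
]
print(word_frequencies(entries, blacklist=["with", "about"], top=3))
```

Here "linux" comes out on top with three uses, while the blacklisted "with" and "about" never appear in the result.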

Use RedNotebook for a combination of things to keep track of daily information, activities and links to other reference files. You also can use it to maintain a running to-do list. The advantage to this feature is never having to enter a start or end date.

Last Update: 11-15-2018

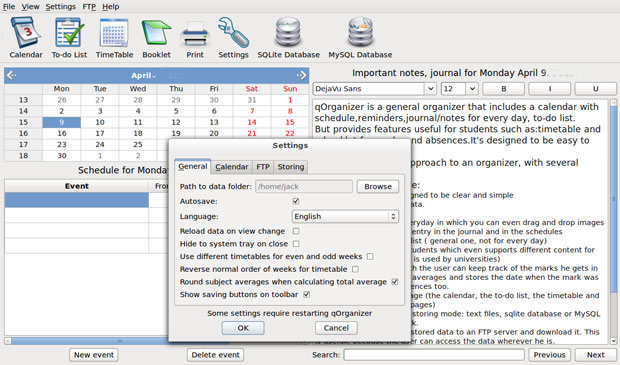

qOrganizing for Multiple Device Use

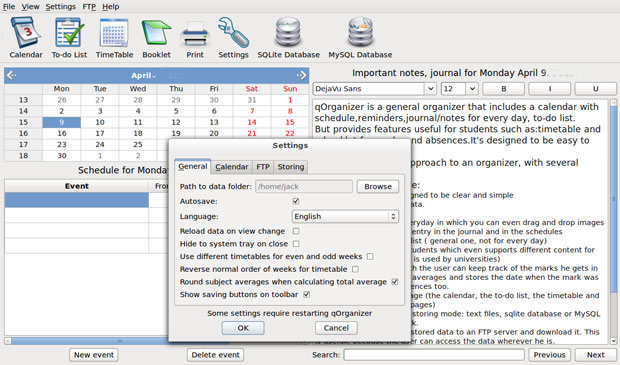

qOrganizer goes a long way toward solving usage issues on multiple computers. This PIM does a nice job of going head-to-head with other information managers to track and manage your day.

You might have some trouble getting it from your distro’s repository, however. qOrganizer is readily available at SourceForge, but only as a 32-bit build. You will have to unzip the archived file and manually install the program. Still, qOrganizer should run on your system and is worthy of a tryout.

qOrganizer is a general organizer that includes a calendar with schedule, reminders, journal/notes and a to-do list. Its comprehensive collection of components and simple interface give this app a fresh, innovative approach to tracking your important activities.

One of qOrganizer’s more distinctive components makes it a cool tool for the academic set, at both the high school and college level. Its Timetable and Booklet features are unique among general-purpose PIMs.

qOrganizer has an intuitive design so it mostly works the way you would use a handwritten day planner with pen on a page. Click an entry line and type your information. All the controls are handled by icons that switch easily from Calendar to To-Do List and other features. Icons in the tool row put every control one click away.

This PIM automatically saves all your data. You can choose the storing mode: text files, an SQLite database or a MySQL database for transferring over the Internet. This gives you a way to sort of sync your PIM content on all your computers.

This app prints each module as a separate page, so you can carry a printed version of just the calendar, the to-do list, the timetable or the booklet.

Finding information stored in qOrganizer is fast and easy. A search window with previous and next buttons is located on the bottom right of the display. This tool searches for the entry data in any of the components.

A neat feature is data entry shortcuts. You can enter a number in the to-do start and deadline columns, and the full date appears. The Priority column lets you enter a ranking number for each task. Click the arrow that appears in the entry line to have a date selection calendar pop up.

The right side of the task display is the completed column. You can enter a number to show the percent of completion. A progress bar fills in the line.

The calendar page display is a split screen. The month fills the top left. The bottom left is the daily schedule for the highlighted date. The right side of the panel is the journal or note entry for the selected calendar date.

qOrganizer has a useful collection of tools that gives it an edge over other PIM solutions. It is too bad that the developer no longer provides updates for this open source project.

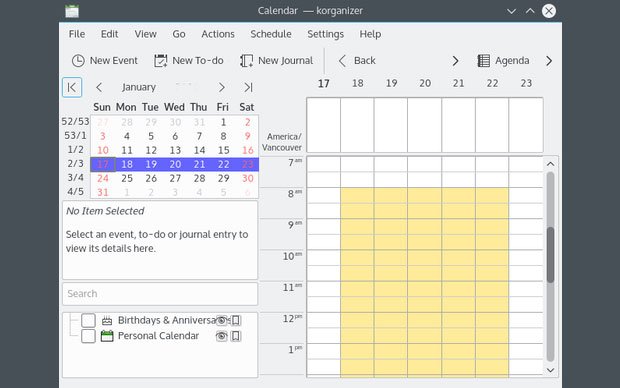

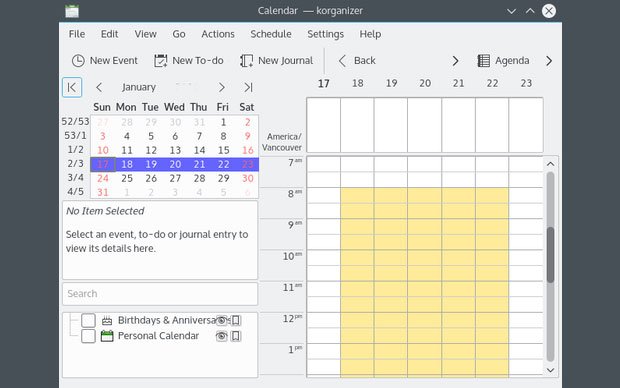

Making Informational Kontact

Kontact has its roots in the K Desktop Environment. Originally, it was an integral set of tools designed as part of the KDE desktop. It still is.

However, you can use this integrated PIM with nearly any Linux distro. In most cases, any dependencies will be installed along with the core Kontact components.

The integration built into Kontact makes it a more powerful information manager than other tools in this roundup. It supports the display of email, address books, calendars, tasks, news feeds and other personal or business data in one window.

The integration includes a PIM back end and the graphical applications connecting to the back end. The components include agents to merge new data with the existing data set, such as contacts and news.

This integration involves groupware servers that give your workgroup members access to shared email folders, group task lists, calendar sharing, central address books and meeting scheduling.

Kontact is not one program. In essence, it is a symbiotic collection of essential KDE tools.

One of its key components is Akonadi. This is a framework named after the oracle goddess of justice in Ghana. This framework provides applications with a centralized database to store, index and retrieve personal information, including emails, contacts, calendars, events, journals, alarms and notes.

Kontact’s other components:

- Akregator — to read selected news feeds;

- KAddressBook — to manage contacts;

- KMail — to provide mail client services;

- KNotes — to post sticky notes on the Desktop;

- KOrganizer — to provide calendar, scheduling and journal/notes management;

- Summary — to display an information summary screen;

- KJots — to organize your ideas into a notebook structure that includes calendars, information and to-do lists.

This multifaceted PIM package helps you manage your information overload more easily. The result is better productivity and efficiency. The combination of tools and back-end servers offers additional benefits of group collaboration as a business tool.

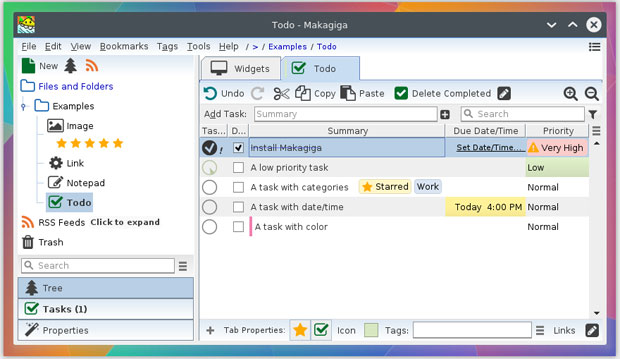

Makagiga: The All-in-One PIM

Makagiga is an easy-to-use PIM solution that does everything. The project is about four years young. In fact, compared to the other products in this roundup, it is one of the most modern approaches to managing personal information.

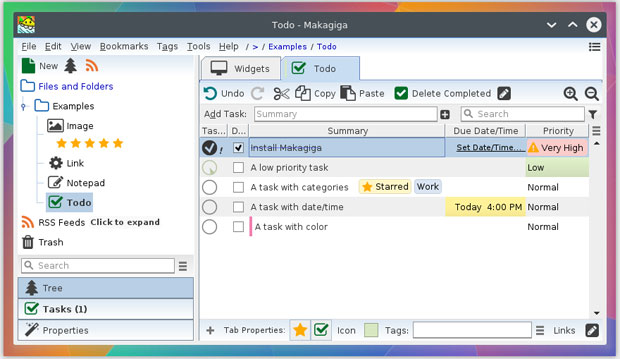

Makagiga does just about anything you need it to do. It is a capable to-do manager. It handles note-taking with ease. It edits images you package into your notes.

Plus, it uses plug-ins to provide Web searching, an OpenStreetMap viewer, a thesaurus, and a LaTeX/Markdown/BBCode previewer. It can capture screenshots to integrate as notes, and it can generate bar codes.

Makagiga uses a modern, smart interface that contributes to its intuitive ability to handle to-do listing, text editing and RSS reading. It uses add-ons to implement its various capabilities.

Among them is a collection of widgets to provide calendars and sticky notes.

The main window displays a tree directory view for folders and feeds to the left. It shows a large pin board to the right. The window uses tabs to show changing content in the pin board — Widgets, Calendar and To-Do list.

A horizontal menu bar sits at the top of the main window.

A settings dialog sits under the Settings option of both the View and Tools menus. These dialogs configure the software. The menu structure changes when a pin board tab is activated.

You can find the settings dialog for designing the view by selecting the Widgets tab. The three context-sensitive menus (Wallpaper, Colors and Border, Workspaces) are used to enhance the pin board’s visual appearance. Basic modifications are performed in the Tools | Settings menu.

The To-Do manager is one of the best in this roundup. You can set task priorities, assign them dates/times, and even organize them into categories. You also can add colors and tags for more organizational distinctions.

The Image editor has options to resize, rotate or flip pictures. It also has simple annotation tools and an inventory of filters and special effects.

The Notepad is more basic than I prefer. It limps along without a find-and-replace function. It does have word count, syntax highlighting and an HTML preview.

This application has mouse gesture support for 17 actions you can perform easily.

Latest version: Makagiga 6.4 | 11-17-2018

Bottom Line

Personal Information Management is a software category being overshadowed by cloud services and dedicated apps on portable devices. That is one reason there are few new contenders among open source PIM applications available for the Linux platform.

The titles in this roundup are solid performers. They offer a variety of options. They also share a similar look and feel. So trying out several of these PIMs is easy. Compare the features, and choose the best tool to meet your needs.
