LFCE: Installing Network Services and Configuring Automatic Startup at Boot – Part 1

A Linux Foundation Certified Engineer (LFCE) is prepared to install, configure, manage, and troubleshoot network services in Linux systems, and is responsible for the design and implementation of system architecture.

Introducing The Linux Foundation Certification Program.

In this 12-article series, titled Preparation for the LFCE (Linux Foundation Certified Engineer) exam, we will cover the required domains and competencies in Ubuntu, CentOS, and openSUSE.

Installing Network Services

When it comes to setting up and using any kind of network services, it is hard to imagine a scenario that Linux cannot be a part of. In this article we will show how to install the following network services in Linux (each configuration will be covered in upcoming separate articles):

- NFS (Network File System) Server

- Apache Web Server

- Squid Proxy Server + SquidGuard

- Email Server (Postfix + Dovecot), and

- Iptables

In addition, we will want to make sure all of those services are automatically started on boot or on-demand.

We must note that even though you can run all of these network services on the same physical machine or virtual private server, one of the first so-called “rules” of network security tells system administrators to avoid doing so to the extent possible. What is the reasoning behind that rule? It’s rather simple: if a network service is compromised on a machine that runs more than one of them, it can be relatively easy for an attacker to compromise the rest as well.

Now, if you really need to install multiple network services on the same machine (in a test lab, for example), make sure you enable only those that you need at a certain moment, and disable them later.

Before we begin, we need to clarify that the current article (along with the rest in the LFCS and LFCE series) is focused on a performance-based perspective, and thus cannot examine every theoretical detail about the covered topics. We will, however, introduce each topic with the necessary information as a starting point.

In order to use the following network services, you will need to disable the firewall for the time being until we learn how to allow the corresponding traffic through the firewall.

Please note that this is NOT recommended for a production setup, but we will do so for learning purposes only.

In a default Ubuntu installation, the firewall should not be active. In openSUSE and CentOS, you will need to explicitly disable it:

# systemctl stop firewalld

# systemctl disable firewalld

or

# systemctl mask firewalld

That being said, let’s get started!

Installing A NFSv4 Server

NFS in itself is a network protocol, whose latest version is NFSv4. This is the version that we will use throughout this series.

An NFS server is the traditional solution that allows remote Linux clients to mount its shares over a network and interact with those file systems as though they were mounted locally, which makes it possible to centralize storage resources for the network.

On CentOS

# yum update && yum install nfs-utils

On Ubuntu

# aptitude update && aptitude install nfs-kernel-server

On openSUSE

# zypper refresh && zypper install nfsserver

For more detailed instructions, read our article on configuring an NFS server and client on Linux systems.

Installing Apache Web Server

The Apache web server is a robust and reliable FOSS implementation of an HTTP server. As of the end of October 2014, Apache powers 385 million sites, giving it a 37.45% share of the market. You can use Apache to serve a standalone website or multiple virtual hosts on one machine.

# yum update && yum install httpd [On CentOS]

# aptitude update && aptitude install apache2 [On Ubuntu]

# zypper refresh && zypper install apache2 [On openSUSE]

For more detailed instructions, read our articles on how to create IP-based and name-based Apache virtual hosts and how to secure the Apache web server.

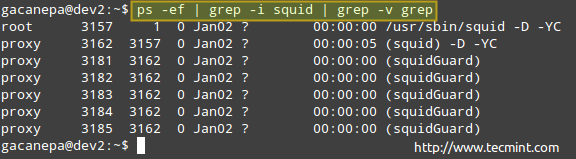

Installing Squid and SquidGuard

Squid is a proxy server and web cache daemon and, as such, acts as an intermediary between several client computers and the Internet (or a router connected to the Internet), while speeding up frequent requests by caching web contents and DNS resolution at the same time. It can also be used to deny (or grant) access to certain URLs by network segment or based on forbidden keywords, and keeps a log file of all connections made to the outside world on a per-user basis.

SquidGuard is a redirector that implements blacklists to enhance Squid, and integrates seamlessly with it.

# yum update && yum install squid squidGuard [On CentOS]

# aptitude update && aptitude install squid3 squidguard [On Ubuntu]

# zypper refresh && zypper install squid squidGuard [On openSUSE]

Installing Postfix and Dovecot

Postfix is a Mail Transport Agent (MTA). It is the application responsible for routing and delivering email messages from a source to a destination mail server, whereas Dovecot is a widely used IMAP and POP3 email server that fetches messages from the MTA and delivers them to the right user mailbox.

Dovecot plugins for several relational database management systems are also available.

# yum update && yum install postfix dovecot [On CentOS]

# aptitude update && aptitude install postfix dovecot-imapd dovecot-pop3d [On Ubuntu]

# zypper refresh && zypper install postfix dovecot [On openSUSE]
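The install commands in this article follow the same pattern on every distribution; only the package manager and the package names change. As a rough sketch, the helper below prints the matching command line for a distribution ID. The name `install_cmd_for` is our own (not a standard tool), and the IDs `centos`, `ubuntu`, and `opensuse*` are assumed to match the `ID` field of /etc/os-release on your system. It only prints the command, so you can review it before running it as root.

```shell
# Print (without running) the install command for a distribution.
# $1 is a distribution ID (assumed: centos, ubuntu, opensuse*);
# the remaining arguments are package names.
install_cmd_for() {
    distro=$1
    shift
    case "$distro" in
        centos)    echo "yum update && yum install $*" ;;
        ubuntu)    echo "aptitude update && aptitude install $*" ;;
        opensuse*) echo "zypper refresh && zypper install $*" ;;
        *)         echo "unsupported distribution: $distro" >&2; return 1 ;;
    esac
}

# Example: the Postfix and Dovecot packages used in this article.
install_cmd_for centos postfix dovecot
install_cmd_for ubuntu postfix dovecot-imapd dovecot-pop3d
```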

About Iptables

In a few words, a firewall is a network resource that is used to manage access to or from a private network, and to redirect incoming and outgoing traffic based on certain rules.

Iptables is a tool installed by default in Linux and serves as a frontend to the netfilter kernel module, which is ultimately responsible for implementing a firewall that performs packet filtering / redirection and network address translation.

Since iptables is installed in Linux by default, you only have to make sure it is actually running. To do that, we should check that the iptables modules are loaded:

# lsmod | grep ip_tables

If the above command does not return anything, it means the ip_tables module has not been loaded. In that case, run the following command to load the module.

# modprobe -a ip_tables
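The check and the load step can be combined so that the module is only loaded when it is missing. The following is a minimal sketch: `module_listed` is a hypothetical helper name, and it assumes the usual lsmod layout (one header line, then the module name in the first column). It matches the module name exactly in the first column rather than anywhere on the line.

```shell
# Succeed if module $1 appears in the first column of lsmod-style
# output read from standard input (the header line is skipped).
module_listed() {
    awk -v mod="$1" 'NR > 1 && $1 == mod { found = 1 } END { exit !found }'
}

# Typical use (as root), loading the module only when it is missing:
#   lsmod | module_listed ip_tables || modprobe -a ip_tables
```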

Read Also: Basic Guide to Linux Iptables Firewall

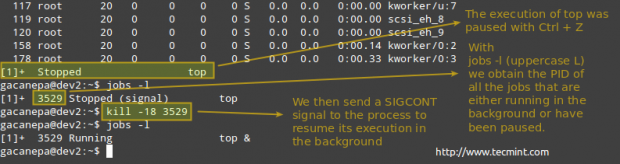

Configuring Services Automatic Start on Boot

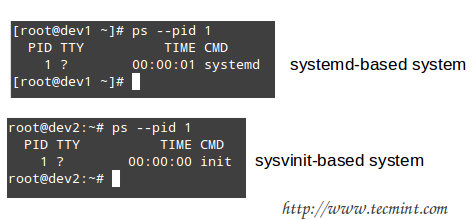

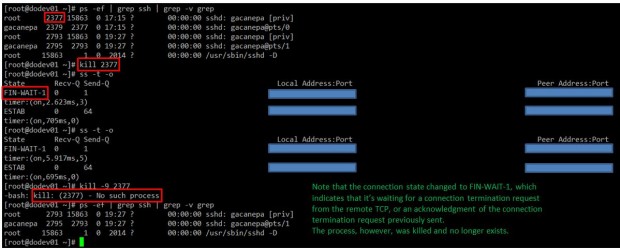

As discussed in Managing System Startup Process and Services – Part 7 of the 10-article series about the LFCS certification, there are several system and service managers available in Linux. Whatever your choice, you need to know how to start, stop, and restart network services on-demand, and how to enable them to automatically start on boot.

You can check which system and service manager you are running with the following command:

# ps --pid 1

Depending on the output of the above command, you will use one of the following commands to configure whether each service should start automatically on boot or not:
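As a rough illustration of how that output can be interpreted, the sketch below maps the name of the PID 1 process to a service manager family. The function name `classify_pid1` is hypothetical. Treat the result as a heuristic: a process named init can be either sysvinit or upstart, and on systemd machines the presence of the /run/systemd/system directory is a more reliable indicator.

```shell
# Map the PID 1 command name to a service manager family.
classify_pid1() {
    case "$1" in
        systemd) echo "systemd" ;;
        init)    echo "sysvinit or upstart" ;;
        *)       echo "unknown" ;;
    esac
}

# -o comm= prints only the command name, with no header line.
classify_pid1 "$(ps --pid 1 -o comm= 2>/dev/null)"
```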

On systemd-based

----------- Enable Service to Start at Boot -----------

# systemctl enable [service]

----------- Prevent Service from Starting at Boot -----------

# systemctl disable [service]

On sysvinit-based

----------- Start Service at Boot in Runlevels A and B -----------

# chkconfig --level AB [service] on

----------- Don’t Start Service at Boot in Runlevels C and D -----------

# chkconfig --level CD [service] off

On upstart-based

Make sure the /etc/init/[service].conf script exists and contains the minimal configuration, such as:

# When to start the service

start on runlevel [2345]

# When to stop the service

stop on runlevel [016]

# Automatically restart process in case of crash

respawn

# Specify the process/command (add arguments if needed) to run

exec /absolute/path/to/network/service/binary arg1 arg2

You may also want to check Part 7 of the LFCS series (which we referred to at the beginning of this section) for other useful commands to manage network services on-demand.

Summary

By now you should have all the network services described in this article installed, and possibly running with the default configuration. In later articles we will explore how to configure them according to our needs, so make sure to stay tuned! And please feel free to share your comments (or post questions, if you have any) on this article using the form below.


Setting Up Standard Linux File Systems and Configuring NFSv4 Server – Part 2

A Linux Foundation Certified Engineer (LFCE) is trained to set up, configure, manage, and troubleshoot network services in Linux systems, and is answerable for the design and implementation of system architecture and solving everyday related issues.


In Part 1 of this series we explained how to install an NFS (Network File System) server, and set the service to start automatically on boot. If you haven’t already done so, please refer to that article and follow the outlined steps before proceeding.

I will now show you how to properly configure your NFSv4 server (without authentication security) so that you can set up network shares to use in Linux clients as if those file systems were installed locally. Note that you can use LDAP or NIS for authentication purposes, but both options are out of the scope of the LFCE certification.

Configuring a NFSv4 server

Once the NFS server is up and running, we will focus our attention on:

- specifying and configuring the local directories that we want to share over the network, and

- mounting those network shares in clients automatically, either through the /etc/fstab file or the automount kernel-based utility (autofs).

We will explain later when to choose one method or the other.

Before we begin, we need to make sure that the idmapd daemon is running and configured. This service performs the mapping of NFSv4 names (user@mydomain) to user and group IDs, and is required to implement an NFSv4 server.

Edit /etc/default/nfs-common to enable idmapd.

NEED_IDMAPD=YES

And edit /etc/idmapd.conf with your local domain name (the default is the FQDN of the host).

Domain = yourdomain.com

Then start idmapd.

# service nfs-common start [sysvinit / upstart based systems]

# systemctl start nfs-common [systemd based systems]

Exporting Network Shares

The /etc/exports file contains the main configuration directives for our NFS server, defines the file systems that will be exported to remote hosts and specifies the available options. In this file, each network share is indicated using a separate line, which has the following structure by default:

/filesystem/to/export client1([options]) clientN([options])

Where /filesystem/to/export is the absolute path to the exported file system, whereas client1 (up to clientN) represents the specific client (hostname or IP address) or network (wildcards are allowed) to which the share is being exported. Finally, options is a comma-separated list of values that are taken into account while exporting the share to that client. Please note that there must be no space between each hostname and the opening parenthesis that follows it.
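A stray space between the client and its options typically changes the meaning of an entry (the options are then read as a separate, host-less client specification), so it is worth checking for. The sketch below is one possible check; `find_spaced_options` is a hypothetical helper name, and the pattern is deliberately simple, so it may also flag comment lines that happen to contain parentheses.

```shell
# Print (with line numbers) every line of exports-style file $1 that has
# whitespace between a client name and the opening parenthesis.
find_spaced_options() {
    grep -nE '[^[:space:]][[:space:]]+\(' "$1"
}

# Demonstration on a throwaway file; the second entry is the faulty one.
sample=$(mktemp)
printf '%s\n' \
    '/NFS-SHARE 192.168.0.17(rw,sync)' \
    '/NFS-SHARE/mydir 192.168.0.17 (ro,sync)' > "$sample"
find_spaced_options "$sample"
rm -f "$sample"
```

On a real server you would point the function at /etc/exports; no output (and a non-zero exit status) means that no suspicious entry was found.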

Here is a list of the most-frequent options and their respective description:

- ro (short for read-only): Remote clients can mount the exported file systems with read permissions only.

- rw (short for read-write): Allows remote hosts to make write changes in the exported file systems.

- wdelay (short for write delay): The NFS server delays committing changes to disk if it suspects another related write request is imminent. However, if the NFS server receives multiple small unrelated requests, this option will reduce performance, so the no_wdelay option can be used to turn it off.

- sync: The NFS server replies to requests only after changes have been committed to permanent storage (i.e., the hard disk). Its opposite, the async option, may increase performance but at the cost of data loss or corruption after an unclean server restart.

- root_squash: Prevents remote root users from having superuser privileges in the server and assigns them the user ID for user nobody. If you want to “squash” all users (and not just root), you can use the all_squash option.

- anonuid / anongid: Explicitly sets the UID and GID of the anonymous account (nobody).

- subtree_check: If only a subdirectory of a file system is exported, this option verifies that a requested file is located in that exported subdirectory. On the other hand, if the entire file system is exported, disabling this option with no_subtree_check will speed up transfers. The default option nowadays is no_subtree_check as subtree checking tends to cause more problems than it is worth, according to man 5 exports.

- fsid=0 | root (zero or root): Marks the exported file system as the root of multiple exported directories (only applies in NFSv4).

In this article we will use the directories /NFS-SHARE and /NFS-SHARE/mydir on 192.168.0.10 (NFS server) as our test file systems.

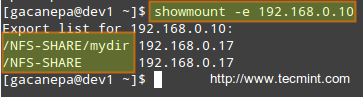

We can always list the available network shares in a NFS server using the following command:

# showmount -e [IP or hostname]

In the output above, we can see that the /NFS-SHARE and /NFS-SHARE/mydir shares on 192.168.0.10 have been exported to the client with IP address 192.168.0.17.

Our initial configuration (refer to the /etc/exports file on your NFS server) for the exported directories is as follows:

/NFS-SHARE 192.168.0.17(fsid=0,no_subtree_check,rw,root_squash,sync,anonuid=1000,anongid=1000)

/NFS-SHARE/mydir 192.168.0.17(ro,sync,no_subtree_check)

After editing the configuration file, we must restart the NFS service:

# service nfs-kernel-server restart [sysvinit / upstart based systems]

# systemctl restart nfs-server [systemd based systems]

Mounting exported network shares using autofs

You may want to refer to Part 5 of the LFCS series (“How to Mount/Unmount Local and Network (Samba & NFS) Filesystems in Linux”) for details on mounting remote NFS shares on-demand using the mount command or permanently through the /etc/fstab file.

The downside of mounting a network file system using these methods is that the system must allocate the necessary resources to keep the share mounted at all times, or at least until we decide to unmount it manually. An alternative is to mount the desired file system on-demand automatically (without using the mount command) through autofs, which can mount file systems when they are used and unmount them after a period of inactivity.

Autofs reads /etc/auto.master, which has the following format:

[mount point] [map file]

Where [map file] is used to indicate multiple mount points within [mount point].

Autofs uses this master map file (/etc/auto.master) to determine which mount points are defined, and then starts an automount process with the specified parameters for each mount point.

Mounting exported NFS shares using autofs

Edit your /etc/auto.master as follows:

/media/nfs /etc/auto.nfs-share --timeout=60

and create a map file named /etc/auto.nfs-share with the following contents:

writeable_share -fstype=nfs4 192.168.0.10:/

non_writeable_share -fstype=nfs4 192.168.0.10:/mydir

Note that the first field in /etc/auto.nfs-share is the name of a subdirectory inside /media/nfs. Each subdirectory is created dynamically by autofs.
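To make the relationship between the two files concrete, the following sketch lists the directories that autofs would create on demand, by joining the mount point from /etc/auto.master with the first field of each map entry. The function name `autofs_mount_paths` is hypothetical, and the parsing is simplified (comments and blank lines are skipped; other map syntax is not handled).

```shell
# List the directories autofs would create on demand.
# $1: mount point from /etc/auto.master, $2: path to the map file.
autofs_mount_paths() {
    awk -v base="$1" '!/^[ \t]*(#|$)/ { print base "/" $1 }' "$2"
}

# Usage on the client from this article:
#   autofs_mount_paths /media/nfs /etc/auto.nfs-share
```

With the map file shown above, this would list /media/nfs/writeable_share and /media/nfs/non_writeable_share.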

Now, restart the autofs service:

# service autofs restart [sysvinit / upstart based systems]

# systemctl restart autofs [systemd based systems]

and finally, to enable autofs to start on boot, run the following command:

# chkconfig --level 345 autofs on [sysvinit based systems]

# systemctl enable autofs [systemd based systems]

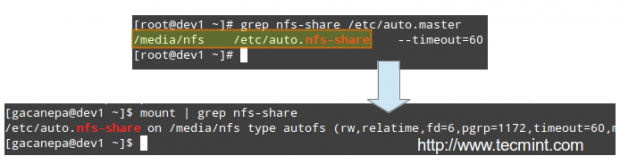

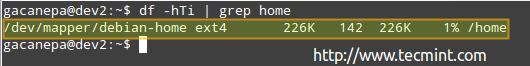

Examining mounted file systems after starting the autofs daemon

When we restart autofs, the mount command shows us that the map file (/etc/auto.nfs-share) is mounted on the specified directory in /etc/auto.master:

Please note that no directories have actually been mounted yet, but will be automatically when we try to access the shares specified in /etc/auto.nfs-share:

As we can see, the autofs service “mounts” the map file, so to speak, but waits until a request is made to the file systems to actually mount them.

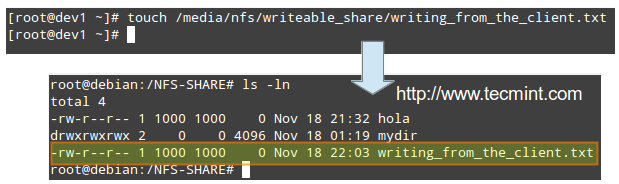

Performing write tests in exported file systems

The anonuid and anongid options, along with root_squash as set in the first share, allow us to map requests performed by the root user in the client to a local account in the server.

In other words, when root in the client creates a file in that exported directory, its ownership will be automatically mapped to the user account with UID and GID = 1000, provided that such an account exists on the server.

Conclusion

I hope you were able to successfully set up and configure an NFS server fit for your environment using this article as a guide. You may also want to refer to the relevant man pages for further help (man exports and man idmapd.conf, for example).

Feel free to experiment with other options and test cases as outlined earlier and do not hesitate to use the form below to send your comments, suggestions, or questions. We will be glad to hear from you.

How to Setup Encrypted Filesystems and Swap Space Using ‘Cryptsetup’ Tool in Linux – Part 3

An LFCE (short for Linux Foundation Certified Engineer) is trained and has the expertise to install, manage, and troubleshoot network services in Linux systems, and is in charge of the design, implementation and ongoing maintenance of the system architecture.


The idea behind encryption is to allow only trusted persons to access your sensitive data and to protect it from falling into the wrong hands in case of loss or theft of your machine / hard disk.

In simple terms, a key is used to “lock” access to your information, so that it becomes available only when the system is running and has been unlocked by an authorized user. This implies that if a person tries to examine the disk contents (by plugging the disk into their own system or by booting the machine with a LiveCD/DVD/USB), they will only find unreadable data instead of the actual files.

In this article we will discuss how to set up encrypted file systems with dm-crypt (short for device mapper and cryptographic), the standard kernel-level encryption tool. Please note that since dm-crypt is a block-level tool, it can only be used to encrypt full devices, partitions, or loop devices (it will not work on regular files or directories).

Preparing A Drive / Partition / Loop Device for Encryption

Since we will wipe all data present in our chosen drive (/dev/sdb), we first need to back up any important files contained in that drive BEFORE proceeding further.

Wipe all data from /dev/sdb. We are going to use the dd command here, but you could also do it with other tools such as shred. Next, we will create a partition on this device, /dev/sdb1, following the explanation in Part 4 – Create Partitions and Filesystems in Linux of the LFCS series.

# dd if=/dev/urandom of=/dev/sdb bs=4096

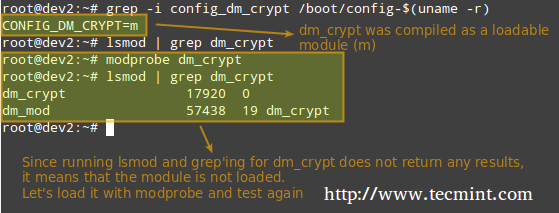

Testing for Encryption Support

Before we proceed further, we need to make sure that our kernel has been compiled with encryption support:

# grep -i config_dm_crypt /boot/config-$(uname -r)

As outlined in the image above, the dm-crypt kernel module needs to be loaded in order to set up encryption.
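In the kernel configuration file, a value of `y` means the feature is compiled into the kernel, while `m` means it was built as a loadable module. The sketch below reports which case applies; `dm_crypt_support` is a hypothetical helper name, and it assumes the standard `CONFIG_NAME=value` layout of /boot/config-* files.

```shell
# Read kernel config text on stdin and report how dm-crypt is provided.
dm_crypt_support() {
    awk -F= '
        $1 == "CONFIG_DM_CRYPT" { value = $2 }
        END {
            if (value == "y") { print "built-in" }
            else if (value == "m") { print "module" }
            else { print "not enabled" }
        }'
}

# Usage:
#   dm_crypt_support < /boot/config-$(uname -r)
```

If the answer is "module", the module may still have to be loaded (for example with modprobe) before it shows up in lsmod.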

Installing Cryptsetup

Cryptsetup is a frontend interface for creating, configuring, accessing, and managing encrypted file systems using dm-crypt.

# aptitude update && aptitude install cryptsetup [On Ubuntu]

# yum update && yum install cryptsetup [On CentOS]

# zypper refresh && zypper install cryptsetup [On openSUSE]

Setting Up an Encrypted Partition

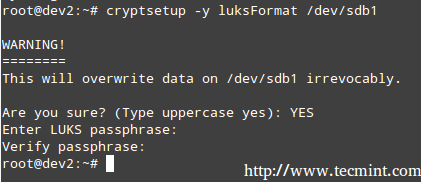

The default operating mode for cryptsetup is LUKS (Linux Unified Key Setup) so we’ll stick with it. We will begin by setting up the LUKS partition and the passphrase:

# cryptsetup -y luksFormat /dev/sdb1

The command above runs cryptsetup with default parameters, which are listed at the end of the output of,

# cryptsetup --help

Should you want to change the cipher, hash, or key parameters, you can use the --cipher, --hash, and --key-size flags, respectively, with the values taken from /proc/crypto.
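To see which algorithm names your kernel currently advertises, you can extract the name lines from /proc/crypto. The sketch below does that; `crypto_names` is a hypothetical helper name, and the commented cryptsetup invocation uses illustrative values only (aes-xts-plain64, sha256, and a 512-bit key are assumptions for the example, not a recommendation from this article).

```shell
# Print the unique algorithm names found in /proc/crypto-style text
# read from standard input (lines of the form "name : aes").
crypto_names() {
    awk '$1 == "name" && $2 == ":" { print $3 }' | sort -u
}

# Usage:
#   crypto_names < /proc/crypto
#
# An illustrative (not prescriptive) invocation with explicit parameters:
#   cryptsetup -y --cipher aes-xts-plain64 --hash sha256 --key-size 512 luksFormat /dev/sdb1
```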

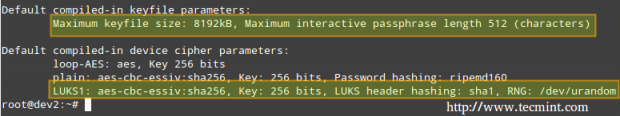

Next, we need to open the LUKS partition (we will be prompted for the passphrase that we entered earlier). If the authentication succeeds, our encrypted partition will be available inside /dev/mapper with the specified name:

# cryptsetup luksOpen /dev/sdb1 my_encrypted_partition

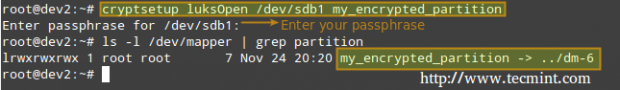

Now, we’ll format our partition as ext4.

# mkfs.ext4 /dev/mapper/my_encrypted_partition

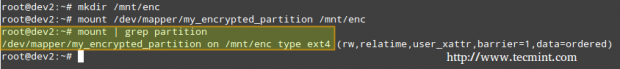

Then create a mount point and mount the encrypted partition. Finally, we may want to confirm whether the mount operation succeeded.

# mkdir /mnt/enc

# mount /dev/mapper/my_encrypted_partition /mnt/enc

# mount | grep partition

When you are done writing to or reading from your encrypted file system, simply unmount it

# umount /mnt/enc

and close the LUKS partition using,

# cryptsetup luksClose my_encrypted_partition

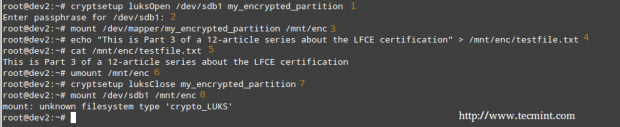

Testing Encryption

Finally, we will check whether our encrypted partition is safe:

1. Open the LUKS partition

# cryptsetup luksOpen /dev/sdb1 my_encrypted_partition

2. Enter your passphrase

3. Mount the partition

# mount /dev/mapper/my_encrypted_partition /mnt/enc

4. Create a dummy file inside the mount point.

# echo "This is Part 3 of a 12-article series about the LFCE certification" > /mnt/enc/testfile.txt

5. Verify that you can access the file that you just created.

# cat /mnt/enc/testfile.txt

6. Unmount the file system.

# umount /mnt/enc

7. Close the LUKS partition.

# cryptsetup luksClose my_encrypted_partition

8. Try to mount the partition as a regular file system. It should indicate an error.

# mount /dev/sdb1 /mnt/enc

Encrypting the Swap Space for Further Security

The passphrase you entered earlier to use the encrypted partition is stored in RAM while it’s open. If someone can get their hands on this key, they will be able to decrypt the data. This is especially easy to do in the case of a laptop, since while hibernating the contents of RAM are kept on the swap partition.

To avoid leaving a copy of your key accessible to a thief, encrypt the swap partition following these steps:

1. Create a partition to be used as swap with the appropriate size (/dev/sdd1 in our case) and encrypt it as explained earlier. Name it just “swap” for convenience.

2. Set it as swap and activate it.

# mkswap /dev/mapper/swap

# swapon /dev/mapper/swap

3. Next, change the corresponding entry in /etc/fstab.

/dev/mapper/swap none swap sw 0 0

4. Finally, edit /etc/crypttab and reboot.

swap /dev/sdd1 /dev/urandom swap
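Each /etc/crypttab entry has up to four whitespace-separated fields: the mapped name, the underlying device, the key source, and the options. The sketch below labels those fields for the entry above; `describe_crypttab` is a hypothetical helper name. Keep in mind that with /dev/urandom as the key source a fresh random key is used on every boot, so the contents of the swap space do not survive a reboot.

```shell
# Label the four whitespace-separated crypttab fields for each entry
# read from standard input (comments and blank lines are skipped).
describe_crypttab() {
    awk '!/^[ \t]*(#|$)/ {
        printf "name=%s device=%s key=%s options=%s\n", $1, $2, $3, $4
    }'
}

echo 'swap /dev/sdd1 /dev/urandom swap' | describe_crypttab
```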

Once the system has finished booting, you can verify the status of the swap space:

# cryptsetup status swap

Summary

In this article we have explored how to encrypt a partition and swap space. With this setup, your data should be considerably safe. Feel free to experiment and do not hesitate to get back to us if you have questions or comments. Just use the form below – we’ll be more than glad to hear from you!

How to Setup Standalone Apache Server with Name-Based Virtual Hosting with SSL Certificate – Part 4

An LFCE (short for Linux Foundation Certified Engineer) is a trained professional who has the expertise to install, manage, and troubleshoot network services in Linux systems, and is in charge of the design, implementation and ongoing maintenance of the system architecture.

In this article we will show you how to configure Apache to serve web content, and how to set up name-based virtual hosts and SSL, including a self-signed certificate.


Note that this article is not meant to be a comprehensive guide on Apache, but rather a starting point for self-study about this topic for the LFCE exam. For that reason we are not covering load balancing with Apache in this tutorial either.

You may already know other ways to perform the same tasks, which is OK considering that the Linux Foundation certifications are strictly performance-based. Thus, as long as you ‘get the job done’, you stand a good chance of passing the exam.

Requirements

Please refer to Part 1 of the current series (“Installing Network Services and Configuring Automatic Startup at Boot”) for instructions on installing and starting Apache.

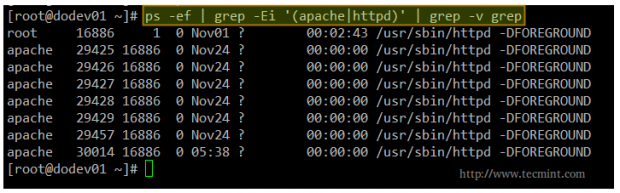

By now, you should have the Apache web server installed and running. You can verify this with the following command.

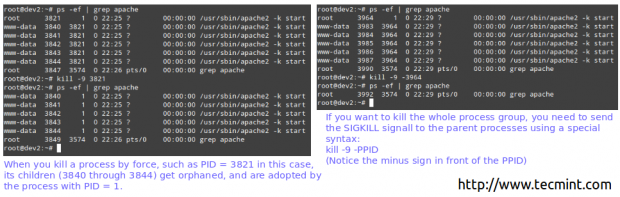

# ps -ef | grep -Ei '(apache|httpd)' | grep -v grep

Note that the above command checks for the presence of either apache or httpd (the most common names for the web daemon) among the list of running processes. If Apache is running, you will get output similar to the following.

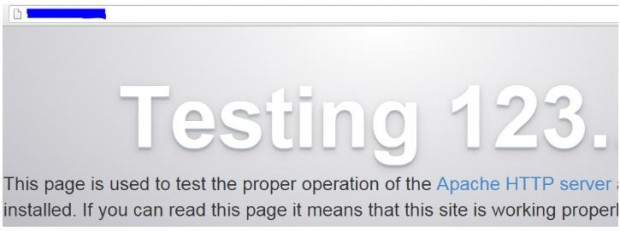

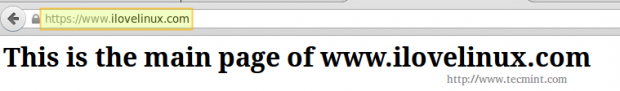

The ultimate method of testing the Apache installation and checking whether it’s running is to launch a web browser and point it to the IP address of the server. We should be presented with the following screen or at least a message confirming that Apache is working.

Configuring Apache

The main configuration file for Apache can be located in different directories depending on your distribution.

/etc/apache2/apache2.conf [For Ubuntu] /etc/httpd/conf/httpd.conf [For CentOS] /etc/apache2/httpd.conf [For openSUSE]

Fortunately for us, the configuration directives are extremely well documented on the Apache project web site. We will refer to some of them throughout this article.

Serving Pages in a Standalone Server with Apache

The most basic usage of Apache is to serve web pages in a standalone server where no virtual hosts have been configured yet. The DocumentRoot directive specifies the directory out of which Apache will serve web documents.

Note that by default, all requests are served from this directory, but symbolic links and / or aliases may be used to point to other locations as well.

Unless matched by the Alias directive (which allows documents to be stored elsewhere in the local filesystem instead of under the directory specified by DocumentRoot), the server appends the path from the requested URL to the document root to make the path to the document.

For example, given the following DocumentRoot:

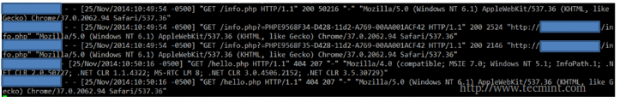

When the web browser points to [Server IP or hostname]/lfce/tecmint.html, the server will open /var/www/html/lfce/tecmint.html (assuming that such file exists) and save the event to its access log with a 200 (OK) response.
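The mapping from URL path to file path can be sketched in a couple of lines. The function name `doc_path` is hypothetical, and the sketch is a simplification: it ignores Alias directives, query strings, and any other rewriting the server may apply.

```shell
# Join a DocumentRoot ($1) and a URL path ($2) into a filesystem path.
# Simplification: Alias directives and query strings are ignored.
doc_path() {
    root=${1%/}
    printf '%s/%s\n' "$root" "${2#/}"
}

doc_path /var/www/html /lfce/tecmint.html
```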

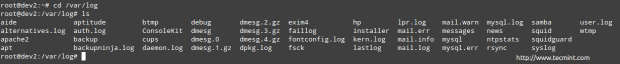

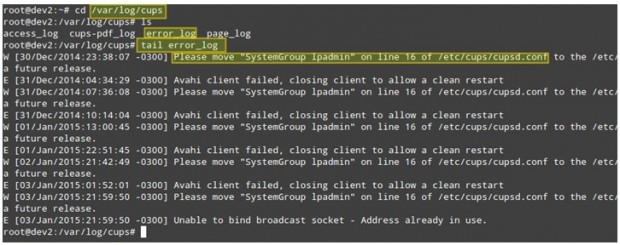

The access log is typically found inside /var/log under a representative name, such as access.log or access_log. You may even find this log (and the error log as well) inside a subdirectory (for example, /var/log/httpd in CentOS). If the requested file does not exist, the failed event will still be logged to the access log, but with a 404 (Not Found) response.

In addition, failed events are recorded in the error log.

The format of the access log can be customized according to your needs using the LogFormat directive in the main configuration file. The error log is not controlled by LogFormat (Apache 2.4 and later provide a separate ErrorLogFormat directive for that purpose).

The default format of the access log is as follows:

LogFormat "%h %l %u %t \"%r\" %>s %b" [nickname]

Where each of the letters preceded by a percent sign tells the server to log a certain piece of information:

| String | Description |
| --- | --- |
| %h | Remote hostname or IP address |
| %l | Remote log name |
| %u | Remote user if the request is authenticated |
| %t | Date and time when the request was received |
| %r | First line of request to the server |
| %>s | Final status of the request |
| %b | Size of the response [bytes] |

and nickname is an optional alias that can be used to customize other logs without having to enter the whole configuration string again.

You may refer to the LogFormat directive [Custom log formats section] in the Apache docs for further options.

Both log files (access and error) represent a great resource to quickly analyze at a glance what’s happening on the Apache server. Needless to say, they are the first tool a system administrator uses to troubleshoot issues.
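As a small example of using the access log for a first look at what is going on, the sketch below counts requests per status code. `count_statuses` is a hypothetical helper name, and it assumes the default format shown above, with a request line made of exactly three words (method, path, protocol), which places the status code in the ninth whitespace-separated field.

```shell
# Count requests per HTTP status code in common-format access log
# lines read from standard input. Assumes the quoted request line has
# exactly three words (method, path, protocol), so the status is field 9.
count_statuses() {
    awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' | sort
}

# Usage (the log path varies by distribution; this is the CentOS location):
#   count_statuses < /var/log/httpd/access_log
```

A sudden rise in 404 or 5xx counts is usually a good hint about where to look next in the error log.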

Finally, another important directive is Listen, which tells the server to accept incoming requests on the specified port or address/port combination:

If only a port number is defined, Apache will listen on the given port on all network interfaces (the wildcard sign * is used to indicate ‘all network interfaces’).

If both an IP address and a port are specified, Apache will listen on the given port of that network interface only.

Please note (as you will see in the examples below) that multiple Listen directives can be used at the same time to specify multiple addresses and ports to listen to. This option instructs the server to respond to requests from any of the listed addresses and ports.
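To check which addresses and ports a configuration file asks Apache to listen on, you can extract the active Listen directives. The sketch below is one way to do it; `list_listen` is a hypothetical helper name, the sample addresses are made up for the demonstration, and directives pulled in from other files through Include are not followed.

```shell
# Print the address/port argument of every active Listen directive
# in the Apache configuration file given as $1.
list_listen() {
    awk 'tolower($1) == "listen" { print $2 }' "$1"
}

# Demonstration on a throwaway file (the addresses are made-up examples).
conf=$(mktemp)
printf '%s\n' \
    'Listen 80' \
    'Listen 192.168.0.100:8080' \
    '# Listen 443' > "$conf"
list_listen "$conf"
rm -f "$conf"
```

The first sample line is a port-only Listen (all interfaces), the second binds one address and port, and the commented-out line is ignored.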

Setting Up Name-Based Virtual Hosts

A virtual host is an individual site (or domain) that is served by the same physical machine as other sites. In other words, multiple sites / domains can be served off a single “real” server as virtual hosts. This process is transparent to the end user, to whom it appears that the different sites are being served by distinct web servers.

Name-based virtual hosting allows the server to rely on the client to report the hostname as part of the HTTP headers. Thus, using this technique, many different hosts can share the same IP address.

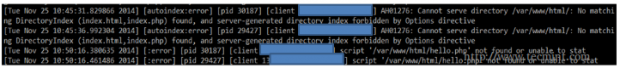

Each virtual host is configured in a directory within DocumentRoot. For our case, we will use the following dummy domains for the testing setup, each located in the corresponding directory:

- ilovelinux.com – /var/www/html/ilovelinux.com/public_html

- linuxrocks.org – /var/www/html/linuxrocks.org/public_html

In order for pages to be displayed correctly, we will chmod each VirtualHost’s directory to 755:

# chmod -R 755 /var/www/html/ilovelinux.com/public_html

# chmod -R 755 /var/www/html/linuxrocks.org/public_html

Next, create a sample index.html file inside each public_html directory:

<html>

<head>

<title>www.ilovelinux.com</title>

</head>

<body>

<h1>This is the main page of www.ilovelinux.com</h1>

</body>

</html>
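The directory creation, sample page, and permission steps are the same for every site, so they can be wrapped in a small function. This is only a sketch: `make_site` is a hypothetical helper name, and it reproduces the sample page above with the domain name substituted in.

```shell
# Create $1/$2/public_html with a sample index.html and 755 permissions.
# $1: base directory (e.g. /var/www/html), $2: domain name.
make_site() {
    site_dir="$1/$2/public_html"
    mkdir -p "$site_dir"
    cat > "$site_dir/index.html" <<EOF
<html>
<head>
<title>www.$2</title>
</head>
<body>
<h1>This is the main page of www.$2</h1>
</body>
</html>
EOF
    chmod -R 755 "$site_dir"
}

# On the web server (as root):
#   make_site /var/www/html ilovelinux.com
#   make_site /var/www/html linuxrocks.org
```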

Finally, in CentOS and openSUSE add the following section at the bottom of /etc/httpd/conf/httpd.conf or /etc/apache2/httpd.conf, respectively, or just modify it if it’s already there.

<VirtualHost *:80>

ServerAdmin admin@ilovelinux.com

DocumentRoot /var/www/html/ilovelinux.com/public_html

ServerName www.ilovelinux.com

ServerAlias www.ilovelinux.com ilovelinux.com

ErrorLog /var/www/html/ilovelinux.com/error.log

LogFormat "%v %l %u %t \"%r\" %>s %b" myvhost

CustomLog /var/www/html/ilovelinux.com/access.log myvhost

</VirtualHost>

<VirtualHost *:80>

ServerAdmin admin@linuxrocks.org

DocumentRoot /var/www/html/linuxrocks.org/public_html

ServerName www.linuxrocks.org

ServerAlias www.linuxrocks.org linuxrocks.org

ErrorLog /var/www/html/linuxrocks.org/error.log

LogFormat "%v %l %u %t \"%r\" %>s %b" myvhost

CustomLog /var/www/html/linuxrocks.org/access.log myvhost

</VirtualHost>

Please note that you can also add each virtual host definition in separate files inside the /etc/httpd/conf.d directory. If you choose to do so, each configuration file must be named as follows:

/etc/httpd/conf.d/ilovelinux.com.conf

/etc/httpd/conf.d/linuxrocks.org.conf

In other words, you need to add .conf to the site or domain name.

In Ubuntu, each individual configuration file is named /etc/apache2/sites-available/[site name].conf. Each site is then enabled or disabled with the a2ensite or a2dissite commands, respectively, as follows.

# a2ensite /etc/apache2/sites-available/ilovelinux.com.conf

# a2dissite /etc/apache2/sites-available/ilovelinux.com.conf

# a2ensite /etc/apache2/sites-available/linuxrocks.org.conf

# a2dissite /etc/apache2/sites-available/linuxrocks.org.conf

The a2ensite and a2dissite commands create links to the virtual host configuration file and place (or remove) them in the /etc/apache2/sites-enabled directory.

To be able to browse to both sites from another Linux box, you will need to add the following lines in the /etc/hosts file in that machine in order to redirect requests to those domains to a specific IP address.

[IP address of your web server] www.ilovelinux.com

[IP address of your web server] www.linuxrocks.org

As a security measure, SELinux will not allow Apache to write logs to a directory other than the default /var/log/httpd.

You can either disable SELinux, or set the right security context:

# chcon system_u:object_r:httpd_log_t:s0 /var/www/html/xxxxxx/error.log

where xxxxxx is the directory inside /var/www/html where you have defined your Virtual Hosts.

After restarting Apache, you should see the following page at the above addresses:

Installing and Configuring SSL with Apache

Finally, we will create and install a self-signed certificate to use with Apache. This kind of setup is acceptable in small environments, such as a private LAN.

However, if your server will expose content to the outside world over the Internet, you will want to install a certificate signed by a 3rd party to corroborate its authenticity. Either way, a certificate will allow you to encrypt the information that is transmitted to, from, or within your site.

In CentOS and openSUSE, you need to install the mod_ssl package.

# yum update && yum install mod_ssl [On CentOS]

# zypper refresh && zypper install mod_ssl [On openSUSE]

Whereas in Ubuntu you’ll have to enable the ssl module for Apache.

# a2enmod ssl

The following steps are explained using a CentOS test server, but your setup should be almost identical in the other distributions (if you run into any kind of issues, don’t hesitate to leave your questions using the comments form).

Step 1 [Optional]: Create a directory to store your certificates.

# mkdir /etc/httpd/ssl-certs

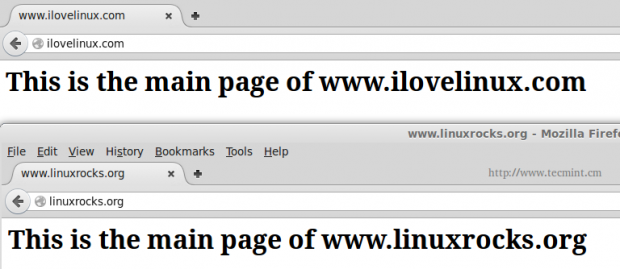

Step 2: Generate your self-signed certificate and the key that will protect it.

# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/httpd/ssl-certs/apache.key -out /etc/httpd/ssl-certs/apache.crt

A brief explanation of the options listed above:

- req -x509 indicates we are creating an X.509 certificate.

- -nodes (NO DES) means “don’t encrypt the key”.

- -days 365 is the number of days the certificate will be valid for.

- -newkey rsa:2048 creates a 2048-bit RSA key.

- -keyout /etc/httpd/ssl-certs/apache.key is the absolute path of the RSA key.

- -out /etc/httpd/ssl-certs/apache.crt is the absolute path of the certificate.

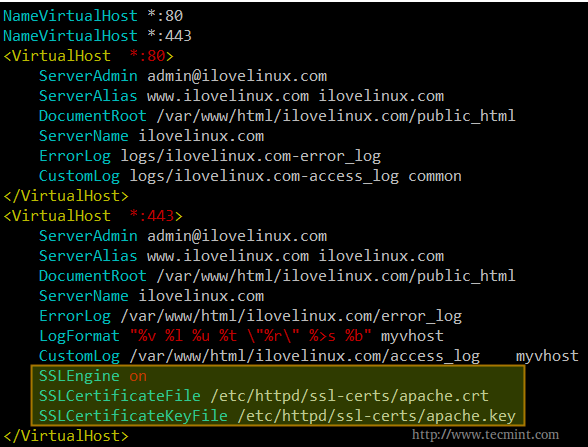
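When run as shown, openssl req prompts for the certificate fields interactively. As a hedged sketch, the same command can be run non-interactively with the -subj option, and the result inspected with openssl x509. CERT_DIR and the subject value are assumptions made for illustration; the scratch directory stands in for /etc/httpd/ssl-certs.

```shell
# CERT_DIR is an illustrative stand-in for /etc/httpd/ssl-certs.
CERT_DIR="${CERT_DIR:-$(mktemp -d)}"

# -subj supplies the subject on the command line, so openssl does not prompt.
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -keyout "$CERT_DIR/apache.key" \
    -out "$CERT_DIR/apache.crt" \
    -subj "/CN=www.ilovelinux.com" 2>/dev/null

# The private key is unencrypted (-nodes), so keep it readable by its owner only.
chmod 600 "$CERT_DIR/apache.key"

# Show who the certificate was issued to and its validity window.
openssl x509 -in "$CERT_DIR/apache.crt" -noout -subject -dates
```

Checking the subject and dates right after generation is a quick way to confirm that the certificate matches the ServerName of the virtual host that will use it.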

Step 3: Open your chosen virtual host configuration file (or its corresponding section in /etc/httpd/conf/httpd.conf as explained earlier) and add the following lines to a virtual host declaration listening on port 443.

SSLEngine on

SSLCertificateFile /etc/httpd/ssl-certs/apache.crt

SSLCertificateKeyFile /etc/httpd/ssl-certs/apache.key

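Please note that, in Apache 2.2, you need to add

NameVirtualHost *:443

at the top, right below

NameVirtualHost *:80

Both directives tell Apache that name-based virtual hosts will be served on ports 443 and 80 of all network interfaces. The ports themselves are opened by the Listen directive, so make sure that Listen 443 is present as well (it is usually added by the mod_ssl package in /etc/httpd/conf.d/ssl.conf). In Apache 2.4, which ships with CentOS 7, NameVirtualHost is deprecated and can be omitted.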

The following example is taken from /etc/httpd/conf/httpd.conf:

Then restart Apache,

# service apache2 restart [sysvinit and upstart based systems]

# systemctl restart httpd.service [systemd-based systems]

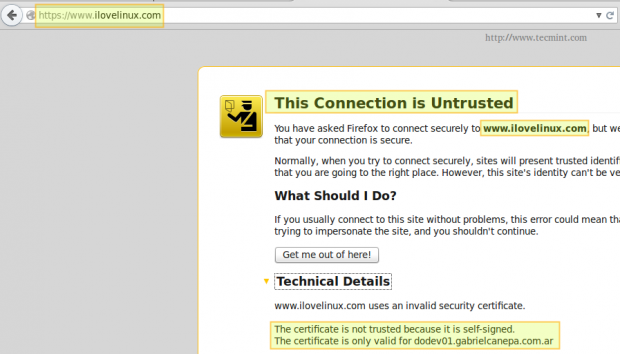

And point your browser to https://www.ilovelinux.com. You will be presented with the following screen.

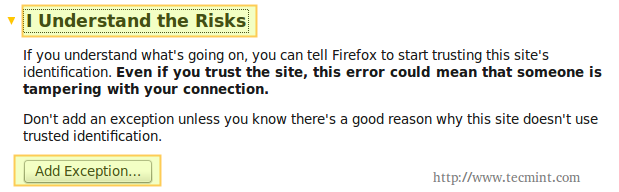

Go ahead and click on “I understand the risks” and “Add exception”.

Finally, check “Permanently store this exception” and click “Confirm Security Exception”.

And you will be redirected to your home page using https.

Summary

In this post we have shown how to configure Apache and name-based virtual hosting with SSL to secure data transmission. If for some reason you ran into any issues, feel free to let us know using the comment form below. We will be more than glad to help you perform a successful set up.


Configuring Squid Proxy Server with Restricted Access and Setting Up Clients to Use Proxy – Part 5

A Linux Foundation Certified Engineer is a skilled professional who has the expertise to install, manage, and troubleshoot network services in Linux systems, and is in charge of the design, implementation and ongoing maintenance of the system-wide architecture.


In Part 1 of this series, we showed how to install squid, a proxy caching server for web clients. Please refer to that post (link given below) before proceeding if you haven’t installed squid on your system yet.

In this article, we will show you how to configure the Squid proxy server in order to grant or restrict Internet access, and how to configure an http client, or web browser, to use that proxy server.

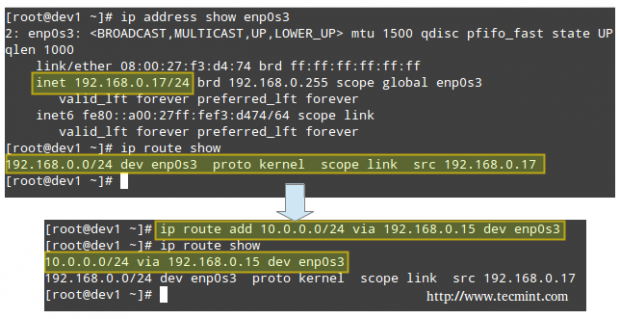

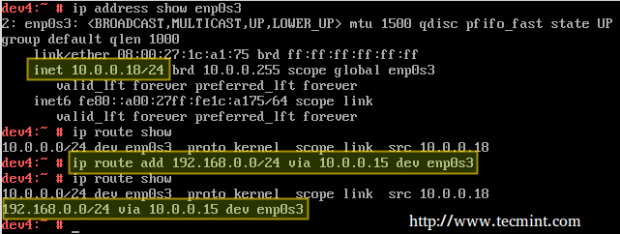

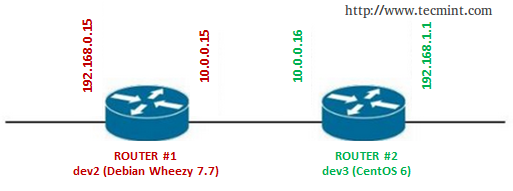

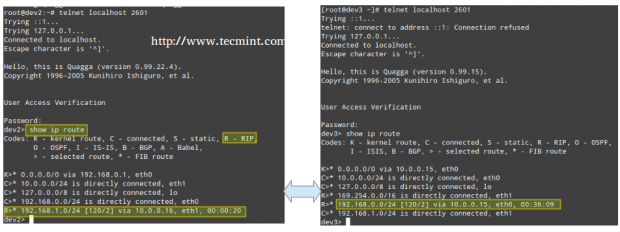

My Testing Environment Setup

Squid Server

Operating System : Debian Wheezy 7.5

IP Address : 192.168.0.15

Hostname : dev2.gabrielcanepa.com.ar

Client Machine 1

Operating System : Ubuntu 12.04

IP Address : 192.168.0.104

Hostname : ubuntuOS.gabrielcanepa.com.ar

Client Machine 2

Operating System : CentOS-7.0-1406

IP Address : 192.168.0.17

Hostname : dev1.gabrielcanepa.com.ar

Let us remember that, in simple terms, a web proxy server is an intermediary between one (or more) client computers and a certain network resource, the most common being access to the Internet. In other words, the proxy server is connected on one side directly to the Internet (or to a router that is connected to the Internet) and on the other side to a network of client computers that will access the World Wide Web through it.

You may be wondering, why would I want to add yet another piece of software to my network infrastructure?

Here are the top 3 reasons:

1. Squid stores files from previous requests to speed up future transfers. For example, suppose client1 downloads CentOS-7.0-1406-x86_64-DVD.iso from the Internet. When client2 requests access to the same file, squid can transfer the file from its cache instead of downloading it again from the Internet. As you can guess, you can use this feature to speed up data transfers in a network of computers that require frequent updates of some kind.

2. ACLs (Access Control Lists) allow us to restrict the access to websites, and / or monitor the access on a per user basis. You can restrict access based on day of week or time of day, or domain, for example.

3. Bypassing web filters is made possible through the use of a web proxy to which requests are made and which returns requested content to a client, instead of having the client request it directly to the Internet.

For example, suppose you are logged on in client1 and want to access www.facebook.com through your company’s router. Since the site may be blocked by your company’s policies, you can instead connect to a web proxy server and have it request access to www.facebook.com. Remote content is then returned to you through the web proxy server again, bypassing your company’s router’s blocking policies.

Configuring Squid – The Basics

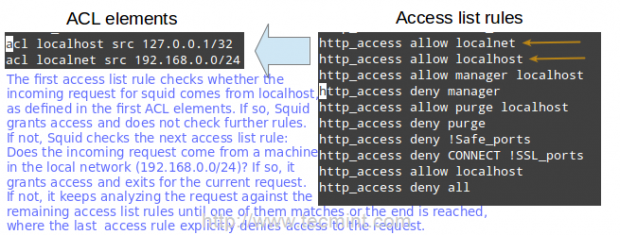

The access control scheme of the Squid web proxy server consists of two different components:

- The ACL elements are directive lines that begin with the word “acl” and represent types of tests that are performed against any request transaction.

- The access list rules consist of an allow or deny action followed by a number of ACL elements, and are used to indicate what action or limitation has to be enforced for a given request. They are checked in order, and list searching terminates as soon as one of the rules is a match. If a rule has multiple ACL elements, it is implemented as a boolean AND operation (all ACL elements of the rule must be a match in order for the rule to be a match).

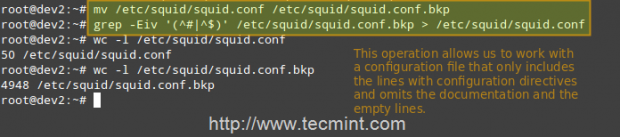

Squid’s main configuration file is /etc/squid/squid.conf, which is ~5000 lines long since it includes both configuration directives and documentation. For that reason, we will create a new squid.conf file with only the lines that include configuration directives for our convenience, leaving out empty or commented lines. To do so, we will use the following commands.

# mv /etc/squid/squid.conf /etc/squid/squid.conf.bkp

And then,

# grep -Eiv '(^#|^$)' /etc/squid/squid.conf.bkp > /etc/squid/squid.conf

OR

# grep -ve '^#' -ve '^$' /etc/squid/squid.conf.bkp > /etc/squid/squid.conf
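To see what the filter keeps and what it drops before applying it to the real file, it can be tried on a small sample first. This is a minimal sketch, assuming a throwaway file (sample_conf is a hypothetical name) in place of /etc/squid/squid.conf.bkp:

```shell
sample_conf="$(mktemp)"
cat > "$sample_conf" <<'EOF'
# TAG: http_port
#   Usage: port [options]

http_port 3128

# TAG: acl
acl localnet src 192.168.0.0/24
EOF

# -v inverts the match; -E enables the (a|b) alternation.
# Lines starting with '#' and empty lines are dropped.
stripped="$(grep -Ev '(^#|^$)' "$sample_conf")"
printf '%s\n' "$stripped"
```

Only the two directive lines survive, which is what the new squid.conf is meant to contain.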

Now, open the newly created squid.conf file, and look for (or add) the following ACL elements and access lists.

acl localhost src 127.0.0.1/32

acl localnet src 192.168.0.0/24

The two lines above represent a basic example of the usage of ACL elements.

- The first word, acl, indicates that this is an ACL element directive line.

- The second word, localhost or localnet, specifies a name for the directive.

- The third word, src in this case, is an ACL element type that is used to represent a client IP address or range of addresses. You can specify a single host by IP (or hostname, if you have some sort of DNS resolution implemented) or by network address.

- The fourth parameter is a filtering argument that is “fed” to the directive.

The two lines below are access list rules and represent an explicit implementation of the ACL directives mentioned earlier. In short, they indicate that http access should be granted if the request comes from the local network (localnet) or from localhost. Which addresses count as the local network or the local host? Those specified in the localnet and localhost directives.

http_access allow localnet

http_access allow localhost

At this point you can restart Squid in order to apply any pending changes.

# service squid restart [Upstart / sysvinit-based distributions]

# systemctl restart squid.service [systemd-based distributions]

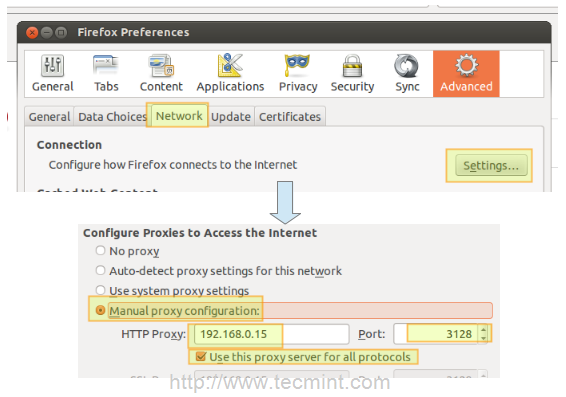

and then configure a client browser in the local network (192.168.0.104 in our case) to access the Internet through your proxy as follows.

In Firefox

1. Go to the Edit menu and choose the Preferences option.

2. Click on Advanced, then on the Network tab, and finally on Settings…

3. Check Manual proxy configuration and enter the IP address of the proxy server and the port where it is listening for connections.

Note that by default, Squid listens on port 3128, but you can override this behaviour by editing the http_port directive (by default it reads http_port 3128).

4. Click OK to apply the changes and you’re good to go.

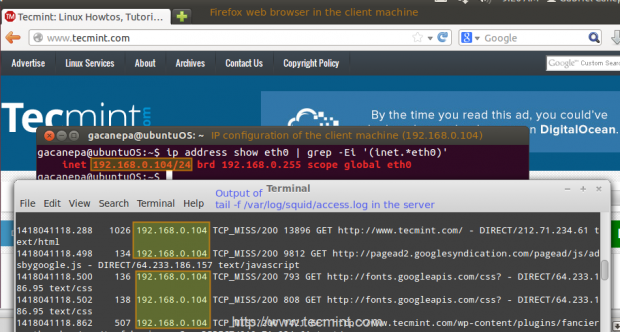

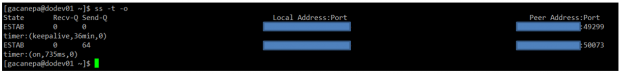

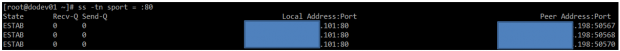

Verifying that a Client is Accessing the Internet

You can now verify that your local network client is accessing the Internet through your proxy as follows.

1. In your client, open up a terminal and type,

# ip address show eth0 | grep -Ei '(inet.*eth0)'

That command will display the current IP address of your client (192.168.0.104 in the following image).

2. In your client, use a web browser to open any given web site (www.tecmint.com in this case).

3. In the server, run.

# tail -f /var/log/squid/access.log

and you’ll get a live view of requests being served through Squid.

Restricting Access By Client

Now suppose you want to explicitly deny access to that particular client IP address, while still maintaining access for the rest of the local network.

1. Define a new ACL directive as follows (I’ve named it ubuntuOS but you can name it whatever you want).

acl ubuntuOS src 192.168.0.104

2. Add the ACL directive to the localnet access list that is already in place, but prefacing it with an exclamation sign. This means, “Allow Internet access to clients matching the localnet ACL directive except to the one that matches the ubuntuOS directive”.

http_access allow localnet !ubuntuOS

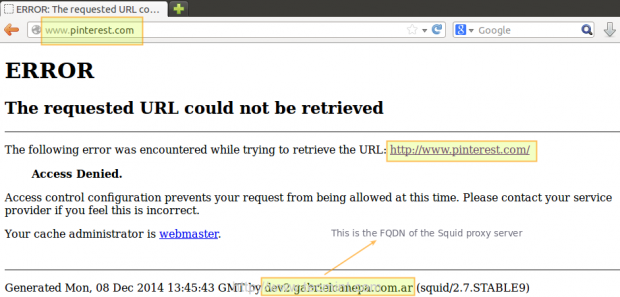

3. Now we need to restart Squid in order to apply changes. Then if we try to browse to any site we will find that access is denied now.

Configuring Squid – Fine Tuning

Restricting access by domain and / or by time of day / day of week

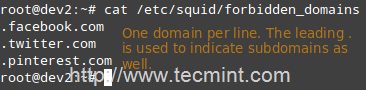

To restrict access to Squid by domain we will use the dstdomain keyword in an ACL directive, as follows.

acl forbidden dstdomain "/etc/squid/forbidden_domains"

Where forbidden_domains is a plain text file that contains the domains that we desire to deny access to.
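As an illustration, a forbidden_domains file could look like the one created below. This is a sketch under assumptions: the listed domains are arbitrary examples, and DOMAINS_FILE points to a scratch file rather than /etc/squid/forbidden_domains. In a dstdomain list, a leading dot makes the entry match the domain itself and all of its subdomains.

```shell
# DOMAINS_FILE is an illustrative stand-in for /etc/squid/forbidden_domains.
DOMAINS_FILE="${DOMAINS_FILE:-$(mktemp)}"

# One domain per line; the leading dot also covers subdomains (www., m., ...).
cat > "$DOMAINS_FILE" <<'EOF'
.facebook.com
.twitter.com
EOF

# Count the non-empty entries as a quick sanity check.
echo "Forbidden domains listed: $(grep -c . "$DOMAINS_FILE")"
```

Keeping the list in its own file means domains can be added or removed without editing squid.conf itself; Squid only needs to be restarted or reconfigured to pick up the change.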

Finally, we must grant access to Squid for requests not matching the directive above.

http_access allow localnet !forbidden

Or maybe we will only want to allow access to those sites during a certain time of the day (10:00 until 11:00 am) only on Monday (M), Wednesday (W), and Friday (F).

acl someDays time MWF 10:00-11:00

http_access allow forbidden someDays

http_access deny forbidden

Otherwise, access to those domains will be blocked.

Restricting access by user authentication

Squid supports several authentication mechanisms (Basic, NTLM, Digest, SPNEGO, and Oauth) and helpers (SQL database, LDAP, NIS, NCSA, to name a few). In this tutorial we will use Basic authentication with NCSA.

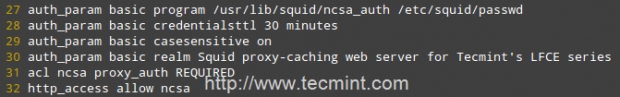

Add the following lines to your /etc/squid/squid.conf file.

auth_param basic program /usr/lib/squid/ncsa_auth /etc/squid/passwd

auth_param basic credentialsttl 30 minutes

auth_param basic casesensitive on

auth_param basic realm Squid proxy-caching web server for Tecmint's LFCE series

acl ncsa proxy_auth REQUIRED

http_access allow ncsa

Note: In CentOS 7, the NCSA plugin for squid can be found in /usr/lib64/squid/basic_ncsa_auth, so change the first line above accordingly.

A few clarifications:

- We need to tell Squid which authentication helper program to use with the auth_param directive by specifying the name of the program (most likely, /usr/lib/squid/ncsa_auth or /usr/lib64/squid/basic_ncsa_auth), plus any command line options (/etc/squid/passwd in this case) if necessary.

- The /etc/squid/passwd file is created through htpasswd, a tool to manage basic authentication through files. It will allow us to add a list of usernames (and their corresponding passwords) that will be allowed to use Squid.

- credentialsttl 30 minutes will require entering your username and password every 30 minutes (you can specify this time interval with hours as well).

- casesensitive on indicates that usernames and passwords are case sensitive.

- realm represents the text of the authentication dialog that will be used to authenticate to squid.

- Finally, access is granted only when proxy authentication (proxy_auth REQUIRED) succeeds.

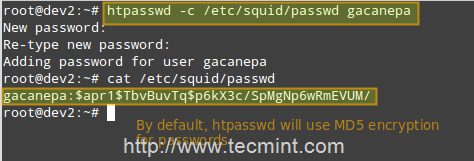

Run the following command to create the file and to add credentials for user gacanepa (omit the -c flag if the file already exists).

# htpasswd -c /etc/squid/passwd gacanepa

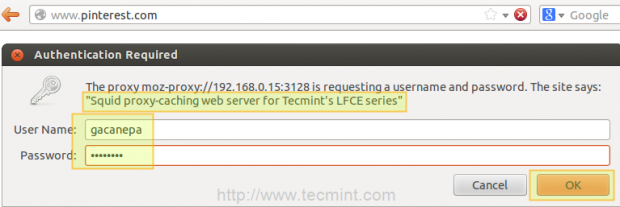

Open a web browser in the client machine and try to browse to any given site.

If authentication succeeds, access is granted to the requested resource. Otherwise, access will be denied.

Using Cache to Speed Up Data Transfer

One of Squid’s distinguishing features is the possibility of caching resources requested from the web to disk in order to speed up future requests of those objects either by the same client or others.

Add the following directives in your squid.conf file.

cache_dir ufs /var/cache/squid 1000 16 256

maximum_object_size 100 MB

refresh_pattern .*\.(mp4|iso) 2880 0% 2880

A few clarifications of the above directives.

- ufs is the Squid storage format.

- /var/cache/squid is a top-level directory where cache files will be stored. This directory must exist and be writeable by Squid (Squid will NOT create this directory for you).

- 1000 is the amount (in MB) to use under this directory.

- 16 is the number of 1st-level subdirectories, whereas 256 is the number of 2nd-level subdirectories created within each of them under /var/cache/squid.

- The maximum_object_size directive specifies the maximum size of allowed objects in the cache.

- refresh_pattern tells Squid how to deal with specific file types (.mp4 and .iso in this case) and for how long it should store the requested objects in cache (2880 minutes = 2 days).

The first and second 2880 are lower and upper limits, respectively, on how long objects without an explicit expiry time will be considered recent, and thus will be served by the cache, whereas 0% is the percentage of the objects’ age (time since last modification) that each object without explicit expiry time will be considered recent.

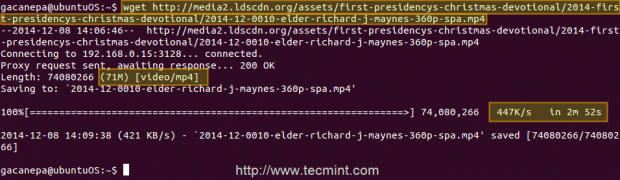

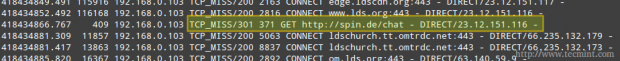

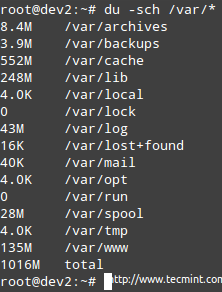

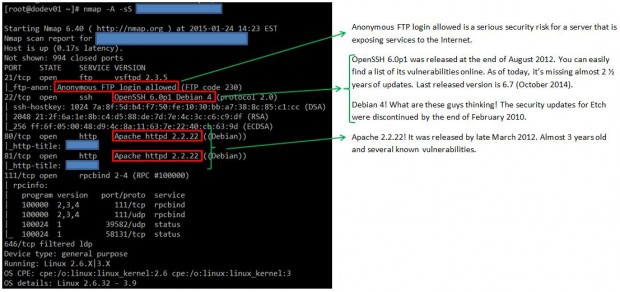

Case study: downloading a .mp4 file from 2 different clients and testing the cache

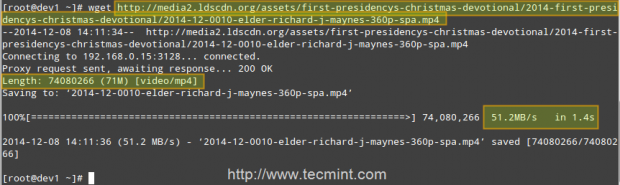

First client (IP 192.168.0.104) downloads a 71 MB .mp4 file in 2 minutes and 52 seconds.

Second client (IP 192.168.0.17) downloads the same file in 1.4 seconds!

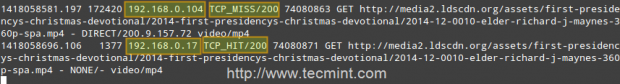

That is because the file was served from the Squid cache (indicated by TCP_HIT/200) in the second case, as opposed to the first instance, when it was downloaded directly from the Internet (represented by TCP_MISS/200).

The HIT and MISS keywords, along with the 200 http response code, indicate that the file was served successfully both times, but the cache was hit in the second request and missed in the first. When a request cannot be served from the cache for some reason, Squid attempts to serve it from the Internet.
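A quick way to see how often the cache is hit is to count the result codes in the access log. The sketch below assumes Squid's default (native) log format, in which the fourth field holds the result code and the HTTP status (for example, TCP_HIT/200). The function name count_results and the sample log lines are made up for illustration.

```shell
# Print how many requests ended with each result code (field 4 of the native log).
count_results() {
    awk '{ split($4, code, "/"); seen[code[1]]++ }
         END { for (c in seen) print c, seen[c] }' "$1" | sort
}

# Hypothetical sample entries; on a real server pass /var/log/squid/access.log.
sample_log="$(mktemp)"
cat > "$sample_log" <<'EOF'
1410000000.123 172000 192.168.0.104 TCP_MISS/200 74448896 GET http://example.com/video.mp4 - DIRECT/93.184.216.34 video/mp4
1410000300.456 1400 192.168.0.17 TCP_HIT/200 74448896 GET http://example.com/video.mp4 - NONE/- video/mp4
1410000400.789 1300 192.168.0.17 TCP_HIT/200 74448896 GET http://example.com/video.mp4 - NONE/- video/mp4
EOF

count_results "$sample_log"
```

A growing share of TCP_HIT entries over time suggests that the cache_dir and refresh_pattern settings are paying off for the file types you care about.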

Conclusion

In this article we have discussed how to set up a Squid web caching proxy. You can use the proxy server to filter content using the criteria of your choice, and also to reduce latency, since identical incoming requests are served from the cache, which is closer to the client than the web server that actually hosts the content. Caching also reduces network traffic, which saves you money if you’re paying for bandwidth.

You may want to refer to the Squid web site for further documentation (make sure to also check the wiki), but do not hesitate to contact us if you have any questions or comments. We will be more than glad to hear from you!

Configuring SquidGuard, Enabling Content Rules and Analyzing Squid Logs – Part 6

A LFCE (Linux Foundation Certified Engineer) is a professional who has the necessary skills to install, manage, and troubleshoot network services in Linux systems, and is in charge of the design, implementation and ongoing maintenance of the system architecture in its entirety.


In previous posts we discussed how to install Squid + squidGuard and how to configure squid to properly handle or restrict access requests. Please make sure you go over those two tutorials and install both Squid and squidGuard before proceeding as they set the background and the context for what we will cover in this post: integrating squidguard in a working squid environment to implement blacklist rules and content control over the proxy server.

Requirements

- Install Squid and SquidGuard – Part 1

- Configuring Squid Proxy Server with Restricted Access – Part 5

What Can / Cannot I use SquidGuard For?

Though squidGuard will certainly boost and enhance Squid’s features, it is important to highlight what it can and what it cannot do.

squidGuard can be used to:

- limit the allowed web access for some users to a list of accepted/well known web servers and/or URLs only, while denying access to other blacklisted web servers and/or URLs.

- block access to sites (by IP address or domain name) matching a list of regular expressions or words for some users.

- require the use of domain names/prohibit the use of IP address in URLs.

- redirect blocked URLs to error or info pages.

- use distinct access rules based on time of day, day of the week, date etc.

- implement different rules for distinct user groups.

However, neither squidGuard nor Squid can be used to:

- analyze text inside documents and act on the result.

- detect or block embedded scripting languages like JavaScript, Python, or VBscript inside HTML code.

BlackLists – The Basics

Blacklists are an essential part of squidGuard. Basically, they are plain text files that will allow you to implement content filters based on specific keywords. There are both freely available and commercial blacklists, and you can find the download links in the squidguard blacklists project’s website.

In this tutorial I will show you how to integrate the blacklists provided by Shalla Secure Services to your squidGuard installation. These blacklists are free for personal / non-commercial use and are updated on a daily basis. They include, as of today, over 1,700,000 entries.

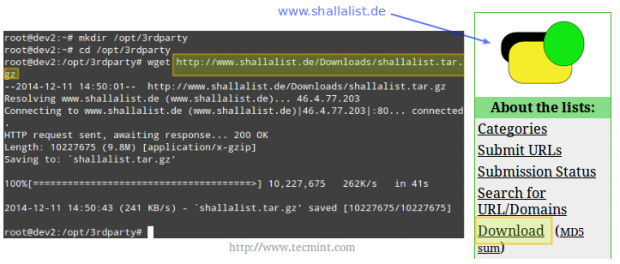

For our convenience, let’s create a directory to download the blacklist package.

# mkdir /opt/3rdparty

# cd /opt/3rdparty

# wget http://www.shallalist.de/Downloads/shallalist.tar.gz

The latest download link is always available as highlighted below.

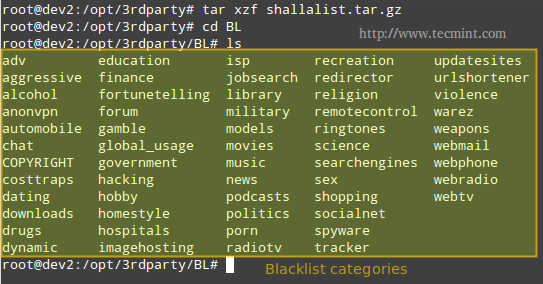

After untarring the newly downloaded file, we will browse to the blacklist (BL) folder.

# tar xzf shallalist.tar.gz

# cd BL

# ls

You can think of the directories shown in the output of ls as blacklist categories, and their corresponding (optional) subdirectories as subcategories, descending all the way down to specific URLs and domains, which are listed in the files urls and domains, respectively. Refer to the below image for further details.

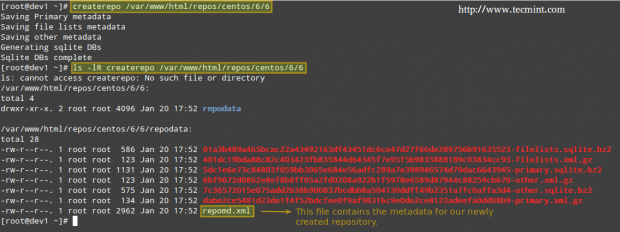

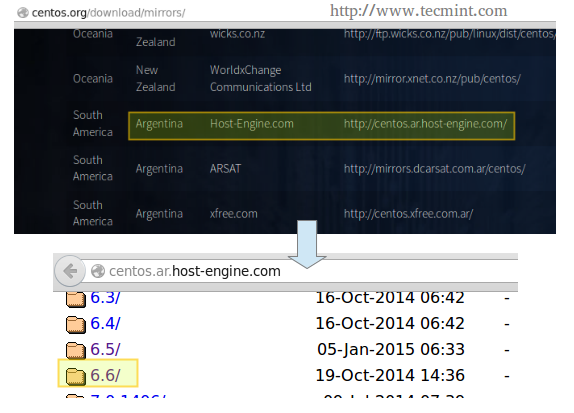

Installing Blacklists

Installation of the whole blacklist package, or of individual categories, is performed by copying the BL directory, or one of its subdirectories, respectively, to the /var/lib/squidguard/db directory.

Of course you could have downloaded the blacklist tarball to this directory in the first place, but the approach explained earlier gives you more control over what categories should be blocked (or not) at a specific time.

Next, I will show you how to install the anonvpn, hacking, and chat blacklists and how to configure squidGuard to use them.

Step 1: Copy recursively the anonvpn, hacking, and chat directories from /opt/3rdparty/BL to /var/lib/squidguard/db.

# cp -a /opt/3rdparty/BL/anonvpn /var/lib/squidguard/db

# cp -a /opt/3rdparty/BL/hacking /var/lib/squidguard/db

# cp -a /opt/3rdparty/BL/chat /var/lib/squidguard/db

Step 2: Use the domains and urls files to create squidguard’s database files. Please note that the following command will work for creating .db files for all the installed blacklists – even when a certain category has 2 or more subcategories.

# squidGuard -C all

Step 3: Change the ownership of the /var/lib/squidguard/db/ directory and its contents to the proxy user so that Squid can read the database files.

# chown -R proxy:proxy /var/lib/squidguard/db/

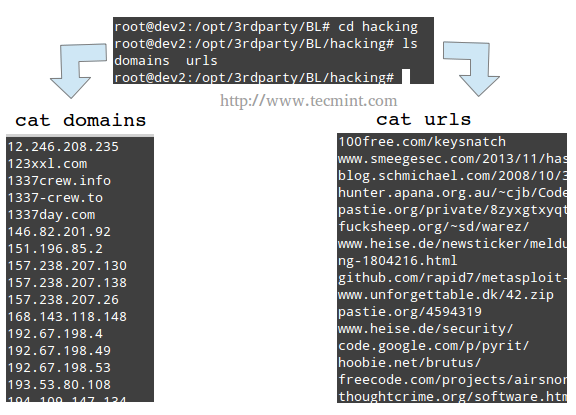

Step 4: Configure Squid to use squidGuard. We will use Squid’s url_rewrite_program directive in /etc/squid/squid.conf to tell Squid to use squidGuard as a URL rewriter / redirector.

Add the following line to squid.conf, making sure that /usr/bin/squidGuard is the right absolute path in your case.

# which squidGuard

# echo "url_rewrite_program $(which squidGuard)" >> /etc/squid/squid.conf

# tail -n 1 /etc/squid/squid.conf
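Because the echo command appends a new line every time it runs, repeating Step 4 would leave duplicate url_rewrite_program lines in squid.conf. A small guard avoids that. This is a sketch only: ensure_line is a hypothetical helper, and conf_file is a scratch file standing in for /etc/squid/squid.conf.

```shell
# Append a line to a file only if that exact line is not already present.
ensure_line() {
    # -q: quiet, -x: match the whole line, -F: treat the text literally.
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

conf_file="$(mktemp)"
directive="url_rewrite_program /usr/bin/squidGuard"

ensure_line "$conf_file" "$directive"
ensure_line "$conf_file" "$directive"   # second call changes nothing

tail -n 1 "$conf_file"
```

Matching the whole line literally keeps the check from being fooled by a commented-out copy of the directive or by a path that merely contains the same text.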

Step 5: Add the necessary directives to squidGuard’s configuration file (located in /etc/squidguard/squidGuard.conf).

Please refer to the screenshot that follows the code below for further clarification.

src localnet {

ip 192.168.0.0/24

}

dest anonvpn {

domainlist anonvpn/domains

urllist anonvpn/urls

}

dest hacking {

domainlist hacking/domains

urllist hacking/urls

}

dest chat {

domainlist chat/domains

urllist chat/urls

}

acl {

localnet {

pass !anonvpn !hacking !chat !in-addr all

redirect http://www.lds.org

}

default {

pass local none

}

}

Step 6: Restart Squid and test.

# service squid restart [sysvinit / Upstart-based systems]

# systemctl restart squid.service [systemd-based systems]

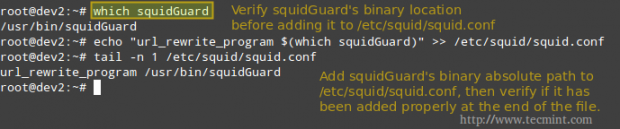

Open a web browser in a client within local network and browse to a site found in any of the blacklist files (domains or urls – we will use http://spin.de/ chat in the following example) and you will be redirected to another URL, www.lds.org in this case.

You can verify that the request was made to the proxy server but was denied (301 http response – Moved permanently) and was redirected to www.lds.org instead.

Removing Restrictions

If for some reason you need to enable a category that has been blocked in the past, remove the corresponding directory from /var/lib/squidguard/db and comment (or delete) the related acl in the squidguard.conf file.

For example, if you want to enable the domains and urls blacklisted by the anonvpn category, you would need to perform the following steps.

# rm -rf /var/lib/squidguard/db/anonvpn

And edit the squidguard.conf file as follows.

Please note that parts highlighted in yellow under BEFORE have been deleted in AFTER.

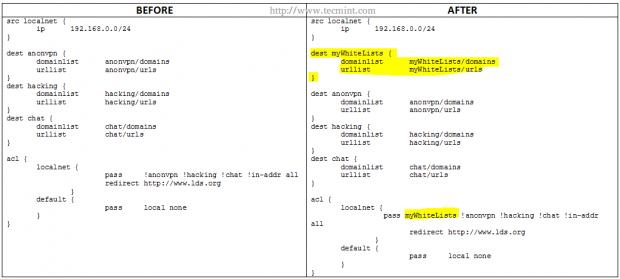

Whitelisting Specific Domains and URLs

On occasion you may want to allow certain URLs or domains, but not an entire blacklisted directory. In that case, you should create a directory named myWhiteLists (or whatever name you choose) and insert the desired URLs and domains under /var/lib/squidguard/db/myWhiteLists in files named urls and domains, respectively.

Then, initialize the new content rules as before,

# squidGuard -C all

and modify the squidguard.conf as follows.

As before, the parts highlighted in yellow indicate the changes that need to be added. Note that the myWhiteLists string needs to be first in the row that starts with pass.
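The whitelist files and the corresponding configuration might look as follows. This is a sketch that follows the pattern of the dest blocks from Step 5; the whitelisted entries are arbitrary examples, and DB_DIR is a scratch directory standing in for /var/lib/squidguard/db.

```shell
# DB_DIR is an illustrative stand-in for /var/lib/squidguard/db.
DB_DIR="${DB_DIR:-$(mktemp -d)}"

# Whitelisted domains and URLs go in files named domains and urls.
mkdir -p "$DB_DIR/myWhiteLists"
printf '%s\n' 'spin.de' > "$DB_DIR/myWhiteLists/domains"
printf '%s\n' 'example.com/chat/help' > "$DB_DIR/myWhiteLists/urls"

# Print the lines to merge into squidGuard.conf by hand.
cat <<'EOF'
dest myWhiteLists {
    domainlist myWhiteLists/domains
    urllist    myWhiteLists/urls
}

# Inside the localnet block of the acl section, myWhiteLists goes first:
pass myWhiteLists !anonvpn !hacking !chat !in-addr all
EOF
```

Placing myWhiteLists first matters because the entries in the pass row are evaluated from left to right, so a whitelisted site is allowed before any of the blacklist categories can reject it.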

Finally, remember to restart Squid in order to apply changes.

Conclusion

After following the steps outlined in this tutorial you should have a powerful content filter and URL redirector working hand in hand with your Squid proxy. If you experience any issues during your installation / configuration process or have any questions or comments, you may want to refer to squidGuard’s web documentation but always feel free to drop us a line using the form below and we will get back to you as soon as possible.

Setting Up Email Services (SMTP, IMAP and IMAPS) and Restricting Access to SMTP – Part 7

A LFCE (Linux Foundation Certified Engineer) is a trained professional who has the skills to install, manage, and troubleshoot network services in Linux systems, and is in charge of the design, implementation and ongoing maintenance of the system architecture and user administration.


In a previous tutorial we discussed how to install the necessary components of a mail service. If you haven’t installed Postfix and Dovecot yet, please refer to Part 1 of this series for instructions to do so before proceeding.

Requirement

In this post, I will show you how to configure your mail server and how to perform the following tasks:

- Configure email aliases

- Configure an IMAP and IMAPS service

- Configure an smtp service

- Restrict access to an smtp server

Note that our setup will only cover a mail server for a local area network where the machines belong to the same domain. Sending email messages to other domains requires a more complex setup, including domain name resolution capabilities, which is out of the scope of the LFCE certification.

But first off, let’s start with a few definitions.

Components Of a Mail Sending, Transport and Delivery Process

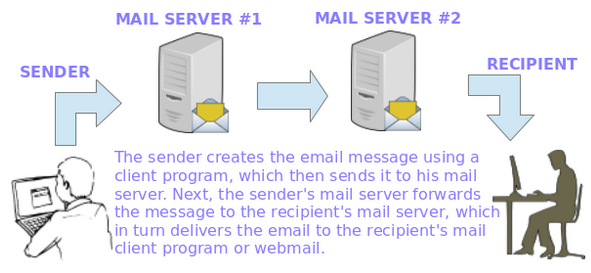

The following image illustrates the process of email transport starting with the sender until the message reaches the recipient’s inbox:

To make this possible, several things happen behind the scenes. In order for an email message to be delivered from a client application (such as Thunderbird, Outlook, or webmail services such as Gmail or Yahoo! Mail) to his / her mail server and from there to the destination server and finally to its intended recipient, a SMTP (Simple Mail Transfer Protocol) service must be in place in each server.

When talking about email services, you will find the following terms mentioned very often:

Message Transport Agent – MTA

MTA (short for Mail or Message Transport Agent), aka mail relay, is the software in charge of transferring email messages from a server to a client (and the other way around as well). In this series, Postfix acts as our MTA.

Mail User Agent – MUA

MUA, or Mail User Agent, is a computer program used to access and manage the user’s email inboxes. Examples of MUAs include, but are not limited to, Thunderbird, Outlook, and webmail interfaces such as Gmail, Outlook.com, to name a few. In this series, we will use Thunderbird in our examples.

Mail Delivery Agent – MDA

MDA (short for Message or Mail Delivery Agent) is the software that actually delivers email messages to users’ inboxes. In this tutorial, we will use Dovecot as our MDA. Dovecot will also handle user authentication.

Simple Mail Transfer Protocol – SMTP

In order for these components to be able to “talk” to each other, they must “speak” the same “language” (or protocol), namely SMTP (Simple Mail Transfer Protocol) as defined in RFC 2821 (since superseded by RFC 5321). Most likely, you will have to refer to that RFC while setting up your mail server environment.

Other protocols that we need to take into account are IMAP4 (Internet Message Access Protocol), which allows us to manage email messages directly on the server without downloading them to our client’s hard drive, and POP3 (Post Office Protocol), which allows us to download the messages and folders to the user’s computer.

Our Testing Environment

Our testing environment is as follows:

Mail Server Setup

Mail Server OS : Debian Wheezy 7.5

IP Address : 192.168.0.15

Local Domain : example.com.ar

User Aliases : sysadmin@example.com.ar is aliased to gacanepa@example.com.ar and jdoe@example.com.ar

Client Machine Setup

Mail Client OS : Ubuntu 12.04

IP Address : 192.168.0.103

On our client, we have set up elementary DNS resolution adding the following line to the /etc/hosts file.

192.168.0.15 example.com.ar mailserver

Adding Email Aliases

By default, a message sent to a specific user is delivered to that user only. However, if you also want to deliver it to a group of users, or to a different user, you can create a mail alias or use one of the existing ones in the aliases file (/etc/aliases or /etc/postfix/aliases, depending on your distribution), following this syntax:

user1: user1, user2

Thus, emails sent to user1 will be also delivered to user2. Note that if you omit the word user1 after the colon, as in

user1: user2

the messages sent to user1 will only be sent to user2, and not to user1.

In the above example, user1 and user2 should already exist on the system. You may want to refer to Part 8 of the LFCS series if you need to refresh your memory before adding new users.

In our specific case, we will use the following alias as explained before (add the following line in /etc/aliases).

sysadmin: gacanepa, jdoe

Then run the following command to create or refresh the aliases lookup table (use the path of the file you edited), so that messages sent to sysadmin@example.com.ar are delivered to the inboxes of the users listed above.

# postalias /etc/aliases
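To double-check which recipients an alias expands to, the aliases file can be read directly. The following is a sketch under assumptions: alias_targets is a hypothetical helper, and it reads a scratch copy containing the alias from this article rather than the system file. It does not follow nested aliases or continuation lines.

```shell
# Print the recipients listed for one alias, one per line.
alias_targets() {
    awk -F: -v name="$1" '
        /^[ \t]*#/ { next }    # skip comment lines
        $1 == name {
            n = split($2, targets, ",")
            for (i = 1; i <= n; i++) {
                gsub(/^[ \t]+|[ \t]+$/, "", targets[i])
                if (targets[i] != "") print targets[i]
            }
        }' "$2"
}

# Scratch aliases file holding the alias used in this article.
aliases_file="$(mktemp)"
cat > "$aliases_file" <<'EOF'
# sample aliases
postmaster: root
sysadmin: gacanepa, jdoe
EOF

alias_targets sysadmin "$aliases_file"
```

Each name printed should correspond to an existing local user; otherwise mail sent to the alias cannot be delivered to that recipient.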

Configuring Postfix – The SMTP Service

The main configuration file for Postfix is /etc/postfix/main.cf. You only need to set up a few parameters before being able to use the mail service. However, you should become acquainted with the full configuration parameters (which can be listed with man 5 postconf) in order to set up a secure and fully customized mail server.

Note that this tutorial is only supposed to get you started in that process and does not represent a comprehensive guide on email services with Linux.

Open the /etc/postfix/main.cf file with your editor of choice and make the following changes as explained.

# vi /etc/postfix/main.cf

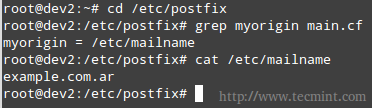

1. myorigin specifies the domain that appears in messages sent from the server. You may see the /etc/mailname file used with this parameter. Feel free to edit it if needed.

myorigin = /etc/mailname

If the value above is used, mails will be sent as user@example.com.ar, where user is the user sending the message.

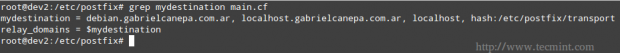

2. mydestination lists the domains for which this machine will deliver email messages locally, instead of forwarding them to another machine (acting as a relay system). The default settings will suffice in our case (make sure to edit the file to suit your environment).

The /etc/postfix/transport file defines the relationship between domains and the next server to which mail messages should be forwarded. In our case, since we will be delivering messages to our local area network only (thus bypassing any external DNS resolution), the following configuration will suffice.

example.com.ar local:
.example.com.ar local:

Next, we need to convert this plain text file to the .db format, which creates the lookup table that Postfix will actually use to know what to do with incoming and outgoing mail.

# postmap /etc/postfix/transport

You will need to remember to recreate this table if you add more entries to the corresponding text file.
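The transport steps can be rehearsed on a scratch file before editing /etc/postfix/transport. This is a minimal sketch under stated assumptions: TRANSPORT_FILE and bad_lines are names made up for this example, the two-field check is only a rough format test, and the hash table type is assumed to be available in your Postfix build.

```shell
# Scratch path by default; the article's real file is /etc/postfix/transport.
TRANSPORT_FILE="${TRANSPORT_FILE:-$(mktemp)}"

# One "pattern  transport:" pair per line, as in the text above.
printf '%s\n' \
    'example.com.ar    local:' \
    '.example.com.ar   local:' > "$TRANSPORT_FILE"

# Count lines that are not blank, not comments, and not exactly two fields.
bad_lines=$(awk 'NF && $1 !~ /^#/ && NF != 2 { n++ } END { print n + 0 }' "$TRANSPORT_FILE")
echo "malformed lines: $bad_lines"

# Build and query the lookup table only where Postfix is installed.
if command -v postmap >/dev/null 2>&1; then
    { postmap "hash:$TRANSPORT_FILE" && postmap -q example.com.ar "hash:$TRANSPORT_FILE"; } \
        || echo "postmap could not build or query the table" >&2
fi
```

Querying the table with postmap -q right after rebuilding it is a cheap way to confirm that Postfix will see the entry you just added.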

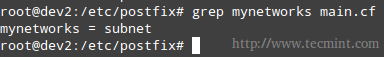

3. mynetworks defines the authorized networks Postfix will forward messages from. When mynetworks is not set explicitly, Postfix derives it from the mynetworks_style parameter. The value subnet tells Postfix to forward mail from SMTP clients in the same IP subnetworks as the local machine only.

mynetworks_style = subnet

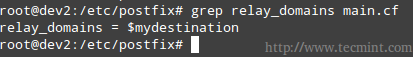

4. relay_domains specifies the destination domains to which this server will relay mail. We will leave the default value untouched, which points to mydestination. Remember that we are setting up a mail server for our LAN.

relay_domains = $mydestination

Note that you can use $mydestination instead of listing the actual contents.

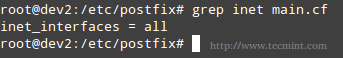

5. inet_interfaces defines which network interfaces the mail service should listen on. The default, all, tells Postfix to use all network interfaces.

inet_interfaces = all

6. Finally, mailbox_size_limit and message_size_limit will be used to set the size of each user’s mailbox and the maximum allowed size of individual messages, respectively, in bytes.

mailbox_size_limit = 51200000
message_size_limit = 5120000
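Since both limits are expressed in bytes, it can help to convert back and forth when choosing values. The helpers below are a small sketch; mib_to_bytes and bytes_to_mib are hypothetical names, and they assume binary units (1 MiB = 1024 * 1024 bytes).

```shell
# Convert a size in MiB to bytes.
mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

# Show a byte count in MiB with two decimals (C locale for a "." separator).
bytes_to_mib() {
    LC_ALL=C awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024) }'
}

bytes_to_mib 51200000   # mailbox_size_limit used in this article
bytes_to_mib 5120000    # message_size_limit used in this article
mib_to_bytes 50         # an exact 50 MiB limit, for comparison
```

The article's values come out slightly under 50 MiB and 5 MiB. If you prefer exact figures, something like postconf -e "message_size_limit=$(mib_to_bytes 5)" would write the computed value into main.cf (run as root on the mail server).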

Restricting Access to the SMTP Server

The Postfix SMTP server can apply certain restrictions to each client connection request. Not all clients should be allowed to identify themselves to the mail server using the SMTP HELO command, and certainly not all of them should be granted access to send or receive messages.

To implement these restrictions, we will use the following directives in the main.cf file. Though they are self-explanatory, comments have been added for clarification purposes.

# Require that a remote SMTP client introduces itself with the HELO or EHLO command before sending the MAIL command or other commands that require EHLO negotiation.
smtpd_helo_required = yes

# Permit the request when the client IP address matches any network or network address listed in $mynetworks
# Reject the request when the client HELO and EHLO command has a bad hostname syntax
smtpd_helo_restrictions = permit_mynetworks, reject_invalid_helo_hostname

# Reject the request when Postfix does not represent the final destination for the sender address
smtpd_sender_restrictions = permit_mynetworks, reject_unknown_sender_domain

# Reject the request unless 1) Postfix is acting as mail forwarder or 2) is the final destination
smtpd_recipient_restrictions = permit_mynetworks, reject_unauth_destination

The postconf manual page (man 5 postconf), which documents the Postfix configuration parameters, may come in handy in order to further explore the available options.

Configuring Dovecot

Right after installation, Dovecot supports the POP3 and IMAP protocols out of the box, along with their secure versions, POP3S and IMAPS, respectively.

Add the following lines to the /etc/dovecot/conf.d/10-mail.conf file.

# %u represents the user account that logs in
# Mailboxes are in mbox format
mail_location = mbox:~/mail:INBOX=/var/mail/%u

# Directory owned by the mail group and the directory set to group-writable (mode=0770, group=mail)
# You may need to change this setting if postfix is running as a different user / group on your system
mail_privileged_group = mail

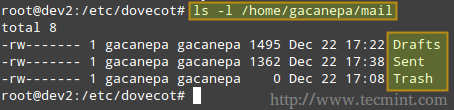

If you check your home directory, you will notice there is a mail subdirectory with the following contents.

Also, please note that the /var/mail/%u file is where the user’s mails are stored on most systems.

Add the following directive to /etc/dovecot/dovecot.conf (note that imap and pop3 imply imaps and pop3s as well).

protocols = imap pop3

Also make sure /etc/dovecot/conf.d/10-ssl.conf includes the following lines (otherwise, add them).

ssl_cert = </etc/dovecot/dovecot.pem
ssl_key = </etc/dovecot/private/dovecot.pem

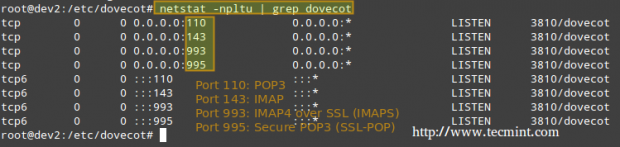

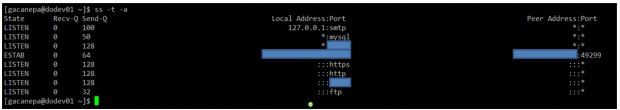

Now let’s restart Dovecot and verify that it listens on the ports related to imap, imaps, pop3, and pop3s.

# netstat -npltu | grep dovecot
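After restarting the service (on Debian Wheezy this is typically done with service dovecot restart), the port check can be made a little more explicit. The sketch below assumes the standard port assignments (pop3 = 110, imap = 143, imaps = 993, pop3s = 995); missing_mail_ports and EXPECTED_PORTS are hypothetical names introduced here.

```shell
# Standard ports assumed for pop3, imap, imaps and pop3s.
EXPECTED_PORTS="110 143 993 995"

# Read `netstat -nlt` or `ss -ltn` style lines on stdin and print every
# expected port that does NOT show up as a listening local port.
missing_mail_ports() {
    awk -v expected="$EXPECTED_PORTS" '
        {
            n = split($4, parts, ":")   # field 4 is the local address; the port follows the last colon
            seen[parts[n]] = 1
        }
        END {
            n = split(expected, want, " ")
            for (i = 1; i <= n; i++)
                if (!(want[i] in seen)) print want[i]
        }'
}

# Use whichever tool is available on this machine.
if command -v netstat >/dev/null 2>&1; then
    netstat -nlt 2>/dev/null | missing_mail_ports
elif command -v ss >/dev/null 2>&1; then
    ss -ltn 2>/dev/null | missing_mail_ports
fi
```

An empty result means all four ports are being listened on. Note that this only checks the port numbers, not which program owns them, so the netstat -p output above is still worth a look.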

Setting Up a Mail Client and Sending/Receiving Mails

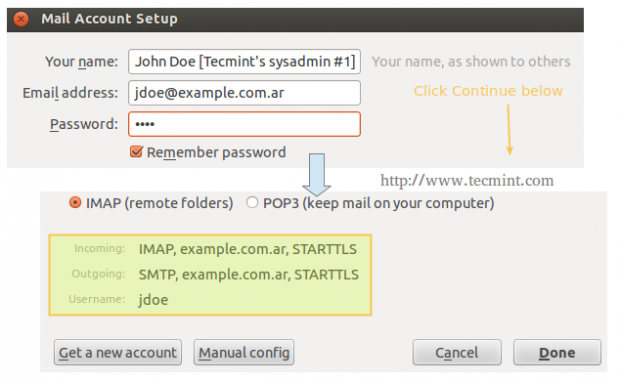

On our client computer, we will open Thunderbird and click on File → New → Existing mail account. We will be prompted to enter the name of the account and the associated email address, along with its password. When we click Continue, Thunderbird will then try to connect to the mail server in order to verify settings.

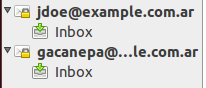

Repeat the process above for the next account (gacanepa@example.com.ar) and the following two inboxes should appear in Thunderbird’s left pane.

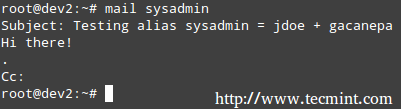

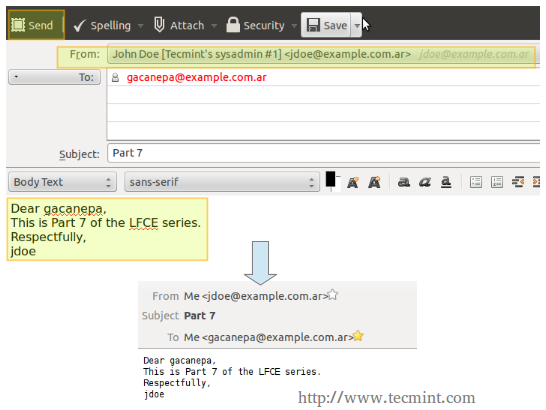

On our server, we will write an email message to sysadmin, which is aliased to jdoe and gacanepa.

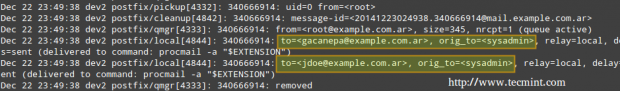

The mail log (/var/log/mail.log) seems to indicate that the email that was sent to sysadmin was relayed to jdoe@example.com.ar and gacanepa@example.com.ar, as can be seen in the following image.

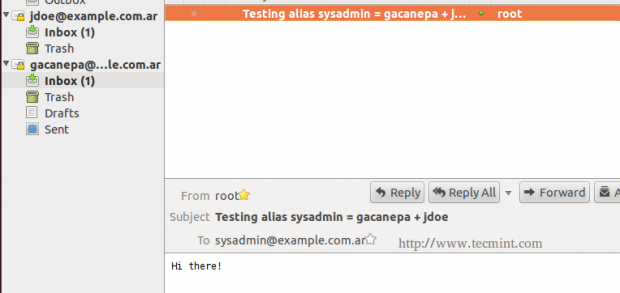

We can verify if the mail was actually delivered to our client, where the IMAP accounts were configured in Thunderbird.

Finally, let’s try to send a message from jdoe@example.com.ar to gacanepa@example.com.ar.

In the exam you will be asked to work exclusively with command-line utilities. This means you will not be able to install a desktop client application such as Thunderbird, but will be required to use mail instead. We have used Thunderbird in this chapter for illustrative purposes only.
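For practice with the command line, the alias test performed earlier can be repeated with the mail utility. What follows is a hedged sketch: it assumes a mailx-style mail command that accepts the common mail -s SUBJECT RECIPIENT form, and both send_test_mail and the MAILER override are hypothetical names added for illustration.

```shell
# MAILER is a hypothetical override hook; by default the `mail` client is used.
MAILER="${MAILER:-mail}"

# send_test_mail RECIPIENT SUBJECT BODY
# Pipes BODY to the mailer using the `mail -s SUBJECT RECIPIENT` form.
send_test_mail() {
    printf '%s\n' "$3" | "$MAILER" -s "$2" "$1"
}
```

On the mail server, send_test_mail sysadmin "Alias test" "This should reach gacanepa and jdoe" would send the message, and each recipient could then run mail without arguments to read their inbox. Checking /var/log/mail.log afterwards confirms how the message was delivered.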

Conclusion

In this post we have explained how to set up an IMAP mail server for your local area network and how to restrict access to the SMTP server. If you happen to run into an issue while implementing a similar setup in your testing environment, you will want to check the online documentation of Postfix and Dovecot (especially the pages about the main configuration files, /etc/postfix/main.cf and /etc/dovecot/dovecot.conf, respectively), but in any case do not hesitate to contact me using the comment form below. I will be more than glad to help you.

How To Setup an Iptables Firewall to Enable Remote Access to Services in Linux – Part 8


You will recall from Part 1 – About Iptables of this LFCE (Linux Foundation Certified Engineer) series that we gave a basic description of what a firewall is: a mechanism to manage packets coming into and leaving the network. By “manage” we actually mean:

- To allow or prevent certain packets to enter or leave our network.

- To forward other packets from one point of the network to another.

based on predetermined criteria.

In this article we will discuss how to implement basic packet filtering and how to configure the firewall with iptables, a frontend to netfilter, which is a native kernel module used for firewalling.

Please note that firewalling is a vast subject and this article is not intended to be a comprehensive guide to understanding all that there is to know about it, but rather as a starting point for a deeper study of this topic. However, we will revisit the subject in Part 10 of this series when we explore a few specific use cases of a firewall in Linux.

You can think of a firewall as an international airport where passenger planes come and go almost 24/7. Based on a number of conditions, such as the validity of a person’s passport, or his / her country of origin (to name a few examples) he or she may, or may not, be allowed to enter or leave a certain country.

At the same time, airport officers can instruct people to move from one place of the airport to another if necessary, for example when they need to go through Customs Services.

We may find the airport analogy useful during the rest of this tutorial. Just keep in mind the following relations as we proceed:

- Persons = Packets

- Firewall = Airport

- Country #1 = Network #1

- Country #2 = Network #2

- Airport regulations enforced by officers = firewall rules

Iptables – The Basics

At a low level, it is the kernel itself which “decides” what to do with packets based on rules grouped in chains, or sentences. These chains define what actions should be taken when a packet matches the criteria specified by them.

The first action taken by iptables will consist in deciding what to do with a packet:

- Accept it (let it go through into our network)?

- Reject it (prevent it from accessing our network)?

- Forward it (to another chain)?

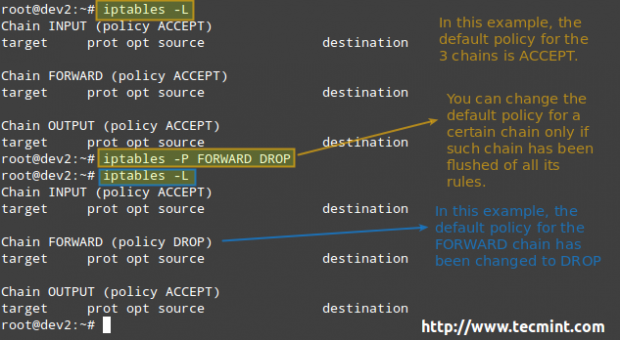

Just in case you were wondering why this tool is called iptables, it’s because these chains are organized in tables, with the filter table being the best known and the one that is used to implement packet filtering with its three default chains:

1. The INPUT chain handles packets coming into the network, which are destined for local programs.

2. The OUTPUT chain is used to analyze packets originating on the local machine, which are to be sent to the outside.

3. The FORWARD chain processes the packets which should be forwarded to another destination (as in the case of a router).

For each of these chains there is a default policy, which dictates what should be done by default when packets do not match any of the rules in the chain. You can view the rules created for each chain and the default policy by running the following command:

# iptables -L

The available policies are as follows:

- ACCEPT → lets the packet through. Any packet that does not match any rules in the chain is allowed into the network.

- DROP → drops the packet quietly. Any packet that does not match any rules in the chain is prevented from entering the network.

- REJECT → rejects the packet and returns an informative message. This one in particular does not work as a default policy. Instead, it is meant to complement packet filtering rules.

When it comes to deciding which policy you will implement, you need to consider the pros and cons of each approach as explained above – note that there is no one-size-fits-all solution.
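To see only the default policies without the full rule listing, the output of iptables -S (which prints policies as lines such as -P INPUT ACCEPT) can be filtered. This is a minimal sketch; list_policies is a hypothetical helper name, and the live query is attempted only when running as root with iptables installed.

```shell
# Read `iptables -S` output on stdin and print each chain's default policy.
# Policy lines have the form "-P CHAIN POLICY".
list_policies() {
    awk '$1 == "-P" { printf "%s default policy: %s\n", $2, $3 }'
}

# Only query the live firewall when it is possible to do so.
if [ "$(id -u)" -eq 0 ] && command -v iptables >/dev/null 2>&1; then
    iptables -S 2>/dev/null | list_policies
fi

# Changing a policy is done with -P (not executed here, since a DROP
# policy on INPUT can cut off a remote session):
#   iptables -P INPUT DROP
```

If you do switch INPUT to DROP on a remote machine, it is prudent to add a rule that accepts your own SSH connection first.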

Adding Rules

To add a rule to the firewall, invoke the iptables command as follows:

# iptables -A chain_name criteria -j target

where,

- -A stands for Append (append the current rule to the end of the chain).

- chain_name is either INPUT, OUTPUT, or FORWARD.

- target is the action, or policy, to apply in this case (ACCEPT, REJECT, or DROP).

- criteria is the set of conditions against which the packets are to be examined. It is composed of at least one (most likely more) of the following flags. Options inside brackets, separated by a vertical bar, are equivalent to each other. The rest represents optional switches:

[--protocol | -p] protocol: specifies the protocol involved in a rule.
[--source-port | --sport] port[:port]: defines the port (or range of ports) where the packet originated.
[--destination-port | --dport] port[:port]: defines the port (or range of ports) to which the packet is destined.
[--source | -s] address[/mask]: represents the source address or network/mask.
[--destination | -d] address[/mask]: represents the destination address or network/mask.
[--state] state (preceded by -m state): manages packets depending on their connection state, where state can be NEW, ESTABLISHED, RELATED, or INVALID.
[--in-interface | -i] interface: specifies the input interface of the packet.
[--out-interface | -o] interface: the output interface.
[--jump | -j] target: what to do when the packet matches the rule.
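The flags above can be combined into complete rules. The sketch below previews two such rules instead of applying them: run and DRY_RUN are hypothetical helpers introduced for this example, and the interface (eth0) and network (192.168.0.0/24) are assumptions taken from the test environment used in this article.

```shell
# DRY_RUN=1 (the default here) prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Accept new and established SSH connections arriving on eth0 from the LAN.
run iptables -A INPUT -i eth0 -p tcp --dport 22 -s 192.168.0.0/24 \
    -m state --state NEW,ESTABLISHED -j ACCEPT

# Quietly drop any other TCP traffic to port 22 on that interface.
run iptables -A INPUT -i eth0 -p tcp --dport 22 -j DROP
```

Rules are evaluated in order, so the ACCEPT rule must be appended before the DROP rule. Setting DRY_RUN=0 (as root) would apply the rules for real.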

Our Testing Environment

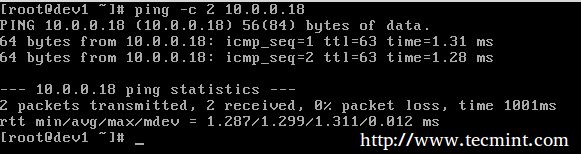

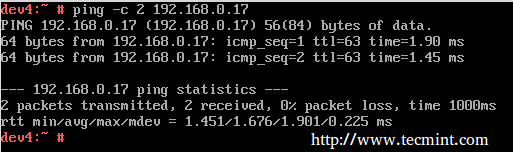

Let’s put all of this together in three classic examples, using the following test environment for the first two:

Firewall: Debian Wheezy 7.5
Hostname: dev2.gabrielcanepa.com
IP Address: 192.168.0.15

Source: CentOS 7
Hostname: dev1.gabrielcanepa.com
IP Address: 192.168.0.17

And this for the last example:

NFSv4 server and firewall: Debian Wheezy 7.5
Hostname: debian
IP Address: 192.168.0.10

Source: Debian Wheezy 7.5
Hostname: dev2.gabrielcanepa.com
IP Address: 192.168.0.15

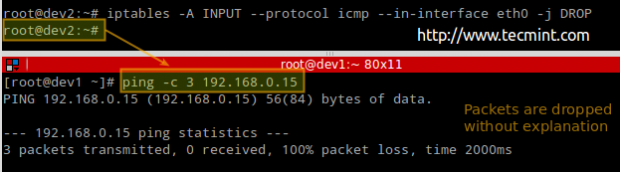

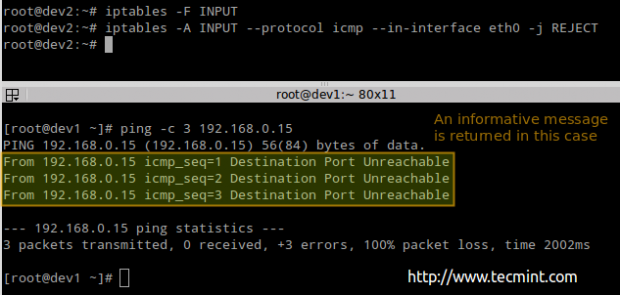

EXAMPLE 1: Analyzing the difference between the DROP and REJECT policies

We will define a DROP policy first for input pings to our firewall. That is, icmp packets will be dropped quietly.

# ping -c 3 192.168.0.15

# iptables -A INPUT --protocol icmp --in-interface eth0 -j DROP

Before proceeding with the REJECT part, we will flush all rules from the INPUT chain to make sure our packets will be tested by this new rule:

# iptables -F INPUT
# iptables -A INPUT --protocol icmp --in-interface eth0 -j REJECT

# ping -c 3 192.168.0.15

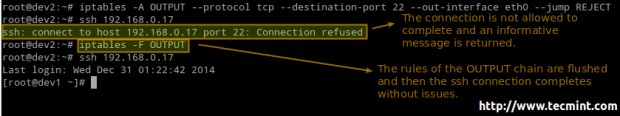
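The practical difference shows up in what ping reports: with DROP the client simply gets no replies, whereas with REJECT (whose default reply is an ICMP port-unreachable error) ping prints an "Unreachable" error for each probe. A rough way to tell the two apart from a script is sketched below; classify_ping is a hypothetical helper, and the matched strings assume the wording used by the common Linux ping utility.

```shell
# Read ping output on stdin and guess how the firewall treated the probes.
classify_ping() {
    output=$(cat)
    case "$output" in
        # Checked first: a rejected ping also ends with 100% packet loss.
        *"Unreachable"*)       echo "rejected (an ICMP error came back)" ;;
        *"100% packet loss"*)  echo "dropped or host down (no reply at all)" ;;
        *)                     echo "reachable" ;;
    esac
}
```

From the source machine, ping -c 3 192.168.0.15 2>&1 | classify_ping would then summarize the result. Note that a dropped ping is indistinguishable from a host that is powered off, which is exactly why DROP reveals less to the sender.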

EXAMPLE 2: Disabling / re-enabling ssh logins from dev2 to dev1

We will be dealing with the OUTPUT chain as we’re handling outgoing traffic:

# iptables -A OUTPUT --protocol tcp --destination-port 22 --out-interface eth0 --jump REJECT

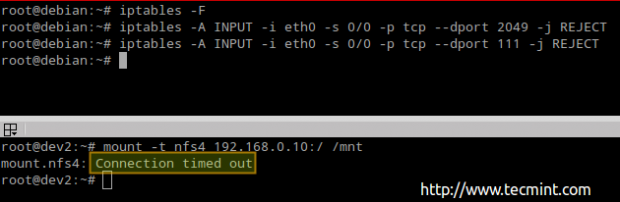

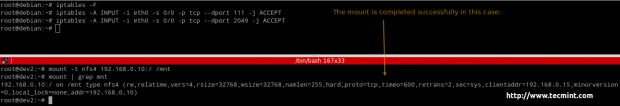
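To re-enable ssh logins, the rule has to be removed again. One way is to repeat the same rule specification with -D (delete) in place of -A. The helper below, undo_rule, is a hypothetical convenience that only rewrites the command text; it does not touch the firewall.

```shell
# Turn an "iptables -A ..." command line into the matching "iptables -D ..." one.
# Deleting with -D requires the same rule specification that was appended.
undo_rule() {
    printf '%s\n' "$1" | sed 's/^iptables -A /iptables -D /'
}

undo_rule 'iptables -A OUTPUT --protocol tcp --destination-port 22 --out-interface eth0 --jump REJECT'
```

Running the printed command as root on dev2 should remove the rule, after which ssh connections to dev1 work again. Alternatively, iptables -F OUTPUT flushes every rule in the chain, which is fine in a test lab but too blunt for a production firewall.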

EXAMPLE 3: Allowing / preventing NFS clients (from 192.168.0.0/24) from mounting NFS4 shares

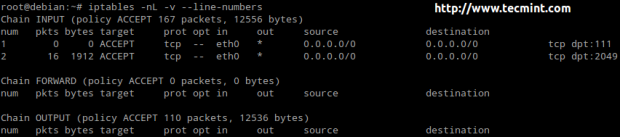

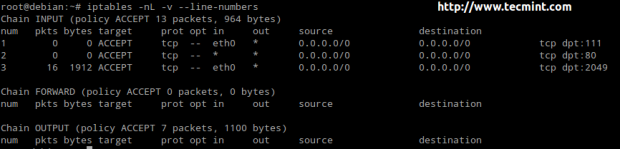

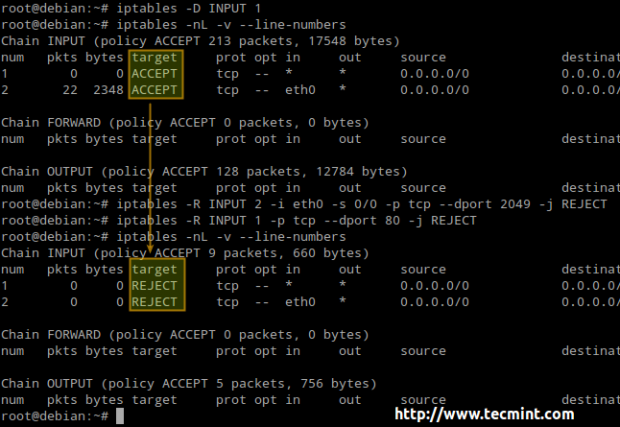

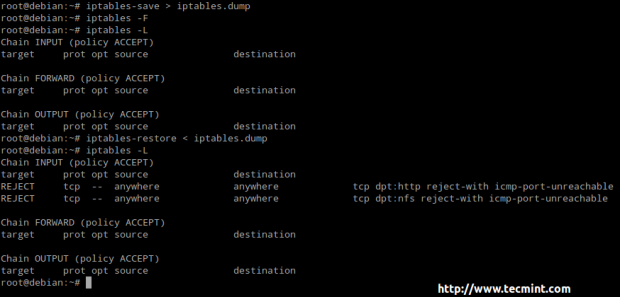

Run the following commands on the NFSv4 server / firewall to close ports 2049 and 111 for all kinds of traffic: