LFCS: How to use GNU ‘sed’ Command to Create, Edit, and Manipulate files in Linux – Part 1

The Linux Foundation announced the LFCS (Linux Foundation Certified Sysadmin) certification, a new program that aims at helping individuals all over the world to get certified in basic to intermediate system administration tasks for Linux systems. This includes supporting running systems and services, along with first-hand troubleshooting and analysis, and smart decision-making to escalate issues to engineering teams.


The series will be titled Preparation for the LFCS (Linux Foundation Certified Sysadmin) Parts 1 through 10 and cover the following topics for Ubuntu, CentOS, and openSUSE:

Important: Due to changes in the LFCS certification requirements effective Feb. 2, 2016, we are adding the following necessary topics to the LFCS series published here. To prepare for this exam, you are highly encouraged to use the LFCE series as well.

This post is Part 1 of a 20-tutorial series, which will cover the necessary domains and competencies that are required for the LFCS certification exam. That being said, fire up your terminal, and let’s start.

Processing Text Streams in Linux

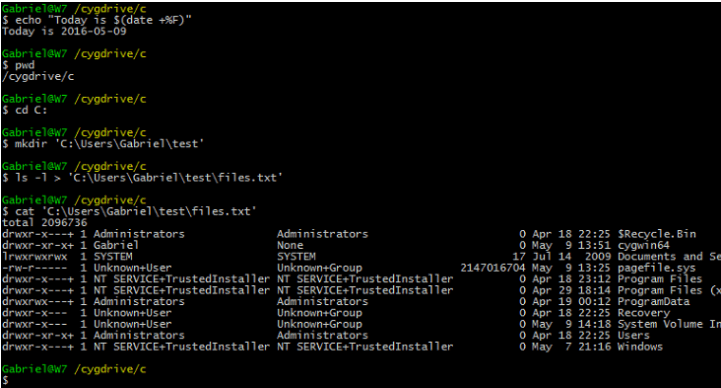

Linux treats the input to and the output from programs as streams (or sequences) of characters. To begin understanding redirection and pipes, we must first understand the three most important types of I/O (Input and Output) streams: standard input (stdin), standard output (stdout), and standard error (stderr). They are in fact special files (by convention in UNIX and Linux, data streams and peripherals, or device files, are also treated as ordinary files).

The difference between > (redirection operator) and | (pipeline operator) is that while the first connects a command with a file, the latter connects the output of a command with another command.

# command > file

# command1 | command2

Since the redirection operator creates or overwrites files silently, we must use it with extreme caution, and never confuse it with a pipeline. One advantage of pipes on Linux and UNIX systems is that there is no intermediate file involved with a pipe – the stdout of the first command is not written to a file and then read by the second command.
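To make the difference concrete, here is a minimal sketch that writes to a scratch file with > and >>, and then chains two commands with a pipe. The temporary directory and the file name out.txt are illustrative assumptions, not part of the original exercises.

```shell
# Work in a throwaway directory so nothing important can be overwritten.
tmp=$(mktemp -d)

printf 'first\n'  > "$tmp/out.txt"    # creates the file
printf 'second\n' > "$tmp/out.txt"    # silently overwrites it
printf 'third\n' >> "$tmp/out.txt"    # >> appends instead of overwriting
file_contents=$(cat "$tmp/out.txt")

# A pipe connects two commands directly; no intermediate file is created.
first_sorted=$(printf 'pear\napple\n' | sort | head -n 1)

printf '%s\n' "$file_contents" "$first_sorted"
```

In bash, running set -o noclobber makes > refuse to overwrite an existing file, which can serve as a safety net while practicing.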

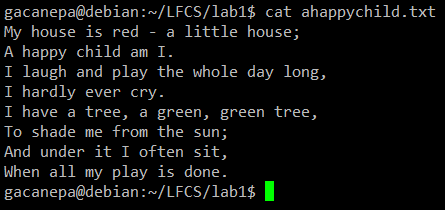

For the following practice exercises we will use the poem “A happy child” (anonymous author).

Using sed

The name sed is short for stream editor. For those unfamiliar with the term, a stream editor is used to perform basic text transformations on an input stream (a file or input from a pipeline).

The most basic (and popular) usage of sed is the substitution of characters. We will begin by changing every occurrence of the lowercase y to UPPERCASE Y and redirecting the output to ahappychild2.txt. The g flag indicates that sed should perform the substitution for all instances of term on every line of file. If this flag is omitted, sed will replace only the first occurrence of term on each line.

Basic syntax:

# sed 's/term/replacement/flag' file

Our example:

# sed 's/y/Y/g' ahappychild.txt > ahappychild2.txt
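The effect of the g flag is easiest to see on a single line. The sketch below uses a made-up sample line (not the poem itself) and runs the same substitution with and without the flag.

```shell
# Hypothetical sample line used only for illustration.
line='happy yellow day'

# Without g: only the first match on the line is replaced.
first_only=$(printf '%s\n' "$line" | sed 's/y/Y/')

# With g: every match on the line is replaced.
every_match=$(printf '%s\n' "$line" | sed 's/y/Y/g')

printf '%s\n' "$first_only" "$every_match"
```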

Should you want to search for or replace a special character (such as /, \, &), you need to escape it, in the term or replacement strings, with a backslash.

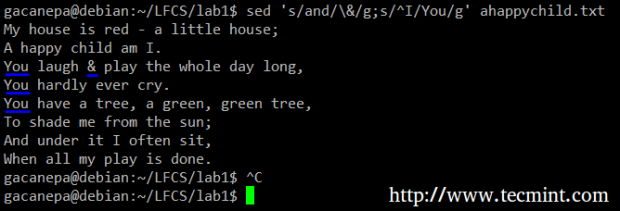

For example, we will replace the word and with an ampersand. At the same time, we will replace the word I with You when it is found at the beginning of a line.

# sed 's/and/\&/g;s/^I/You/g' ahappychild.txt

In the above command, the ^ (caret) is a regular expression anchor that represents the beginning of a line.

As you can see, we can combine two or more substitution commands (and use regular expressions inside them) by separating them with a semicolon and enclosing the set inside single quotes.
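The following sketch applies the same two substitutions to a short made-up text (an assumption, standing in for the poem) and also shows why the ampersand has to be escaped: an unescaped & in the replacement stands for the text that was matched.

```shell
# Hypothetical two-line sample text.
poem='I sing and dance
and I laugh'

# Escaped \& inserts a literal ampersand; ^I only matches at the start of a line.
escaped=$(printf '%s\n' "$poem" | sed 's/and/\&/g;s/^I/You/')

# Unescaped & is replaced by whatever the pattern matched.
unescaped=$(printf 'sing and dance\n' | sed 's/and/[&]/')

printf '%s\n' "$escaped" "$unescaped"
```

Note that the I in the second line is left alone because it is not at the beginning of the line.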

Another use of sed is showing (or deleting) a chosen portion of a file. In the following example, we will display the first 5 lines of /var/log/messages from Jun 8.

# sed -n '/^Jun 8/ p' /var/log/messages | sed -n 1,5p

Note that by default, sed prints every line. We can override this behaviour with the -n option and then tell sed to print (indicated by p) only the part of the file (or the pipe) that matches the pattern (Jun 8 at the beginning of line in the first case and lines 1 through 5 inclusive in the second case).
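Since /var/log/messages may not exist or be readable on every system, here is a small sketch that runs the same kind of command on a made-up log excerpt (the log lines are assumptions for illustration only).

```shell
# Hypothetical log excerpt.
log='Jun 8 10:00 service started
Jun 8 10:01 user logged in
Jun 9 09:00 service restarted
Jun 8 10:02 user logged out'

# -n suppresses the default output; p prints only lines matching the pattern.
from_jun8=$(printf '%s\n' "$log" | sed -n '/^Jun 8/p')

# A second sed keeps only lines 1 through 2 of what the first one printed.
first_two=$(printf '%s\n' "$log" | sed -n '/^Jun 8/p' | sed -n '1,2p')

printf '%s\n' "$first_two"
```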

Finally, while inspecting scripts or configuration files it can be useful to view the code itself and leave out comments. The following sed one-liner deletes (d) blank lines or those starting with # (in GNU sed, the \| sequence indicates a boolean OR between the two regular expressions).

# sed '/^#\|^$/d' apache2.conf
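As a quick check, the sketch below runs the one-liner on a made-up configuration snippet (an assumption, not the real apache2.conf). Because \| is a GNU extension to basic regular expressions, a second form using two separate -e expressions is shown as a more portable alternative.

```shell
# Hypothetical configuration snippet.
conf='# main settings

Listen 80
# name of the host
ServerName example'

# GNU sed: \| means OR between the two patterns.
stripped=$(printf '%s\n' "$conf" | sed '/^#\|^$/d')

# Same result with one delete command per pattern.
portable=$(printf '%s\n' "$conf" | sed -e '/^#/d' -e '/^$/d')

printf '%s\n' "$stripped"
```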

uniq Command

The uniq command allows us to report or remove duplicate lines in a file, writing to stdout by default. We must note that uniq does not detect repeated lines unless they are adjacent. Thus, uniq is commonly used along with a preceding sort (which is used to sort lines of text files). By default, sort uses the entire line as the sort key. To sort on a specific field (fields are separated by blanks by default), we need to use the -k option.
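The adjacency requirement is easy to overlook, so here is a minimal sketch with made-up input that counts the lines uniq leaves behind with and without a preceding sort, and then sorts on the second field with -k.

```shell
# Hypothetical input: two values, each repeated but never adjacent.
data='b
a
b
a'

# Without sort, no duplicates are adjacent, so uniq removes nothing.
lines_without_sort=$(printf '%s\n' "$data" | uniq | wc -l | tr -d ' ')

# After sorting, duplicates sit next to each other and are collapsed.
lines_with_sort=$(printf '%s\n' "$data" | sort | uniq | wc -l | tr -d ' ')

# -k2 sorts on the second blank-separated field instead of the whole line.
by_second_field=$(printf 'x 2\ny 1\n' | sort -k2)

printf '%s\n' "$lines_without_sort" "$lines_with_sort" "$by_second_field"
```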

Examples

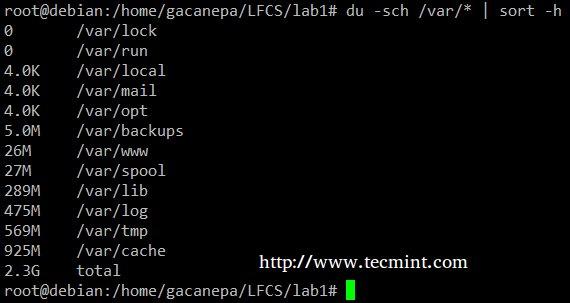

The du -sch /path/to/directory/* command returns the disk space usage per subdirectories and files within the specified directory in human-readable format (also shows a total per directory), and does not order the output by size, but by subdirectory and file name. We can use the following command to sort by size.

# du -sch /var/* | sort -h
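To see what the -h option of GNU sort does without scanning a real directory, the sketch below feeds it a few made-up du-style lines (size, a tab, then a name; all values are assumptions).

```shell
# Hypothetical du -sh style output: size, tab, name.
sizes=$(printf '2.0M\tb\n12K\ta\n1.1G\tc\n')

# -h compares human-readable sizes (K, M, G) rather than plain text.
sorted=$(printf '%s\n' "$sizes" | sort -h)

printf '%s\n' "$sorted"
```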

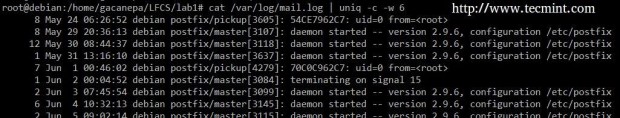

You can count the number of events in a log by date by telling uniq to perform the comparison using the first 6 characters (-w 6) of each line (where the date is specified), and prefixing each output line by the number of occurrences (-c) with the following command.

# cat /var/log/mail.log | uniq -c -w 6
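Here is a small sketch of the same idea on a made-up log excerpt (an assumption, since mail.log contents vary). The -w option is specific to GNU uniq, and awk is used only to trim the padding that uniq -c adds before each count.

```shell
# Hypothetical mail log lines; the first 6 characters hold the date.
log='Jun  8 10:00 message sent
Jun  8 11:00 message sent
Jun  9 09:00 message sent'

# -w 6 compares only the first 6 characters; -c prefixes each group with its count.
counts=$(printf '%s\n' "$log" | uniq -c -w 6 | awk '{print $1, $2, $3}')

printf '%s\n' "$counts"
```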

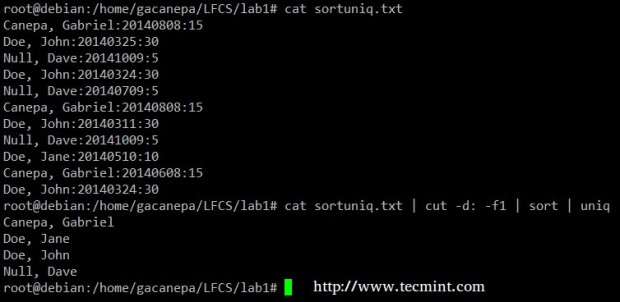

Finally, you can combine sort and uniq (as they usually are). Consider the following file with a list of donors, donation date, and amount. Suppose we want to know who the unique donors are. We will use the following command to cut the first field (fields are delimited by a colon), sort by name, and remove duplicate lines.

# cat sortuniq.txt | cut -d: -f1 | sort | uniq
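The sketch below reproduces the pipeline on made-up donor records (the names, dates, and amounts are assumptions) and adds wc -l to turn the list of unique donors into a count.

```shell
# Hypothetical records in the form name:date:amount.
donors='John:2014-01-10:100
Anna:2014-02-01:50
John:2014-03-05:25'

# List each donor once.
unique_names=$(printf '%s\n' "$donors" | cut -d: -f1 | sort | uniq)

# Count them; tr strips any padding some wc implementations add.
donor_count=$(printf '%s\n' "$donors" | cut -d: -f1 | sort | uniq | wc -l | tr -d ' ')

printf '%s\n' "$unique_names" "$donor_count"
```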


grep Command

grep searches text files (or command output) for occurrences of a specified regular expression and outputs any line containing a match to standard output.

Examples

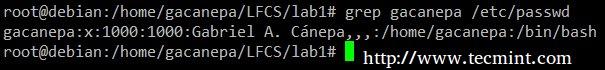

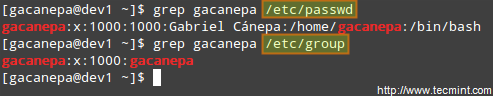

Display the information from /etc/passwd for user gacanepa, ignoring case.

# grep -i gacanepa /etc/passwd

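Show all the contents of /etc whose name contains rc followed by any single digit (the pattern is quoted so that the shell does not expand it as a filename pattern before grep sees it).

# ls -l /etc | grep 'rc[0-9]'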
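As a self-contained illustration, the sketch below runs grep against a made-up list of names rather than the real /etc (the names are assumptions). Working on bare names also makes it possible to anchor the pattern with ^ so that only names that begin with rc and a digit match.

```shell
# Hypothetical directory entries, one name per line.
names='rc0.d
rc.local
rcS.d
rc5.d
src1'

# Quoting keeps the shell from treating rc[0-9] as a filename pattern.
rc_dirs=$(printf '%s\n' "$names" | grep '^rc[0-9]')

# -i ignores case, so Gacanepa and gacanepa both match.
user_line=$(printf 'Gacanepa:x:1000:1000\n' | grep -i gacanepa)

printf '%s\n' "$rc_dirs" "$user_line"
```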


tr Command Usage

The tr command can be used to translate (change) or delete characters from stdin, and write the result to stdout.

Examples

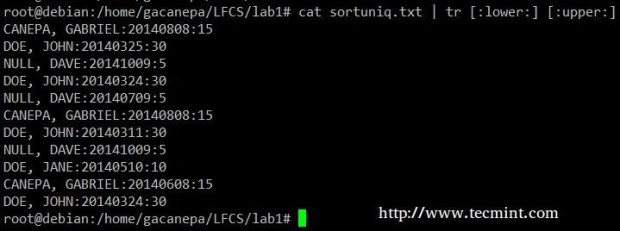

Change all lowercase to uppercase in sortuniq.txt file.

# cat sortuniq.txt | tr '[:lower:]' '[:upper:]'

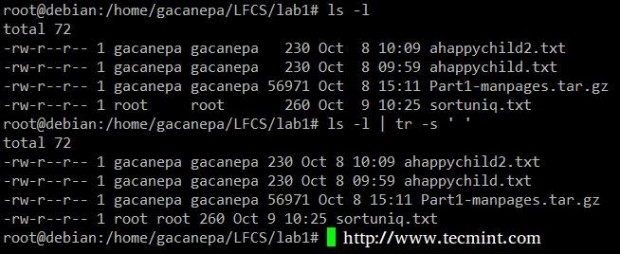

Squeeze the delimiter in the output of ls -l to only one space.

# ls -l | tr -s ' '
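Both uses of tr can be tried on short made-up strings (assumptions for illustration). The character classes are quoted so that the shell passes them to tr unchanged.

```shell
# Translate lowercase letters to uppercase.
upper=$(printf 'john:2014\n' | tr '[:lower:]' '[:upper:]')

# -s squeezes each run of the given character into a single occurrence.
squeezed=$(printf 'a   b     c\n' | tr -s ' ')

printf '%s\n' "$upper" "$squeezed"
```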

cut Command Usage

The cut command extracts portions of input lines (from stdin or files) and displays the result on standard output, based on number of bytes (-b option), characters (-c), or fields (-f). In this last case (based on fields), the default field separator is a tab, but a different delimiter can be specified by using the -d option.

Examples

Extract the user accounts and the default shells assigned to them from /etc/passwd (the -d option allows us to specify the field delimiter, and the -f switch indicates which fields will be extracted).

# cat /etc/passwd | cut -d: -f1,7
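The sketch below applies the same cut options to two made-up passwd-style lines (assumptions, so that the result does not depend on the accounts present on a given system).

```shell
# Hypothetical /etc/passwd entries.
passwd_sample='root:x:0:0:root:/root:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin'

# Field 1 is the account name and field 7 is the login shell.
shells=$(printf '%s\n' "$passwd_sample" | cut -d: -f1,7)

printf '%s\n' "$shells"
```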

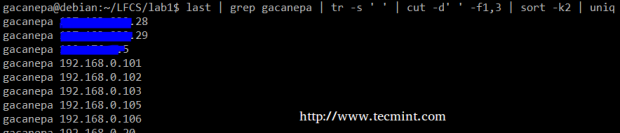

Summing up, we will create a text stream consisting of the first and third non-blank fields of the output of the last command. We will use grep as a first filter to check for sessions of user gacanepa, then squeeze delimiters to only one space (tr -s ' '). Next, we’ll extract the first and third fields with cut, and finally sort by the second field (IP addresses in this case), showing only unique lines.

# last | grep gacanepa | tr -s ' ' | cut -d' ' -f1,3 | sort -k2 | uniq

The above command shows how multiple commands and pipes can be combined so as to obtain filtered data according to our desires. Feel free to also run it by parts, to help you see the output that is pipelined from one command to the next (this can be a great learning experience, by the way!).
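Running the pipeline by parts could look like the sketch below. Because the output of last differs on every machine, it uses a made-up excerpt (the terminals, addresses, and times are assumptions) and stores the result of each stage in its own variable.

```shell
# Hypothetical output of the last command.
last_sample='gacanepa pts/0        192.168.0.104    Mon Jun  9 10:00   still logged in
root     tty1                          Mon Jun  9 09:00 - 09:30  (00:30)
gacanepa pts/1        192.168.0.102    Sun Jun  8 20:00 - 21:00  (01:00)
gacanepa pts/2        192.168.0.104    Sat Jun  7 20:00 - 21:00  (01:00)'

# Stage 1: keep only the sessions of one user.
step1=$(printf '%s\n' "$last_sample" | grep gacanepa)
# Stage 2: squeeze runs of spaces so that cut sees single delimiters.
step2=$(printf '%s\n' "$step1" | tr -s ' ')
# Stage 3: keep the user name and the address.
step3=$(printf '%s\n' "$step2" | cut -d' ' -f1,3)
# Stage 4: sort by address and drop repeated lines.
step4=$(printf '%s\n' "$step3" | sort -k2 | uniq)

printf '%s\n' "$step4"
```

Printing step1 through step3 as well shows exactly what each command hands to the next one.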

Summary

Although the examples in this tutorial may not seem very useful at first sight, they are a nice starting point to begin experimenting with commands that are used to create, edit, and manipulate files from the Linux command line. Feel free to leave your questions and comments below – they will be much appreciated!


LFCS: How to Install and Use vi/vim as a Full Text Editor – Part 2

A couple of months ago, the Linux Foundation launched the LFCS (Linux Foundation Certified Sysadmin) certification in order to help individuals from all over the world to verify they are capable of doing basic to intermediate system administration tasks on Linux systems: system support, first-hand troubleshooting and maintenance, plus intelligent decision-making to know when it’s time to raise issues to upper support teams.


This post is Part 2 of a 10-tutorial series. In this part, we will cover the basic file editing operations and the modes of the vi/m editor, both of which are required for the LFCS certification exam.

Perform Basic File Editing Operations Using vi/m

Vi was the first full-screen text editor written for Unix. Although it was intended to be small and simple, it can be a bit challenging for people used exclusively to GUI text editors, such as Notepad++ or gedit, to name a few examples.

To use Vi, we must first understand the 3 modes in which this powerful program operates, in order to begin learning later about its powerful text-editing procedures.

Please note that most modern Linux distributions ship with a variant of vi known as vim (“Vi improved”), which supports more features than the original vi does. For that reason, throughout this tutorial we will use vi and vim interchangeably.

If your distribution does not have vim installed, you can install it as follows.

- Ubuntu and derivatives: aptitude update && aptitude install vim

- Red Hat-based distributions: yum update && yum install vim

- openSUSE: zypper update && zypper install vim

Why should I want to learn vi?

There are at least 2 good reasons to learn vi.

1. vi is always available (no matter what distribution you’re using) since it is required by POSIX.

2. vi does not consume a considerable amount of system resources and allows us to perform any imaginable tasks without lifting our fingers from the keyboard.

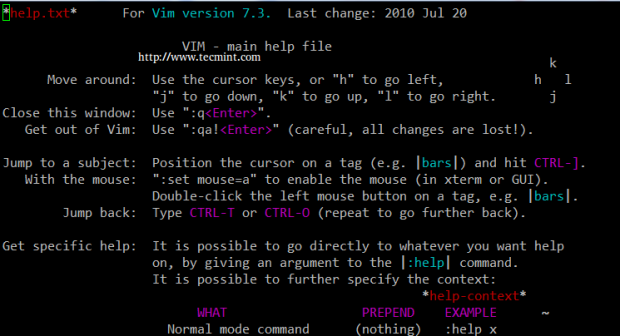

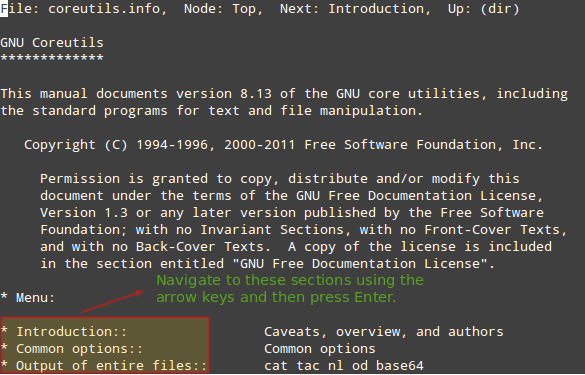

In addition, vi has a very extensive built-in manual, which can be launched using the :help command right after the program is started. This built-in manual contains more information than vi/m’s man page.

Launching vi

To launch vi, type vi in your command prompt.

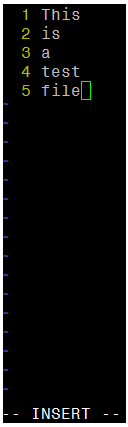

Then press i to enter Insert mode, and you can start typing. Another way to launch vi/m is:

# vi filename

This will open a new buffer (more on buffers later) named filename, which you can later save to disk.

Understanding Vi modes

1. In command mode, vi allows the user to navigate around the file and enter vi commands, which are brief, case-sensitive combinations of one or more letters. Almost all of them can be prefixed with a number to repeat the command that number of times.

For example, yy (or Y) copies the entire current line, whereas 3yy (or 3Y) copies the entire current line along with the two next lines (3 lines in total). We can always enter command mode (regardless of the mode we’re working in) by pressing the Esc key. The fact that in command mode the keyboard keys are interpreted as commands instead of text tends to be confusing to beginners.

2. In ex mode, we can manipulate files (including saving a current file and running outside programs). To enter this mode, we must type a colon (:) from command mode, directly followed by the name of the ex-mode command that needs to be used. After that, vi returns automatically to command mode.

3. In insert mode (the letter i is commonly used to enter this mode), we simply enter text. Most keystrokes result in text appearing on the screen (one important exception is the Esc key, which exits insert mode and returns to command mode).

Vi Commands

The following table shows a list of commonly used vi commands. File editing commands can be forced by appending an exclamation mark to the command (for example, :q! forces quitting without saving).

| Key command | Description |
| --- | --- |
| h or left arrow | Go one character to the left |
| j or down arrow | Go down one line |
| k or up arrow | Go up one line |
| l (lowercase L) or right arrow | Go one character to the right |
| H | Go to the top of the screen |
| L | Go to the bottom of the screen |
| G | Go to the end of the file |
| w | Move one word to the right |
| b | Move one word to the left |
| 0 (zero) | Go to the beginning of the current line |
| ^ | Go to the first nonblank character on the current line |
| $ | Go to the end of the current line |
| Ctrl-B | Go back one screen |
| Ctrl-F | Go forward one screen |
| i | Insert at the current cursor position |
| I (uppercase i) | Insert at the beginning of the current line |
| J (uppercase j) | Join current line with the next one (move next line up) |
| a | Append after the current cursor position |
| o (lowercase O) | Creates a blank line after the current line |
| O (uppercase o) | Creates a blank line before the current line |
| r | Replace the character at the current cursor position |
| R | Overwrite at the current cursor position |
| x | Delete the character at the current cursor position |
| X | Delete the character immediately before (to the left of) the current cursor position |
| dd | Cut (for later pasting) the entire current line |
| D | Cut from the current cursor position to the end of the line (this command is equivalent to d$) |
| yX | Given a movement command X, copy (yank) the corresponding characters, words, or lines from the current cursor position |
| yy or Y | Yank (copy) the entire current line |
| p | Paste after (next line) the current cursor position |
| P | Paste before (previous line) the current cursor position |
| . (period) | Repeat the last command |
| u | Undo the last command |
| U | Undo all recent changes on the current line. This works as long as the cursor has not left the line. |
| n | Find the next match in a search |
| N | Find the previous match in a search |
| :n | Next file; when multiple files are specified for editing, this command loads the next file. |
| :e file | Load file in place of the current file. |
| :r file | Insert the contents of file after (next line) the current cursor position |
| :q | Quit. This fails if there are unsaved changes; use :q! to discard them. |
| :w file | Write the current buffer to file. To append to an existing file, use :w >> file. |
| :wq | Write the contents of the current file and quit. Similar to :x and ZZ, which write only if the buffer has been modified. |
| :r! command | Execute command and insert output after (next line) the current cursor position. |

Vi Options

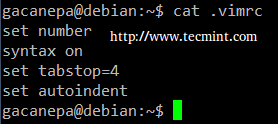

The following options can come in handy while running vim (we need to add them in our ~/.vimrc file).

# echo set number >> ~/.vimrc

# echo syntax on >> ~/.vimrc

# echo set tabstop=4 >> ~/.vimrc

# echo set autoindent >> ~/.vimrc

- set number shows line numbers when vi opens an existing or a new file.

- syntax on turns on syntax highlighting (for multiple file extensions) in order to make code and config files more readable.

- set tabstop=4 sets the tab size to 4 spaces (default value is 8).

- set autoindent carries over previous indent to the next line.

Search and replace

vi has the ability to move the cursor to a certain location (on a single line or over an entire file) based on searches. It can also perform text replacements with or without confirmation from the user.

a). Searching within a line: the f command searches a line and moves the cursor to the next occurrence of a specified character in the current line.

For example, the command fh would move the cursor to the next instance of the letter h within the current line. Note that neither the letter f nor the character you’re searching for will appear anywhere on your screen; the cursor simply jumps to that character as soon as you type it, without the need to press Enter.

For example, this is what I get after pressing f4 in command mode.

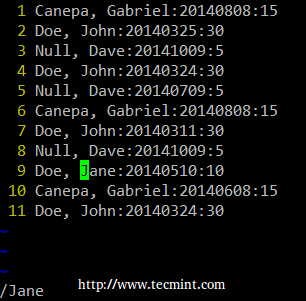

b). Searching an entire file: use the / command, followed by the word or phrase to be searched for. A search may be repeated in the same direction with the n command, or in the opposite direction with the N command. This is the result of typing /Jane in command mode.

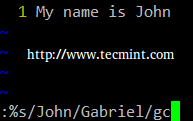

c). vi uses a command (similar to sed’s) to perform substitution operations over a range of lines or an entire file. To change the word “old” to “young” for the entire file, we must enter the following command.

:%s/old/young/g

Note the colon at the beginning of the command.

The colon (:) starts the ex command, s in this case (for substitution), % is a shortcut meaning from the first line to the last line (the range can also be specified as n,m which means “from line n to line m”), old is the search pattern, while young is the replacement text, and g indicates that the substitution should be performed on every occurrence of the search string in the file.
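Because the syntax mirrors sed’s, the effect of such a substitution can be previewed outside the editor. The sketch below is only an analogy under that assumption: it uses sed on a made-up text to mimic :%s/old/young/g (all lines) and :2,3s/old/young/g (lines 2 through 3 only).

```shell
# Hypothetical three-line text.
text='old car
old house, old barn
old tree'

# No address: every line is processed, like the % range in vi.
whole_file=$(printf '%s\n' "$text" | sed 's/old/young/g')

# An n,m address restricts the substitution to that range of lines.
lines_2_to_3=$(printf '%s\n' "$text" | sed '2,3s/old/young/g')

printf '%s\n' "$lines_2_to_3"
```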

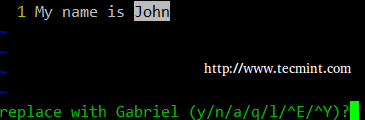

Alternatively, a c can be added to the end of the command to ask for confirmation before performing any substitution.

:%s/old/young/gc

Before replacing the original text with the new one, vi/m will prompt us for confirmation and offer the following choices:

- y: perform the substitution (yes)

- n: skip this occurrence and go to the next one (no)

- a: perform the substitution in this and all subsequent instances of the pattern.

- q or Esc: quit substituting.

- l (lowercase L): perform this substitution and quit (last).

- Ctrl-e, Ctrl-y: Scroll down and up, respectively, to view the context of the proposed substitution.

Editing Multiple Files at a Time

Let’s type vim file1 file2 file3 in our command prompt.

# vim file1 file2 file3

First, vim will open file1. To switch to the next file (file2), we need to use the :n command. When we want to return to the previous file, :N will do the job.

To switch directly from file1 to file3, we can proceed as follows.

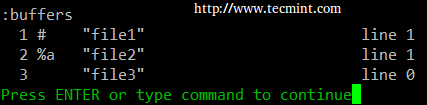

a). The :buffers command will show a list of the files currently being edited.

:buffers

b). The command :buffer 3 (without the s at the end) will open file3 for editing.

In the image above, a pound sign (#) indicates that the file is currently open but in the background, while %a marks the file that is currently being edited. On the other hand, a blank space after the file number (3 in the above example) indicates that the file has not yet been opened.

Temporary vi buffers

To copy a couple of consecutive lines (let’s say 4, for example) into a temporary buffer named a (not associated with a file) and place those lines in another part of the file later in the current vi session, we need to…

1. Press the ESC key to be sure we are in vi Command mode.

2. Place the cursor on the first line of the text we wish to copy.

3. Type "a4yy (a double quote followed by a4yy) to copy the current line, along with the 3 subsequent lines, into a buffer named a. We can continue editing our file – we do not need to insert the copied lines immediately.

4. When we reach the location for the copied lines, use "a before the p or P commands to insert the lines copied into the buffer named a:

- Type "ap to insert the lines copied into buffer a after the current line on which the cursor is resting.

- Type "aP to insert the lines copied into buffer a before the current line.

If we wish, we can repeat the above steps to insert the contents of buffer a in multiple places in our file. A temporary buffer, such as the one in this section, is discarded when the current window is closed.

Summary

As we have seen, vi/m is a powerful and versatile text editor for the CLI. Feel free to share your own tricks and comments below.

Reference Links

Update: If you want to extend your vi editor skills, I would suggest reading the following two guides, which cover some useful vi editor tips and tricks.

Part 1: Learn Useful ‘Vi/Vim’ Editor Tips and Tricks to Enhance Your Skills

Part 2: 8 Interesting ‘Vi/Vim’ Editor Tips and Tricks

LFCS: How to Archive/Compress Files & Directories, Setting File Attributes and Finding Files in Linux – Part 3

Recently, the Linux Foundation started the LFCS (Linux Foundation Certified Sysadmin) certification, a brand new program whose purpose is allowing individuals from all corners of the globe to have access to an exam which, if passed, certifies that the person is knowledgeable in performing basic to intermediate system administration tasks on Linux systems. This includes supporting already running systems and services, along with first-level troubleshooting and analysis, plus the ability to decide when to escalate issues to engineering teams.


This post is Part 3 of a 10-tutorial series. In this part, we will cover how to archive and compress files and directories, set file attributes, and find files on the filesystem, all of which are required for the LFCS certification exam.

Archiving and Compression Tools

A file archiving tool groups a set of files into a single standalone file that we can back up to several types of media, transfer across a network, or send via email. The most frequently used archiving utility in Linux is tar. When an archiving utility is used along with a compression tool, it reduces the disk space needed to store the same files and information.

The tar utility

tar bundles a group of files together into a single archive (commonly called a tar file or tarball). The name originally stood for tape archiver, but we must note that we can use this tool to archive data to any kind of writeable media (not only to tapes). Tar is normally used with a compression tool such as gzip, bzip2, or xz to produce a compressed tarball.

Basic syntax:

# tar [options] [pathname ...]

Where … represents the expression used to specify which files should be acted upon.

Most commonly used tar commands

| Long option | Abbreviation | Description |
| --- | --- | --- |
| --create | c | Creates a tar archive |
| --concatenate | A | Appends tar files to an archive |
| --append | r | Appends files to the end of an archive |
| --update | u | Appends files newer than the copy in the archive |
| --diff or --compare | d | Finds differences between archive and file system |
| --file archive | f | Uses archive file or device archive |
| --list | t | Lists the contents of a tarball |
| --extract or --get | x | Extracts files from an archive |

Normally used operation modifiers

| Long option | Abbreviation | Description |
| --- | --- | --- |
| --directory dir | C | Changes to directory dir before performing operations |
| --same-permissions | p | Preserves original permissions |
| --verbose | v | Lists all files read or extracted. When this flag is used along with --list, the file sizes, ownership, and time stamps are displayed. |
| --verify | W | Verifies the archive after writing it |
| --exclude=pattern | (none) | Excludes files matching pattern |
| --exclude-from=file | X | Excludes files matching the patterns listed in file |
| --gzip or --gunzip | z | Processes an archive through gzip |
| --bzip2 | j | Processes an archive through bzip2 |
| --xz | J | Processes an archive through xz |

Gzip is the oldest compression tool and provides the least compression, while bzip2 provides improved compression. xz is the newest and (usually) provides the best compression. The advantage of better compression comes at a price: the time it takes to complete the operation, and the system resources used during the process.

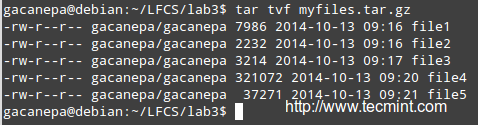

Normally, tar files compressed with these utilities have .gz, .bz2, or .xz extensions, respectively. In the following examples we will be using these files: file1, file2, file3, file4, and file5.

Grouping and compressing with gzip, bzip2 and xz

Group all the files in the current working directory and compress the resulting bundle with gzip, bzip2, and xz (please note the use of a shell pattern to specify which files should be included in the bundle; this prevents the archiving tool from including the tarballs created in previous steps).

# tar czf myfiles.tar.gz file[0-9]

# tar cjf myfiles.tar.bz2 file[0-9]

# tar cJf myfiles.tar.xz file[0-9]
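The sketch below tries this out in a scratch directory (the directory and the second archive name again.tar.gz are assumptions for illustration). It uses only gzip so that it runs where bzip2 or xz are not installed; swapping z for j or J should select the other compressors.

```shell
# Create five small sample files in a throwaway directory.
workdir=$(mktemp -d)
(
  cd "$workdir" || exit 1
  for n in 1 2 3 4 5; do echo "content $n" > "file$n"; done

  # file[0-9] matches file1 to file5 only.
  tar czf myfiles.tar.gz file[0-9]

  # A second run with the same pattern does not pick up the first tarball.
  tar czf again.tar.gz file[0-9]
)

# t lists the members of the archive without extracting them.
listing=$(tar tzf "$workdir/again.tar.gz")
printf '%s\n' "$listing"
```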

Listing the contents of a tarball and updating / appending files to the bundle

List the contents of a tarball and display the same information as a long directory listing. Note that update or append operations cannot be applied to compressed files directly (if you need to update or append a file to a compressed tarball, you need to uncompress the tar file and update / append to it, then compress again).

# tar tvf [tarball]

Run any of the following commands:

# gzip -d myfiles.tar.gz [#1]

# bzip2 -d myfiles.tar.bz2 [#2]

# xz -d myfiles.tar.xz [#3]

Then

# tar --delete --file myfiles.tar file4 (deletes the file inside the tarball)

# tar --update --file myfiles.tar file4 (adds the updated file)

and

# gzip myfiles.tar [ if you choose #1 above ]

# bzip2 myfiles.tar [ if you choose #2 above ]

# xz myfiles.tar [ if you choose #3 above ]

Finally,

# tar tvf [tarball] #again

and compare the modification date and time of file4 with the same information as shown earlier.
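The whole cycle can be rehearsed safely in a scratch directory, as in the sketch below. It assumes GNU tar (the --delete operation is a GNU extension) and gzip, and the file contents are made up for illustration.

```shell
workdir=$(mktemp -d)
(
  cd "$workdir" || exit 1
  echo "old" > file4
  echo "other" > file5
  tar czf myfiles.tar.gz file4 file5

  gzip -d myfiles.tar.gz                    # back to a plain myfiles.tar
  tar --delete --file myfiles.tar file4     # remove the stale copy
  echo "new" > file4                        # change the file on disk
  tar --update --file myfiles.tar file4     # add the current version
  gzip myfiles.tar                          # compress again
)

# -O extracts a member to standard output instead of writing it to disk.
file4_in_archive=$(tar xzOf "$workdir/myfiles.tar.gz" file4)
members=$(tar tzf "$workdir/myfiles.tar.gz" | sort | tr '\n' ' ')
printf '%s\n' "$file4_in_archive" "$members"
```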

Excluding file types

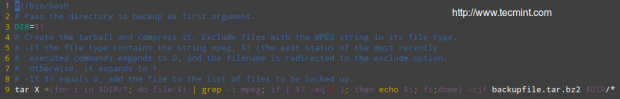

Suppose you want to perform a backup of users’ home directories. A good sysadmin practice (which may also be specified by company policies) would be to exclude all video and audio files from backups.

Maybe your first approach would be to exclude from the backup all files with an .mp3 or .mp4 extension (or other extensions). But if a clever user changes the extension to .txt or .bkp, that approach won’t do you much good. In order to detect an audio or video file, you need to check its file type with file. The following shell script will do the job.

#!/bin/bash
# Pass the directory to back up as the first argument.
DIR=$1
# Create the tarball and compress it with bzip2, excluding any file whose
# type (as reported by the file command) contains the string mpeg.
# grep -q prints nothing and exits with status 0 when it finds a match; in
# that case the filename is echoed into the exclude list that tar reads via -X.
# Files that do not match are left out of the list and are backed up.
tar -X <(for i in "$DIR"/*; do if file "$i" | grep -qi mpeg; then echo "$i"; fi; done) -cjf backupfile.tar.bz2 "$DIR"/*

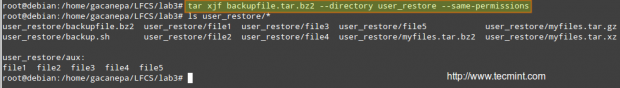

Restoring backups with tar preserving permissions

You can then restore the backup to the original user’s home directory (user_restore in this example), preserving permissions, with the following command.

# tar xjf backupfile.tar.bz2 --directory user_restore --same-permissions


Using find Command to Search for Files

The find command is used to search recursively through directory trees for files or directories that match certain characteristics, and can then either print the matching files or directories or perform other operations on the matches.

Normally, we will search by name, owner, group, type, permissions, date, and size.

Basic syntax:

# find [directory_to_search] [expression]

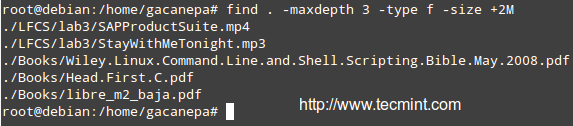

Finding files recursively according to Size

Find all files (-type f) in the current directory (.) and up to 2 subdirectory levels below (-maxdepth 3 includes the current working directory and 2 levels down) whose size (-size) is greater than 2 MB.

# find . -maxdepth 3 -type f -size +2M
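To check how -maxdepth and -size interact, the sketch below builds a small directory tree with made-up names (assumptions) containing 3 MiB files at different depths, and then runs the same find command from its top.

```shell
root=$(mktemp -d)
mkdir -p "$root/a/b/c"

# Three 3 MiB files at depths 1, 3, and 4, plus one tiny file.
dd if=/dev/zero of="$root/big.bin" bs=1024 count=3072 2>/dev/null
dd if=/dev/zero of="$root/a/b/big2.bin" bs=1024 count=3072 2>/dev/null
dd if=/dev/zero of="$root/a/b/c/too_deep.bin" bs=1024 count=3072 2>/dev/null
echo "small" > "$root/a/small.txt"

# too_deep.bin sits below the depth limit and small.txt is under the size limit.
found=$(cd "$root" && find . -maxdepth 3 -type f -size +2M | sort)
printf '%s\n' "$found"
```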

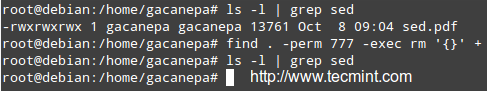

Finding and deleting files that match certain criteria

Files with 777 permissions are sometimes considered an open door to external attackers. Either way, it is not safe to let anyone do anything with files. We will take a rather aggressive approach and delete them! ('{}' + is used to “collect” the results of the search).

# find /home/user -perm 777 -exec rm '{}' +
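Since this command deletes files, it is worth rehearsing in a sandbox first. The sketch below uses a throwaway directory with made-up file names (assumptions), previews the matches with -print before deleting anything, and adds -type f, which is not in the command above, so that only regular files are affected.

```shell
sandbox=$(mktemp -d)
touch "$sandbox/open.sh" "$sandbox/safe.txt"
chmod 777 "$sandbox/open.sh"
chmod 644 "$sandbox/safe.txt"

# Dry run: list what would be removed.
candidates=$(find "$sandbox" -type f -perm 777 -print)

# Real run: '{}' + passes all matches to a single rm invocation.
find "$sandbox" -type f -perm 777 -exec rm -- '{}' +

remaining=$(ls "$sandbox")
printf '%s\n' "$candidates" "$remaining"
```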

Finding files per atime or mtime

Search for configuration files in /etc that have been accessed (-atime) or modified (-mtime) more than 6 months ago (+180), less than 6 months ago (-180), or exactly 6 months ago (180).

Adjust the test in the following command as needed; as written, it lists files modified less than 180 days ago:

# find /etc -iname "*.conf" -mtime -180 -print
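The difference between -180 and +180 can be verified with two made-up files (assumptions) in a scratch directory, one freshly created and one whose modification time is set far in the past with touch -t.

```shell
confdir=$(mktemp -d)
touch "$confdir/recent.conf"                     # modified just now
touch -t 200001010000 "$confdir/ancient.conf"    # modified on Jan 1, 2000

# -mtime -180: modified less than 180 days ago.
recent=$(find "$confdir" -iname '*.conf' -mtime -180 -print)

# -mtime +180: modified more than 180 days ago.
old=$(find "$confdir" -iname '*.conf' -mtime +180 -print)

printf '%s\n' "$recent" "$old"
```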


File Permissions and Basic Attributes

The first 10 characters in the output of ls -l are the file attributes. The first of these characters is used to indicate the file type:

- - (hyphen): a regular file

- d : a directory

- l : a symbolic link

- c : a character device (which treats data as a stream of bytes, e.g. a terminal)

- b : a block device (which handles data in blocks, e.g. storage devices)

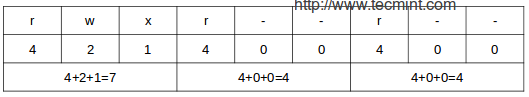

The next nine characters of the file attributes are called the file mode and represent the read (r), write (w), and execute (x) permissions of the file’s owner, the file’s group owner, and the rest of the users (commonly referred to as “the world”).

Whereas the read permission on a file allows it to be opened and read, the same permission on a directory allows its contents to be listed if the execute permission is also set. In addition, the execute permission on a file allows it to be handled as a program and run, while on a directory it allows us to cd into it.

File permissions are changed with the chmod command, whose basic syntax is as follows:

# chmod [new_mode] file

Where new_mode is either an octal number or an expression that specifies the new permissions.

The octal number can be converted from its binary equivalent, which is calculated from the desired file permissions for the owner, the group, and the world, as follows:

The presence of a certain permission equals a power of 2 (r=2^2=4, w=2^1=2, x=2^0=1), while its absence equates to 0. For example, the mode rwxr--r-- corresponds to binary 111 100 100, which is 744 in octal.

To set the file’s permissions as above in octal form, type:

# chmod 744 myfile

You can also set a file’s mode using an expression that indicates the owner’s rights with the letter u, the group owner’s rights with the letter g, and the rest with o. All of these “individuals” can be represented at the same time with the letter a. Permissions are granted (or revoked) with the + or - signs, respectively.

Revoking execute permission for a shell script to all users

As we explained earlier, we can revoke a certain permission by prepending it with the minus sign and indicating whether it needs to be revoked for the owner, the group owner, or all users. The one-liner below can be interpreted as follows: Change mode for all (a) users, revoke (-) execute permission (x).

# chmod a-x backup.sh

Granting read, write, and execute permissions for a file to the owner and group owner, and read permissions for the world.

When we use a 3-digit octal number to set permissions for a file, the first digit indicates the permissions for the owner, the second digit for the group owner and the third digit for everyone else:

- Owner: (r=2^2 + w=2^1 + x=2^0 = 7)

- Group owner: (r=2^2 + w=2^1 + x=2^0 = 7)

- World: (r=2^2 + w=0 + x=0 = 4)

# chmod 774 myfile
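The arithmetic and both chmod notations can be tried on a scratch file, as in the sketch below. It assumes GNU stat (the -c '%a' format prints the mode in octal), and the temporary file stands in for myfile.

```shell
f=$(mktemp)

# Add up the powers of 2 for each class: r=4, w=2, x=1.
owner=$((4 + 2 + 1))
group=$((4 + 2 + 1))
world=$((4 + 0 + 0))

chmod "$owner$group$world" "$f"
octal_mode=$(stat -c '%a' "$f")

# The same idea in symbolic form: rwx for the owner, r for group and world.
chmod u=rwx,g=r,o=r "$f"
symbolic_mode=$(stat -c '%a' "$f")

# Revoke execute permission for all users.
chmod a-x "$f"
after_revoke=$(stat -c '%a' "$f")

printf '%s\n' "$octal_mode" "$symbolic_mode" "$after_revoke"
```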

In time, and with practice, you will be able to decide which method to change a file mode works best for you in each case. A long directory listing also shows the file’s owner and its group owner (which serve as a rudimentary yet effective access control to files in a system).

File ownership is changed with the chown command. The owner and the group owner can be changed at the same time or separately. Its basic syntax is as follows:

# chown user:group file

Where at least one of user or group needs to be present.

A Few Examples

Changing the owner of a file to a certain user.

# chown gacanepa sent

Changing the owner and group of a file to a specific user:group pair.

# chown gacanepa:gacanepa TestFile

Changing only the group owner of a file to a certain group. Note the colon before the group’s name.

# chown :gacanepa email_body.txt

Conclusion

As a sysadmin, you need to know how to create and restore backups, how to find files in your system and change their attributes, along with a few tricks that can make your life easier and will prevent you from running into future issues.

I hope that the tips provided in the present article will help you to achieve that goal. Feel free to add your own tips and ideas in the comments section for the benefit of the community. Thanks in advance!


LFCS: Partitioning Storage Devices, Formatting Filesystems and Configuring Swap Partition – Part 4

Last August, the Linux Foundation launched the LFCS certification (Linux Foundation Certified Sysadmin), a shiny chance for system administrators to show, through a performance-based exam, that they can perform overall operational support of Linux systems: system support, first-level diagnosing and monitoring, plus issue escalation – if needed – to other support teams.

Please be aware that Linux Foundation certifications are precise, totally based on performance and available through an online portal anytime, anywhere. Thus, you no longer have to travel to an examination center to get the certifications you need to establish your skills and expertise.


This post is Part 4 of a 10-tutorial series. In this part, we will cover partitioning storage devices, formatting filesystems, and configuring swap partitions, all of which are required for the LFCS certification exam.

Partitioning Storage Devices

Partitioning is a means to divide a single hard drive into one or more parts or “slices” called partitions. A partition is a section on a drive that is treated as an independent disk and which contains a single type of file system, whereas a partition table is an index that relates those physical sections of the hard drive to partition identifications.

In Linux, the traditional tool for managing MBR partitions (up to ~2009) in IBM PC compatible systems is fdisk. For GPT partitions (~2010 and later) we will use gdisk. Each of these tools can be invoked by typing its name followed by a device name (such as /dev/sdb).

Managing MBR Partitions with fdisk

We will cover fdisk first.

# fdisk /dev/sdb

A prompt appears asking for the next operation. If you are unsure, you can press the ‘m‘ key to display the help contents.

In the above image, the most frequently used options are highlighted. At any moment, you can press ‘p‘ to display the current partition table.

The Id column shows the partition type (or partition id) that has been assigned by fdisk to the partition. A partition type serves as an indicator of the file system the partition contains or, in simple words, the way data will be accessed in that partition.

Please note that a comprehensive study of each partition type is out of the scope of this tutorial – as this series is focused on the LFCS exam, which is performance-based.

Some of the options used by fdisk are as follows:

You can list all the partition types that can be managed by fdisk by pressing the ‘l‘ option (lowercase l).

Press ‘d‘ to delete an existing partition. If more than one partition is found in the drive, you will be asked which one should be deleted.

Enter the corresponding number, and then press ‘w‘ (write modifications to partition table) to apply changes.

In the following example, we will delete /dev/sdb2, and then print (p) the partition table to verify the modifications.

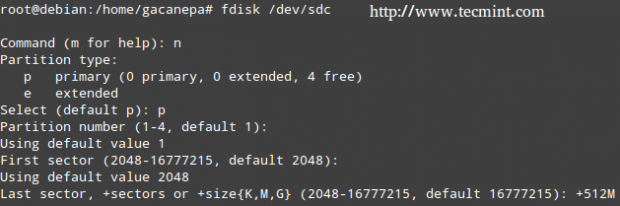

Press ‘n‘ to create a new partition, then ‘p‘ to indicate it will be a primary partition. Finally, you can accept all the default values (in which case the partition will occupy all the available space), or specify a size as follows.

If the partition Id that fdisk chose is not the right one for our setup, we can press ‘t‘ to change it.

When you’re done setting up the partitions, press ‘w‘ to commit the changes to disk.

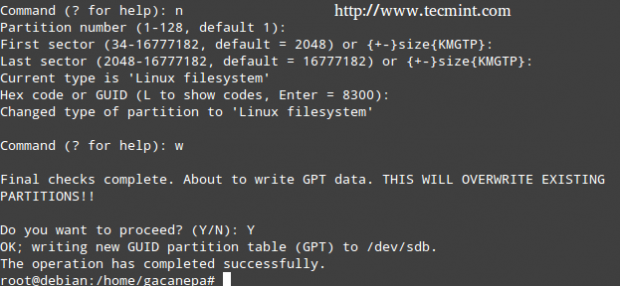

Managing GPT Partitions with gdisk

In the following example, we will use /dev/sdb.

# gdisk /dev/sdb

We must note that gdisk can be used either to create MBR or GPT partitions.

The advantage of using GPT partitioning is that we can create up to 128 partitions in the same disk whose size can be up to the order of petabytes, whereas the maximum size for MBR partitions is 2 TB.
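The 2 TB figure for MBR follows from simple arithmetic. The sketch below assumes the classic MBR layout of 32-bit sector addresses and 512-byte sectors (drives with larger sectors change the result); the function name mbr_limit_tib is hypothetical.

```shell
# Hypothetical helper: largest size addressable with 32-bit sector numbers.
mbr_limit_tib() {
    sector_size=512                            # bytes per sector (assumed)
    max_sectors=$((1 << 32))                   # 2^32 addressable sectors
    max_bytes=$((max_sectors * sector_size))   # 2^41 bytes
    echo $((max_bytes / 1024 / 1024 / 1024 / 1024))
}

echo "MBR limit: $(mbr_limit_tib) TiB"   # prints MBR limit: 2 TiB
```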

Note that most of the options in fdisk are the same in gdisk. For that reason, we will not go into detail about them, but here’s a screenshot of the process.

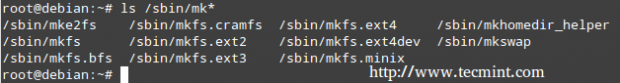

Formatting Filesystems

Once we have created all the necessary partitions, we must create filesystems. To list the filesystem creation tools (and therefore the filesystems you can create) available on your system, run:

# ls /sbin/mk*

The type of filesystem that you should choose depends on your requirements. You should consider the pros and cons of each filesystem and its own set of features. Two important attributes to look for in a filesystem are.

- Journaling support, which allows for faster data recovery in the event of a system crash.

- Security Enhanced Linux (SELinux) support, as per the project wiki, “a security enhancement to Linux which allows users and administrators more control over access control”.

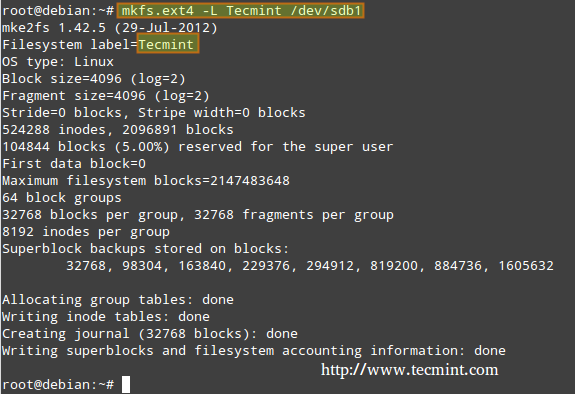

In our next example, we will create an ext4 filesystem (which supports both journaling and SELinux) labeled Tecmint on /dev/sdb1, using mkfs, whose basic syntax is:

# mkfs -t [filesystem] -L [label] device

or

# mkfs.[filesystem] -L [label] device

Creating and Using Swap Partitions

Swap partitions are necessary if we need our Linux system to have access to virtual memory, which is a section of the hard disk designated for use as memory, when the main system memory (RAM) is all in use. For that reason, a swap partition may not be needed on systems with enough RAM to meet all its requirements; however, even in that case it’s up to the system administrator to decide whether to use a swap partition or not.

A simple rule of thumb to decide the size of a swap partition is as follows.

Swap should usually equal 2x physical RAM for up to 2 GB of physical RAM, and then an additional 1x physical RAM for any amount above 2 GB, but never less than 32 MB.

So, if:

M = Amount of RAM in GB, and S = Amount of swap in GB, then

If M < 2, then S = M * 2; else S = M + 2

Remember this is just a formula and that only you, as a sysadmin, have the final word as to the use and size of a swap partition.
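The rule of thumb can be written as a small shell function. This is a sketch of the formula above, working in MB so that integer arithmetic is enough; the function name swap_size_mb is hypothetical.

```shell
# Hypothetical helper: suggested swap size in MB for a given amount of RAM in MB.
swap_size_mb() {
    ram_mb=$1
    if [ "$ram_mb" -lt 2048 ]; then
        swap_mb=$((ram_mb * 2))        # S = M * 2 below 2 GB of RAM
    else
        swap_mb=$((ram_mb + 2048))     # S = M + 2 GB otherwise
    fi
    if [ "$swap_mb" -lt 32 ]; then
        swap_mb=32                     # the rule says never less than 32 MB
    fi
    echo "$swap_mb"
}

swap_size_mb 256     # prints 512
swap_size_mb 8192    # prints 10240
```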

To configure a swap partition, create a regular partition as demonstrated earlier with the desired size. Next, we need to add the following entry to the /etc/fstab file (X can be either b or c).

/dev/sdX1 swap swap sw 0 0

Finally, let’s format and enable the swap partition.

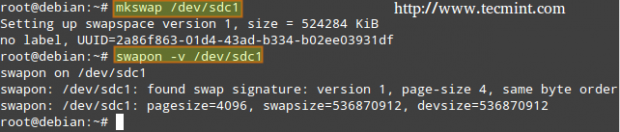

# mkswap /dev/sdX1
# swapon -v /dev/sdX1

To display a snapshot of the swap partition(s).

# cat /proc/swaps

To disable the swap partition.

# swapoff /dev/sdX1

For the next example, we’ll use /dev/sdc1 (=512 MB, for a system with 256 MB of RAM) to set up a partition with fdisk that we will use as swap, following the steps detailed above. Note that we will specify a fixed size in this case.

Conclusion

Creating partitions (including swap) and formatting filesystems are crucial in your road to Sysadminship. I hope that the tips given in this article will guide you to achieve your goals. Feel free to add your own tips & ideas in the comments section below, for the benefit of the community.


LFCS: How to Mount/Unmount Local and Network (Samba & NFS) Filesystems in Linux – Part 5

The Linux Foundation launched the LFCS certification (Linux Foundation Certified Sysadmin), a brand new program whose purpose is allowing individuals from all corners of the globe to get certified in basic to intermediate system administration tasks for Linux systems, which includes supporting running systems and services, along with overall monitoring and analysis, plus smart decision-making when it comes to raising issues to upper support teams.


This post is Part 5 of a 10-tutorial series. In this part, we will explain how to mount and unmount local and network filesystems in Linux, a skill required for the LFCS certification exam.

Mounting Filesystems

Once a disk has been partitioned, Linux needs some way to access the data on the partitions. Unlike DOS or Windows (where this is done by assigning a drive letter to each partition), Linux uses a unified directory tree where each partition is mounted at a mount point in that tree.

A mount point is a directory that is used as a way to access the filesystem on the partition, and mounting the filesystem is the process of associating a certain filesystem (a partition, for example) with a specific directory in the directory tree.

In other words, the first step in managing a storage device is attaching the device to the file system tree. This task can be accomplished on a one-time basis by using tools such as mount (and then unmounted with umount) or persistently across reboots by editing the /etc/fstab file.

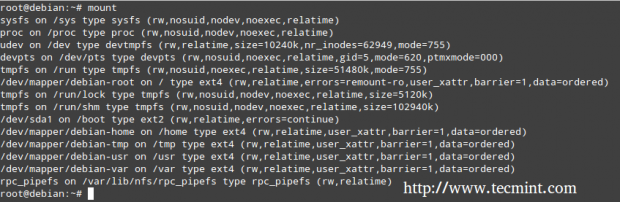

The mount command (without any options or arguments) shows the currently mounted filesystems.

# mount

In addition, mount is used to mount filesystems into the filesystem tree. Its standard syntax is as follows.

# mount -t type device dir -o options

This command instructs the kernel to mount the filesystem found on device (a partition, for example, that has been formatted with a filesystem type) at the directory dir, using the given options. In this form, mount does not look in /etc/fstab for instructions.

If only a directory or device is specified, for example:

# mount /dir -o options

or

# mount device -o options

mount tries to find a mount point and, if it can’t find any, then searches for a device (both cases in the /etc/fstab file), and finally attempts to complete the mount operation (which usually succeeds, except when either the directory or the device is already being used, or when the user invoking mount is not root).

You will notice that every line in the output of mount has the following format.

device on directory type (options)

For example,

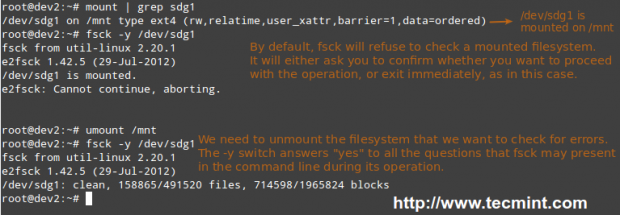

/dev/mapper/debian-home on /home type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)

Reads:

/dev/mapper/debian-home is mounted on /home, which has been formatted as ext4, with the following options: rw,relatime,user_xattr,barrier=1,data=ordered
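The same reading can be automated with shell parameter expansion. The sketch below splits one line of mount output on the literal words "on" and "type"; it assumes that neither the device nor the mount point contains those words surrounded by spaces, and the function name describe_mount is hypothetical.

```shell
# Hypothetical helper: split one line of mount output into its four parts.
describe_mount() {
    line=$1
    device=${line%% on *}          # text before " on "
    rest=${line#* on }
    dir=${rest%% type *}           # text between " on " and " type "
    rest=${rest#* type }
    fstype=${rest%% \(*}           # text before " ("
    options=${rest#*\(}
    options=${options%\)}          # strip the closing parenthesis
    echo "$device is mounted on $dir as $fstype with options: $options"
}

describe_mount '/dev/mapper/debian-home on /home type ext4 (rw,relatime,user_xattr,barrier=1,data=ordered)'
```

In practice you would feed it real lines, for example by reading the output of mount line by line.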

Mount Options

The most frequently used mount options include:

- async: allows asynchronous I/O operations on the file system being mounted.

- auto: marks the file system as enabled to be mounted automatically using mount -a. It is the opposite of noauto.

- defaults: this option is an alias for async,auto,dev,exec,nouser,rw,suid. Note that multiple options must be separated by a comma without any spaces. If by accident you type a space between options, mount will interpret the subsequent text string as another argument.

- loop: Mounts an image (an .iso file, for example) as a loop device. This option can be used to simulate the presence of the disk’s contents in an optical media reader.

- noexec: prevents the execution of executable files on the particular filesystem. It is the opposite of exec.

- nouser: prevents any user (other than root) from mounting and unmounting the filesystem. It is the opposite of user.

- remount: mounts the filesystem again in case it is already mounted.

- ro: mounts the filesystem as read only.

- rw: mounts the file system with read and write capabilities.

- relatime: updates a file’s access time (atime) only if the previous atime is earlier than its modification time (mtime) or change time (ctime).

- user_xattr: allows users to set and remove extended filesystem attributes.

Mounting a device with ro and noexec options

# mount -t ext4 /dev/sdg1 /mnt -o ro,noexec

In this case we can see that attempts to write a file to or to run a binary file located inside our mounting point fail with corresponding error messages.

# touch /mnt/myfile
# /mnt/bin/echo "Hi there"

Mounting a device with default options

In the following scenario, we will try to write a file to our newly mounted device and run an executable file located within its filesystem tree using the same commands as in the previous example.

# mount -t ext4 /dev/sdg1 /mnt -o defaults

In this last case, it works perfectly.

Unmounting Devices

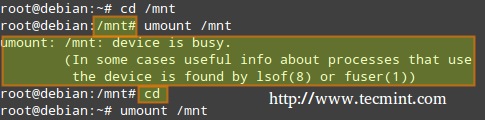

Unmounting a device (with the umount command) means finishing the write of all remaining “in transit” data so that the device can be safely removed. Note that if you try to remove a mounted device without properly unmounting it first, you run the risk of damaging the device itself or causing data loss.

That being said, in order to unmount a device, you must be “standing outside” its block device descriptor or mount point. In other words, your current working directory must be something else other than the mounting point. Otherwise, you will get a message saying that the device is busy.

An easy way to “leave” the mounting point is typing the cd command which, when given no arguments, will take us to our current user’s home directory, as shown above.

Mounting Common Networked Filesystems

The two most frequently used network file systems are SMB (which stands for “Server Message Block”) and NFS (“Network File System”). Chances are you will use NFS if you need to set up a share for Unix-like clients only, and will opt for Samba if you need to share files with Windows-based clients and perhaps other Unix-like clients as well.

Read Also

- Setup Samba Server in RHEL/CentOS and Fedora

- Setting up NFS (Network File System) on RHEL/CentOS/Fedora and Debian/Ubuntu

The following steps assume that Samba and NFS shares have already been set up in the server with IP 192.168.0.10 (please note that setting up an NFS share is one of the competencies required for the LFCE exam, which we will cover after the present series).

Mounting a Samba share on Linux

Step 1: Install the samba-client, samba-common, and cifs-utils packages on Red Hat and Debian based distributions.

# yum update && yum install samba-client samba-common cifs-utils
# aptitude update && aptitude install samba-client samba-common cifs-utils

Then run the following command to look for available samba shares in the server.

# smbclient -L 192.168.0.10

And enter the password for the root account in the remote machine.

In the above image we have highlighted the share that is ready for mounting on our local system. You will need a valid samba username and password on the remote server in order to access it.

Step 2: When mounting a password-protected network share, it is not a good idea to write your credentials in the /etc/fstab file. Instead, you can store them in a hidden file somewhere with permissions set to 600, like so.

# mkdir /media/samba
# echo "username=samba_username" > /media/samba/.smbcredentials
# echo "password=samba_password" >> /media/samba/.smbcredentials
# chmod 600 /media/samba/.smbcredentials

Step 3: Then add the following line to /etc/fstab file.

//192.168.0.10/gacanepa /media/samba cifs credentials=/media/samba/.smbcredentials,defaults 0 0

Step 4: You can now mount your samba share, either manually (mount //192.168.0.10/gacanepa) or by rebooting your machine so as to apply the changes made in /etc/fstab permanently.

# mount -a

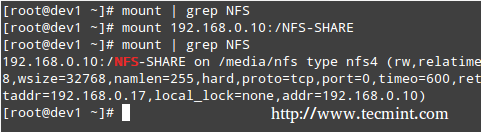

Mounting an NFS share on Linux

Step 1: Install the NFS client packages: nfs-utils and nfs-utils-lib on Red Hat based distributions, or nfs-common on Debian based distributions.

# yum update && yum install nfs-utils nfs-utils-lib
# aptitude update && aptitude install nfs-common

Step 2: Create a mounting point for the NFS share.

# mkdir /media/nfs

Step 3: Add the following line to /etc/fstab file.

192.168.0.10:/NFS-SHARE /media/nfs nfs defaults 0 0

Step 4: You can now mount your nfs share, either manually (mount 192.168.0.10:/NFS-SHARE) or by rebooting your machine so as to apply the changes made in /etc/fstab permanently.

Mounting Filesystems Permanently

As shown in the previous two examples, the /etc/fstab file controls how Linux provides access to disk partitions and removable media devices and consists of a series of lines that contain six fields each; the fields are separated by one or more spaces or tabs. A line that begins with a hash mark (#) is a comment and is ignored.

Each line has the following format.

<file system> <mount point> <type> <options> <dump> <pass>

Where:

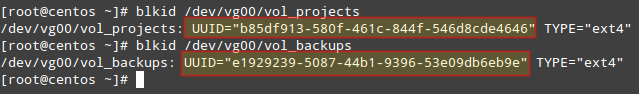

- <file system>: The first column specifies the mount device. Most distributions now specify partitions by their labels or UUIDs. This practice can help reduce problems if partition numbers change.

- <mount point>: The second column specifies the mount point.

- <type>: The file system type code is the same as the type code used to mount a filesystem with the mount command. A file system type code of auto lets the kernel auto-detect the filesystem type, which can be a convenient option for removable media devices. Note that this option may not be available for all filesystems out there.

- <options>: One (or more) mount option(s).

- <dump>: You will most likely leave this at 0, which tells the dump utility not to back up the filesystem (set it to 1 to enable it). The dump program was once a common backup tool, but it is much less popular today.

- <pass>: This column specifies whether the integrity of the filesystem should be checked at boot time with fsck. A 0 means that fsck should not check a filesystem. The higher the number, the lower the priority. Thus, the root partition will most likely have a value of 1, while all others that should be checked should have a value of 2.

Mount Examples

1. To mount a partition with label TECMINT at boot time with rw and noexec attributes, you should add the following line in /etc/fstab file.

LABEL=TECMINT /mnt ext4 rw,noexec 0 0

2. If you want the contents of a disk in your DVD drive to be available at boot time.

/dev/sr0 /media/cdrom0 iso9660 ro,user,noauto 0 0

Where /dev/sr0 is your DVD drive.
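Because every entry has the same six whitespace-separated fields, an fstab-style file is easy to inspect from the shell. The sketch below reads entries from standard input using the two example lines above; the function name list_fstab_entries and the variable names are hypothetical, and it does not touch the real /etc/fstab.

```shell
# Hypothetical helper: print the fields of each fstab entry read from stdin.
list_fstab_entries() {
    while read -r fs_spec mount_point fs_type options dump pass; do
        case "$fs_spec" in
            ''|'#'*) continue ;;       # skip blank lines and comments
        esac
        echo "$mount_point type=$fs_type options=$options dump=$dump pass=$pass"
    done
}

list_fstab_entries <<'EOF'
# <file system> <mount point> <type> <options> <dump> <pass>
LABEL=TECMINT /mnt ext4 rw,noexec 0 0
/dev/sr0 /media/cdrom0 iso9660 ro,user,noauto 0 0
EOF
```

To run it against a real system, redirect the actual file into the function instead of the here-document.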

Summary

You can rest assured that mounting and unmounting local and network filesystems from the command line will be part of your day-to-day responsibilities as sysadmin. You will also need to master /etc/fstab. I hope that you have found this article useful to help you with those tasks. Feel free to add your comments (or ask questions) below and to share this article through your social network profiles.


LFCS: Assembling Partitions as RAID Devices – Creating & Managing System Backups – Part 6

Recently, the Linux Foundation launched the LFCS (Linux Foundation Certified Sysadmin) certification, a shiny chance for system administrators everywhere to demonstrate, through a performance-based exam, that they are capable of performing overall operational support on Linux systems: system support, first-level diagnosing and monitoring, plus issue escalation, when required, to other support teams.


This post is Part 6 of a 10-tutorial series. In this part, we will explain how to assemble partitions as RAID devices and how to create and manage system backups, both of which are required for the LFCS certification exam.

Understanding RAID

The technology known as Redundant Array of Independent Disks (RAID) is a storage solution that combines multiple hard disks into a single logical unit to provide redundancy of data and/or improve performance in read / write operations to disk.

However, the actual fault tolerance and disk I/O performance depend on how the hard disks are set up to form the disk array. Depending on the available devices and the fault tolerance / performance needs, different RAID levels are defined. You can refer to the RAID series here in Tecmint.com for a more detailed explanation on each RAID level.

RAID Guide: What is RAID, Concepts of RAID and RAID Levels Explained

Our tool of choice for creating, assembling, managing, and monitoring our software RAIDs is called mdadm (short for multiple disks admin).

---------------- Debian and Derivatives ----------------
# aptitude update && aptitude install mdadm

---------------- Red Hat and CentOS based Systems ----------------
# yum update && yum install mdadm

---------------- On openSUSE ----------------
# zypper refresh && zypper install mdadm

Assembling Partitions as RAID Devices

The process of assembling existing partitions as RAID devices consists of the following steps.

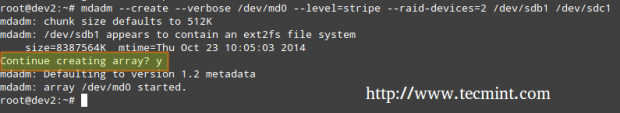

1. Create the array using mdadm

If one of the partitions has been formatted previously, or has been a part of another RAID array previously, you will be prompted to confirm the creation of the new array. Assuming you have taken the necessary precautions to avoid losing important data that may have resided in them, you can safely type y and press Enter.

# mdadm --create --verbose /dev/md0 --level=stripe --raid-devices=2 /dev/sdb1 /dev/sdc1

2. Check the array creation status

In order to check the array creation status, you will use the following commands, regardless of the RAID type. They are equally valid whether we are creating a RAID 0 (as shown above) or setting up a RAID 5, as shown in the image below.

# cat /proc/mdstat

or

# mdadm --detail /dev/md0 [More detailed summary]

3. Format the RAID Device

Format the device with a filesystem as per your needs / requirements, as explained in Part 4 of this series.

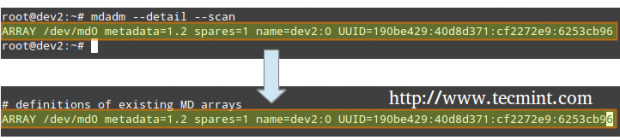

4. Monitor RAID Array Service

Instruct the monitoring service to “keep an eye” on the array. Add the output of mdadm --detail --scan to /etc/mdadm/mdadm.conf (Debian and derivatives) or /etc/mdadm.conf (CentOS / openSUSE), like so.

# mdadm --detail --scan

# mdadm --assemble --scan [Assemble the array]

To ensure the service starts on system boot, run the following commands as root.

On Debian and derivatives (though the service should start running on boot by default)

# update-rc.d mdadm defaults

Edit the /etc/default/mdadm file and add the following line.

AUTOSTART=true

On CentOS and openSUSE (systemd-based)

# systemctl start mdmonitor
# systemctl enable mdmonitor

On CentOS and openSUSE (SysVinit-based)

# service mdmonitor start
# chkconfig mdmonitor on

5. Check RAID Disk Failure

In RAID levels that support redundancy, replace failed drives when needed. When a device in the disk array becomes faulty, a rebuild automatically starts only if there was a spare device added when we first created the array.

Otherwise, we need to manually attach an extra physical drive to our system and run.

# mdadm /dev/md0 --add /dev/sdX1

Where /dev/md0 is the array that experienced the issue and /dev/sdX1 is the new device.

6. Disassemble a working array

You may have to do this if you need to create a new array using the same devices (optional step).

# mdadm --stop /dev/md0 # Stop the array
# mdadm --remove /dev/md0 # Remove the RAID device
# mdadm --zero-superblock /dev/sdX1 # Overwrite the existing md superblock with zeroes

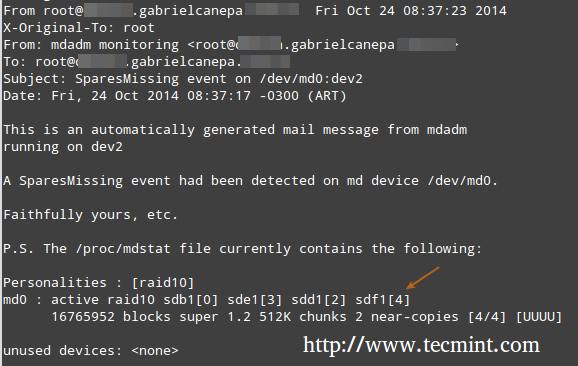

7. Set up mail alerts

You can configure a valid email address or system account to send alerts to by making sure you have the following line in mdadm.conf (optional step).

MAILADDR root

In this case, all alerts that the RAID monitoring daemon collects will be sent to the local root account’s mail box. One such alert looks like the following.

Note: This event is related to the example in STEP 5, where a device was marked as faulty and the spare device was automatically built into the array by mdadm. Thus, we “ran out” of healthy spare devices and we got the alert.

Understanding RAID Levels

RAID 0

The total array size is n times the size of the smallest partition, where n is the number of independent disks in the array (you will need at least two drives). Run the following command to assemble a RAID 0 array using partitions /dev/sdb1 and /dev/sdc1.

# mdadm --create --verbose /dev/md0 --level=stripe --raid-devices=2 /dev/sdb1 /dev/sdc1

Common uses: Setups that support real-time applications where performance is more important than fault-tolerance.

RAID 1 (aka Mirroring)

The total array size equals the size of the smallest partition (you will need at least two drives). Run the following command to assemble a RAID 1 array using partitions /dev/sdb1 and /dev/sdc1.

# mdadm --create --verbose /dev/md0 --level=1 --raid-devices=2 /dev/sdb1 /dev/sdc1

Common uses: Installation of the operating system or important subdirectories, such as /home.

RAID 5 (aka drives with Parity)

The total array size will be (n - 1) times the size of the smallest partition. The “lost” space in (n - 1) is used for parity (redundancy) calculation (you will need at least three drives).

Note that you can specify a spare device (/dev/sde1 in this case) to replace a faulty part when an issue occurs. Run the following command to assemble a RAID 5 array using partitions /dev/sdb1, /dev/sdc1, /dev/sdd1, and /dev/sde1 as spare.

# mdadm --create --verbose /dev/md0 --level=5 --raid-devices=3 /dev/sdb1 /dev/sdc1 /dev/sdd1 --spare-devices=1 /dev/sde1

Common uses: Web and file servers.

RAID 6 (aka drives with double Parity)

The total array size will be (n*s)-2*s, where n is the number of independent disks in the array and s is the size of the smallest disk. Note that you can specify a spare device (/dev/sdf1 in this case) to replace a faulty part when an issue occurs.

Run the following command to assemble a RAID 6 array using partitions /dev/sdb1, /dev/sdc1, /dev/sdd1, /dev/sde1, and /dev/sdf1 as spare.

# mdadm --create --verbose /dev/md0 --level=6 --raid-devices=4 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 --spare-devices=1 /dev/sdf1

Common uses: File and backup servers with large capacity and high availability requirements.

RAID 1+0 (aka stripe of mirrors)

The total array size is computed based on the formulas for RAID 0 and RAID 1, since RAID 1+0 is a combination of both. First, calculate the size of each mirror and then the size of the stripe.

Note that you can specify a spare device (/dev/sdf1 in this case) to replace a faulty part when an issue occurs. Run the following command to assemble a RAID 1+0 array using partitions /dev/sdb1, /dev/sdc1, /dev/sdd1, /dev/sde1, and /dev/sdf1 as spare.

# mdadm --create --verbose /dev/md0 --level=10 --raid-devices=4 /dev/sd[b-e]1 --spare-devices=1 /dev/sdf1

Common uses: Database and application servers that require fast I/O operations.
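The size formulas given for each level can be collected into one helper. This is a sketch only: n counts active devices (spares excluded), s is the size of the smallest member, the RAID 1+0 case assumes two-way mirrors that are then striped, and the function name raid_capacity is hypothetical.

```shell
# Hypothetical helper: usable capacity for a RAID level, given the number of
# active devices (n) and the size of the smallest one (s), in the unit of s.
raid_capacity() {
    level=$1
    n=$2
    s=$3
    case "$level" in
        0)  echo $(( n * s )) ;;           # striping: n times the smallest member
        1)  echo "$s" ;;                   # mirroring: size of the smallest member
        5)  echo $(( (n - 1) * s )) ;;     # one member's worth of parity
        6)  echo $(( (n - 2) * s )) ;;     # (n*s) - 2*s: two members' worth of parity
        10) echo $(( n / 2 * s )) ;;       # stripe over n/2 two-way mirrors (assumed)
        *)  echo "unsupported level: $level" >&2
            return 1 ;;
    esac
}

raid_capacity 0 2 100    # prints 200
raid_capacity 5 3 100    # prints 200
raid_capacity 6 4 100    # prints 200
raid_capacity 10 4 100   # prints 200
```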

Creating and Managing System Backups

It never hurts to remember that RAID with all its bounties IS NOT A REPLACEMENT FOR BACKUPS! Write it 1000 times on the chalkboard if you need to, but make sure you keep that idea in mind at all times. Before we begin, we must note that there is no one-size-fits-all solution for system backups, but here are some things that you do need to take into account while planning a backup strategy.

- What do you use your system for? (Desktop or server? If the latter case applies, what are the most critical services – whose configuration would be a real pain to lose?)

- How often do you need to take backups of your system?

- What is the data (e.g. files / directories / database dumps) that you want to backup? You may also want to consider if you really need to backup huge files (such as audio or video files).

- Where (meaning physical place and media) will those backups be stored?

Backing Up Your Data

Method 1: Backup entire drives with dd command. You can either back up an entire hard disk or a partition by creating an exact image at any point in time. Note that this works best when the device is offline, meaning it’s not mounted and there are no processes accessing it for I/O operations.

The downside of this backup approach is that the image will have the same size as the disk or partition, even when the actual data occupies a small percentage of it. For example, if you want to image a partition of 20 GB that is only 10% full, the image file will still be 20 GB in size. In other words, it’s not only the actual data that gets backed up, but the entire partition itself. You may consider using this method if you need exact backups of your devices.

Creating an image file out of an existing device

# dd if=/dev/sda of=/system_images/sda.img

OR

--------------------- Alternatively, you can compress the image file ---------------------
# dd if=/dev/sda | gzip -c > /system_images/sda.img.gz

Restoring the backup from the image file

# dd if=/system_images/sda.img of=/dev/sda

OR

--------------------- Depending on your choice while creating the image ---------------------
# gzip -dc /system_images/sda.img.gz | dd of=/dev/sda
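If you want to rehearse the image-and-restore cycle without risking a real disk, the same commands work on regular files. The sketch below uses a 1 MiB file of zeros as a stand-in for a device, creates a compressed image, restores it, and compares the result; all paths live in a temporary directory and the function name dd_roundtrip_demo is hypothetical.

```shell
# Hypothetical demo: image a regular file instead of a real disk, then verify.
dd_roundtrip_demo() {
    workdir=$(mktemp -d)
    # Stand-in for a device: 1 MiB of zero bytes.
    dd if=/dev/zero of="$workdir/disk" bs=1024 count=1024 2>/dev/null
    # Create a compressed image, as in the gzip variant above.
    dd if="$workdir/disk" 2>/dev/null | gzip -c > "$workdir/disk.img.gz"
    # Restore into a new file and compare byte for byte.
    gzip -dc "$workdir/disk.img.gz" | dd of="$workdir/restored" 2>/dev/null
    if cmp -s "$workdir/disk" "$workdir/restored"; then
        echo "restore matches original"
    else
        echo "restore differs"
    fi
    rm -rf "$workdir"
}

dd_roundtrip_demo   # prints restore matches original
```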

Method 2: Backup certain files / directories with tar command – already covered in Part 3 of this series. You may consider using this method if you need to keep copies of specific files and directories (configuration files, users’ home directories, and so on).

Method 3: Synchronize files with rsync command. Rsync is a versatile remote (and local) file-copying tool. If you need to back up and synchronize your files to/from network drives, rsync is the way to go.

Whether you’re synchronizing two local directories or local <-> remote directories mounted on the local filesystem, the basic syntax is the same.

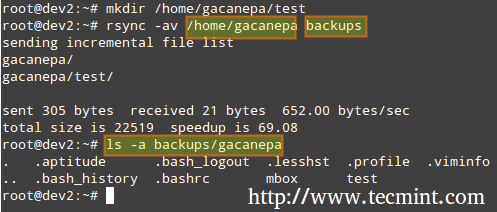

Synchronizing two local directories or local <-> remote directories mounted on the local filesystem

# rsync -av source_directory destination_directory

Where -a recurses into subdirectories (if they exist) and preserves symbolic links, timestamps, permissions, and the original owner / group, and -v enables verbose output.

In addition, if you want to increase the security of the data transfer over the wire, you can use rsync over ssh.

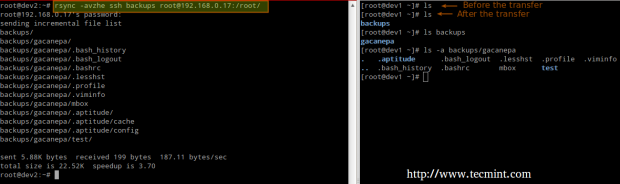

Synchronizing local → remote directories over ssh

# rsync -avzhe ssh backups root@remote_host:/remote_directory/

This example will synchronize the backups directory on the local host with the contents of /remote_directory on the remote host.

Where the -z option compresses data during the transfer, the -h option shows file sizes in human-readable format, and the -e flag specifies the remote shell to use (ssh in this case).

Synchronizing remote → local directories over ssh.

In this case, switch the source and destination directories from the previous example.

# rsync -avzhe ssh root@remote_host:/remote_directory/ backups

Please note that these are only 3 examples (the most frequent cases you’re likely to run into) of the use of rsync. More examples and usages of rsync commands can be found in the following article.

Read Also: 10 rsync Commands to Sync Files in Linux

Summary

As a sysadmin, you need to ensure that your systems perform as well as possible. If you’re well prepared, and if the integrity of your data is well supported by a storage technology such as RAID and regular system backups, you’ll be safe.

If you have questions, comments, or further ideas on how this article can be improved, feel free to speak out below. In addition, please consider sharing this series through your social network profiles.

LFCS: Managing System Startup Process and Services (SysVinit, Systemd and Upstart) – Part 7

A couple of months ago, the Linux Foundation announced the LFCS (Linux Foundation Certified Sysadmin) certification, an exciting new program whose aim is allowing individuals from all ends of the world to get certified in performing basic to intermediate system administration tasks on Linux systems. This includes supporting already running systems and services, along with first-hand problem-finding and analysis, plus the ability to decide when to raise issues to engineering teams.

The following video gives a brief introduction to The Linux Foundation Certification Program.

This post is Part 7 of a 10-tutorial series. In this part, we will explain how to manage the Linux system startup process and services, as required for the LFCS certification exam.

Managing the Linux Startup Process

The boot process of a Linux system consists of several phases, each represented by a different component. The following diagram briefly summarizes the boot process and shows all the main components involved.

When you press the Power button on your machine, the firmware that is stored in an EEPROM chip on the motherboard initializes the POST (Power-On Self Test) to check on the state of the system’s hardware resources. When the POST is finished, the firmware then searches for and loads the 1st stage boot loader, located in the MBR or in the EFI partition of the first available disk, and gives control to it.

MBR Method

The MBR is located in the first sector of the disk marked as bootable in the BIOS settings and is 512 bytes in size.

- First 446 bytes: The bootloader contains both executable code and error message text.

- Next 64 bytes: The Partition table contains a record for each of four partitions (primary or extended). Among other things, each record indicates the status (active / not active), size, and start / end sectors of each partition.

- Last 2 bytes: The magic number serves as a validation check of the MBR.
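The 446 + 64 + 2 byte layout above can be explored safely on an ordinary file. The sketch below is an illustration under stated assumptions: mbr_img is a hypothetical zero-filled file standing in for a real MBR, and the signature bytes 0x55 0xAA (the usual value of the magic number) are written by hand so that they can be read back.

```shell
# A zero-filled 512-byte file stands in for a real MBR (mbr_img is a hypothetical name).
mbr_img=$(mktemp)
dd if=/dev/zero of="$mbr_img" bs=512 count=1 2>/dev/null

# Write the boot signature 0x55 0xAA (octal 125 252) into the last 2 bytes (offset 510).
printf '\125\252' | dd of="$mbr_img" bs=1 seek=510 conv=notrunc 2>/dev/null

# Bytes 0-445: boot code, bytes 446-509: partition table, bytes 510-511: signature.
table_bytes=$(dd if="$mbr_img" bs=1 skip=446 count=64 2>/dev/null | wc -c | tr -d ' ')
signature=$(dd if="$mbr_img" bs=1 skip=510 count=2 2>/dev/null | od -An -tx1 | tr -d ' \n')
total=$(wc -c < "$mbr_img" | tr -d ' ')

echo "total: $total bytes, partition table: $table_bytes bytes, signature: $signature"
```

On a real BIOS/MBR system, the same read-only dd and od pipeline could be pointed at the disk device (as root) instead of the sample file.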

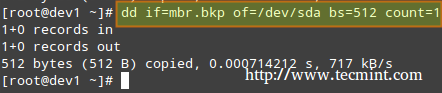

The following command performs a backup of the MBR (in this example, /dev/sda is the first hard disk). The resulting file, mbr.bkp, can come in handy should the partition table become corrupt, for example, rendering the system unbootable.

Of course, in order to use it later if the need arises, we will need to save it and store it somewhere else (like a USB drive, for example). That file will help us restore the MBR and will get us going once again if and only if we do not change the hard drive layout in the meantime.

Backup MBR

# dd if=/dev/sda of=mbr.bkp bs=512 count=1

Restoring MBR

# dd if=mbr.bkp of=/dev/sda bs=512 count=1
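A backup is only useful if it matches what is on disk, so it is worth verifying right after creating it. The sketch below rehearses the procedure on regular files rather than on /dev/sda: disk and bkp are hypothetical temporary files, and the final step shows a common variation (an assumption on my part, not part of the commands above) that restores only the first 446 bytes, i.e. the boot code, leaving the partition table untouched.

```shell
# Regular files stand in for /dev/sda and mbr.bkp (hypothetical names).
disk=$(mktemp)
bkp=$(mktemp)
head -c 2048 /dev/urandom > "$disk"

# Back up the first 512 bytes, then verify the copy against the source.
dd if="$disk" of="$bkp" bs=512 count=1 2>/dev/null
if head -c 512 "$disk" | cmp -s - "$bkp"; then backup_ok=yes; else backup_ok=no; fi

# Simulate damaged boot code, then restore only the first 446 bytes.
# conv=notrunc keeps dd from truncating a regular file; it is harmless on a block device.
dd if=/dev/zero of="$disk" bs=446 count=1 conv=notrunc 2>/dev/null
dd if="$bkp" of="$disk" bs=446 count=1 conv=notrunc 2>/dev/null
if head -c 512 "$disk" | cmp -s - "$bkp"; then restore_ok=yes; else restore_ok=no; fi

disk_size=$(wc -c < "$disk" | tr -d ' ')
echo "backup verified: $backup_ok, restore verified: $restore_ok, disk size: $disk_size"
```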

EFI/UEFI Method

For systems using the EFI/UEFI method, the UEFI firmware reads its settings to determine which UEFI application is to be launched and from where (i.e., in which disk and partition the EFI partition is located).

Next, the 2nd stage boot loader (aka boot manager) is loaded and run. GRUB (GRand Unified Bootloader) is the most frequently used boot manager in Linux. One of two distinct versions can be found on most systems used today.

- GRUB legacy configuration file: /boot/grub/menu.lst (older distributions, not supported by EFI/UEFI firmwares).

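- GRUB2: user settings are most likely in /etc/default/grub, from which the actual configuration file (/boot/grub/grub.cfg or /boot/grub2/grub.cfg, depending on the distribution) is generated.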

Although the objectives of the LFCS exam do not explicitly request knowledge about GRUB internals, if you’re brave and can afford to mess up your system (you may want to try it first on a virtual machine, just in case), you can modify GRUB’s configuration. To apply the changes, run the following command as root afterwards (update-grub is available on Debian and derivatives; on CentOS and openSUSE the equivalent is grub2-mkconfig -o /boot/grub2/grub.cfg).

# update-grub
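As a low-risk way to rehearse such a change, the edit can be tried on a copy of the settings file first. The sketch below is an illustration only: the three-line sample stands in for /etc/default/grub, and changing GRUB_TIMEOUT to 10 is an arbitrary example value.

```shell
# A small sample stands in for /etc/default/grub (contents are illustrative).
cfg=$(mktemp)
printf '%s\n' 'GRUB_DEFAULT=0' 'GRUB_TIMEOUT=5' 'GRUB_CMDLINE_LINUX=""' > "$cfg"

# Write the modified settings to a new file instead of editing in place.
sed 's/^GRUB_TIMEOUT=.*/GRUB_TIMEOUT=10/' "$cfg" > "$cfg.new"

# Confirm that only the intended line changed.
new_timeout=$(grep '^GRUB_TIMEOUT=' "$cfg.new")
changed_lines=$(diff "$cfg" "$cfg.new" | grep -c '^>')
echo "$new_timeout (lines changed: $changed_lines)"
```

Only after reviewing the result would the real file be replaced and the GRUB configuration regenerated as root.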

Basically, GRUB loads the default kernel and the initrd or initramfs image. In short, initrd or initramfs helps to perform the hardware detection, kernel module loading, and device discovery necessary to get the real root filesystem mounted.

Once the real root filesystem is up, the kernel executes the system and service manager (init or systemd, whose process identification or PID is always 1) to begin the normal user-space boot process in order to present a user interface.

Both init and systemd are daemons (background processes) that manage other daemons, as the first service to start (during boot) and the last service to terminate (during shutdown).
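To see which of the two is running as PID 1 on a given machine, the process name can be read directly. This is a minimal sketch that assumes either a Linux /proc filesystem or a ps command is available; inside containers, PID 1 is often neither init nor systemd.

```shell
# Prefer /proc (Linux); fall back to ps where /proc/1/comm is not readable.
if [ -r /proc/1/comm ]; then
    init_name=$(cat /proc/1/comm)
else
    init_name=$(ps -p 1 -o comm= 2>/dev/null || echo unknown)
fi
init_name=${init_name:-unknown}

echo "PID 1 is: $init_name"
```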

Starting Services (SysVinit)

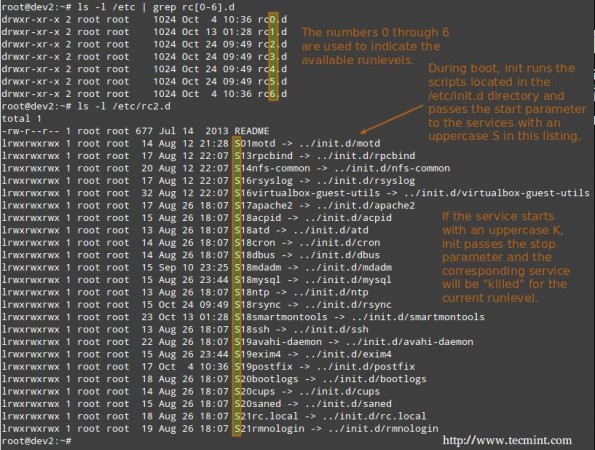

The concept of runlevels in Linux specifies different ways to use a system by controlling which services are running. In other words, a runlevel controls which tasks can (and cannot) be accomplished in the current execution state.

Traditionally, this startup process was performed based on conventions that originated with System V UNIX, with the system executing collections of scripts that start and stop services as the machine entered a specific runlevel (which, in other words, is a different mode of running the system).

Within each runlevel, individual services can be set to run, or to be shut down if running. The latest versions of some major distributions are moving away from the System V standard in favour of a rather new service and system manager called systemd (which stands for system daemon), but usually support sysv commands for compatibility purposes. This means that you can run most of the well-known sysv init tools in a systemd-based distribution.

Read Also: Why ‘systemd’ replaces ‘init’ in Linux

Besides starting the system process, init looks to the /etc/inittab file to decide what runlevel must be entered.

| Runlevel | Description |
| --- | --- |
| 0 | Halt the system. Runlevel 0 is a special transitional state used to shutdown the system quickly. |
| 1 | Also aliased to s, or S, this runlevel is sometimes called maintenance mode. What services, if any, are started at this runlevel varies by distribution. It’s typically used for low-level system maintenance that may be impaired by normal system operation. |
| 2 | Multiuser. On Debian systems and derivatives, this is the default runlevel, and includes -if available- a graphical login. On Red-Hat based systems, this is multiuser mode without networking. |
| 3 | On Red-Hat based systems, this is the default multiuser mode, which runs everything except the graphical environment. This runlevel and levels 4 and 5 usually are not used on Debian-based systems. |
| 4 | Typically unused by default and therefore available for customization. |
| 5 | On Red-Hat based systems, full multiuser mode with GUI login. This runlevel is like level 3, but with a GUI login available. |
| 6 | Reboot the system. |
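On systemd-based distributions, the runlevels in the table are kept for compatibility and are commonly mapped to systemd targets. The helper below is a small sketch of that usual mapping; runlevel_to_target is a hypothetical function name, not an existing tool.

```shell
# Map a SysV runlevel to the systemd target that usually replaces it.
runlevel_to_target() {
    case "$1" in
        0)     echo "poweroff.target" ;;
        1|s|S) echo "rescue.target" ;;
        2|3|4) echo "multi-user.target" ;;
        5)     echo "graphical.target" ;;
        6)     echo "reboot.target" ;;
        *)     echo "unknown"; return 1 ;;
    esac
}

runlevel_to_target 3   # prints multi-user.target
runlevel_to_target 5   # prints graphical.target
```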

To switch between runlevels, we can simply issue a runlevel change using the init command: init N (where N is one of the runlevels listed above). Please note that this is not the recommended way of taking a running system to a different runlevel because it gives no warning to existing logged-in users (thus causing them to lose work and processes to terminate abnormally).

Instead, the shutdown command should be used to restart the system (which first sends a warning message to all logged-in users and blocks any further logins; it then signals init to switch runlevels); however, the default runlevel (the one the system will boot to) must be edited in the /etc/inittab file first.

For that reason, follow these steps to properly switch between runlevels. As root, look for the following line in /etc/inittab.

id:2:initdefault:

and change the number 2 to the desired runlevel with your preferred text editor, such as vim (described in How to use vi/vim editor in Linux – Part 2 of this series).
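The same change can also be scripted. The sketch below works on a temporary sample file rather than the real /etc/inittab, and runlevel 3 is just an example target value.

```shell
# A two-line sample stands in for /etc/inittab (contents are illustrative).
inittab=$(mktemp)
printf '%s\n' '# sample inittab fragment' 'id:2:initdefault:' > "$inittab"

# Read the current default runlevel.
current=$(sed -n 's/^id:\([0-6]\):initdefault:.*/\1/p' "$inittab")

# Write a modified copy with the default runlevel set to 3.
sed 's/^id:[0-6]:initdefault:/id:3:initdefault:/' "$inittab" > "$inittab.new"
updated=$(grep '^id:' "$inittab.new")

echo "default runlevel was $current, new entry: $updated"
```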

Next, run the following command as root.

# shutdown -r now

That last command will restart the system, causing it to start in the specified runlevel during next boot, and will run the scripts located in the /etc/rc[runlevel].d directory in order to decide which services should be started and which ones should not. For example, for runlevel 2 in the following system.
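In those directories, entries whose names begin with S are started and entries beginning with K are stopped (killed) when the runlevel is entered, with the two digits that follow setting the order. The sketch below illustrates the convention on a temporary directory; rcdir and the three file names are made up for the example, and plain files are used where a real system would have symbolic links into /etc/init.d.

```shell
# A temporary directory stands in for /etc/rc2.d (names are illustrative).
rcdir=$(mktemp -d)
touch "$rcdir/S19mysqld" "$rcdir/S20postfix" "$rcdir/K01mdadm"

started=""
stopped=""
for entry in "$rcdir"/[SK]*; do
    name=$(basename "$entry")
    service=${name#???}          # strip the S/K letter and the two-digit priority
    case "$name" in
        S*) started="$started $service" ;;
        K*) stopped="$stopped $service" ;;
    esac
done

echo "started:$started"   # started: mysqld postfix
echo "stopped:$stopped"   # stopped: mdadm
```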

Manage Services using chkconfig

To enable or disable system services on boot, we will use the chkconfig command in CentOS / openSUSE and sysv-rc-conf in Debian and derivatives. These tools can also show us the preconfigured state of a service for a particular runlevel.

Read Also: How to Stop and Disable Unwanted Services in Linux

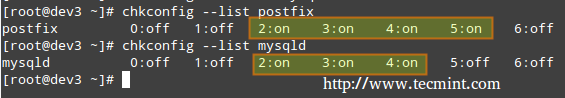

Listing the runlevel configuration for a service.

# chkconfig --list [service name]
# chkconfig --list postfix
# chkconfig --list mysqld

In the above image we can see that postfix is set to start when the system enters runlevels 2 through 5, whereas mysqld will be running by default for runlevels 2 through 4. Now suppose that this is not the expected behaviour.

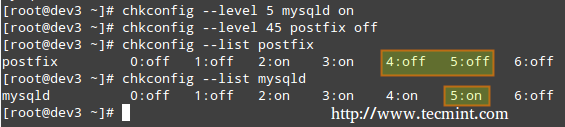

For example, we need to turn on mysqld for runlevel 5 as well, and turn off postfix for runlevels 4 and 5. Here’s what we would do in each case (run the following commands as root).

Enabling a service for a particular runlevel

# chkconfig --level [level(s)] service on
# chkconfig --level 5 mysqld on

Disabling a service for particular runlevels

# chkconfig --level [level(s)] service off
# chkconfig --level 45 postfix off

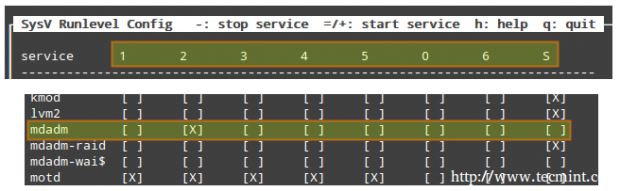
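To double-check changes like these without reading the output by eye, the line printed by chkconfig --list can be filtered for the runlevels marked on. The sketch below runs against a hard-coded sample line (illustrative data in the usual chkconfig output format), and enabled_levels is a hypothetical helper name.

```shell
# Sample line in the format printed by "chkconfig --list postfix" (illustrative data).
line='postfix         0:off   1:off   2:on    3:on    4:off   5:off   6:off'

enabled_levels() {
    # Put each field on its own line, keep entries like "3:on", print the digits.
    printf '%s\n' "$1" | tr -s ' \t' '\n\n' | sed -n 's/^\([0-6]\):on$/\1/p' | tr -d '\n'
}

levels=$(enabled_levels "$line")
echo "postfix starts in runlevels: $levels"   # postfix starts in runlevels: 23
```

On a system with chkconfig installed, the sample line could be replaced with the real output, for example enabled_levels "$(chkconfig --list postfix)".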

We will now perform similar tasks in a Debian-based system using sysv-rc-conf.

Manage Services using sysv-rc-conf

Configuring a service to start automatically on a specific runlevel and prevent it from starting on all others.

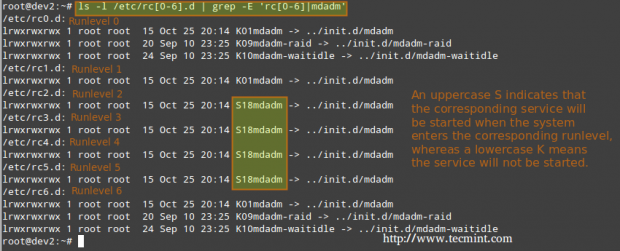

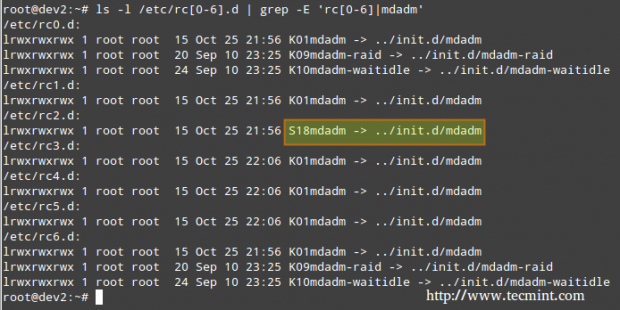

1. Let’s use the following command to see the runlevels in which mdadm is configured to start.

# ls -l /etc/rc[0-6].d | grep -E 'rc[0-6]|mdadm'

2. We will use sysv-rc-conf to prevent mdadm from starting on all runlevels except 2. Just check or uncheck (with the space bar) as desired (you can move up, down, left, and right with the arrow keys).

# sysv-rc-conf

Then press q to quit.

3. We will restart the system and run the command from step 1 again.

# ls -l /etc/rc[0-6].d | grep -E 'rc[0-6]|mdadm'

In the above image we can see that mdadm is configured to start only on runlevel 2.

What About systemd?

systemd is another service and system manager that is being adopted by several major Linux distributions. It aims to allow more processing to be done in parallel during system startup (unlike sysvinit, which tends to be slower because it starts processes one at a time, checks whether one depends on another, and waits for daemons to launch so more services can start), and to serve as a dynamic resource manager for a running system.

Thus, services are started when needed (to avoid consuming system resources) instead of being launched without a solid reason during boot.

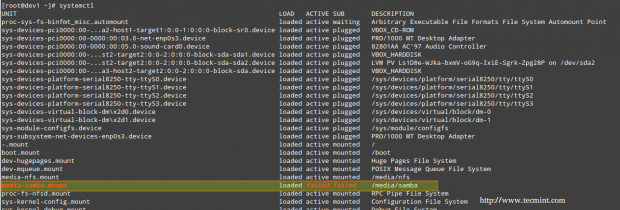

To view the status of all the units loaded on your system, both systemd-native and SysV services, run the following command.

# systemctl

The LOAD column shows whether the unit definition (refer to the UNIT column, which shows the service or anything else maintained by systemd) was properly loaded, while the ACTIVE and SUB columns show the current status of each unit.
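Because the columns are whitespace-separated, units whose ACTIVE state is anything other than active can be picked out with a short filter. The sketch below runs on a hard-coded sample (illustrative lines in UNIT LOAD ACTIVE SUB DESCRIPTION order), and not_active is a hypothetical helper name.

```shell
# Illustrative lines in the column order UNIT LOAD ACTIVE SUB DESCRIPTION.
sample='media-samba.mount loaded failed failed /media/samba
postfix.service loaded active running Postfix Mail Transport Agent
sshd.service loaded active running OpenSSH server daemon'

not_active() {
    # Print the unit name and state for every line whose third column is not "active".
    awk '$3 != "active" { print $1 " (" $3 ")" }'
}

problem_units=$(printf '%s\n' "$sample" | not_active)
echo "$problem_units"   # media-samba.mount (failed)
```

On a systemd machine, the same filter could be fed with systemctl list-units --plain --no-legend, which prints the unit table without status markers or a header line.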

Displaying information about the current status of a service

When the ACTIVE column indicates that a unit’s status is other than active, we can check what happened using the following command.

# systemctl status [unit]

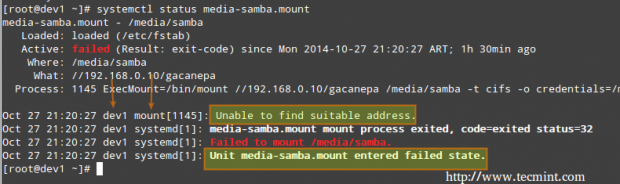

For example, in the image above, media-samba.mount is in a failed state. Let’s run the following command.

# systemctl status media-samba.mount

We can see that media-samba.mount failed because the mount process on host dev1 was unable to find the network share at //192.168.0.10/gacanepa.

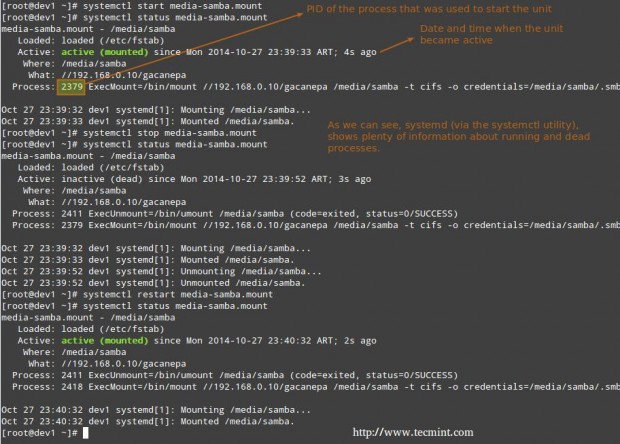

Starting or Stopping Services

Once the network share //192.168.0.10/gacanepa becomes available, let’s try to start, then stop, and finally restart the unit media-samba.mount. After performing each action, let’s run systemctl status media-samba.mount to check on its status.

# systemctl start media-samba.mount
# systemctl status media-samba.mount
# systemctl stop media-samba.mount
# systemctl restart media-samba.mount
# systemctl status media-samba.mount

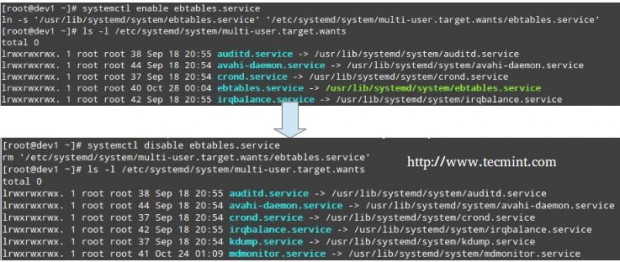

Enabling or disabling a service to start during boot

Under systemd, you can control whether a service starts automatically when the system boots.

# systemctl enable [service]    # start a service at boot
# systemctl disable [service]   # prevent a service from starting at boot

The process of enabling or disabling a service to start automatically on boot consists of adding or removing symbolic links in the /etc/systemd/system/multi-user.target.wants directory.
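The effect of those symbolic links can be imitated in a scratch directory. The sketch below is a simulation, not what systemctl literally executes: root is a temporary stand-in for the filesystem root, and the empty postfix.service file and its location under usr/lib/systemd/system are placeholders chosen for the example.

```shell
# A temporary tree stands in for the real filesystem (all paths are placeholders).
root=$(mktemp -d)
unit_dir="$root/usr/lib/systemd/system"
wants_dir="$root/etc/systemd/system/multi-user.target.wants"
mkdir -p "$unit_dir" "$wants_dir"
touch "$unit_dir/postfix.service"

# "Enable": add a symbolic link to the unit file in the .wants directory.
ln -s "$unit_dir/postfix.service" "$wants_dir/postfix.service"
if [ -L "$wants_dir/postfix.service" ]; then state_enabled=enabled; else state_enabled=disabled; fi

# "Disable": remove the link again; the unit file itself is untouched.
rm "$wants_dir/postfix.service"
if [ -L "$wants_dir/postfix.service" ]; then state_disabled=enabled; else state_disabled=disabled; fi

echo "after enable: $state_enabled, after disable: $state_disabled"
```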

You can also find out a service’s current status (enabled or disabled) with the following command.

# systemctl is-enabled [service]

For example,

# systemctl is-enabled postfix.service

In addition, you can reboot or power off the system with the following commands.

# systemctl reboot
# systemctl poweroff

Upstart

Upstart is an event-based replacement for the /sbin/init daemon. It was born out of the need to start services only when they are needed (also supervising them while they are running) and to handle events as they occur, thus surpassing the classic, dependency-based sysvinit system.

It was originally developed for the Ubuntu distribution, but was also used in Red Hat Enterprise Linux 6. Though it was intended to be suitable for deployment in all Linux distributions as a replacement for sysvinit, in time it was overshadowed by systemd. On February 14, 2014, Mark Shuttleworth (founder of Canonical Ltd.) announced that future releases of Ubuntu would use systemd as the default init daemon.

Because the SysV startup script system has been so common for so long, a large number of software packages include SysV startup scripts. To accommodate such packages, Upstart provides a compatibility mode: it runs SysV startup scripts in the usual locations (/etc/rc.d/rc?.d, /etc/init.d/rc?.d, /etc/rc?.d, or a similar location). Thus, if we install a package that doesn’t yet include an Upstart configuration script, it should still launch in the usual way.

Furthermore, if we have installed utilities such as chkconfig, we should be able to use them to manage our SysV-based services just as we would on sysvinit-based systems.