There are many tutorials on the web that show different ways to mirror or back up your web files. I'm writing this article for my own future reference, and here I'll use a very simple and versatile Linux command to back up a website. This tutorial shows you how to sync data between two web servers with “Rsync”.

The purpose of mirroring your web server with Rsync is that if your main web server fails, your backup server can take over (once traffic is redirected to it) and reduce your website's downtime. This way of backing up a web server is simple and effective for small and medium-sized web businesses.

Advantages of Syncing Web Servers

The main advantages of creating a web server backup with rsync are as follows:

- Rsync transfers only the blocks of data that have changed, not entire files.

- Rsync can delete files and directories on the backup server that have been deleted from the main web server (this requires the --delete option).

- It preserves permissions, ownership and special attributes when copying data remotely (in archive mode, -a; ownership is only preserved when rsync runs as root on the receiving side).

- It can use SSH as its transport, so data is encrypted while in transit.

- With the -z option, rsync compresses data during transfer, which reduces bandwidth usage.

How To Sync Two Apache Web Servers

Let’s proceed with setting up rsync to create a mirror of your web server. Here, I’ll be using two servers.

Main Server

- IP Address: 192.168.0.100

- Hostname: webserver.example.com

Backup Server

- IP Address: 192.168.0.101

- Hostname: backup.example.com

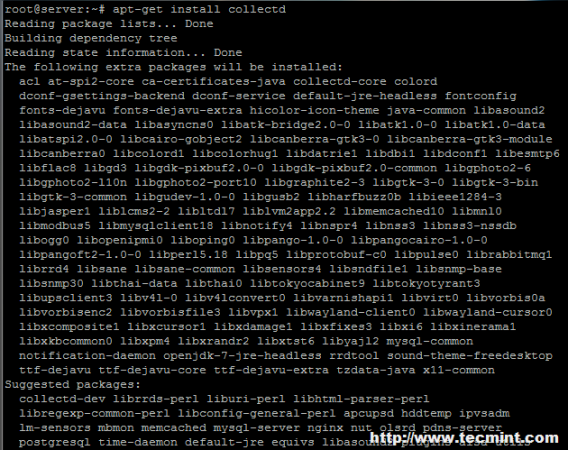

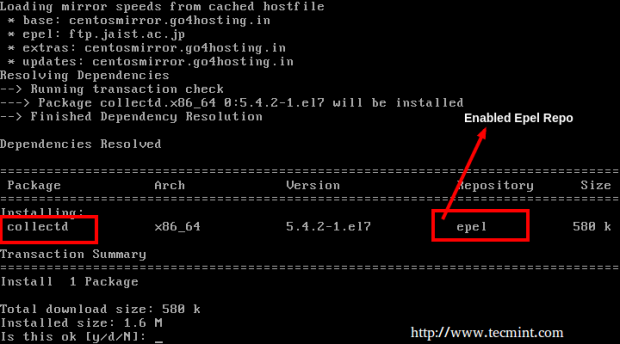

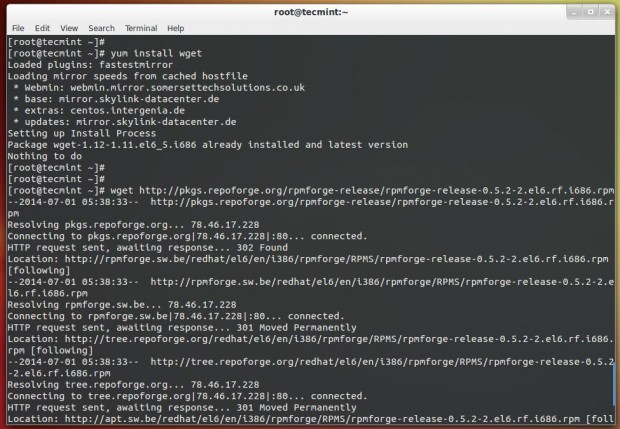

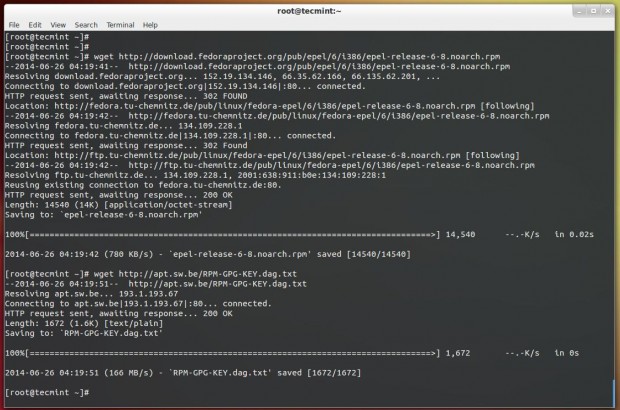

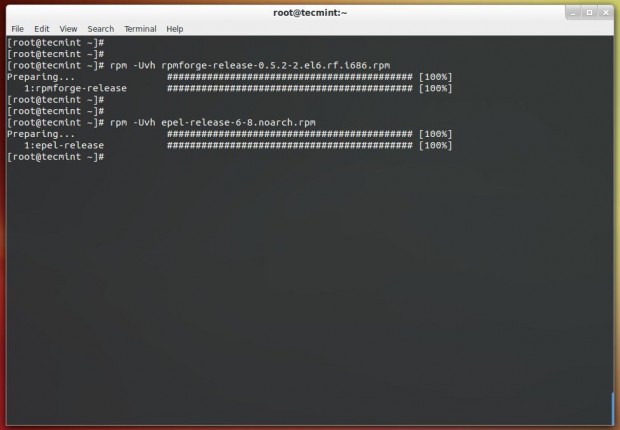

Step 1: Install Rsync Tool

In this setup, the web server data on webserver.example.com will be mirrored to backup.example.com. To do so, first install Rsync on both servers with the following command.

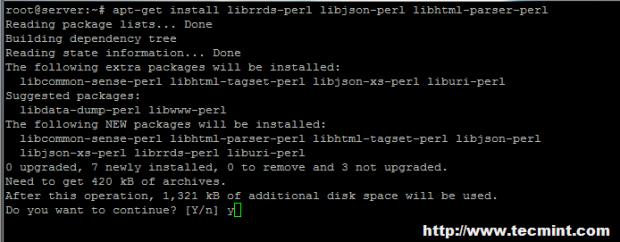

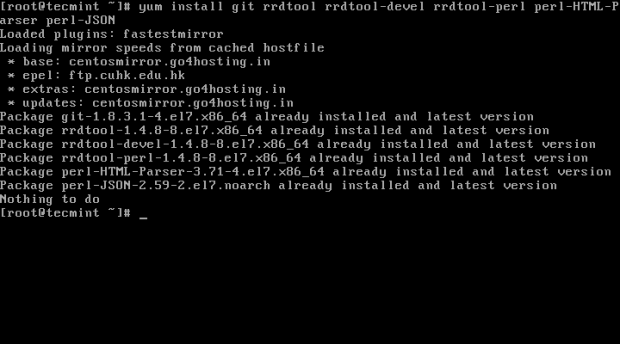

[root@tecmint]# yum install rsync        [On Red Hat based systems]
[root@tecmint]# apt-get install rsync    [On Debian based systems]
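If you provision both servers with the same script, a small helper can choose between the two commands above. This is a hypothetical sketch (the function name rsync_install_cmd is mine, not part of any tool): it takes the distribution IDs from the ID and ID_LIKE fields of /etc/os-release and prints the matching install command, with -y added so it can run unattended, without running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map distro IDs (from /etc/os-release) to an rsync install command.
rsync_install_cmd() {
  local ids=" $1 "   # pad with spaces so each ID can be matched as a whole word
  case "$ids" in
    *" rhel "* | *" centos "* | *" fedora "*)
      echo "yum install -y rsync" ;;        # Red Hat based systems
    *" debian "* | *" ubuntu "*)
      echo "apt-get install -y rsync" ;;    # Debian based systems
    *)
      echo "rsync_install_cmd: unsupported distribution: $1" >&2
      return 1 ;;
  esac
}

# Usage on either server (as root):
#   . /etc/os-release
#   cmd=$(rsync_install_cmd "$ID ${ID_LIKE:-}") && $cmd
```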

Step 2: Create a User to run Rsync

You can set up rsync with the root user, but for security reasons you may prefer to create an unprivileged user on the main web server (i.e. webserver.example.com) to run rsync. This user only needs read access to the files you want to mirror.

[root@tecmint]# useradd tecmint
[root@tecmint]# passwd tecmint

Here I have created a user “tecmint” and assigned it a password.

Step 3: Test Rsync Setup

Now it's time to test your rsync setup. Run the following command on your backup server (i.e. backup.example.com).

[root@backup www]# rsync -avzhe ssh tecmint@webserver.example.com:/var/www/ /var/www

Sample Output

tecmint@webserver.example.com's password:
receiving incremental file list
sent 128 bytes  received 32.67K bytes  5.96K bytes/sec
total size is 12.78M  speedup is 389.70

You can see that rsync is working fine and syncing data. I have used “/var/www” here; you can change the folder location according to your needs.

Step 4: Automate Sync with SSH Passwordless Login

Now that rsync is set up, it's time to schedule it with cron. Because we are running rsync over SSH, ssh will ask for authentication, and since cron has no way to supply a password, the job will fail. For cron to run smoothly, we need to set up passwordless SSH logins for rsync.

In this example, I am doing this as root so that file ownership is preserved as well; you can set it up for other users too.

First, generate a public/private key pair with the following command on the backup server (i.e. backup.example.com).

[root@backup]# ssh-keygen -t rsa -b 2048

When prompted, don't enter a passphrase; just press Enter to leave it empty, so that the rsync cron job won't need a password to sync data.

Sample Output

Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
9a:33:a9:5d:f4:e1:41:26:57:d0:9a:68:5b:37:9c:23 root@backup.example.com
The key's randomart image is:
+--[ RSA 2048]----+
| .o. |
| .. |
| ..++ . |
| o=E * |
| .Sooo o |
| =.o o |
| * . o |
| o + |
| . . |
+-----------------+
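If you'd rather script this step than answer the prompts, ssh-keygen accepts the empty passphrase and the file name on the command line. The sketch below writes the key pair into a temporary directory so it can be tried safely (an assumption for the demo); on the backup server you would point -f at /root/.ssh/id_rsa instead. If that file already exists, ssh-keygen asks before overwriting it.

```shell
#!/usr/bin/env bash
# Generate an RSA key pair with no passphrase and no interactive prompts.
set -euo pipefail

keydir=$(mktemp -d)   # demo location; on the backup server this would be /root/.ssh
trap 'rm -rf "$keydir"' EXIT

# -N "" : empty passphrase (the same as pressing Enter at both prompts)
# -f    : where to save the private key; the public key goes to <file>.pub
# -q    : quiet mode, suppresses the usual messages
ssh-keygen -t rsa -b 2048 -N "" -f "$keydir/id_rsa" -q

ls -l "$keydir"
```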

Now that the key pair has been generated, we need to share the public key with the main server, so that the main web server recognizes this backup machine and allows it to log in without asking for a password while syncing data.

[root@backup html]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@webserver.example.com

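ssh-copy-id will ask for root's password on webserver.example.com once. It then suggests trying to log in to the machine with “ssh 'root@webserver.example.com'” and checking .ssh/authorized_keys there to make sure no unexpected keys were added.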

[root@backup html]# ssh root@webserver.example.com

That's it for sharing keys. To learn more about passwordless SSH login, you can read our article on it.
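Before relying on cron, it helps to confirm that the login really is passwordless. A minimal sketch, assuming the OpenSSH client: with -o BatchMode=yes, ssh fails instead of prompting for a password, which is the same situation a cron job is in. It also fails if the main server's host key is not yet in known_hosts, which ssh-copy-id will normally have taken care of. The function name check_passwordless is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if key-based SSH login works without any prompt.
check_passwordless() {
  local target="$1"   # e.g. root@webserver.example.com
  # BatchMode=yes: never ask for a password or passphrase, just fail
  # ConnectTimeout=5: don't hang for long if the host is unreachable
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$target" true
}

# Usage on backup.example.com:
#   check_passwordless root@webserver.example.com && echo "passwordless login OK"
```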

Step 5: Schedule Cron To Automate Sync

Let's set up a cron job for this. Open the crontab file with the following command.

[root@backup ~]# crontab -e

This opens root's personal crontab (not the system-wide /etc/crontab) in your default editor. In this example, I'm writing a cron job that runs every 5 minutes to sync the data.

*/5 * * * * rsync -avzhe ssh root@webserver.example.com:/var/www/ /var/www/

The cron job syncs “/var/www/” from the main web server to the backup server every 5 minutes. You can change the schedule and folder location according to your needs. To get more creative with Rsync and Cron, you can check out our more detailed articles at: