DocBook Authoring and Publishing Suite: A Fully-Fledged Authoring and Content Management System for Documentation Projects

If you work in technical communication, relying on DocBook for your documentation projects has many advantages. However, over the past few years, software documentation projects have started to move from DocBook to AsciiDoc, a lightweight markup language, as their document format. This is partly due to the ever-growing complexity of IT solutions and the need to involve external experts (who do not have a technical writing background) in documentation efforts.

Such a move usually requires not only converting the DocBook sources to AsciiDoc, but also changing the project setup, switching the toolchain, and writing new stylesheets.

But we have good news: The new AsciiDoc support in the DocBook Authoring and Publishing Suite (DAPS) saves you from switching to a new toolchain and new stylesheets. Whether you convert an existing DAPS project from DocBook to AsciiDoc, or whether you have used DAPS before and are starting an AsciiDoc project from scratch, DAPS lets you keep using:

- the existing XSLT stylesheets (for converting DocBook into PDF, HTML, EPUB, etc.)

- the same DAPS commands as with DocBook projects

- the same project setup as with DocBook projects

Advantages of DocBook for Large Documentation Projects

DocBook is the ideal framework when it comes to publishing large documentation projects in different formats. The DocBook project consists of a language (DocBook XML) and a set of stylesheets to translate this language into different output formats such as HTML, PDF, and EPUB.

The stylesheets define the layout you want to apply when transforming the XML sources into output formats. You can use the stylesheets included with DocBook, or you can write your own XSLT stylesheets to ensure your corporate design is properly reflected.

DocBook XML is based on the eXtensible Markup Language (XML) and, like HTML, describes content semantically through elements. DocBook itself is defined as a schema that specifies the element names, their content, and where they may appear. The DocBook schema serves two purposes: guided editing and validation.

Guided editing is done via an XML editor (and there are many choices, from XML-focused editors such as oXygen to general programming editors such as Emacs). The editor reads in the DocBook schema and suggests which elements are allowed in the current context. This is similar to sorting objects into drawers according to their function: For example, you place a screwdriver and a hammer into a drawer labeled Tools, whereas you place teddy bears and building blocks into a drawer labeled Toys. Similarly, when writing documents with DocBook, you would “sort” the author’s name into an XML element called author, whereas you would “sort” a table into an XML element called table. Validation points out structural errors in an XML document, for example, a missing element.
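To make the idea of validation more concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how DAPS or an XML editor validates: real validation checks a document against the DocBook schema, whereas this sketch only checks well-formedness plus one hand-written rule (an article needs a title). The sample document and the function name `check_article` are made up for illustration.

```python
import xml.etree.ElementTree as ET

DOCBOOK_NS = "http://docbook.org/ns/docbook"  # DocBook 5 namespace

# Hypothetical sample document, used only for this illustration.
SAMPLE = """<article xmlns="http://docbook.org/ns/docbook" version="5.0">
  <info>
    <title>Example Guide</title>
    <author><personname>Jane Doe</personname></author>
  </info>
  <para>Hello, DocBook.</para>
</article>"""


def check_article(xml_text):
    """Return a list of problems; an empty list means the toy checks passed."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as err:
        return [f"not well-formed: {err}"]
    problems = []
    if root.tag != f"{{{DOCBOOK_NS}}}article":
        problems.append("root element is not a DocBook article")
    # Hand-written stand-in for a schema rule: a title must exist somewhere.
    if root.find(f".//{{{DOCBOOK_NS}}}title") is None:
        problems.append("missing element: title")
    return problems
```

Calling `check_article(SAMPLE)` returns an empty list, while a document without a title yields a "missing element" hint, which is the kind of feedback a schema-based validator gives in far more detail.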

Similar products often share a considerable number of features and differ only in details. If you want to generate multiple documentation variants from your XML files, you can do so with the help of conditional text (called profiling in DocBook). For example, you can profile certain parts of your XML texts for different (processor) architectures, operating systems, vendors or target groups.
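As a rough illustration of what profiling does, the following Python sketch drops every element whose `os` attribute does not list the selected value. It is a simplified stand-in for the profiling step of the DocBook stylesheets, not their implementation. The sample content and the function name `profile` are hypothetical, the sample omits the DocBook namespace for brevity, and the sketch assumes that multiple values in one attribute are separated by semicolons.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample section with operating-system-specific paragraphs.
SAMPLE = """<section>
  <title>Installation</title>
  <para os="linux">Install the package with your package manager.</para>
  <para os="windows">Run the installer.</para>
  <para os="linux;macos">Open a terminal.</para>
  <para>Restart the service.</para>
</section>"""


def profile(element, attribute, wanted):
    """Remove descendants whose profiling attribute excludes `wanted` (in place)."""
    for child in list(element):  # iterate over a copy, because we remove items
        values = child.get(attribute)
        if values is not None and wanted not in values.split(";"):
            element.remove(child)
        else:
            # Unprofiled or matching elements stay; check their children too.
            profile(child, attribute, wanted)
    return element


linux_variant = profile(ET.fromstring(SAMPLE), "os", "linux")
kept = [p.text for p in linux_variant.findall("para")]
```

After the call, `kept` holds the two Linux paragraphs and the unprofiled one; the Windows-only paragraph is gone. Running the same function with `"windows"` would produce the other variant from the same source.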

While learning DocBook XML might seem cumbersome at first sight, it comes with many unique advantages. Among others, it is ideal for the modular structures of complex documentation, it provides profiling, and you can generate many different output formats from the same XML sources.

Contribute to Documentation: AsciiDoc as Convenient Alternative

However, in the age of Cloud, “X as a Service” and “Y as a Platform”, technical projects are becoming more and more complex. As a consequence, documentation projects rely on contributions from external experts, such as engineers working on new technologies, consultants implementing product and solution stacks onsite at a customer’s, and many others. They don’t have a technical writing background, but they have to deliver specific content. And they don’t have the time to deep-dive into a markup language just to provide some documents.

For those projects and contributors, AsciiDoc offers a serious alternative. AsciiDoc belongs to the lightweight markup languages and provides a plain text documentation syntax and processor. It is not as modular and extensive as DocBook, but it is easy to understand and to use.

One of the biggest advantages of using AsciiDoc as a source for documentation is its seamless integration with GitHub. GitHub not only renders AsciiDoc sources, but also allows you to edit them directly in the Web interface. This fits nicely with GitHub's Web-based pull request workflow: You edit the document online, click a button, and someone else (usually the repository owner) can review and integrate the change. All you need is a free GitHub account (which many developers and technical experts already have). This lowers the barrier for external contributors.

DAPS Adds AsciiDoc Support

Transforming the XML sources to output formats such as PDF requires several steps, such as validating, filtering (profiling), converting images, and generating a .fo file. As the DocBook project does not provide a standard toolchain, custom solutions (written with make, ant or a scripting language) are necessary for publishing your DocBook documentation projects. That is a major hurdle for writers who would like to use DocBook. The DocBook Authoring and Publishing Suite, originally developed by me, with lots of contributions by Thomas Schraitle, fills this gap by providing a tool set for easy creation and publication of DocBook sources on Linux.

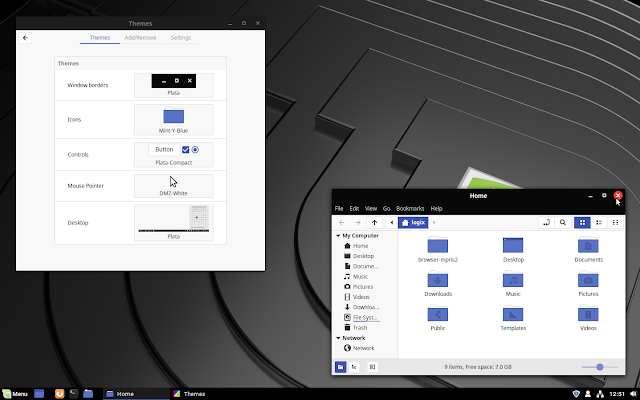

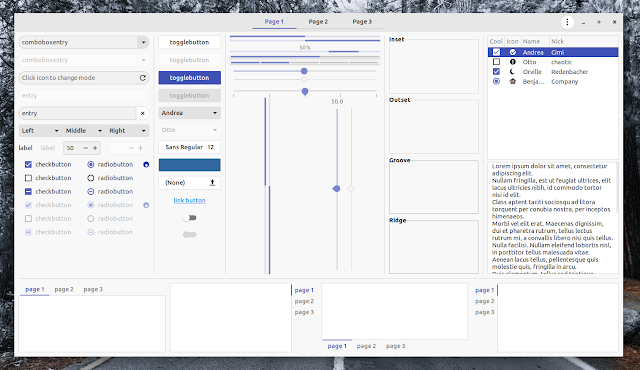

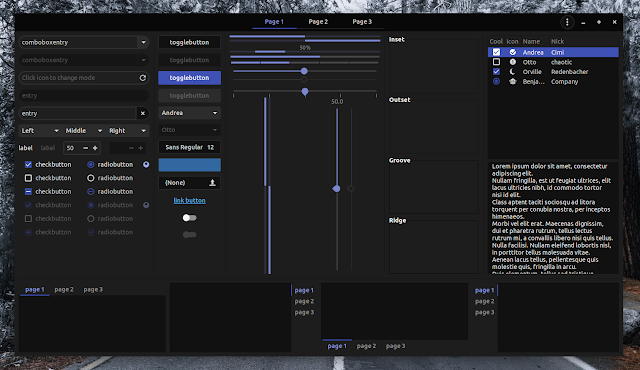

DAPS helps technical writers in the editing, translation and publishing process for documentation written in DocBook XML. DAPS is command-line software for Linux that lets you create HTML, PDF, EPUB, man pages, and other formats with a single command. It automatically takes care of validating and profiling your sources and of converting the images into the format best suited for the selected output format. DAPS also helps you manage the key tasks related to writing and editing, such as creating profiled source tarballs for translation or review. It supports authors by providing a link checker, a validator, a spellchecker, and editor macros. This makes it well suited for managing large documentation projects with multiple authors.

Starting with version 3.0, DAPS also supports AsciiDoc sources. AsciiDoc sources are converted to DocBook and then processed the same way as DocBook sources, so projects with AsciiDoc sources are handled just like regular DocBook projects. Therefore, the full range of output formats supported by DAPS is also available for AsciiDoc sources (HTML, single HTML, PDF, EPUB, plain text, etc.).
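The following Python sketch illustrates the general idea of turning AsciiDoc into DocBook before further processing. It is deliberately a toy and not the converter DAPS uses: it handles only a document title (a line starting with `= `) and plain paragraphs, and the function name `toy_asciidoc_to_docbook` is made up for this example. A real AsciiDoc processor covers the full syntax.

```python
from xml.sax.saxutils import escape


def toy_asciidoc_to_docbook(source):
    """Convert a document title and plain paragraphs only (illustration)."""
    # Blocks are separated by blank lines; empty blocks are skipped.
    blocks = [b.strip() for b in source.strip().split("\n\n") if b.strip()]
    parts = ['<article xmlns="http://docbook.org/ns/docbook" version="5.0">']
    for block in blocks:
        if block.startswith("= "):
            # "= Title" becomes the article title.
            parts.append(f"  <title>{escape(block[2:].strip())}</title>")
        else:
            # Any other block is treated as one paragraph; line breaks are joined.
            text = " ".join(line.strip() for line in block.splitlines())
            parts.append(f"  <para>{escape(text)}</para>")
    parts.append("</article>")
    return "\n".join(parts)
```

Once the content exists as DocBook XML, the same stylesheets and processing steps apply regardless of which format the author originally wrote in.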

DAPS is released as open source. It is dual-licensed, so you can choose between GPL 2.0 and GPL 3.0, and it can be installed and used on any modern Linux system. DAPS packages are available for SUSE Linux Enterprise and for openSUSE, and previous versions of DAPS have been successfully tested and used on Fedora, Ubuntu, Xubuntu, and Debian.

Together with a text editor and a version management system such as Git, DAPS can be used as a fully-fledged authoring and content management system for documentation projects based on DocBook and AsciiDoc.

Curious? Want to try it yourself now? Check out the latest DAPS version, the most recent changes and the documentation and share your feedback with us – just send an email to doc-team@suse.com.
