Linux Mint is a very popular Linux distribution for the desktop and, like most Linux distros, a free and open source operating system. Linux Mint is built on top of Ubuntu, which is itself based on Debian. Mint is community driven and provides an interactive user interface similar to that of Windows. Distinctive tools for customizing the desktop and menus, along with extensive multimedia support, are some of the key features of Linux Mint. Mint is easy to install, and DVD and Blu-ray playback options are available to users. Mint ships with several preinstalled applications such as LibreOffice, VLC Media Player, Firefox, Thunderbird, HexChat, GIMP, Pidgin, and Transmission, and third party apps can be installed easily with its Software Manager. Apart from the built-in ones, Mint also supports third party browser plugins, which are useful for extending the browser's functionality.

What Are Wallpapers and Why Are They Used?

A wallpaper is an image used for decorative purposes and set as the background of the desktop. It is the first thing displayed on the screen once your desktop or laptop has booted up. A wallpaper may seem trivial, but for people who spend hours at the computer, choosing a suitable one matters. A bold and colourful wallpaper can cheer you up, while a wallpaper based on a cool colour scheme can soothe your senses. Choosing an appropriate wallpaper is therefore worthwhile, as it plays a psychological role. Popular wallpaper subjects include nature, landscapes, cars, abstract art, flowers, celebrities, and supermodels, regardless of who the user is. Here is a collection of some beautiful HD wallpapers along with their sources:

Source : https://www.opendesktop.org/p/1144571/

Description: Kings and pawns on a chess board. The cool colour scheme and the glass pin effect are calming.

Source : https://www.opendesktop.org/p/1144987/

Description: The aesthetic beauty of the Swiss mountains makes a beautiful wallpaper.

Source : https://www.opendesktop.org/p/1144967/

Description: Creekside waters and beautifully balanced colours capturing scenic beauty.

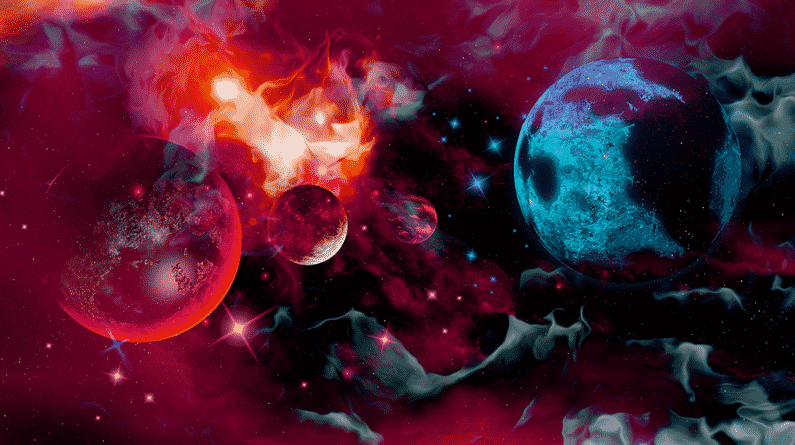

Source : https://www.opendesktop.org/p/1265881/

Description: A starry, colourful nebula that adds aesthetic appeal to your desktop. The colour scheme and editing effects just fall into place.

Source : https://winaero.com/blog/download-wallpapers-linux-mint-19/

Description: An artistic capture of a blue fence with just the right amount of focus and blur.

Source : https://winaero.com/blog/download-wallpapers-linux-mint-19/

Description: A high quality image of coffee beans for the coffee lovers out there.

Source : https://winaero.com/blog/download-wallpapers-linux-mint-19/

Description: An image of the sky at dawn with the right amount of shade and light. This HD image creates a soothing visual effect.

Source : https://www.opendesktop.org/p/1264112/

Description: An HD quality image of a ship's interior with a view of the ocean makes for an interesting wallpaper. This one is the best for a vintage effect.

Source : https://www.opendesktop.org/p/1266223/

Description: Water gushing and colliding with the stones, and the sun peeping out from the clouds, make the picture a perfect wallpaper.

Source : https://grepitout.com/linux-mint-wallpapers-download-free/

Description: The fern trees and the huge hill create a scenic appeal which makes for the best wallpaper.

Source : https://grepitout.com/linux-mint-wallpapers-download-free/

Description: The setting sun painting an orange hue in the sky and the rocky hillside create a soothing visual effect.

Source : https://grepitout.com/linux-mint-wallpapers-download-free/

Description: The mustard yellow of the pretty flowers leaves a cheerful impression and makes for an amazing wallpaper.

Source : https://www.pexels.com/photo/aerial-view-beach-beautiful-cliff-462162/

Description: The scenic beauty of the bucolic lands and sapphire blue water makes for a beautiful wallpaper.

Source : https://www.pexels.com/photo/bridge-clouds-cloudy-dark-clouds-556416/

Description: Autumn leaves on the bridge and beautiful mountains at the back make a picture perfect wallpaper.

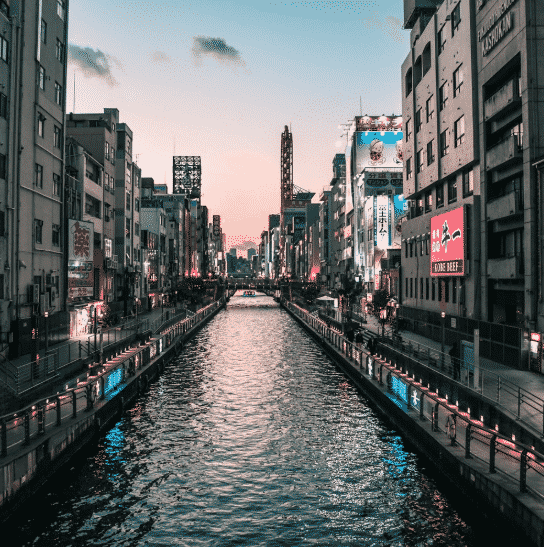

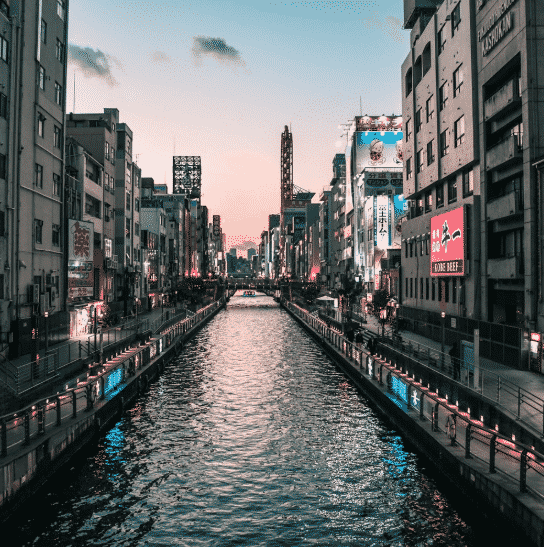

Source : https://www.pexels.com/photo/river-with-high-rise-buildings-on-the-sides-11302/

Description: The beautiful city of Venice and its architectural style captured in the most artistic way prove to be an amazing wallpaper.

Source : https://www.opendesktop.org/p/1245861/

Description: A vintage car and the fiery orange sky casting all its colours on the water below create an unnerving yet calming effect.

Source : https://www.pexels.com/photo/photo-of-man-riding-canoe-1144265/

Description: A calm colour scheme image of a small tunnel surrounded by buildings.

Source : https://www.opendesktop.org/p/1262309/

Description: A fascinating wallpaper choice consisting of pleasant skies and mountains.

Source : https://www.pexels.com/photo/cinque-torri-dolomites-grass-landscape-259705/

Description: Irregularly shaped rocks scattered beneath a striking sky make for an amazing wallpaper.

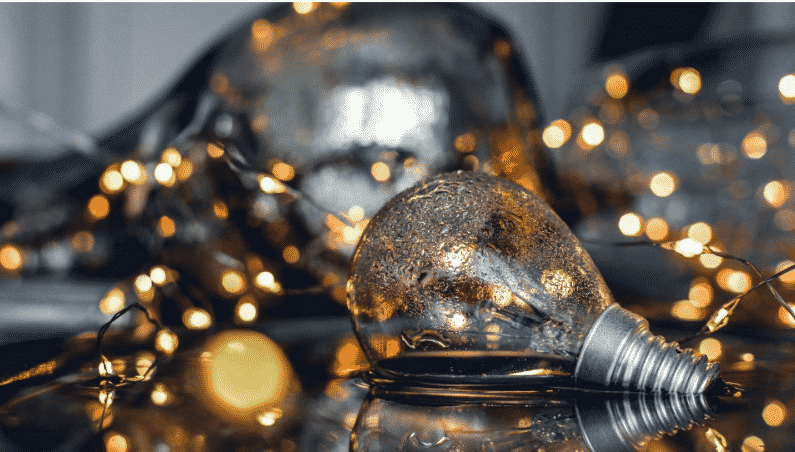

Source : https://www.opendesktop.org/p/1260264/

Description: Bulbs and bokeh lights together create an engaging effect for a creative wallpaper.

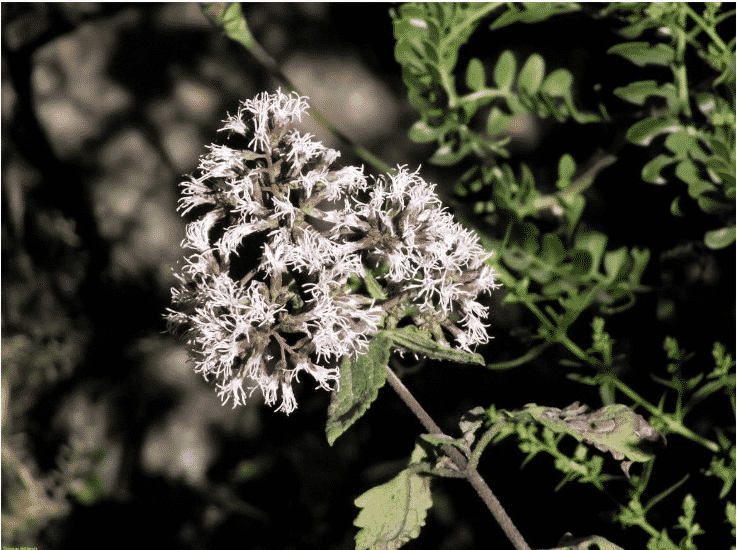

Source : https://www.opendesktop.org/p/1144903/

Description: White flowers with partial blur create a soothing and calming visual sense.

Steps to Install/Use The Wallpapers in Linux Mint 19

The following steps demonstrate how to install the aforesaid wallpapers on your computer. To download a wallpaper, navigate to the source website stated under it and click the download button. Make sure to pick a wallpaper whose resolution matches the monitor on which it will be used. Larger images tend to consume more video memory, whereas smaller ones get stretched, which makes the image blurry.
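Before going through the steps, the resolution advice can be checked from a terminal. The following is a minimal sketch, assuming an X session where the xrandr tool is available; the function name active_mode is made up for this illustration. It relies on xrandr marking the mode currently in use with an asterisk.

```shell
#!/bin/sh
# Read xrandr-style output on stdin and print the active mode, e.g. 1920x1080.
# xrandr marks the mode currently in use with an asterisk (*).
# active_mode is an illustrative helper name, not a standard command.
active_mode() {
    awk '/\*/ { print $1; exit }'
}

# Usage on a running X session:
#   xrandr | active_mode
#
# For PNG files, the file command reports the image dimensions
# (wallpaper.png is a hypothetical file name):
#   file wallpaper.png
```

If the two sizes match, the wallpaper will be shown without scaling.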

- Right click on a blank spot on the desktop and a context menu will appear. Click on Change Desktop Background in the menu.

- A System Settings application window will be launched. Select the background tab in it.

- In the left pane, click on Background. You will see a list of all the background images available in Linux Mint.

- Go through all the images, until you find an appropriate one. Left-click on the image to select it.

- You can also use images from your Pictures folder as a wallpaper. Simply select Pictures in the left pane. This displays everything in the Pictures folder, and you can select the image of your choice as the background.

- You can also add another location by clicking on the plus button in the left pane. Navigate until you find the folder and select it.
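The same result can be reached from a terminal. The sketch below assumes the Cinnamon edition of Linux Mint, where the background is stored in the org.cinnamon.desktop.background settings schema; other editions (MATE, Xfce) use different settings. The helper names to_file_uri and set_wallpaper are made up for this example.

```shell
#!/bin/sh
# Build a file:// URI from an absolute path.
# No percent-encoding is done, so this assumes a path without
# spaces or special characters.
to_file_uri() {
    printf 'file://%s\n' "$1"
}

# Set the desktop background (assumption: Cinnamon desktop).
set_wallpaper() {
    gsettings set org.cinnamon.desktop.background picture-uri "$(to_file_uri "$1")"
}

# Usage (hypothetical path):
#   set_wallpaper "$HOME/Pictures/wallpaper.png"
```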

Using Default Wallpapers

Wallpapers are available within Linux Mint as well. In order to use the default wallpapers provided, you need to install these wallpapers from the Launchpad. This is useful in case you want to revert to the default wallpapers from a newer wallpaper. You can do so in the following way:

Open the terminal and type the following command:

sudo apt-get install mint-backgrounds-*

This installs all the available wallpaper packages. The * is a wildcard that matches every package whose name begins with mint-backgrounds-.

For a specific package, give its full name instead of the asterisk. For example:

sudo apt-get install mint-backgrounds-maya
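To see which wallpaper packages exist before installing any of them, the package index can be searched first. This is a small sketch using apt-cache; the function names are illustrative, and the list you get depends on the repositories configured on your system.

```shell
#!/bin/sh
# apt-cache search prints lines of the form "name - description".
# Keep only the package name (first field) of each line read from stdin.
package_names() {
    awk '{ print $1 }'
}

# List the wallpaper packages offered by the configured repositories.
# The pattern is quoted so the shell passes it to apt-cache unchanged.
available_background_packages() {
    apt-cache search --names-only '^mint-backgrounds-' | package_names
}

# Usage:
#   available_background_packages
```

Quoting matters for the install command too: writing 'mint-backgrounds-*' in quotes keeps the shell from expanding the asterisk against files in the current directory.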

All the installed packages will be stored in a folder named as

Images can also be manually dragged and dropped into “/usr/share/backgrounds” and then used through the “Backgrounds” window.

In this way, you can install all the predefined wallpaper packages offered by Linux Mint.

Another way to install wallpapers is using PPA

A PPA (Personal Package Archive) is a repository that contains a small number of packages. PPAs are generally hosted by individuals, so they are often kept up to date, but the risk can be higher because they come from individuals rather than from the official repositories.

Steps to install using a PPA are as follows:

1. In the terminal, type the following command:

sudo add-apt-repository ppa:___

2. Copy the PPA name and paste it after the colon in the terminal.

3. Update the package list if necessary, then install the package:

sudo apt-get install package_name
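The steps above can be put together as one short script. This is only a sketch: the run and install_from_ppa helpers are made up for this example, and the PPA and package names must be supplied by you. Setting DRY_RUN=1 prints the commands instead of running them, which is a safe way to check what would happen.

```shell
#!/bin/sh
# Print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# install_from_ppa PPA PACKAGE
# Adds the PPA, refreshes the package list, then installs the package.
# Each step runs only if the previous one succeeded.
install_from_ppa() {
    ppa=$1
    package=$2
    run sudo add-apt-repository "$ppa" &&
    run sudo apt-get update &&
    run sudo apt-get install "$package"
}

# Preview with placeholder values (both names are hypothetical):
#   DRY_RUN=1 install_from_ppa ppa:example/wallpapers example-backgrounds
```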

The installed package can be inspected in the Synaptic Package Manager. Click on Properties -> Installed Files to view the files it installed in the /usr/share/backgrounds folder.
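The same file list is available from a terminal through dpkg. The sketch below filters it down to the wallpaper folder; background_files is an illustrative helper name, and package_name stands in for whichever package you installed.

```shell
#!/bin/sh
# Keep only the paths under /usr/share/backgrounds from a file list on stdin.
background_files() {
    grep '^/usr/share/backgrounds/'
}

# Usage (dpkg -L lists every file a package installed):
#   dpkg -L package_name | background_files
```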

Wallpaper Changer

You can assign random wallpapers using the Variety wallpaper changer. It rotates images at a defined interval or on demand. This is a good way to cycle through the downloaded wallpapers automatically from time to time.
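To illustrate the idea of rotating wallpapers, here is a rough sketch in shell. It is not how Variety itself works, only the basic principle: pick a random image from a folder and set it as the background. It assumes the Cinnamon desktop (the org.cinnamon.desktop.background key), GNU find and shuf, and an absolute folder path; the function names and the folder in the usage comment are hypothetical.

```shell
#!/bin/sh
# Print one randomly chosen JPEG or PNG file from the given directory.
pick_random_image() {
    find "$1" -type f \( -iname '*.jpg' -o -iname '*.jpeg' -o -iname '*.png' \) | shuf -n 1
}

# Set a random image from the directory as the background.
# Assumption: Cinnamon desktop; pass an absolute directory path so the
# resulting file:// URI is valid.
set_random_wallpaper() {
    image=$(pick_random_image "$1")
    [ -n "$image" ] &&
        gsettings set org.cinnamon.desktop.background picture-uri "file://$image"
}

# Usage: change the wallpaper every 10 minutes until interrupted
#   while set_random_wallpaper "$HOME/Pictures/wallpapers"; do sleep 600; done
```

A dedicated tool such as Variety adds much more on top of this, such as a preferences window and on-demand switching.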

The following commands need to be typed in the terminal to install the Variety wallpaper changer:

1. Add the Variety PPA in the terminal.

sudo add-apt-repository ppa:peterlevi/ppa

2. Update the package list by running sudo apt-get update.

3. Install Variety

sudo apt-get install variety

A Variety window will appear which allows you to enter your preferences, and then you are good to go.

These are the different ways in which wallpapers can be installed and used in Linux Mint 19. Wallpapers can be downloaded from various sources. The ones above are just examples; many more can be found by searching the web. High definition images in PNG format with a large size and resolution make eye-catching wallpapers.
