The Linux distribution Debian GNU/Linux [1] is available as a range of CD/DVD ISO images. These images are prepared to suit different needs and use cases: desktop environments, servers, or mobile devices. At present, the following image variants are offered on the website of the Debian project and its mirror network:

- a full set of CD/DVD images that contains all the available packages [2]

- a single CD/DVD image with a selection of packages tailored to a specific desktop environment, such as GNOME [3] or XFCE [4], or to the command line only

- a smaller CD image for network-based installation [5]

- a tiny CD image for network-based installation [5]

- a live CD/DVD [6] in order to test Debian GNU/Linux before installing it

- a cloud image [7]

Which image file to download depends on your internet connection (bandwidth), on the combination of packages that fits your needs, and on your level of experience in setting up and maintaining an installation. All images are available from the mirror network behind the website of the Debian project [8].

What is Debian Netinstall?

As briefly mentioned above, a Netinstall image is a smaller CD/DVD image with a size between 150 MB and 300 MB. The actual image size depends on the processor architecture of your system. The image contains only the setup routines (called Debian Installer) for both text-only and graphical installation, plus the software packages needed to set up a very basic but working Debian GNU/Linux installation. In contrast, the tiny image, with a size of about 120 MB, contains only the Debian Installer and the network configuration.

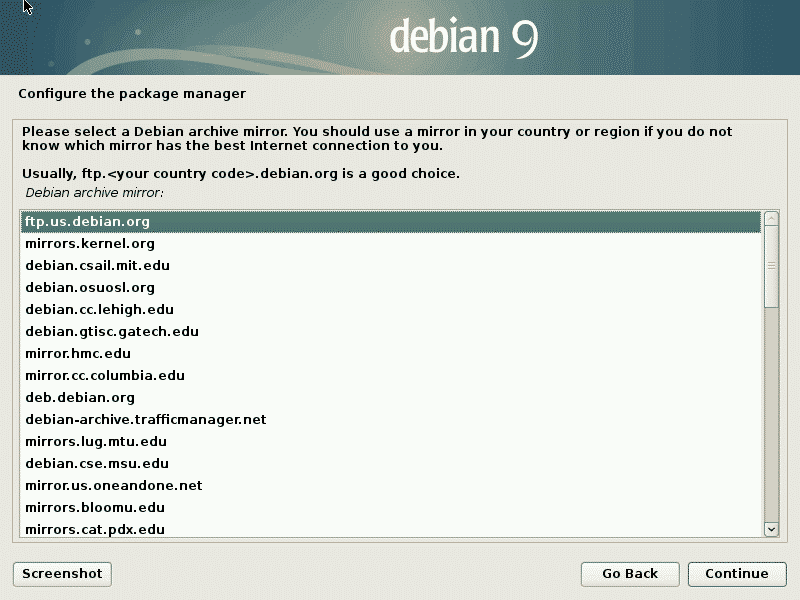

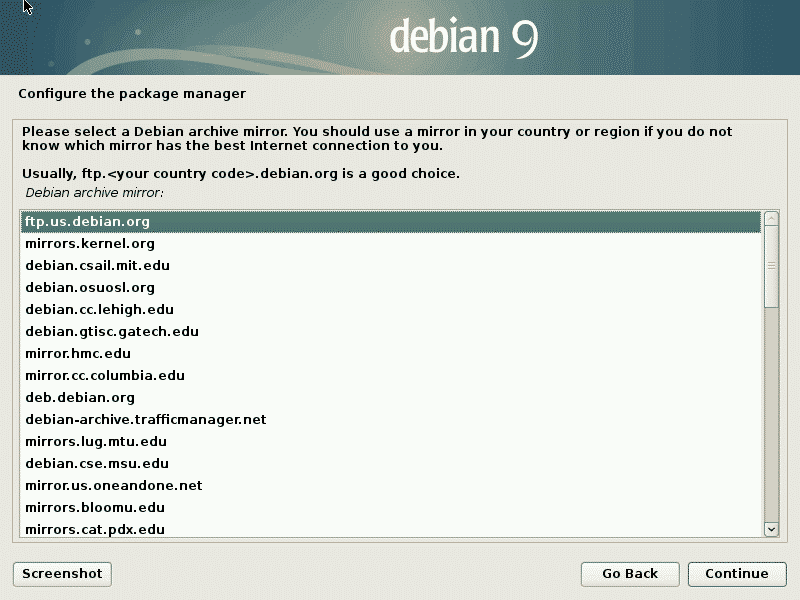

During the setup, the Debian Installer will ask you which Apt repository you would like to use. An Apt repository is a place that provides the Debian software packages. The package management tools retrieve the selected software packages from this location and install them locally on your system. In this case the Apt repository is not the CD/DVD but a so-called package mirror. This package mirror is a server connected to the internet, which is why internet access is required while setting up your system. Installing new software or updating existing software packages has the same technical requirement, because the packages are retrieved from the same Apt repository.

Choosing the desired package mirror in Debian GNU/Linux 9

Apt Repositories

The address of the chosen Apt repository is stored in the file /etc/apt/sources.list. This is a plain text file that contains several entries. For the package mirror chosen above it looks as follows:

deb http://ftp.us.debian.org/debian/ stretch main contrib

deb-src http://ftp.us.debian.org/debian/ stretch main contrib

deb http://security.debian.org/ stretch/updates main contrib

deb-src http://security.debian.org/ stretch/updates main contrib

# stretch-updates, previously known as ‘volatile’

deb http://ftp.us.debian.org/debian/ stretch-updates main contrib

The first group of lines refers to regular software packages, the second group to the corresponding security updates, and the third group to software updates for these packages. Each line refers either to Debian packages (a line starting with deb) or to Debian source packages (a line starting with deb-src). Source packages are of interest if you would like to download the source code of the software you use.

The Debian GNU/Linux release is specified either by the alias name of the release (here it is Stretch, from Toy Story [9]) or by its release state, for example stable, testing, or unstable. At the end of each line, main and contrib reflect the chosen package categories. The keyword main refers to free software, contrib refers to free software that depends on non-free software, and non-free indicates software packages that do not meet the Debian Free Software Guidelines (DFSG) [10].
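To make the structure of an entry more concrete, the following minimal sketch splits each active line into its parts: entry type, mirror address, release, and package categories. The function name describe_sources is made up for this illustration, and the sketch assumes the classic one-line format shown above, without any options in square brackets.

```shell
#!/bin/sh
# Hypothetical helper: read a sources.list-style file from stdin and print
# "type|mirror|release|categories" for every deb or deb-src entry.
describe_sources() {
    awk '
        $1 == "deb" || $1 == "deb-src" {
            # Fields 4 and up are the package categories (main, contrib, ...).
            cats = $4
            for (i = 5; i <= NF; i++) cats = cats "," $i
            printf "%s|%s|%s|%s\n", $1, $2, $3, cats
        }
    '
}

# Sample input: two entries and the comment line from the listing above.
describe_sources <<'EOF'
deb http://security.debian.org/ stretch/updates main contrib
# stretch-updates, previously known as volatile
deb http://ftp.us.debian.org/debian/ stretch-updates main contrib
EOF
```

On a real system you could feed it the actual file, for example with "describe_sources < /etc/apt/sources.list". Comment lines are skipped because their first field is neither deb nor deb-src.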

Finding the right package mirror

So far, our setup is based only on static entries that are not intended to change. This works well for computers that mostly stay in the same place throughout their life.

For a Debian network installation, the right package mirror plays an important role. When choosing a package mirror, take the following criteria into account:

- your network connection

- your geographic location

- the desired availability of the package mirror

- reliability

Experience from managing Linux systems over the last decade shows that choosing a primary package mirror in the same country as the system works best. Such a package mirror should be nearby in network terms, and provide software packages for all the architectures we need. Reliability refers to the person, institute, or company that is responsible for the package mirror we retrieve software from.

A more dynamic setup can be helpful for mobile devices such as laptops and notebooks. This is where the two commands netselect [11] and netselect-apt [12] come into play. netselect expects a list of package mirrors, and evaluates them regarding availability, ping time, and packet loss between the package mirror and your system. The example below demonstrates this for five different mirrors. The last line of the output contains the result: the recommended package mirror is ftp.debian.org.

# netselect -vv ftp.debian.org http.us.debian.org ftp.at.debian.org download.unesp.br ftp.debian.org.br

netselect: unknown host ftp.debian.org.br

Running netselect to choose 1 out of 8 addresses.

………………………………………………………

128.61.240.89 141 ms 8 hops 88% ok ( 8/ 9) [ 284]

ftp.debian.org 41 ms 8 hops 100% ok (10/10) [ 73]

128.30.2.36 118 ms 19 hops 100% ok (10/10) [ 342]

64.50.233.100 112 ms 14 hops 66% ok ( 2/ 3) [ 403]

64.50.236.52 133 ms 15 hops 100% ok (10/10) [ 332]

ftp.at.debian.org 47 ms 13 hops 100% ok (10/10) [ 108]

download.unesp.br 314 ms 10 hops 75% ok ( 3/ 4) [ 836]

ftp.debian.org.br 9999 ms 30 hops 0% ok

73 ftp.debian.org

#
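The number in square brackets at the end of each result line is the score netselect assigns to a mirror, and the lowest score wins. As a rough sketch of how to read such a table, the hypothetical helper rank_mirrors below extracts host and score from verbose result lines and sorts them. It assumes the line layout shown in the output above and is not part of netselect itself.

```shell
#!/bin/sh
# Hypothetical helper: read verbose netselect result lines from stdin and
# print "score host" pairs, best (lowest) score first.
rank_mirrors() {
    # Keep only lines that end in a bracketed score such as "[ 73]";
    # unreachable hosts have no score and are dropped.
    sed -n 's/^ *\([^ ]*\) .*\[ *\([0-9][0-9]*\)] *$/\2 \1/p' | sort -n
}

# Sample lines copied from the output above.
rank_mirrors <<'EOF'
ftp.debian.org 41 ms 8 hops 100% ok (10/10) [ 73]
ftp.at.debian.org 47 ms 13 hops 100% ok (10/10) [ 108]
download.unesp.br 314 ms 10 hops 75% ok ( 3/ 4) [ 836]
ftp.debian.org.br 9999 ms 30 hops 0% ok
EOF
```

For the sample lines, the first line printed is "73 ftp.debian.org", which matches the recommendation in the last line of the netselect output.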

In contrast, netselect-apt uses netselect to find the best package mirror for your location. netselect-apt accepts the country (-c), the number of package mirrors (-t), the architecture (-a), and the release as a trailing argument such as stable; the option -n additionally includes the non-free section. The example below discovers the top five package mirrors in France that offer stable packages for the amd64 architecture:

# netselect-apt -c france -t 5 -a amd64 -n stable

Using distribution stable.

Retrieving the list of mirrors from www.debian.org…

--2019-01-09 11:47:21-- http://www.debian.org/mirror/mirrors_full

Resolving www.debian.org (www.debian.org)… 130.89.148.14, 5.153.231.4, 2001:41c8:1000:21::21:4, …

Connecting to www.debian.org (www.debian.org)|130.89.148.14|:80… connected.

HTTP request sent, awaiting response… 302 Found

Location: https://www.debian.org/mirror/mirrors_full [following]

--2019-01-09 11:47:22-- https://www.debian.org/mirror/mirrors_full

Connecting to www.debian.org (www.debian.org)|130.89.148.14|:443… connected.

HTTP request sent, awaiting response… 200 OK

Length: 189770 (185K) [text/html]

Saving to: '/tmp/netselect-apt.Kp2SNk'

/tmp/netselect-apt.Kp2SNk 100%[==========================================>] 185.32K 1.19MB/s in 0.2s

2019-01-09 11:47:22 (1.19 MB/s) - '/tmp/netselect-apt.Kp2SNk' saved [189770/189770]

Choosing a main Debian mirror using netselect.

(will filter only for mirrors in country france)

netselect: 19 (19 active) nameserver request(s)…

Duplicate address 212.27.32.66 (http://debian.proxad.net/debian/, http://ftp.fr.debian.org/debian/); keeping only under first name.

Running netselect to choose 5 out of 18 addresses.

………………………………………………………………………….

The fastest 5 servers seem to be:

http://debian.proxad.net/debian/

http://debian.mirror.ate.info/

http://debian.mirrors.ovh.net/debian/

http://ftp.rezopole.net/debian/

http://mirror.plusserver.com/debian/debian/

Of the hosts tested we choose the fastest valid for HTTP:

http://debian.proxad.net/debian/

Writing sources.list.

Done.

#

The output is a file called sources.list that is stored in the directory you run the command from. Using the additional option “-o filename” you specify an output file with a name and path of your choice. Either way, you can use the new file directly as a replacement for your original file /etc/apt/sources.list.
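Before overwriting /etc/apt/sources.list it is worth keeping a copy of the previous version. The following sketch shows one cautious way to do the replacement. The function name replace_sources and the .bak suffix are assumptions made for this example, and the demonstration runs on temporary files so that it can be tried without root privileges.

```shell
#!/bin/sh
# Hypothetical helper: replace an Apt source list with a newly generated one,
# keeping a backup copy of the previous version next to it.
replace_sources() {
    new=$1
    target=$2
    # Refuse to install a file that has no "deb" entry at all.
    if ! grep -q '^deb ' "$new"; then
        echo "no deb entry found in $new" >&2
        return 1
    fi
    # Keep the old list so the change can be undone.
    if [ -f "$target" ]; then
        cp -p "$target" "$target.bak"
    fi
    cp "$new" "$target"
}

# Demonstration on temporary files instead of /etc/apt/sources.list.
dir=$(mktemp -d)
echo 'deb http://ftp.us.debian.org/debian/ stretch main contrib' > "$dir/sources.list"
echo 'deb http://debian.proxad.net/debian/ stable main contrib' > "$dir/new.list"
replace_sources "$dir/new.list" "$dir/sources.list"
cat "$dir/sources.list"
rm -rf "$dir"
```

On a real system the call would be something like "replace_sources ./sources.list /etc/apt/sources.list", run with administrative privileges and followed by "apt update".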

Software Strategy

Setting up a system from a smaller installation image gives you the opportunity to decide which software to use. We recommend installing only what you need on your system. The fewer software packages are installed, the fewer updates have to be done. So far, this strategy has worked well for servers, desktop systems, routers (specialized devices), and mobile devices.

Keeping your system up-to-date

Maintaining a system means taking care of your setup and keeping it up-to-date. Install security patches and software updates regularly with the help of a package manager like apt.

The next step is often forgotten: tidying up your system. This includes removing unused software packages, and cleaning the package cache that is located in /var/cache/apt/archives. For the first task the commands “apt autoremove”, “deborphan” [13] and “debfoster” [14] help. They detect unused packages, and let you specify which software shall be kept. Most of the removed packages belong to the categories library (libs and oldlibs) or development (libdevel). The following example demonstrates this for the tool deborphan. The output columns represent the package size, the package category, the package name, and the package priority.

$ deborphan -Pzs

20 main/oldlibs mktemp extra

132 main/libs liblwres40 standard

172 main/libs libdvd0 optional

…

$
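To get a feeling for how much space the orphaned packages occupy, the size column can be added up. The helper orphan_total below is a hypothetical name chosen for this sketch, and it assumes the four-column layout shown in the example output above.

```shell
#!/bin/sh
# Hypothetical helper: sum the first column (package size) of
# deborphan -Pzs style output read from stdin.
orphan_total() {
    awk '{ total += $1 } END { print total + 0 }'
}

# Sample lines copied from the output above.
orphan_total <<'EOF'
20 main/oldlibs mktemp extra
132 main/libs liblwres40 standard
172 main/libs libdvd0 optional
EOF
```

For the three sample lines this prints 324. On a real system the helper would be used as "deborphan -Pzs | orphan_total".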

In order to remove the orphaned packages you can use the following command:

# apt remove $(deborphan)

…

#

apt will still ask you to confirm before it removes the software packages. Next, clean the package cache. You may either remove the files by hand using “rm /var/cache/apt/archives/*.deb”, or let the package manager do it using “apt clean” or “apt-get clean”.

Dealing with Release Changes

In contrast to other Linux distributions, Debian GNU/Linux does not have a fixed release cycle. A new release is available about every two years. Version 10 is expected to be published in mid-2019.

Updating your existing setup is comparatively easy. Take the following thoughts into account and follow these steps:

- Read the documentation for the release change, the so-called Release Notes. They are available from the website of the Debian project, and also part of the image you have chosen before.

- Have your credentials for administrative actions at hand.

- Open a terminal, and run the next steps in a terminal multiplexer like screen [15] or tmux [16].

- Back up the most important data of your system, and verify that the backup is complete.

- Update your current package list using “apt-get update” or “apt update”.

- Check your system for orphans and unused software packages using deborphan, or “apt-get autoremove”. Unused packages do not need to be updated.

- Run the command “apt-get upgrade” to install the latest software updates.

- Edit the file /etc/apt/sources.list, and set the new distribution name, for example from Stretch to Buster.

- Update the package list using “apt update” or “apt-get update”.

- Start the release change by running “apt-get dist-upgrade”. All existing packages are updated.

The last step may take a while, but leads to a new Debian GNU/Linux system. It might be helpful to reboot the system once in order to start with a new Linux kernel.
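The edit of /etc/apt/sources.list described in the list above can be sketched as a simple text substitution. The function name switch_release is an assumption made for this example. It prints the modified list to standard output instead of changing the file, so the result can be reviewed first, and the demonstration works on a temporary file.

```shell
#!/bin/sh
# Hypothetical helper: print the given source list with the release name
# replaced, covering "stretch", "stretch/updates" and "stretch-updates".
switch_release() {
    old=$1
    new=$2
    file=$3
    # Match the release name only where it starts a field and is followed
    # by a space, a slash, or a hyphen; mirror addresses stay untouched.
    sed "s| $old\([ /-]\)| $new\1|" "$file"
}

# Demonstration on a temporary copy of the entries shown earlier.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
deb http://ftp.us.debian.org/debian/ stretch main contrib
deb http://security.debian.org/ stretch/updates main contrib
deb http://ftp.us.debian.org/debian/ stretch-updates main contrib
EOF
switch_release stretch buster "$tmp"
rm -f "$tmp"
```

After checking the output, it can be written to a new file and put in place of the original list. Always compare the result with the Release Notes, because the layout of some entries may differ between releases.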

Conclusion

Setting up a network-based installation, and keeping it alive is simple. Follow the recommendations we gave you in this article, and using your Linux system will be fun.

Links and References

* [1] Debian GNU/Linux, http://debian.org/

* [2] Debian on CDs/DVDs, https://www.debian.org/CD/index.en.html

* [3] GNOME, https://www.gnome.org/

* [4] XFCE, https://xfce.org/

* [5] Installing Debian via the Internet, https://www.debian.org/distrib/netinst.en.html

* [6] Debian Live install images, https://www.debian.org/CD/live/index.en.html

* [7] Debian Official Cloud Images, https://cloud.debian.org/images/cloud/

* [8] Debian mirror network, https://cdimage.debian.org/

* [9] Stretch at the Pixar Wiki, http://pixar.wikia.com/wiki/Stretch

* [10] Debian Free Software Guidelines (DFSG), https://wiki.debian.org/DFSGLicenses

* [11] netselect Debian package, https://packages.debian.org/stretch/netselect

* [12] netselect-apt Debian package, https://packages.debian.org/stretch/netselect-apt

* [13] deborphan Debian package, https://packages.debian.org/stretch/deborphan

* [14] debfoster Debian package, https://packages.debian.org/stretch/debfoster

* [15] screen, https://www.gnu.org/software/screen/

* [16] tmux, https://github.com/tmux/tmux/wiki
