This is the penultimate article in a series entitled Securing Kubernetes for Cloud Native Applications, and follows our discussion about securing the important components of a cluster, such as the API server and Kubelet. In this article, we’re going to address the application of best-practice security controls, using some of the cluster’s inherent security mechanisms. If Kubernetes can be likened to a kernel, then we’re about to discuss securing user space – the layer that sits above the kernel – where our workloads run. Let’s start with authentication.

Authentication

We touched on authenticating access to the Kubernetes API server in the last article, mainly in terms of configuring it to disable anonymous authentication. A number of different authentication schemes are available in Kubernetes, so let’s delve into this a little deeper.

X.509 Certificates

X.509 certificates are a required ingredient for encrypting any client communication with the API server using TLS. X.509 certificates can also be used as one of the methods for authenticating with the API server, where a client’s identity is provided in the attributes of the certificate – the Common Name provides the username, whilst a variable number of Organization attributes provide the groups that the identity belongs to.

X.509 certificates are a tried and tested method for authentication, but there are a couple of limitations that apply in the context of Kubernetes:

- If an identity is no longer valid (maybe an individual has left your organization), the certificate associated with that identity may need to be revoked. There is currently no way in Kubernetes to query the validity of certificates with a Certificate Revocation List (CRL), or by using an Online Certificate Status Protocol (OCSP) responder. There are a few approaches to get around this (for example, recreate the CA and reissue every client certificate), or it might be considered enough to rely on the authorization step, to deny access for a regular user already authenticated with a revoked certificate. This means we should be careful about the choice of groups in the Organization attribute of certificates. If a certificate we’re not able to revoke contains a group (for example, system:masters) that has an associated default binding that can’t be removed, then we can’t rely on the authorization step to prevent access.

- If there are a large number of identities to manage, the task of issuing and rotating certificates becomes onerous. In such circumstances – unless there is a degree of automation involved – the overhead may become prohibitive.

OpenID Connect

Another increasingly popular method for client authentication is to make use of the built-in Kubernetes support for OpenID Connect (OIDC), with authentication provided by an external identity provider. OpenID Connect is an authentication layer that sits on top of OAuth 2.0, and uses JSON Web Tokens (JWT) to encode the identity of a user and their claims. The ID token provided by the identity provider – stored as part of the user’s kubeconfig – is provided as a bearer token each time the user attempts an API request. As ID tokens can’t be revoked, they tend to have a short lifespan, which means they can only be used during the period of their validity for authentication. Usually, the user will also be issued a refresh token – which can be saved together with the ID token – and used for obtaining a new ID token on its expiry.

Just as we can embody the username and its associated groups as attributes of an X.509 certificate, we can do exactly the same with the JWT ID token. These attributes are associated with the identity’s claims embodied in the token, and are mapped using config options applied to the kube-apiserver.
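As a rough sketch of that mapping, the fragment below shows how the relevant flags might appear in the command of a kube-apiserver static pod manifest. The flag names are those provided by kube-apiserver, but the issuer URL, client ID, and claim names are placeholder assumptions that depend entirely on how your identity provider is set up.

```yaml
# Fragment of a kube-apiserver static pod manifest; all other flags omitted.
# Every value below is a hypothetical placeholder.
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    # The identity provider that issues and signs the ID tokens
    - --oidc-issuer-url=https://idp.example.com
    # The client ID that the ID tokens must have been issued for
    - --oidc-client-id=kubernetes
    # The JWT claim used as the Kubernetes username
    - --oidc-username-claim=email
    # The JWT claim used as the list of groups the user belongs to
    - --oidc-groups-claim=groups
```

With a configuration along these lines, a token carrying an email claim and a groups claim would be mapped to a username and a set of groups, which can then be referenced as subjects during authorization.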

Kubernetes can be configured to use any one of several popular OIDC identity providers, such as the Google Identity Platform and Azure Active Directory. But what happens if your organization uses a directory service, such as LDAP, for holding user identities? One OIDC-based solution that enables authentication against LDAP, is the open source Dex identity service, which acts as an authentication intermediary to numerous types of identity provider via ‘connectors’. In addition to LDAP, Dex also provides connectors for GitHub, GitLab, and Microsoft accounts using OAuth, amongst others.

Authorization

We shouldn’t rely on authentication alone to control access to the API server – ‘one size fits all’, is too coarse when it comes to controlling access to the resources that make up the cluster. For this reason, Kubernetes provides the means to subject authenticated API requests to authorization scrutiny, based on the authorization modes configured on the API server. We discussed configuring API server authorization modes in the previous article.

Whilst it’s possible to defer authorization to an external authorization mechanism, the de facto standard authorization mode for Kubernetes is the built-in Role-Based Access Control (RBAC) module. As most pre-packaged application manifests come with RBAC roles and bindings pre-defined, RBAC should be the preferred method for authorizing API requests, unless there is a very good reason for using an alternative method.

RBAC is implemented by defining roles, which are then bound to subjects using ‘role bindings’. Let’s provide some clarification on these terms.

Roles – define what actions can be performed on which objects. The role can either be restricted to a specific namespace, in which case it’s defined in a Role object, or it can be a cluster-wide role, which is defined in a ClusterRole object. In the following example cluster-wide role, a subject bound to the role has the ability to perform get and list operations on the ‘pods’ and ‘pods/log’ resource objects – no more, no less:

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: pod-and-pod-logs-reader
    rules:
    - apiGroups: [""]
      resources: ["pods", "pods/log"]
      verbs: ["get", "list"]

If this were a namespaced role, then the object kind would be Role instead of a ClusterRole, and there would be a namespace key with an associated value, in the metadata section.

Role Bindings – bind a role to a set of subjects. A RoleBinding object binds a Role or ClusterRole to subjects in the scope of a specific namespace, whereas a ClusterRoleBinding binds a ClusterRole to subjects on a cluster-wide basis.

Subjects – are users and groups (as provided by a suitable authentication scheme), and Service Accounts, which are API objects that provide an identity for pods that require access to the Kubernetes API.
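To tie the three terms together, here is a minimal sketch of a RoleBinding that grants the pod-and-pod-logs-reader ClusterRole from the earlier example to a single user, but only within one namespace. The binding name, the namespace (development), and the username (jane) are hypothetical and purely for illustration.

```yaml
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods-and-logs      # hypothetical binding name
  namespace: development        # hypothetical namespace; the grant applies here only
subjects:
# The username as presented by the authentication scheme (hypothetical)
- kind: User
  name: jane
  apiGroup: rbac.authorization.k8s.io
roleRef:
  # A RoleBinding may reference a ClusterRole; the permissions are
  # still limited to the namespace of the binding
  kind: ClusterRole
  name: pod-and-pod-logs-reader
  apiGroup: rbac.authorization.k8s.io
```

Had this been a ClusterRoleBinding instead, the same user would be able to get and list pods and their logs in every namespace in the cluster.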

When thinking about the level of access that should be defined in a role, always be guided by the principle of least privilege. In other words, only provide the role with the access that is absolutely necessary for achieving its purpose. From a practical perspective – when creating the definition of a new role, it’s easier to start with an existing role (for example, the edit role), and remove all that is not required. If you find your configuration too restrictive, and you need to determine which roles need creating for a particular action or set of actions, you could use audit2rbac, which will automatically generate the necessary roles and role bindings based on what it observes from the API server’s audit log.

When it comes to providing API access for applications running in pods through service accounts, it might be tempting to bind a new role to the default service account that gets created for each namespace, which is made available to each pod in the namespace. Instead, create a specific service account and role for the pod that requires API access, and then bind that role to the new service account.
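A sketch of this approach might look something like the following, where a dedicated service account is given permission to read pods in its own namespace and nothing else. All of the names (pod-lister, pod-reader, monitoring) are hypothetical.

```yaml
# A dedicated service account for the one workload that needs API access
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-lister
  namespace: monitoring
---
# A namespaced role containing only what the workload requires
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: pod-reader
  namespace: monitoring
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list"]
---
# Bind the role to the dedicated service account, not to 'default'
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: pod-lister-can-read-pods
  namespace: monitoring
subjects:
- kind: ServiceAccount
  name: pod-lister
  namespace: monitoring
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

The pod that needs the access would then reference the account by setting serviceAccountName: pod-lister in its spec, leaving every other pod in the namespace with the unprivileged default service account.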

Clearly, thinking carefully about who or what needs access to the API server, which parts of the API, and what actions they can perform via the API, is crucial to maintaining a secure Kubernetes cluster. Give it the time and attention it deserves, and if you need some extra help, Giant Swarm has some in-depth documentation that you may find useful!

Pod Security Policy

The containers that get created as constituents of pods are generally configured with sane, practical security defaults, which serve the majority of typical use cases. Often, however, a pod may need additional privileges to perform its intended task – a networking plugin, or an agent for monitoring or logging, for example. In such circumstances, we’d need to enhance the default privileges for pods, but restrict the pods that don’t need the enhanced privileges, to a more restrictive set of privileges. We can, and absolutely should do this, by enabling the PodSecurityPolicy admission controller, and defining policy using the pod security policy API.

Pod security policy defines the security configuration that is required for pods to pass admission, allowing them to be created or updated in the cluster. The controller compares a Pod’s defined security context with any of the policies that the Pod’s creator (be that a Deployment or a user) is allowed to ‘use’, and where the security context exceeds the policy, it will refuse to create or update the pod. The policy can also be used to provide default values, by defining a minimal, restrictive policy, which can be bound to a very general authorization group, such as system:authenticated (applies to all authenticated users), to limit the privileges of the pods those users are able to create.

Pod Security Fields

There are quite a lot of configurable security options that can be defined in a PodSecurityPolicy (PSP) object, and the policy that you choose to define will be very dependent on the nature of the workload and the security posture of your organization. Here are a few example fields from the API object:

- privileged – specifies whether a pod can run in privileged mode, allowing it to access the host’s devices, which in normal circumstances it would not be able to do.

- allowedHostPaths – provides a whitelist of filesystem paths on the host that can be used by the pod as a hostPath volume.

- runAsUser – allows for controlling the UID which a pod’s containers will be run with.

- allowedCapabilities – whitelists the capabilities that can be added on top of the default list provided to a pod’s containers.
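To show how these fields fit together, here is a sketch of a fairly restrictive policy. The policy name, the host path, and the chosen capability are illustrative assumptions rather than recommendations, and the manifest assumes a cluster that still serves the policy/v1beta1 API (PodSecurityPolicy was removed in Kubernetes 1.25).

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: restricted-example       # hypothetical policy name
spec:
  # Pods using this policy can't run in privileged mode
  privileged: false
  allowPrivilegeEscalation: false
  # hostPath volumes are limited to a single read-only path (illustrative)
  allowedHostPaths:
  - pathPrefix: /var/log
    readOnly: true
  # Containers must run with a non-zero UID
  runAsUser:
    rule: MustRunAsNonRoot
  # The only capability that may be added on top of the defaults (illustrative)
  allowedCapabilities:
  - NET_BIND_SERVICE
  # The remaining strategies are required fields; left permissive here
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Volume types that pods are allowed to use
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
```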

Making Use of Pod Security Policy

A word of warning when enabling the PodSecurityPolicy admission controller – unless policy has already been defined in a PSP, pods will fail to get created as the admission controller’s default behavior is to deny pod creation where no match is found against policy – no policy, no match. The pod security policy API is enabled independently of the admission controller though, so it’s entirely possible to define policy ahead of enabling it.

It’s worth pointing out that unlike RBAC, pre-packaged applications rarely contain PSPs in their manifests, which means it falls to the users of those applications to create the necessary policy.

Once PSPs have been defined, they can’t be used to validate pods, unless either the user creating the pod, or the service account associated with the pod, has permission to use the policy. Granting permission is usually achieved with RBAC, by defining a role that allows the use of a particular PSP, and a role binding that binds the role to the user and/or service account.

From a practical perspective – especially in production environments – it’s unlikely that users will create pods directly. Pods are more often than not created as part of a higher level workload abstraction, such as a Deployment, and as a result, it’s the service account associated with the Pod that requires the role for using any given PSP.
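As a sketch of how that permission might be granted, the manifests below define a ClusterRole that allows the ‘use’ verb on one named policy, and a RoleBinding that grants it to the service account of a workload. The policy name (restricted-example), service account (web), and namespace (team-a) are all hypothetical.

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: psp-restricted-user
rules:
- apiGroups: ["policy"]
  resources: ["podsecuritypolicies"]
  # Limit the grant to a single, named policy (hypothetical name)
  resourceNames: ["restricted-example"]
  verbs: ["use"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: web-uses-restricted-psp
  namespace: team-a
subjects:
# The service account that the Deployment's pods run as (hypothetical)
- kind: ServiceAccount
  name: web
  namespace: team-a
roleRef:
  kind: ClusterRole
  name: psp-restricted-user
  apiGroup: rbac.authorization.k8s.io
```

Binding the same ClusterRole to a group such as system:authenticated, rather than to an individual service account, is one way of making a restrictive policy available as a cluster-wide default.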

Once again, Giant Swarm’s documentation provides some great insights into the use of PSPs for providing applications with privileged access.

Isolating Workloads

In most cases, a Kubernetes cluster is established as a general resource for running multiple, different, and often unrelated application workloads. Co-tenanting workloads in this way brings enormous benefits, but at the same time may increase the risk associated with accidental or intentional exposure of those workloads and their associated data to untrusted sources. Organizational policy – or even regulatory requirement – might dictate that deployed services are isolated from any other unrelated services.

One means of ensuring this, of course, is to separate out a sensitive application into its very own cluster. Running applications in separate clusters ensures the highest possible isolation of application workloads. Sometimes, however, this degree of isolation might be more than is absolutely necessary, and we can instead make use of some of the in-built isolation features available in Kubernetes. Let’s take a look at these.

Namespaces

Namespaces are a mechanism in Kubernetes for providing distinct environments for all of the objects that you might deem to be related, and that need to be separate from other unrelated objects. They provide the means for partitioning the concerns of workloads, teams, environments, customers, and just about anything you deem worthy of segregation.

Usually, a Kubernetes cluster is initially created with three namespaces:

- kube-system – used for objects created by Kubernetes itself.

- kube-public – used for publicly available, readable objects.

- default – used for all objects that are created without an explicit association with a specific namespace.

To make effective use of namespaces – rather than having every object ending up in the default namespace – namespaces should be created and used for isolating objects according to their intended purpose. There is no right or wrong way for namespacing objects, and much will depend on your organization’s particular requirements. Some careful planning will save a lot of re-engineering work later on, so it will pay to give this due consideration up front. Some ideas for consideration might include: different teams and/or areas of the organization; environments such as development, QA, staging, and production; different application workloads; and possibly different customers in a co-tenanted scenario. It can be tempting to plan your namespaces in a hierarchical fashion, but namespaces have a flat structure, so it’s not possible to do this. Instead, you can provide inferred hierarchies with suitable namespace names, team-a-app-y and team-b-app-z, for example (namespace names must be lowercase).

Adopting namespaces for segregating workloads also helps with managing the use of the cluster’s resources. If we view the cluster as a shared compute resource segregated into discrete namespaces, then it’s possible to apply resource quotas on a per-namespace basis. Resource hungry and more critical workloads that are judiciously namespaced can then benefit from a bigger share of the available resources.
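By way of illustration, the following sketch creates a namespace and applies a resource quota to it. The namespace name and all of the quota figures are hypothetical, and would need to be sized according to the capacity of your cluster and the needs of the workload.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a-app-y             # hypothetical namespace name
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota
  namespace: team-a-app-y
spec:
  hard:
    # Upper bounds on the sum of all pod requests and limits in the namespace
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    # Maximum number of pods that can exist in the namespace
    pods: "20"
```

Bear in mind that once a quota for CPU or memory is in place, pods in the namespace need to specify requests or limits for those resources in order to be admitted.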

Network Policies

Out-of-the-box, Kubernetes allows all network traffic originating from any pod in the cluster to be sent to and be received by any other pod in the cluster. This open approach doesn’t help us particularly when we’re trying to isolate workloads, so we need to apply network policies to help us achieve the desired isolation.

The Kubernetes NetworkPolicy API enables us to apply ingress and egress rules to selected pods – for layer 3 and layer 4 traffic – and relies on the deployment of a compliant network plugin that implements the Container Network Interface (CNI). Not all Kubernetes network plugins provide support for network policy, but popular choices (such as Calico, Weave Net and Romana) do.

Network policy is namespace scoped, and is applied to pods based on selection, courtesy of a matched label (for example, tier: backend). When the pod selector for a NetworkPolicy object matches a pod, traffic to and from the pod is governed according to the ingress and egress rules defined in the policy. All traffic originating from or destined for the pod is then denied – unless there is a rule that allows it.

To properly isolate applications at the network and transport layer of the stack in a Kubernetes cluster, network policies should start with a default premise of ‘deny all’. Rules for each of the application’s components and their required sources and destinations should then be whitelisted one by one, and tested to ensure the traffic pattern works as intended.
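A minimal sketch of this pattern is shown below: one policy that denies all traffic for every pod in a namespace, followed by one that whitelists a single flow. The namespace, the tier: frontend label, and the port number are assumptions made for the sake of the example.

```yaml
# 'Deny all': the empty podSelector matches every pod in the namespace,
# and no ingress or egress rules are defined, so nothing is allowed
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
  namespace: team-a-app-y        # hypothetical namespace
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
---
# Whitelist one flow: frontend pods may reach backend pods on TCP 8080
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
  namespace: team-a-app-y
spec:
  podSelector:
    matchLabels:
      tier: backend
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          tier: frontend         # hypothetical label
    ports:
    - protocol: TCP
      port: 8080                 # hypothetical port
```

Because the first policy also denies egress, the frontend pods would need a corresponding egress rule before this flow works end to end, and most workloads will also need an egress rule that allows DNS lookups.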

Service-to-Service Security

Network policies are just what we need for layer 3/4 traffic isolation, but it would serve us well if we could also ensure that our application services can authenticate with one another, that their communication is encrypted, and that we have the option of applying fine-grained access control for intra-service interaction.

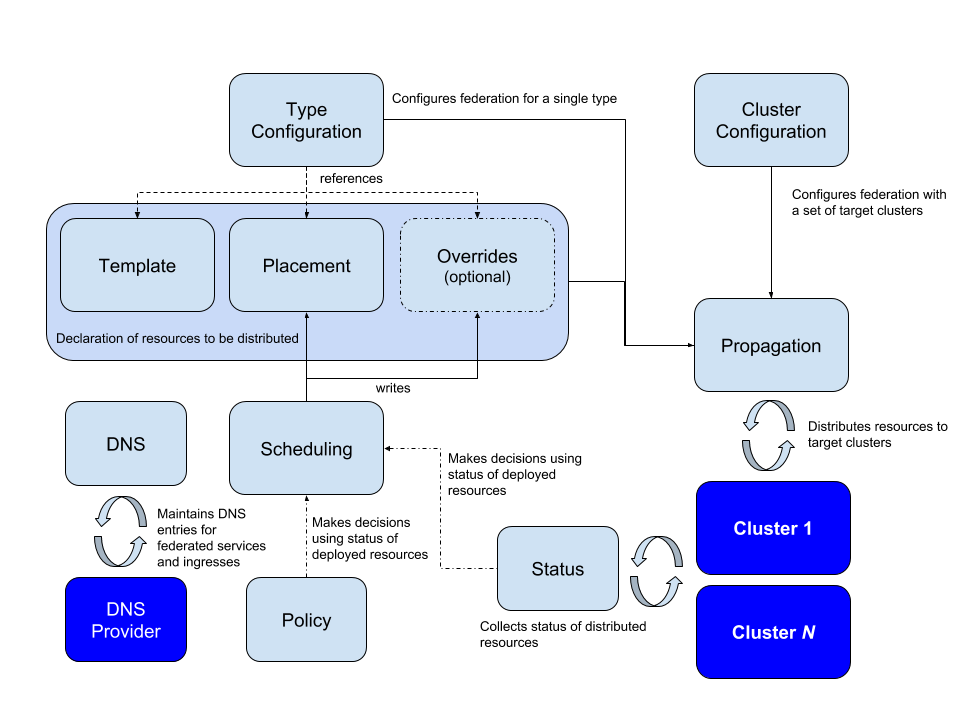

Solutions that help us to achieve this rely on policy applied at layers 5-7 of the network stack, and are a developing capability for cloud-native applications. Istio is one such tool, whose purpose involves the management of application workloads as a service mesh, including advanced traffic management and service observability, as well as authentication and authorization based on policy. Istio deploys a sidecar container, based on the Envoy proxy, into each pod. The sidecar containers form a mesh, and proxy traffic between pods from different services, taking account of the defined traffic rules, and the security policy.

Istio’s authentication mechanism for service-to-service communication is based on mutual TLS, and the identity of the service entity is embodied in an X.509 certificate. The identities conform to the Secure Production Identity Framework for Everyone (SPIFFE) specification, which aims to provide a standard for issuing identities to workloads. SPIFFE is a project hosted by the Cloud Native Computing Foundation (CNCF).
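As a rough sketch, in more recent Istio releases mutual TLS can be required for all of the workloads in a namespace with a PeerAuthentication resource. The namespace is hypothetical, and the API group and version shown here may differ from the one served by the Istio release you’re running, so treat this as an assumption to check against its documentation.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: team-a-app-y        # hypothetical namespace
spec:
  mtls:
    # Sidecars in this namespace only accept mutual TLS traffic
    mode: STRICT
```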

Istio has far-reaching capabilities, and if not all of its functions are required, then the benefits it provides might be outweighed by the operational overhead and maintenance it brings on deployment. An alternative solution for providing authenticated service identities based on SPIFFE is SPIRE, a set of open source tools for creating and issuing identities.

Yet another solution for securing the communication between services in a Kubernetes cluster is the open source Cilium project, which uses the Berkeley Packet Filter (BPF) within the Linux kernel to enforce defined security policy for layer 7 traffic. Cilium supports other layer 7 protocols such as Kafka and gRPC, in addition to HTTP.
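To give a flavor of what layer 7 policy looks like, here is a sketch of a CiliumNetworkPolicy that only allows HTTP GET requests for a given path prefix to reach a set of pods. The labels, port, and path are hypothetical, and the manifest assumes that Cilium and its custom resource definitions are installed in the cluster.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: allow-get-public
  namespace: team-a-app-y        # hypothetical namespace
spec:
  # The pods that the policy applies to (hypothetical label)
  endpointSelector:
    matchLabels:
      tier: backend
  ingress:
  - fromEndpoints:
    - matchLabels:
        tier: frontend
    toPorts:
    - ports:
      - port: "8080"
        protocol: TCP
      rules:
        # Layer 7 rule: only GET requests matching this path are allowed
        http:
        - method: GET
          path: "/public/.*"
```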

Summary

As with every layer in the Kubernetes stack, from a security perspective, there is also a huge amount to consider in the user space layer. Kubernetes has been built with security as a first-class citizen, and the various inherent security controls, and mechanisms for interfacing with 3rd party security tooling, provide a comprehensive security capability.

It’s not just about defining policy and rules, however. It’s equally important to ensure that, as well as satisfying your organization’s wider security objectives, your security configuration supports the way your teams are organized, and the way in which they work. This requires careful, considered planning.

In the next and final article in this series, Managing the Security of Kubernetes Container Workloads, we’ll be discussing the security associated with the content of container workloads, and how security needs to be made a part of the end-to-end workflow.
