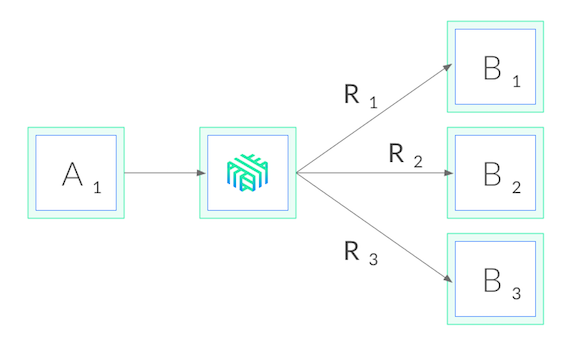

Learn How Rancher 2.0 Solves Enterprise Kubernetes Challenges

Understand the comparitive advantage of Rancher 2.0 for DevOps teams, IT Admins, and Operations.

Read the Report

Container monitoring environments come in all shapes and sizes. Some are open source while others are commercial. Some are in the Rancher Catalog while others require manual configuration. Some are general purpose while others are aimed specifically at container environments. Some are hosted in the cloud while others require installation on own cluster hosts. In this post, I take an updated look at 10 container monitoring solutions. This effort builds on earlier work including Ismail Usman’s

Comparing 7 Monitoring Options for Docker from 2015 and The Great Container Monitoring Bake Off Meetup in October of 2016. The number of monitoring solutions is daunting. New solutions are coming on the scene continuously, and existing solutions evolve in functionality. Rather than looking at each solution in depth, I’ve taken the approach of drawing high-level comparisons. With this approach, readers can hopefully “narrow the list” and do more serious

evaluations of solutions best suited to their own needs.

The monitoring solutions covered here include:

In the following sections, I suggest a framework for comparing monitoring solutions, present a high-level comparison of each, and then discuss each solution in more detail by addressing how each solution works with Rancher. I also cover a few additional solutions you may have come across that did not make my top 10.

A Framework for Comparison

A challenge with objectively comparing monitoring solutions is that architectures, capabilities, deployment models, and costs can vary widely. One solution may extract and graph Docker-related metrics from a single host while another aggregates data from many hosts, measures application response times, and sends automated alerts under particular conditions. Having a framework is useful when comparing solutions. I’ve somewhat arbitrarily proposed the following tiers of functionality that most monitoring solutions have in common as a basis for my comparison. Like any self-respecting architectural stack, this one has seven layers.

Figure 1: A seven-layer model for comparing monitoring solutions

- Host Agents – The host agent represents the “arms and legs” of the monitoring solution, extracting time-series data from various sources like APIs and log files. Agents are usually installed on each cluster host (either on-premises or cloud-resident) and are themselves often packaged as Docker containers for ease of deployment and management.

- Data gathering framework – While single-host metrics are sometimes useful, administrators likely need a consolidated view of all hosts and applications. Monitoring solutions typically have some mechanism to gather data from each host and persist it in a shared data store.

- Datastore – The datastore may be a traditional database, but more commonly it is some form of scalable, distributed database optimized for time-series data comprised of key-value pairs. Some solutions have native datastores while others leverage pluggable open-source datastores.

- Aggregation engine – The problem with storing raw metrics from dozens of hosts is that the amount of data can become overwhelming. Monitoring frameworks often provide data aggregation capabilities, periodically crunching raw data into consolidated metrics (like hourly or daily summaries), purging old data that is no longer needed, or re-factoring data in some fashion to support anticipated queries and analysis.

- Filtering & Analysis – A monitoring solution is only as good as the insights you can gain from the data. Filtering and analysis capabilities vary widely. Some solutions support a few pre-packaged queries presented as simple time-series graphs, while others have customizable dashboards, embedded query languages, and sophisticated analytic functions.

- Visualization tier – Monitoring tools usually have a visualization tier where users can interact with a web interface to generate charts, formulate queries and, in some cases, define alerting conditions. The visualization tier may be tightly coupled with the filtering and analysis functionality, or it may be separate depending on the solution.

- Alerting & Notification – Few administrators have time to sit and monitor graphs all day. Another common feature of monitoring systems is an alerting subsystem that can provide notification if pre-defined thresholds are met or exceeded.

Beyond understanding how each monitoring solution implements the basic capabilities above, users will be interested in other aspects of the monitoring solution as well:

- Completeness of the solution

- Ease of installation and configuration

- Details about the web UI

- Ability to forward alerts to external services

- Level of community support and engagement (for open-source projects)

- Availability in Rancher Catalog

- Support for monitoring non-container environments and apps

- Native Kubernetes support (Pods, Services, Namespaces, etc.)

- Extensibility (APIs, other interfaces)

- Deployment model (self-hosted, cloud)

- Cost, if applicable

Comparing Our 10 Monitoring Solutions

The diagram below shows a high-level view of how our 10 monitoring solutions map to our seven-layer model, which components implement the capabilities at each layer, and where the components reside. Each framework is complicated, and this is a simplification to be sure, but it provides a useful view of which component does what. Read on for

additional detail.

Figure 2 – 10 monitoring solutions at a glance Additional attributes of each monitoring solution are presented in a summary fashion below. For some solutions, there are multiple deployment options, so the comparisons become a little more nuanced.

Looking at Each Solution in More Depth

Docker Stats

At the most basic level, Docker provides built-in command monitoring for Docker

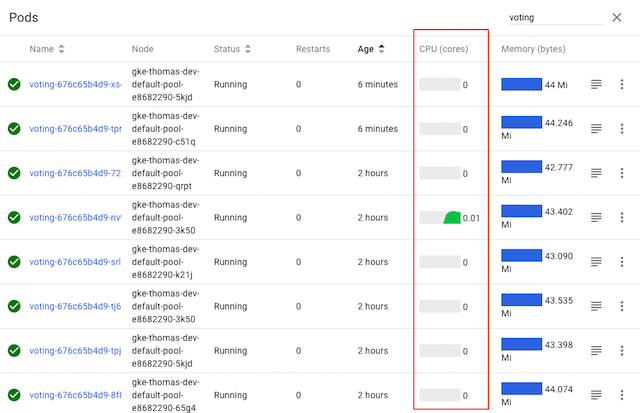

hosts via the docker stats command. Administrators can query the Docker daemon and obtain detailed, real-time information about container resource consumption metrics, including CPU and memory usage, disk and network I/O, and the number of running processes. Docker stats leverages the Docker Engine API to retrieve this information. Docker stats has no notion of history, and it can only monitor a single host, but clever administrators can write scripts to gather metrics from multiple hosts. Docker stats is of limited use on its own, but docker stats data can be combined with other data sources like Docker log files and docker events to feed higher level monitoring services. Docker only knows about metrics reported by a single host, so Docker stats is of limited use monitoring Kubernetes or Swarm clusters with multi-host application services. With no visualization interface, no aggregation, no datastore, and no ability to collect data from multiple hosts, Docker stats does not fare well against our seven-layer model. Because Rancher runs on Docker, basic docker stats functionality is automatically available to Rancher users.

cAdvisor

cAdvisor (container advisor) is an open-source project that like Docker stats provides users with resource usage information about running containers. cAdvisor was originally developed by Google to manage its lmctfy containers, but it now supports Docker as well. It is implemented as a daemon process that collects, aggregates, processes, and exports information about running containers. cAdvisor exposes a web interface and can generate multiple graphs but, like Docker stats, it monitors only a single Docker host. It can be installed on a Docker machine either as a container or natively

cAdvisor (container advisor) is an open-source project that like Docker stats provides users with resource usage information about running containers. cAdvisor was originally developed by Google to manage its lmctfy containers, but it now supports Docker as well. It is implemented as a daemon process that collects, aggregates, processes, and exports information about running containers. cAdvisor exposes a web interface and can generate multiple graphs but, like Docker stats, it monitors only a single Docker host. It can be installed on a Docker machine either as a container or natively

on the Docker host itself. cAdvisor itself only retains information for 60 seconds. cAdvisor needs to be configured to log data to an external datastore. Datastores commonly used with cAdvisor data include Prometheus and

InfluxDB. While cAdvisor itself is not a complete monitoring solution, it is often a component of other monitoring solutions. Before Rancher version 1.2 (late December), Rancher embedded cAdvisor in the rancher-agent (for internal use by Rancher), but this is no longer the case. More recent versions of Rancher use Docker stats to gather information exposed through the Rancher UI because they can do so with less overhead. Administrators can

easily deploy cAdvisor on Rancher, and it is part of several comprehensive monitoring stacks, but cAdvisor is no longer part of Rancher itself.

Scout

Scout is a Colorado-based company that provides a cloud-based application and database-monitoring service aimed mainly at Ruby and Elixir environments. One of many use cases it supports is monitoring Docker containers leveraging its existing monitoring and alerting framework. We mention Scout because it was covered in previous comparisons as a solution for monitoring Docker. Scout provides comprehensive data gathering, filtering, and monitoring functionality with flexible alerts and integrations to third-party alerting services. The team at Scout provides guidance on how to write scripts using Ruby and StatsD to tap the Docker Stats

Scout is a Colorado-based company that provides a cloud-based application and database-monitoring service aimed mainly at Ruby and Elixir environments. One of many use cases it supports is monitoring Docker containers leveraging its existing monitoring and alerting framework. We mention Scout because it was covered in previous comparisons as a solution for monitoring Docker. Scout provides comprehensive data gathering, filtering, and monitoring functionality with flexible alerts and integrations to third-party alerting services. The team at Scout provides guidance on how to write scripts using Ruby and StatsD to tap the Docker Stats

API (above), the Docker Event API, and relay metrics to Scout for monitoring. They’ve also packaged a docker-scout container, available

on Docker Hub (scoutapp/docker-scout), that makes installing and configuring the scout agent simple. The ease of use will depend on whether users configure the StatsD agent themselves or leverage the packaged docker-scout container. As a hosted cloud service, ScoutApp can save a lot of headaches when it comes to getting a container-monitoring solution up and running quickly. If you’re deploying Ruby apps or running the database environments supported by Scout, it probably makes good sense to consolidate your Docker, application, and database-level monitoring and use the Scout solution. Users might want to watch out for a few things, however. At most service levels, the platform only allows for 30 days of data retention, and rather than being priced month per monitored host,

standard packages are priced per transaction ranging from $99 to $299

per month. The solution out of the box is not Kubernetes-aware, and

extracts and relays a limited set of metrics. Also, while docker-scout

is available on Docker Hub, development is by Pingdom, and there have

been only minor updates in the last two years to the agent component.

Scout is not natively supported in Rancher but, because it is a cloud

service, it is easy to deploy and use, particularly when the

container-based agent is used. At present, the docker-scout agent is

not in the Rancher Catalog.

Pingdom

Because we’ve mentioned Scout as a cloud-hosted app, we also need to mention a similar solution called Pingdom. Pingdom

Because we’ve mentioned Scout as a cloud-hosted app, we also need to mention a similar solution called Pingdom. Pingdom

is a hosted-cloud service operated by

SolarWinds, an Austin, TX,

company focused on monitoring IT infrastructure. While the main use case

for Pingdom is website monitoring, as a part of its server monitor

platform, Pingdom offers approximately 90 plug-ins. In fact, Pingdom

maintains

docker-scout,

the same StatsD agent used by Scout. Pingdom is worth a look because its

pricing scheme appears better suited to monitoring Docker

environments. Pricing is flexible, and users can choose between

per-server based plans and plans based on the number of StatsD metrics

collected ($1 per 10 metrics per month). Pingdom makes sense for users

who need a full-stack monitoring solution that is easy to set up and

manage, and who want to monitor additional services beyond the container

management platform. Like Scout, Pingdom is a cloud service that can be

easily used with Rancher.

Datadog

Datadog is another commercial hosted-cloud monitoring service similar to Scout and Pingdom. Datadog also provides a Dockerized agent for installation on each Docker host; however, rather than using StatsD like the

Datadog is another commercial hosted-cloud monitoring service similar to Scout and Pingdom. Datadog also provides a Dockerized agent for installation on each Docker host; however, rather than using StatsD like the

cloud-monitoring solutions mentioned previously, Datadog has developed

an enhanced StatsD called

DogStatsD. The Datadog

agent collects and relays the full set of metrics available from the

Docker API providing more detailed, granular monitoring. While Datadog

does not have native support for Rancher, a Datadog catalog entry in the

Rancher UI makes the Datadog agent easy to install and configure on

Rancher. Rancher tags can be used as well so that reporting in Datadog

reflects labels you’ve used for hosts and applications in Rancher.

Datadog provides better access to metrics and more granularity in

defining alert conditions than the cloud services mentioned earlier.

Like the other services, Datadog can be used to monitor other services

and applications as well, and it boasts a library of over 200

integrations. Datadog also retains data at full resolution for 18

months, which is longer than the cloud services above. An advantage of

Datadog over some of other cloud services is that it has integrations

beyond Docker and can collect metrics from Kubernetes, Mesos, etcd, and

other services that you may be running in your Rancher environment. This

versatility is important to users running Kubernetes on Rancher because

they want to be able to monitor metrics for things like Kubernetes pods,

services, namespaces, and kubelet health. The Datadog-Kubernetes

monitoring solution uses DaemonSets in Kubernetes to automatically

deploy the data collection agent to each cluster node. Pricing for

Datadog starts at approximately $15 per host per month and goes up from

there depending services required and the number of monitored containers

per host.

Sysdig

Sysdig is a California company that provides a cloud-based monitoring solution. Unlike some of the cloud-based monitoring solutions described so far, Sysdig focuses more narrowly on monitoring container environments including Docker, Swarm, Mesos, and Kubernetes. Sysdig also makes some of its functionality available in open-source projects, and they provide

Sysdig is a California company that provides a cloud-based monitoring solution. Unlike some of the cloud-based monitoring solutions described so far, Sysdig focuses more narrowly on monitoring container environments including Docker, Swarm, Mesos, and Kubernetes. Sysdig also makes some of its functionality available in open-source projects, and they provide

the option of either cloud or on-premises deployments of the Sysdig

monitoring service. In these respects, Sysdig is different than the

cloud-based solutions looked at so far. Like Datadog, catalog entries

are available for Rancher, but for Sysdig there are separate entries for

on-premises and cloud installations. Automated installation from the

Rancher Catalog is not available for Kubernetes; however, it can be

installed on Rancher outside of the catalog. The commercial Sysdig

Monitor has Docker monitoring, alerting, and troubleshooting facilities

and is also Kubernetes, Mesos, and Swarm-aware. Sysdig is automatically

aware of Kubernetes pods and services, making it a good solution if

you’ve chosen Kubernetes as your orchestration framework on Rancher.

Sysdig is priced monthly per host like Datadog. While the entry price is

slightly higher, Sysdig includes support for more containers per host,

so actual pricing will likely be very similar depending on the user’s

environment. Sysdig also provides a comprehensive CLI, csysdig,

differentiating it from some of the offerings.

Prometheus

Prometheus is a popular, open-source monitoring and alerting toolkit originally built at SoundCloud. It is now a CNCF project, the company’s second hosted project after Kubernetes. As a toolkit, it is substantially different

from monitoring solutions described thus far. A first major difference

is that rather being offered as a cloud service, Prometheus is modular

and self-hosted, meaning that users deploy Prometheus on their clusters

whether on-premises or cloud-resident. Rather than pushing data to a

cloud service, Prometheus installs on each Docker host and pulls or

“scrapes” data from an extensive variety of

exporters

available to Prometheus via HTTP. Some exporters are officially

maintained as a part of the Prometheus GitHub project, while others are

external contributions. Some projects expose Prometheus metrics natively

so that exporters are not needed. Prometheus is highly extensible. Users

need to mind the number of exporters and configure polling intervals

appropriately depending on the amount of data they are collecting. The

Prometheus server retrieves time-series data from various sources and

stores data in its internal datastore. Prometheus provides features like

service discovery, a separate push gateway for specific types of metrics

and has an embedded query language (PromQL) that excels at querying

multidimensional data. It also has an embedded web UI and API. The web

UI in Prometheus provides good functionality but relies on users knowing

PromQL, so some sites prefer to use Grafana as an interface for charting

and viewing cluster-related metrics. Prometheus has a discrete Alert

Manager with a distinct UI that can work with data stored in Prometheus.

Like other alert managers, it works with a variety of external alerting

services including email, Hipchat, Pagerduty, #Slack, OpsGenie,

VictorOps, and others. Because Prometheus is comprised of many

components, and exporters need to be selected and installed depending on

the services monitored, it is more difficult to install; but as a free

offering, the price is right. While not quite as refined as tools like

Datadog or Sysdig, Prometheus offers similar functionality, extensive

third-party software integrations, and best-in-class cloud monitoring

solutions. Prometheus is aware of Kubernetes and other container

management frameworks. An entry in the Rancher Catalog developed by

Infinityworks makes getting started

with Prometheus easier when Cattle is used as the Rancher orchestrator

but, because of the wide variety of configuration options,

administrators need to spend some time to get it properly installed and

configured. Infinityworks have contributed useful add-ons including the

prometheus-rancher-exporter that

exposes the health of Rancher stacks and hosts obtained from the Rancher

API to a Prometheus compatible endpoint. For administrators who don’t

mind going to a little more effort, Prometheus is one of the most

capable monitoring solutions and should be on your shortlist for

consideration.

Heapster

Heapster is another solution that often comes up related to monitoring-container

environments. Heapster is a project under the Kubernetes umbrella that

helps enable container-cluster monitoring and performance analysis.

Heapster specifically supports Kubernetes and OpenShift and is most

relevant for Rancher users running Kuberenetes as their orchestrator. It

is not typically be used with Cattle or Swarm. People often describe

Heapster as a monitoring solution, but it is more precisely a

“cluster-wide aggregator of monitoring and event data.” Heapster is

never deployed alone; rather, it is a part of a stack of open-source

components. The Heapster monitoring stack is typically comprised of:

- A data gathering tier – e.g., cAdvisor accessed with the

kubelet on each cluster host

- Pluggable storage backends – e.g., ElasticSearch, InfluxDB,

Kafka, Graphite, or roughly a dozen

others

- A data visualization component – Grafana or Google Cloud

Monitoring

A popular stack is comprised of Heapster, InfluxDB, and Grafana, and

this combination is installed by default on Rancher when users choose to

deploy Kubernetes. Note that these components are considered add-ons to

Kubernetes, so they may not be automatically deployed with all

Kubernetes distributions. One of the reasons that InfluxDB is popular is

that it is one of the few data backends that supports both Kubernetes

events and metrics, allowing for more comprehensive monitoring of

Kubernetes. Note that Heapster does not natively support alerting or

services related to Application Performance Management (APM) found in

commercial cloud-based solutions or Prometheus. Users that need

monitoring services can supplement their Heapster installation using

Hawkular, but this is not automatically

configured as part of the Rancher deployment and will require extra user

effort.

ELK Stack

Another

Another

open-source software stack available for monitoring container environments is ELK, comprised of three open-source projects contributed by Elastic. The ELK stack is versatile and

is widely used for a variety of analytic applications, log file

monitoring being a key one. ELK is named for its key components:

- Elasticsearch – a

distributed search engine based on Lucene

- Logstash – a

data-processing pipeline that ingests data and sends it to

Elastisearch (or other “stashes”)

- Kibana – a visual search

dashboard and analysis tool for Elasticsearch

An unsung member of the Elastic stack is

Beats, described by the project

developers as “lightweight data shippers.” There are a variety of

off-the-shelf Beats shippers including Filebeat (used for log files),

Metricbeat (using for gathering data metrics from various sources), and

Heartbeat for simple uptime monitoring among others. Metricbeat is

Docker-aware, and the authors provide

guidance

on how to use it to extract host metrics and monitor services in Docker

containers. There are variations in how the ELK stack is

deployed. Lorenzo Fontana of Kiratech explains in this

article

how to use cAdvisor to collect metrics from Docker Swarm hosts for

storage in ElasticSearch and analysis using Kibana. In another

article,

Aboullaite Mohammed describes a different use case focused on collecting

Docker log files for analysis focusing on analyzing various Linux and

NGINX log files (error.log, access.log, and syslog). There are

commercial ELK stack providers such as logz.io and

Elastic Co themselves that offer “ELK as a

service” supplementing the stack’s capabilities with alerting

functionality. Additional information about using ELK with Docker is

available at https://elk-docker.readthedocs.io/. For Rancher users

that wish to experiment with ELK, the stack is available as a Rancher

Catalog entry, and a tutorial by Rachid

Zaroualli

explains how to deploy it. Zaroualli has contributed an additional

article

on how the ELK stack can be used for monitoring Twitter data. While

knowledgeable administrators can use ELK for container monitoring, this

is a tougher solution to implement compared to Sysdig, Prometheus, or

Datadog, all of which are more directly aimed at container monitoring.

Sensu

Sensu is a general-purpose, self-hosted monitoring solution that supports a variety of monitoring applications. A free Sensu Core edition is available under an MIT license, while an enterprise version with added functionality is available for $99 per month for 50 Sensu clients. Sensu uses the term client to refer to its monitoring agents, so depending on the number of hosts and application environments you are monitoring, the enterprise

Sensu is a general-purpose, self-hosted monitoring solution that supports a variety of monitoring applications. A free Sensu Core edition is available under an MIT license, while an enterprise version with added functionality is available for $99 per month for 50 Sensu clients. Sensu uses the term client to refer to its monitoring agents, so depending on the number of hosts and application environments you are monitoring, the enterprise

edition can get expensive. Sensu has impressive capabilities outside of

container management, but consistent with the other platforms I’ve

looked at it from the perspective of monitoring the container

environment and containerized applications. The number of Sensu

plug-ins continues

to grow, and there are dozens of Sensu and community supported plug-ins

that allow metrics to be extracted from various sources. In an earlier

evaluation of Sensu on Rancher in 2015, it was necessary for the author

to develop shell scripts to extract information from Docker, but an

actively developed Docker

plug-in is now

available for this purpose making Sensu easier to use with Rancher.

Plug-ins tend to be written in Ruby with gem-based installation scripts

that need to run on the Docker host. Users can develop additional

plug-ins in the languages they choose. Sensu plug-ins are not deployed

in their own containers, as common with other monitoring solutions we’ve

considered. (This is no doubt because Sensu does not come from a

heritage of monitoring containers.) Different users will want to mix and

match plug-ins depending on their monitoring requirements, so having

separate containers for each plug-in would become unwieldy, and this is

possibly why containers are not used for deployment. Plug-ins are

deployable using platforms like Chef, Puppet, and Ansible, however. For

Docker alone, for example, there are six separate

plug-ins

that gather Docker-related data from various sources, including Docker

stats, container counts, container health, Docker ps, and more. The

number of plug-ins is impressive and includes many of the application

stacks that users will likely be running in container environments

(ElasticSearch, Solr, Redis, MongoDB, RabbitMQ, Graphite, and Logstash,

to name a few). Plug-ins for management and orchestration frameworks

like AWS services (EC2, RDS, ELB) are also provided with Sensi.

OpenStack and Mesos support is available in Sensu as well. Kubernetes

appears to be missing from the list of plug-ins a present. Sensu uses a

message bus implemented using RabbitMQ to facilitate communication

between the agents/clients and the Sensu server. Sensu uses Redis to

store data, but it is designed to route data to external time-series

databases. Among the databases supported are Graphite, Librato, and

InfluxDB. Installing and configuring

Sensu

takes some effort. Pre-requisites to installing Sensu are Redis and

RabbitMQ. The Sensu server, Sensu clients, and the Sensu dashboard

require separate installation, and the process varies depending on

whether you are deploying Sensu core or the enterprise version. Sensu as

mentioned, do not offer a container friendly deployment model. For

convenience, a Docker image is available

(hiroakis/docker-sensu-server)

that runs redis, rabbitmq-server, uchiwa (the open-source web tier) and

the Sensu server components, but this package is more useful for

evaluation than a production deployment. Sensu has a large number of

features, but a drawback for container users is that the framework is

harder to install, configure, and maintain because the components are

not themselves Dockerized. Also, many of the alerting features like

sending alerts to services like PagerDuty, Slack, or HipChat, for

example, that are available in competing cloud-based solutions or

open-source solutions like Prometheus require that purchase of the Sensu

enterprise license. Particularly if you are running Kubernetes, there

are probably better choices out there.

The Monitoring Solutions We Missed

- Graylog is another open-source solution

that comes up when monitoring Docker. Like ELK, Graylog is suited to

Docker log file analysis. It can accept and parse logs and event

data from multiple data sources and supports third-party collectors

like Beats, Fluentd, and NXLog. There’s a good

tutorial

on configuring Graylog for use with Rancher.

- Nagios is usually viewed as better suited

for monitoring cluster hosts rather than containers but, for those

of us who grew up monitoring clusters, Nagios is a crowd favorite.

For those interested in using Nagios with

Rancher, some work has

been done here.

- Netsil is a Silicon Valley startup offering a

monitoring application with plugins for Docker, Kubernetes, Mesos,

and a variety of applications and cloud providers. Netsil’s

Application Operations Center (AOC) provides framework-aware

monitoring for cloud application services. Like some of the other

monitoring frameworks discussed, it is offered as a cloud/SaaS or

self-hosted.

Gord Sissons, Principal Consultant at StoryTek

Source

Because we’ve mentioned Scout as a cloud-hosted app, we also need to mention a similar solution called

Because we’ve mentioned Scout as a cloud-hosted app, we also need to mention a similar solution called  Datadog is another commercial hosted-cloud monitoring service similar to Scout and Pingdom. Datadog also provides a Dockerized agent for installation on each Docker host; however, rather than using StatsD like the

Datadog is another commercial hosted-cloud monitoring service similar to Scout and Pingdom. Datadog also provides a Dockerized agent for installation on each Docker host; however, rather than using StatsD like the Sysdig is a California company that provides a cloud-based monitoring solution. Unlike some of the cloud-based monitoring solutions described so far, Sysdig focuses more narrowly on monitoring container environments including Docker, Swarm, Mesos, and Kubernetes. Sysdig also makes some of its functionality available in open-source projects, and they provide

Sysdig is a California company that provides a cloud-based monitoring solution. Unlike some of the cloud-based monitoring solutions described so far, Sysdig focuses more narrowly on monitoring container environments including Docker, Swarm, Mesos, and Kubernetes. Sysdig also makes some of its functionality available in open-source projects, and they provide

Another

Another Sensu is a general-purpose, self-hosted monitoring solution that supports a variety of monitoring applications. A free Sensu Core edition is available under an MIT license, while an enterprise version with added functionality is available for $99 per month for 50 Sensu clients. Sensu uses the term client to refer to its monitoring agents, so depending on the number of hosts and application environments you are monitoring, the enterprise

Sensu is a general-purpose, self-hosted monitoring solution that supports a variety of monitoring applications. A free Sensu Core edition is available under an MIT license, while an enterprise version with added functionality is available for $99 per month for 50 Sensu clients. Sensu uses the term client to refer to its monitoring agents, so depending on the number of hosts and application environments you are monitoring, the enterprise