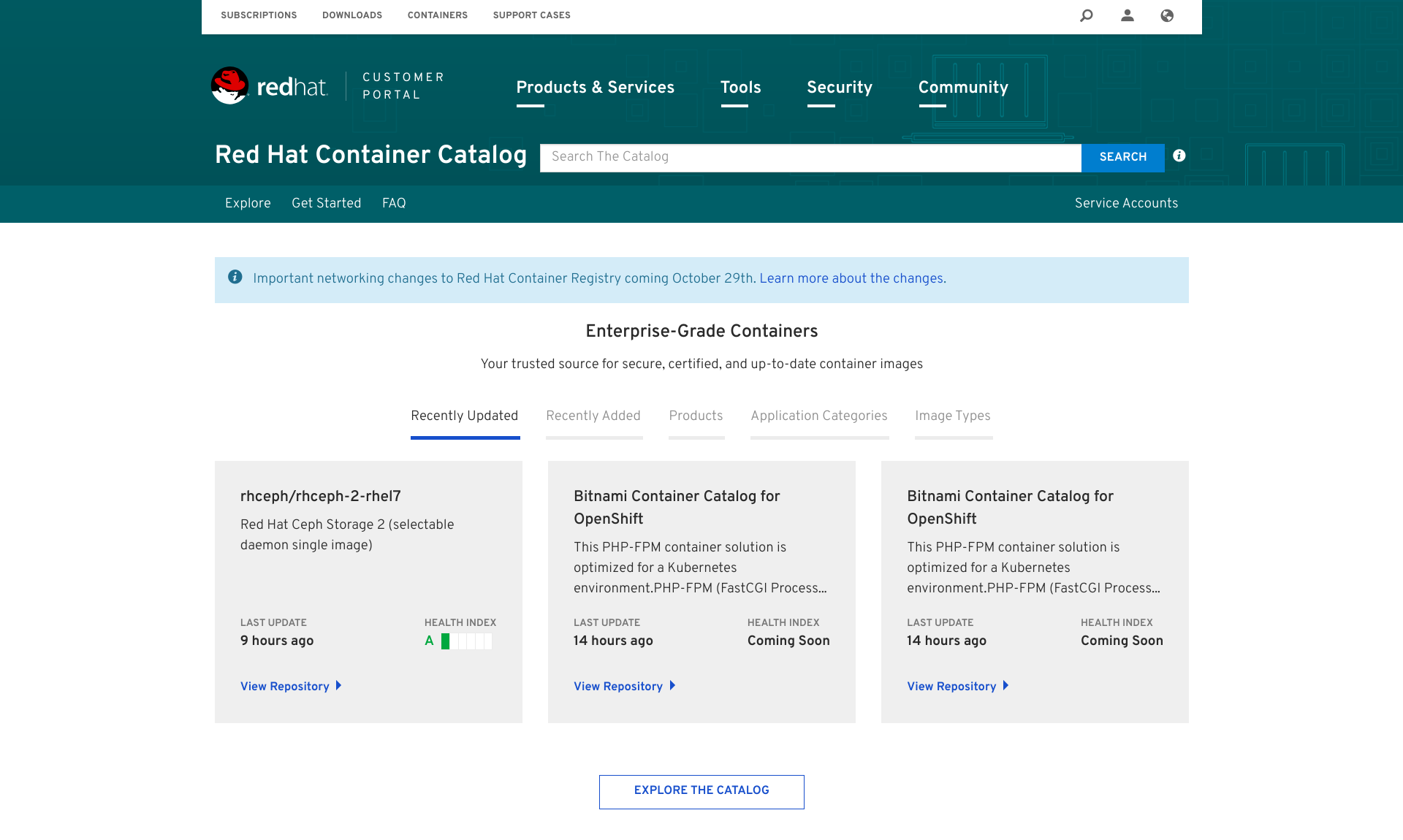

In the previous article of this series, Securing Kubernetes for Cloud Native Applications, we discussed what needs to be considered when securing the infrastructure on which a Kubernetes cluster is deployed. This time around, we’re turning our attention to the cluster itself.

Kubernetes is a complex system, and the diagram above shows the many different constituent parts that make up a cluster. Each of these components needs to be carefully secured in order to maintain the overall integrity of the cluster.

We won’t be able to cover every aspect of cluster-level security in this article, but we’ll aim to address the more important topics. As we’ll see later, help is available from the wider community, both in the form of best-practice security guidance for Kubernetes clusters, and in the tooling for measuring adherence to it.

Cluster Installers

We should start with a brief observation about the many different tools that can be used to install the cluster components.

Some of the default configuration parameters for the components of a Kubernetes cluster are sub-optimal from a security perspective, and need to be set correctly to ensure a secure cluster. Unless you opt for a managed Kubernetes cluster (such as that provided by Giant Swarm), where the entire cluster is managed on your behalf, this problem is exacerbated by the many different cluster installation tools available, each of which will apply a subtly different configuration. While most installers come with sane defaults, we should never assume that they have our backs covered when it comes to security. Whichever installer we elect to use, we should make it our objective to ensure that it’s configured to secure the cluster according to our requirements.

Let’s take a look at some of the important aspects of security for the control plane.

API Server

The API server is the hub of all communication within the cluster, and it’s on the API server that the majority of the cluster’s security configuration is applied. The API server is the only component of the cluster’s control plane that is able to interact directly with the cluster’s state store. Users operating the cluster, other control plane components, and sometimes cluster workloads, all interact with the cluster using the server’s HTTP-based REST API.

Because of its pivotal role in the control of the cluster, carefully managing access to the API server is crucial as far as security is concerned. If somebody or something gains unauthorized access to the API, it may be possible for them to acquire all kinds of sensitive information, as well as gain control of the cluster itself. For this reason, client access to the Kubernetes API should be encrypted, authenticated, and authorized.

Securing Communication with TLS

To prevent man-in-the-middle attacks, the communication between each and every client and the API server should be encrypted using TLS. To achieve this, the API server needs to be configured with a private key and X.509 certificate.

The X.509 certificate for the root certificate authority (CA) that issued the API server’s certificate must be available to any client that needs to verify the API server’s identity during a TLS handshake, which leads us to the question of certificate authorities for the cluster in general. As we’ll see in a moment, there are numerous ways for clients to authenticate to the API server, and one of these is by way of X.509 certificates. If this method of client authentication is employed, which is probably true in the majority of cases (at least for cluster components), each cluster component should get its own certificate, and it makes a lot of sense to establish a cluster-wide PKI capability.
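To make the client's side of that handshake concrete, here is a minimal sketch using Python's standard `ssl` module. The function name and its parameters are illustrative assumptions, not part of any Kubernetes client library; the point is simply that the client trusts the cluster CA in order to verify the API server, and optionally presents its own certificate.

```python
import ssl
from typing import Optional


def build_api_client_context(
    ca_file: Optional[str] = None,
    client_cert: Optional[str] = None,
    client_key: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a TLS context for a client talking to the API server (sketch)."""
    # When a CA bundle is given, only that bundle is trusted; otherwise the
    # system trust store is used. Server certificate and hostname checks
    # are enabled by create_default_context().
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Present a client certificate only if both halves of the key pair
    # were supplied (X.509 client authentication).
    if client_cert and client_key:
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx
```

A component would pass the path to the cluster CA certificate as `ca_file`, plus its own certificate and key, and then use the returned context for its HTTPS connections.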

There are numerous ways that a PKI capability can be realised for a cluster, and no one way is better than another. It could be configured by hand, it may be configured courtesy of your chosen installer, or by some other means. In fact, the cluster can be configured to have its own in-built CA that can issue certificates in response to certificate signing requests submitted via the API server. Here, at Giant Swarm, we use an operator called cert-operator, in conjunction with HashiCorp’s Vault.

Whilst we’re on the topic of secure communication with the API server, be sure to disable its insecure port (prior to Kubernetes 1.13), which serves the API over plain HTTP (--insecure-port=0)!

Authentication, Authorization, and Admission Control

Now let’s turn our attention to controlling which clients can perform which operations on which resources in the cluster. We won’t go into much detail here, as by and large, this is a topic for the next article. What’s important is to make sure that the components of the control plane are configured to provide the underlying access controls.

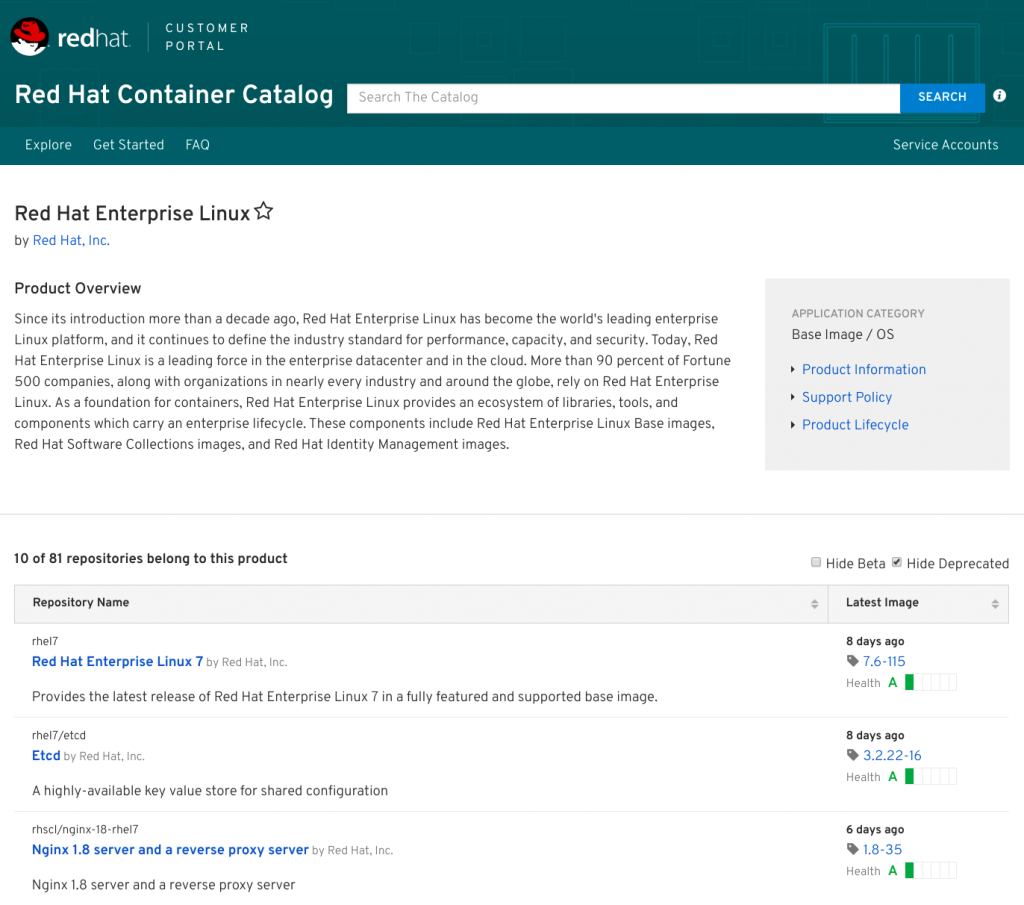

When an API request lands at the API server, the server performs a series of checks to determine whether to serve the request or not, and if it does serve the request, whether to validate or mutate the resource object according to defined policy. The chain of execution is depicted in the diagram above.
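The chain can be sketched as a small pipeline. This is a simplified model rather than the API server's actual implementation: the `Request` type, the `process` function and the callables passed to it are all hypothetical names, and the handling of anonymous requests is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Request:
    token: str                      # credential presented by the client
    verb: str                       # e.g. "create"
    resource: str                   # e.g. "pods"
    obj: Dict[str, Any] = field(default_factory=dict)


def process(
    req: Request,
    authenticate: Callable[[str], Optional[str]],
    authorize: Callable[[str, str, str], bool],
    mutators: List[Callable[[Dict[str, Any]], None]],
    validators: List[Callable[[Dict[str, Any]], bool]],
) -> str:
    """Walk a request through authentication, authorization and admission."""
    user = authenticate(req.token)
    if user is None:
        return "rejected: unauthenticated"
    if not authorize(user, req.verb, req.resource):
        return "rejected: unauthorized"
    # Admission control: mutating steps may change the object first,
    # then validating steps can still reject the final result.
    for mutate in mutators:
        mutate(req.obj)
    for validate in validators:
        if not validate(req.obj):
            return "rejected: admission"
    return "persisted"
```

Each stage can only reject or pass the request on, which is why a gap in an early stage (such as permissive authentication) cannot be assumed to be covered by a later one.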

Kubernetes supports many different authentication schemes, which are almost always implemented externally to the cluster, including X.509 certificates, basic auth, bearer tokens, OpenID Connect (OIDC) for authenticating with a trusted identity provider, and so on. The various schemes are enabled using relevant config options on the API server, so be sure to provide these for the authentication scheme(s) you plan to use. X.509 client certificate authentication, for example, requires the path to a file containing one or more CA certificates (--client-ca-file). One important point to remember is that, by default, any API requests that are not authenticated by one of the authentication schemes are treated as anonymous requests. Whilst the access that anonymous requests gain can be limited by authorization, if they’re not required, they should be turned off altogether (--anonymous-auth=false).

Once a request is authenticated, the API server then considers the request against authorization policy. Again, the authorization modes are a configuration option (--authorization-mode), which should at the very least be altered from the default value of AlwaysAllow. Ideally, the list of authorization modes should include RBAC and Node, the former for enabling the RBAC API for fine-grained access control, and the latter to authorize kubelet API requests (see below).
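As a rough illustration, the checks described so far can be expressed as a small audit over the API server's command-line arguments. This is a sketch only: `parse_flags` and `audit_api_server` are hypothetical helpers, the defaults they assume are the ones stated in this article, and a real audit would cover many more options.

```python
from typing import Dict, List


def parse_flags(argv: List[str]) -> Dict[str, str]:
    """Turn ['--name=value', ...] into {'name': 'value', ...}."""
    flags: Dict[str, str] = {}
    for arg in argv:
        if arg.startswith("--"):
            name, _, value = arg[2:].partition("=")
            flags[name] = value
    return flags


def audit_api_server(argv: List[str]) -> List[str]:
    """Return findings for the authentication/authorization flags above."""
    flags = parse_flags(argv)
    findings: List[str] = []
    # Assumed default (per the text): anonymous requests are accepted.
    if flags.get("anonymous-auth", "true") != "false":
        findings.append("anonymous requests are enabled")
    # Assumed default (per the text): AlwaysAllow.
    raw_modes = flags.get("authorization-mode", "AlwaysAllow")
    modes = [m for m in raw_modes.split(",") if m]
    if "AlwaysAllow" in modes:
        findings.append("authorization mode includes AlwaysAllow")
    for wanted in ("Node", "RBAC"):
        if wanted not in modes:
            findings.append(f"authorization mode lacks {wanted}")
    return findings
```

Calling `audit_api_server` with the argument list taken from the API server's process or manifest returns an empty list when these particular settings are in place.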

Once an API request has been authenticated and authorized, the resource object can be subject to validation or mutation by admission controllers before it’s persisted to the cluster’s state database. A minimum set of admission controllers is recommended for use, and these shouldn’t be removed from the list unless there is very good reason to do so. Additional security-related admission controllers that are worthy of consideration are:

- DenyEscalatingExec – if it’s necessary to allow your pods to run with enhanced privileges (e.g. using the host’s IPC/PID namespaces), this admission controller will prevent users from executing commands in the pod’s privileged containers.

- PodSecurityPolicy – provides the means for applying various security mechanisms for all created pods. We’ll discuss this further in the next article in this series, but for now it’s important to ensure this admission controller is enabled, otherwise our security policy cannot be applied.

- NodeRestriction – an admission controller that governs the access a kubelet has to cluster resources, which is covered in more detail below.

- ImagePolicyWebhook – allows the images defined for a pod’s containers to be checked for vulnerabilities by an external ‘image validator’, such as the Image Enforcer. Image Enforcer is based on the Open Policy Agent (OPA), and works in conjunction with the open source vulnerability scanner, Clair.

Dynamic admission control, which is a relatively new feature in Kubernetes, aims to provide much greater flexibility over the static plugin admission control mechanism. It’s implemented with admission webhooks and controller-based initializers, and promises much for cluster security, just as soon as community solutions reach a level of sufficient maturity.

Kubelet

The kubelet is an agent that runs on each node in the cluster, and is responsible for all pod-related activities on the node that it runs on, including starting, stopping and restarting pod containers, and reporting on their health, amongst other things. After the API server, the kubelet is the next most important cluster component to consider when it comes to security.

Accessing the Kubelet REST API

The kubelet serves a small REST API on ports 10250 and 10255. Port 10250 is a read/write port, whilst 10255 is a read-only port with a subset of the API endpoints.

Providing unfettered access to port 10250 is dangerous, as it’s possible to execute arbitrary commands inside a pod’s containers, as well as start arbitrary pods. Similarly, both ports provide read access to potentially sensitive information concerning pods and their containers, which might render workloads vulnerable to compromise.

To safeguard against potential compromise, the read-only port should be disabled by setting the kubelet’s configuration, --read-only-port=0. Port 10250, however, needs to be available for metrics collecting and other important functions. Access to this port should be carefully controlled, so let’s discuss the key security configurations.
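One quick way to confirm that the read-only port really is closed is to try a TCP connection to it from another machine on the network. The helper below is a minimal sketch using only the standard library; the function name and the host name in the usage note are placeholders.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

For example, `port_is_open("node-1.example.internal", 10255)` should return `False` for a node whose kubelet has the read-only port disabled, assuming nothing else is listening on that port. A `False` result can also mean that a firewall is dropping the traffic, so this is a sanity check rather than proof of the kubelet's configuration.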

Client Authentication

Unless it’s specifically configured, the kubelet API is open to unauthenticated requests from clients. It’s important, therefore, to configure one of the available authentication methods: X.509 client certificates, or requests with Authorization headers containing bearer tokens.

In the case of X.509 client certificates, the contents of a CA bundle need to be made available to the kubelet, so that it can authenticate the certificates presented by clients during a TLS handshake. This is provided as part of the kubelet configuration (--client-ca-file).

In an ideal world, the only client that needs access to a kubelet’s API is the Kubernetes API server. It needs to access the kubelet’s API endpoints for various functions, such as collecting logs and metrics, executing a command in a container (think kubectl exec), forwarding a port to a container, and so on. In order for it to be authenticated by the kubelet, the API server needs to be configured with client TLS credentials (--kubelet-client-certificate and --kubelet-client-key).

Anonymous Authentication

If you’ve taken the care to configure the API server’s access to the kubelet’s API, you might be forgiven for thinking ‘job done’. But this isn’t the case, as any requests hitting the kubelet’s API that don’t attempt to authenticate with the kubelet, are deemed to be anonymous requests. By default, the kubelet passes anonymous requests on for authorization, rather than rejecting them as unauthenticated.

If it’s essential in your environment to allow for anonymous kubelet API requests, then there is the authorization gate, which gives some flexibility in determining what can and can’t get served by the API. It’s much safer, however, to disallow anonymous API requests altogether, by setting the kubelet’s --anonymous-auth configuration to false. With such a configuration, the API returns a 401 Unauthorized response to unauthenticated clients.

Authorization

When it comes to authorizing requests to the kubelet API, once again it’s possible to fall foul of a default Kubernetes setting. Authorization to the kubelet API operates in one of two modes: AlwaysAllow (default) or Webhook. The AlwaysAllow mode does exactly what you’d expect – it allows all requests that have passed through the authentication gate to succeed. This includes anonymous requests.

Instead of leaving this wide open, the best approach is to offload the authorization decision to the Kubernetes API server, using the kubelet’s --authorization-mode config option with the Webhook value. With this configuration, the kubelet calls the SubjectAccessReview API (which is part of the API server) to determine whether the subject is allowed to make the request, or not.
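The way the anonymous authentication setting and the authorization mode combine can be summarised in a small decision function. This is a simplified model, not kubelet code: the function and parameter names are hypothetical, `webhook_allows` stands in for the answer from the SubjectAccessReview call, and the 403 status for a denied request is stated here as an assumption about the kubelet's usual behaviour.

```python
def kubelet_response(
    authenticated: bool,
    anonymous_auth: bool,
    authorization_mode: str,
    webhook_allows: bool = False,
) -> int:
    """HTTP status a kubelet API request would receive in this model."""
    # Authentication gate: unauthenticated requests are only let through
    # (as anonymous requests) when anonymous auth is enabled.
    if not authenticated and not anonymous_auth:
        return 401
    # Authorization gate.
    if authorization_mode == "AlwaysAllow":
        return 200  # everything that got this far succeeds
    if authorization_mode == "Webhook":
        return 200 if webhook_allows else 403
    raise ValueError(f"unknown authorization mode: {authorization_mode}")
```

With the defaults described above (`anonymous_auth=True`, `authorization_mode="AlwaysAllow"`), an unauthenticated request is served; changing either setting is enough to stop that, and changing both gives defence in depth.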

Restricting the Power of the Kubelet

In older versions of Kubernetes (prior to 1.7), the kubelet had read-write access to all Node and Pod API objects, even if the Node and Pod objects were under the control of another kubelet running on a different node. It also had read access to all objects that were contained within pod specs: the Secret, ConfigMap, PersistentVolume and PersistentVolumeClaim objects. In other words, a kubelet had access to, and control of, numerous resources it had no responsibility for. This is very powerful, and in the event of a cluster node compromise, the damage could quickly escalate beyond the node in question.

Node Authorizer

For this reason, a Node Authorization mode was introduced specifically for the kubelet, with the goal of controlling its access to the Kubernetes API. The Node authorizer limits the kubelet to read operations on those objects that are relevant to the kubelet (e.g. pods, nodes, services), and applies further read-only limits to Secret, ConfigMap, PersistentVolume and PersistentVolumeClaim objects, so that a kubelet can only read those related specifically to the pods bound to the node on which it runs.
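The rule for Secrets can be illustrated with a toy model. This is only a sketch of the idea, not how the Node authorizer is implemented: the `Pod` type and `node_may_read_secret` function are hypothetical, and the same reasoning would apply to ConfigMap, PersistentVolume and PersistentVolumeClaim objects.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Pod:
    name: str
    node_name: str                        # node the pod is bound to
    secrets: FrozenSet[str] = frozenset()  # secrets referenced by the pod spec


def node_may_read_secret(node_name: str, secret: str, pods: List[Pod]) -> bool:
    """Allow the read only if a pod bound to this node references the secret."""
    return any(
        pod.node_name == node_name and secret in pod.secrets for pod in pods
    )
```

In this model a compromised kubelet on one node gains nothing by asking for a secret that is only used by pods scheduled elsewhere.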

NodeRestriction Admission Controller

Limiting a kubelet to read-only access for those objects that are relevant to it is a big step in preventing a compromise of the cluster or its workloads. The kubelet, however, needs write access to its Node and Pod objects as part of its normal function. To allow for this, once a kubelet’s API request has passed through Node Authorization, it’s then subject to the NodeRestriction admission controller, which limits the Node and Pod objects the kubelet can modify to its own. For this to work, the kubelet user must be system:node:<nodeName>, and must belong to the system:nodes group. It’s the nodeName component of the kubelet user, of course, that the NodeRestriction admission controller uses to allow or disallow kubelet API requests that modify Node and Pod objects. It follows that each kubelet should have a unique X.509 certificate for authenticating to the API server, with the Common Name of the subject distinguished name reflecting the user, and the Organization reflecting the group.

Again, these important configurations don’t happen automagically, and the API server needs to be started with Node as one of the comma-delimited list of plugins for the --authorization-mode config option, whilst NodeRestriction needs to be in the list of admission controllers specified by the --enable-admission-plugins option.
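The link between the certificate subject and the admission decision can be sketched as follows. The helper names are hypothetical and the model only covers kubelet callers; it is meant to show why the Common Name and Organization matter, not to reproduce the admission controller's logic.

```python
from typing import List, Optional

NODE_USER_PREFIX = "system:node:"
NODES_GROUP = "system:nodes"


def node_name_from_identity(common_name: str, organizations: List[str]) -> Optional[str]:
    """Extract <nodeName> from a kubelet identity, or None if it isn't one."""
    if NODES_GROUP not in organizations:
        return None
    if not common_name.startswith(NODE_USER_PREFIX):
        return None
    return common_name[len(NODE_USER_PREFIX):] or None


def kubelet_may_modify(
    common_name: str,
    organizations: List[str],
    kind: str,
    object_node: str,
) -> bool:
    """Model of the restriction for kubelet callers only.

    object_node is the Node object's name, or for a Pod, the node it is
    bound to. Identities that are not kubelets are out of scope for this
    sketch and simply yield False.
    """
    node = node_name_from_identity(common_name, organizations)
    if node is None or kind not in ("Node", "Pod"):
        return False
    return node == object_node
```

A certificate with Common Name `system:node:worker-1` and Organization `system:nodes` therefore maps to node `worker-1`, and in this model may modify only the `worker-1` Node object and the pods bound to it.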

Best Practice

It’s important to emphasize that we’ve only covered a sub-set of the security considerations for the cluster layer (albeit important ones), and if you’re thinking that this all sounds very daunting, then fear not, because help is at hand.

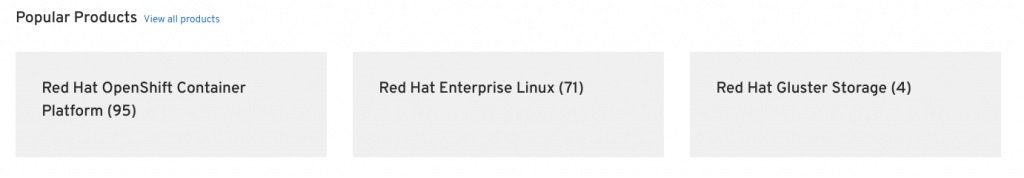

In the same way that benchmark security recommendations have been created for elements of the infrastructure layer, such as Docker, they have also been created for a Kubernetes cluster. The Center for Internet Security (CIS) have compiled a thorough set of configuration settings and filesystem checks for each component of the cluster, published as the CIS Kubernetes Benchmark.

You might also be interested to know that the Kubernetes community has produced an open source tool for auditing a Kubernetes cluster against the benchmark, the Kubernetes Bench for Security. It’s a Golang application, and supports a number of different Kubernetes versions (1.6 onwards), as well as different versions of the benchmark.

If you’re serious about properly securing your cluster, then using the benchmark as a measure of compliance is a must.

Summary

Evidently, taking precautionary steps to secure your cluster with appropriate configuration is crucial to protecting the workloads that run in the cluster. Whilst the Kubernetes community has worked very hard to provide all of the necessary security controls to implement that security, for historical reasons some of the default configuration overlooks what’s considered best-practice. We ignore these shortcomings at our peril, and must take the responsibility for closing the gaps whenever we establish a cluster, or when we upgrade to newer versions that provide new functionality.

Some of what we’ve discussed here paves the way for the next layer in the stack, where we make use of the security mechanisms we’ve configured to define and apply security controls that protect the workloads running on the cluster. The next article is called Applying Best Practice Security Controls to a Kubernetes Cluster.

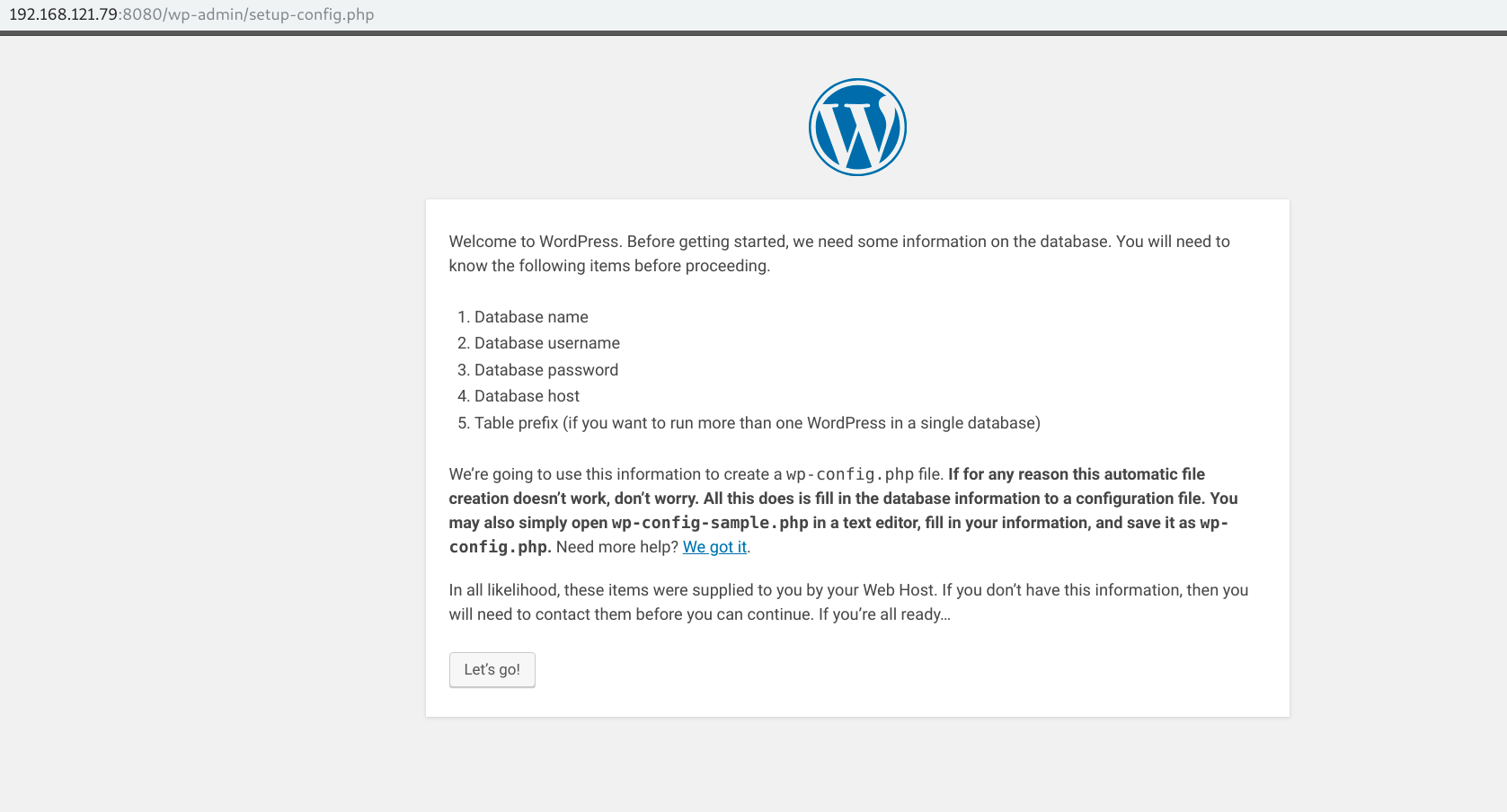
